
8.0 Overview

One of the most important applications of natural language processing is in the extraction of information from unstructured text. Starting in the 1990s, the amount of accessible data on the World Wide Web began to dwarf the amount of data found in structured databases. Each day people email, text, make phone calls, post on Instagram and Facebook, visit websites, read news articles, magazines, and blogs, and make online purchases. Doctors, lawyers, scientists, and other professionals work hard to keep up with journals and technical manuals. Amazon receives over 75 million visits a day.

All these activities generate data. Most of it is unstructured. Gartner Group estimates that 80% of corporate data is unstructured (Rizkallah, 2017). We all search the web on a regular basis to retrieve information. You have probably noticed how much “smarter” web search has become. For many years, a web search would return just a list of articles that matched the keywords in the query. As an example, an infobox from a Google search for Albert Einstein is shown below:

Today, web searches that request information about an entity return with detailed infoboxes like the one above produced by a Google search for Albert Einstein. Consider the search query

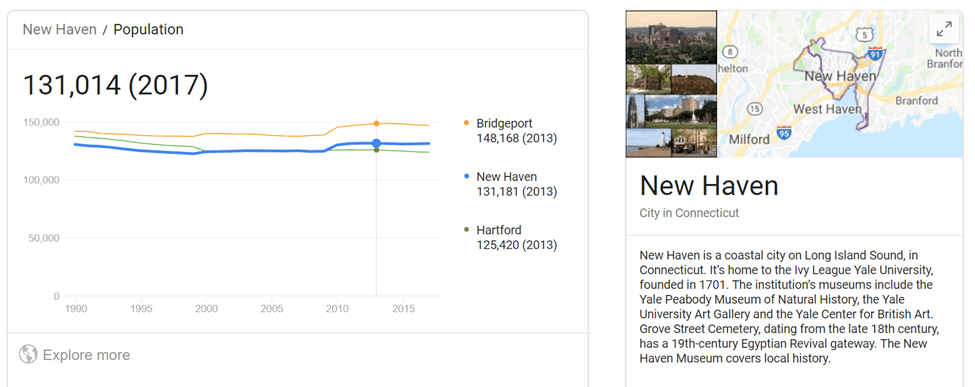

How many people live in New Haven CT

Google responds with this detailed infobox:

The search returns the number of people who live in New Haven highlighted in a graph as illustrated above.

Many different types of organizations need to extract information from unstructured text. Hospitals and medical researchers benefit when they can pull specific data such as diagnosis, outcome, and drugs administered from the unstructured text of patient records into structured databases. Corporations need tools to automatically extract information from social media posts, news stories, emails, and many other forms of unstructured text. Some examples:

- Crawl the web to extract facts to populate a knowledge graph

- Read social media posts and extract information regarding malware

- Read scientific articles and/or drug information statements to extract adverse drug interactions

- Read news articles to extract details of terrorist events

- Read social media posts to determine user reaction to a new product release

8.1 Types of IE

AI researchers focus primarily on four types of information extraction (IE):

- Entity extraction

- Template extraction

- Knowledge base population

- Sentiment analysis

8.1.1 Entity extraction

Entity extraction is identifying the people, places, organizations, and other things referenced in a document.

Named entity recognition (NER) is the extraction of proper names of entities from text. The primary challenge for NER is that many different strings can refer to the same entity including:

- Different names: “Former President Obama” vs. “Barack Obama”

- Nicknames: “William Clinton” vs. “Bill Clinton”

- Abbreviations: “George Walker Bush” vs. “George W. Bush”

- Word order: “Yale Department of Psychology” vs. “Psychology Department at Yale”

- Other name variants, such as aliases and legal name changes. There can also be acronyms, translations, transliterations, and typos.

One might think that NER can be accomplished just by comparing the words in a news article with a gazetteer (a dictionary of entity names). Unfortunately, the words used to reference a named entity can vary a great deal from the dictionary to the text due to word ambiguity, morphology, and the use of pronouns and other anaphoric constructions.

8.1.2 Entity linking

NER identifies entity strings and entity types (e.g. person or place). For example, suppose two facts are mentioned in the same or different documents:

Barack Obama was born in Hawaii

Former President Obama is married to Michelle Obama

NER would extract the entity “Barack Obama” from the first sentence and the entity “former President Obama” from the second sentence. However, NER by itself would not recognize that the two entities refer to the same person.

One way to recognize that both mentions refer to the same person is to link both references to the Barack Obama Wikipedia article or some other canonical standard. Wikipedia is particularly good for this as each article typically covers one entity. This linking process is termed entity linking (EL). The primary challenges involved in EL are:

- Phrasing differences, i.e. the different ways the entity can be phrased in the sentence vs. the knowledge base.

- Ambiguities, i.e. multiple entities with the same or similar names. As an example (Sil et al, 2018), consider two abbreviated knowledge base entries:

- (1) Alex Smith is a quarterback for the Kansas City Chiefs of the National Football League.

- (2) Alex Smith was a Scottish-American professional golfer who played in the late 19th and early 20th centuries.

Consider the input sentence

Alex Smith threw 3 touchdowns last week.

Here the correct context is (1) and not (2). Similarly, for the input sentence

Alex Smith had 8 birdies in one round

the correct context is (2) and not (1). An EL system doesn’t need to understand these sentences in order to determine the correct context. To make this determination, an AI system merely needs to scan many documents and record which words frequently co-occur with which other words. By doing so, the system will find that words like “quarterback” and “touchdowns” are frequently present in the same news stories. Similarly, “golfer” and “birdies” are words that frequently co-occur.
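The co-occurrence idea can be sketched in a few lines of Python. This is only an illustration under stated assumptions: the four-sentence corpus, the stopword list, and the function names `count_cooccurrences` and `link` are all made up for the example, and a real EL system would count co-occurrences over a very large document collection.

```python
import re
from collections import Counter
from itertools import combinations

# Hypothetical stopword list, kept tiny for the example.
STOPWORDS = {"a", "the", "in", "of", "for", "is", "was", "who",
             "and", "with", "had", "one", "last"}

def content_words(text):
    """Lowercase alphabetic tokens with stopwords removed."""
    return [w for w in re.findall(r"[a-z]+", text.lower()) if w not in STOPWORDS]

def count_cooccurrences(documents):
    """Count how many documents each unordered pair of words shares."""
    counts = Counter()
    for document in documents:
        for pair in combinations(sorted(set(content_words(document))), 2):
            counts[pair] += 1
    return counts

def link(sentence, knowledge_base, counts):
    """Pick the entry whose words co-occur most often with the sentence's words."""
    def score(entry_text):
        return sum(counts[tuple(sorted((s, e)))]
                   for s in set(content_words(sentence))
                   for e in set(content_words(entry_text))
                   if s != e)
    return max(knowledge_base, key=lambda name: score(knowledge_base[name]))

# Hypothetical background corpus standing in for "many documents".
CORPUS = [
    "The quarterback threw two touchdowns in the football game",
    "A quarterback with three touchdowns led the league",
    "The golfer made five birdies in the final round",
    "Birdies helped the golfer win the golf tournament",
]

KNOWLEDGE_BASE = {
    "(1)": "Alex Smith is a quarterback for the Kansas City Chiefs "
           "of the National Football League.",
    "(2)": "Alex Smith was a Scottish-American professional golfer who "
           "played in the late 19th and early 20th centuries.",
}

COUNTS = count_cooccurrences(CORPUS)
print(link("Alex Smith threw 3 touchdowns last week.", KNOWLEDGE_BASE, COUNTS))
print(link("Alex Smith had 8 birdies in one round", KNOWLEDGE_BASE, COUNTS))
```

Nothing in the sketch models meaning; the choice falls out of word statistics alone, which is the point of the paragraph above.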

One of the major challenges for entity linking is coreference resolution. There are two types of named entity coreference problems that are commonly found in documents:

Pronominal Resolution: Documents often contain pronouns such as “he”, “she”, or “it” that refer to a prior named entity reference in a document.

Nominal Entity Resolution: A nominal entity reference is an indirect reference to a named entity. For example, a document might contain both “New York City” and “the largest city in the US”.

8.1.3 Template extraction

In most information extraction applications, simply extracting entities doesn’t provide standalone value. More often, identifying entities is a pipeline step towards the broader goal of extracting facts from unstructured text and creating a structured set of data. Template extraction is the process of filling a predefined template with data extracted from unstructured documents; the template defines the information to be extracted. There are four types of templates that have received the most attention:

- Entity templates: Extract specific facts about a specific type of entity, for example reading newspaper ads to find apartment rentals and extracting the neighborhood, number of bedrooms, and rental cost.

- Relationships: Extract simple facts like

produced_by (Star Wars, George Lucas)

Relations are the type of fact found in knowledge graphs.

- Frame templates: Extract data into academic templates such as FrameNet, PropBank, and OntoNotes.

- Event templates: Extract specific attributes of an event from news text.

8.1.3.1 Temporal extraction

Temporal extraction involves extracting spans of words that identify a time period or duration and converting them to a canonical form (temporal normalization). It is often required for template extraction. Natural language references to points in time and time intervals can take a wide variety of surface forms. For example, these two utterances

the first five days of March 2018

March 1-5 2018

should produce the same representation. Time references can also be relative. For example, “yesterday” might refer to March 1 today but will refer to March 2 tomorrow. A natural language request to a virtual assistant might take on the form

Email me the first day of every month a report showing the prior month’s sales

To process this correctly, the assistant would need to understand that “the first day of every month” is a recurring series of points in time and that “the prior month’s sales” can’t just be resolved to a single time interval (e.g. June 1-30, 2018) because on August 1 a report for the July 1-31 time period will be expected.
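A minimal sketch of temporal normalization for the examples above follows. It assumes a canonical form of a `(start_date, end_date)` pair and handles only the three surface forms discussed here; the function names `normalize` and `prior_month` are hypothetical, and production tools cover far more patterns.

```python
import re
from datetime import date, timedelta

MONTHS = {name: number for number, name in enumerate(
    ["january", "february", "march", "april", "may", "june", "july",
     "august", "september", "october", "november", "december"], start=1)}
NUMBER_WORDS = {"one": 1, "two": 2, "three": 3, "four": 4, "five": 5,
                "six": 6, "seven": 7, "eight": 8, "nine": 9, "ten": 10}

def normalize(expression, today):
    """Map a time expression to a (start_date, end_date) interval."""
    text = expression.lower().strip()

    # Form 1: "March 1-5 2018"
    m = re.fullmatch(r"(\w+) (\d{1,2})-(\d{1,2}),? (\d{4})", text)
    if m and m.group(1) in MONTHS:
        month, year = MONTHS[m.group(1)], int(m.group(4))
        return date(year, month, int(m.group(2))), date(year, month, int(m.group(3)))

    # Form 2: "the first five days of March 2018"
    m = re.fullmatch(r"the first (\w+) days of (\w+) (\d{4})", text)
    if m and m.group(1) in NUMBER_WORDS and m.group(2) in MONTHS:
        month, year = MONTHS[m.group(2)], int(m.group(3))
        return date(year, month, 1), date(year, month, NUMBER_WORDS[m.group(1)])

    # Form 3: a relative reference, resolved against the current date.
    if text == "yesterday":
        day = today - timedelta(days=1)
        return day, day

    raise ValueError(f"unsupported time expression: {expression!r}")

def prior_month(today):
    """Interval covering the calendar month before the one containing `today`."""
    last_day = today.replace(day=1) - timedelta(days=1)
    return last_day.replace(day=1), last_day

print(normalize("the first five days of March 2018", date(2018, 6, 1)))
print(normalize("March 1-5 2018", date(2018, 6, 1)))
print(prior_month(date(2018, 8, 1)))
```

Because `prior_month` takes the current date as an argument, the same request yields June 1-30 when evaluated on July 1 and July 1-31 when evaluated on August 1, which is why a recurring request has to be stored unresolved rather than as a fixed interval.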

8.1.3.2 Numeric extraction

Similarly, extraction and normalization of quantities, currency, and other numeric expressions are often required.
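As a rough illustration, numeric normalization might look like the sketch below. The regular expression, the `SCALES` table, the unit labels, and the function name `extract_quantities` are assumptions made for this example; it covers only dollar amounts, percentages, and a few scale words.

```python
import re
from decimal import Decimal

SCALES = {"thousand": 10**3, "million": 10**6, "billion": 10**9}

QUANTITY = re.compile(
    r"(?P<currency>\$)?"                                  # optional dollar sign
    r"(?P<number>\d[\d,]*(?:\.\d+)?)"                     # digits, commas, decimals
    r"(?:\s*(?P<scale>thousand|million|billion)\b)?"      # optional scale word
    r"(?:\s*(?P<percent>%))?",                            # optional percent sign
    re.IGNORECASE,
)

def extract_quantities(text):
    """Return (value, unit) pairs, where unit is 'USD', 'percent', or None."""
    results = []
    for match in QUANTITY.finditer(text):
        value = Decimal(match.group("number").replace(",", ""))
        if match.group("scale"):
            value *= SCALES[match.group("scale").lower()]
        if match.group("currency"):
            unit = "USD"
        elif match.group("percent"):
            unit = "percent"
        else:
            unit = None
        results.append((value, unit))
    return results

print(extract_quantities(
    "Revenue rose 15% to $2.5 million, while rent stayed at $2,675."))
```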

8.1.4 Sentiment analysis

Sentiment analysis is used to characterize tweets, blogs, recommendations, articles, and other texts according to the sentiment they express. Classifications include general sentiment (positive vs. negative, also known as valence) and emotion classification (e.g. anger, anticipation, disgust, fear, joy, sadness, surprise, trust).

8.2 IE methods

For many years, rule-based systems were the primary method of information extraction. Then, supervised learning methods gained prominence but brought with them increased computational requirements and the need for labeled data. More recently, large language models have reduced or eliminated the need for labeled data but have even higher computational requirements. As a result, all three types of methods are still in use.

There have been three categories of techniques used for relationship and event extraction:

- Rule-based systems

- Supervised learning systems

- Large language models

8.2.1 Rule-based systems

IBM researchers (Chiticariu et al, 2013) reviewed 54 commercially available natural language processing tools in 2013 and found that 83% of large vendor tools and 67% of all tools had some degree of handcrafting of rules. While supervised learning and, more recently, fine-tuned language models have overtaken rule-based systems and are fast becoming the gold standard for IE, many IE systems still use handcrafted rules because they are fast to implement and inexpensive.

Let’s discuss how rules can be used to identify the spans of text that fill slots in an identified frame. For example, suppose you want to build a database of rental properties from ads in the newspaper or online such as:

Capitol Hill – 1 br twnhme. fplc D/W W/D. Undrgrnd pkg incl $2675. 3 BR, upper r of turn of ctry HOME. incl gar, grt N. Hill loc $2995. (206) 999-9999

[ Example from Soderland, 1999 ]

The goal here might be to extract information such as

Rental: Neighborhood: Capitol Hill Bedrooms: 1 Price: $2675

Rental: Neighborhood: Capitol Hill Bedrooms: 3 Price: $2995

One approach is to hand-code a set of regular expressions and/or textual patterns such as

Pattern: * ( Digit ) ‘ BR’ * ‘$’ ( Number )

Output: Rental {Bedrooms $1} {Price $2}
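A rough Python equivalent of this pattern, written as an ordinary regular expression, is sketched below. It assumes the bedroom count is a number followed by “br” (in any case), that the price is the first dollar amount after it, and that the neighborhood is whatever precedes the first dash in the ad. The names `RENTAL` and `extract_rentals` are made up for the example.

```python
import re

AD = ("Capitol Hill \u2013 1 br twnhme. fplc D/W W/D. Undrgrnd pkg incl $2675. "
      "3 BR, upper r of turn of ctry HOME. incl gar, grt N. Hill loc $2995. "
      "(206) 999-9999")

# A number, then "br", then anything up to the next dollar amount.
RENTAL = re.compile(r"(\d+)\s*br\b.*?\$(\d+)", re.IGNORECASE)

def extract_rentals(ad):
    """Fill one Rental template per bedroom/price pair found in the ad."""
    # Assumption: the neighborhood is the text before the first dash.
    neighborhood = re.split(r"\s+[\u2013-]\s+", ad, maxsplit=1)[0].strip()
    return [
        {"neighborhood": neighborhood, "bedrooms": int(bedrooms), "price": int(price)}
        for bedrooms, price in RENTAL.findall(ad)
    ]

for rental in extract_rentals(AD):
    print(rental)
```

The two capture groups play the role of `$1` and `$2` in the output template.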

This approach can work well, especially for tightly constrained domains such as apartment rentals. However, each new domain requires a new set of handcrafted patterns. Why spend the effort to use handcrafted rules at all if there are systems that can learn the rules? All the learning technologies have limitations. Commercial tools such as IBM’s SystemT (Chiticariu et al, 2010) offer a way to code handcrafted rules by providing a consistent language and debugging tools that make the process easier and more transparent.

For entity linking, the first step is to identify a set of candidate knowledge base (e.g. Wikipedia) entries. For example, a Carnegie Mellon team (Ma et al, 2017) used all Wikipedia articles in which one of the entity names of the KB entry is a substring of the text of the named entity mention. Then, the candidate Wikipedia entries for all named entities are compared to a “semantic interpretation graph” of the entire Wikipedia in which each link between two Wikipedia entries carries a Google PageRank score (i.e. how relevant they are to one another). They use this graph to identify the best matching candidate Wikipedia entry.
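The candidate generation step can be illustrated with a short sketch. The tiny `KB_NAMES` table and the function `candidates` are hypothetical and only mirror the substring test described above; the graph-based ranking step is not shown.

```python
# Hypothetical knowledge base: entry identifier -> names the entry is known by.
KB_NAMES = {
    "Barack_Obama": ["Barack Obama", "Obama"],
    "Michelle_Obama": ["Michelle Obama", "Obama"],
    "Hawaii": ["Hawaii"],
}

def candidates(mention, kb_names=KB_NAMES):
    """Entries for which at least one known name is a substring of the mention."""
    lowered = mention.lower()
    return sorted(
        entry for entry, names in kb_names.items()
        if any(name.lower() in lowered for name in names)
    )

print(candidates("Former President Obama"))
print(candidates("Hawaii"))
```

The substring test is deliberately generous: “Former President Obama” yields both Obama entries, and it is the later ranking step that has to choose between them.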

An IBM team (Sil et al, 2018) attacked a somewhat different goal. Their goal was to train an entity linking system in English in a way that would transfer to other languages. They used multilingual word embeddings for both English and the target language and used a variety of similarity scoring systems to identify the best match between an entity mention in a document and a Wikipedia page.

Hand-crafted patterns can also be used for NER.

Coreference resolution algorithms can include rules based on syntactic features, word embedding similarity, and many other types of features.

8.2.2 Supervised learning

Starting around 2010, supervised learning overtook rule-based approaches as the dominant paradigm for IE, and conditional random fields became the most widely used supervised model. Typical feature inputs to supervised learning systems (Ratinov and Roth, 2009) include:

- Entity type predictions for the previous two tokens

- Current word

- Current word type (all-capitalized, is-capitalized, all-digits, alphanumeric, etc.)

- Prefixes and suffixes of the current word

- The current window (i.e. the tokens immediately preceding and following the current word)

- The capitalization pattern in the current window

- The conjunction of the tokens in the current window and entity type prediction for the prior token

- POS tags

- Shallow parsing (i.e. identification of noun phrases, verb phrases, adjective phrases, and prepositional phrases)

- Information from gazetteers
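To make the feature list concrete, here is a sketch of a feature extractor for a single token. It is an illustration, not the feature set of any particular system: the window size of one token on each side, the affix length of three, and the names `word_type` and `token_features` are assumptions, and POS tags and shallow parses are omitted because they would require an external tagger.

```python
def word_type(token):
    """Coarse orthographic class of a token."""
    if token.isdigit():
        return "all-digits"
    if token.isalpha() and token.isupper():
        return "all-capitalized"
    if token[0].isupper():
        return "is-capitalized"
    if token.isalnum() and any(ch.isdigit() for ch in token):
        return "alphanumeric"
    return "other"

def token_features(tokens, i, previous_labels, gazetteer=frozenset(), affix_length=3):
    """Features for tokens[i]; previous_labels holds predictions for tokens[:i]."""
    word = tokens[i]
    padded = ["<PAD>"] + list(tokens) + ["<PAD>"]
    window = padded[i:i + 3]  # token before, current token, token after
    prev_two = (["<PAD>", "<PAD>"] + list(previous_labels))[-2:]
    lengths = range(1, min(affix_length, len(word)) + 1)
    return {
        "prev_two_labels": tuple(prev_two),
        "word": word,
        "word_type": word_type(word),
        "prefixes": [word[:n] for n in lengths],
        "suffixes": [word[-n:] for n in lengths],
        "window": window,
        "capitalization": "".join("X" if t[0].isupper() else "x" for t in window),
        "window_and_prev_label": "|".join(window) + "+" + prev_two[-1],
        "in_gazetteer": word in gazetteer,
    }

TOKENS = ["Barack", "Obama", "was", "born", "in", "Hawaii"]
print(token_features(TOKENS, 1, ["B-PER"], gazetteer={"Obama", "Hawaii"}))
```

A sequence model such as a conditional random field would consume one such feature dictionary per token.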

Most of these systems treat NER as a large classification problem in which each unique entity is its own class and use networks that require large datasets of examples. For example, IBM researchers (Sil et al, 2017) developed a supervised learning entity matching algorithm to determine the best prior named entity reference for each nominal entity mention using both logistic regression and neural network models and input features that included words in context, capitalization flags, entity dictionaries, and many others.

When a training corpus is available, multiple supervised learning techniques can be used to determine the sentiment of a social media entry, review, or news article. For example, in one conference competition in 2018 (Mohammad et al, 2018), the task was to analyze affect in tweets. A labeled corpus divided into training and test datasets was available and most teams approached the problem as a supervised learning problem. Neural networks including convolutional networks and LSTMs (both mono- and bidirectional) were well-represented approaches, as were SVMs and linear and logistic regression. Word embeddings, affect lexicon features, and word and character n-grams were the most popular sources of features, but some teams also used syntactic parses and other features.
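As a toy illustration of the supervised approach, the sketch below trains a logistic regression classifier on bag-of-words features using plain Python. The six training sentences, the hyperparameters, and the function names are invented for the example; competition systems used far larger corpora and richer features.

```python
import math

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def predict_proba(text, weights, bias):
    """Probability that the text is positive under the current weights."""
    return sigmoid(bias + sum(weights.get(w, 0.0) for w in text.lower().split()))

def train(examples, epochs=200, learning_rate=0.5):
    """Stochastic gradient ascent on the log-likelihood; one weight per word."""
    weights, bias = {}, 0.0
    for _ in range(epochs):
        for text, label in examples:
            error = label - predict_proba(text, weights, bias)
            for word in text.lower().split():
                weights[word] = weights.get(word, 0.0) + learning_rate * error
            bias += learning_rate * error
    return weights, bias

# Hypothetical labeled corpus: 1 = positive, 0 = negative.
TRAINING = [
    ("i love this phone", 1),
    ("great battery and great screen", 1),
    ("what a wonderful product", 1),
    ("i hate this phone", 0),
    ("terrible battery and awful screen", 0),
    ("what a horrible product", 0),
]

WEIGHTS, BIAS = train(TRAINING)
print(predict_proba("i love the wonderful screen", WEIGHTS, BIAS))
print(predict_proba("i hate the awful screen", WEIGHTS, BIAS))
```

Note that a model of this kind assigns a score to each word independently of context, so its judgments are only as good as the word-level associations present in the training data.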

Supervised learning models are far from perfect. For example, Google’s Jigsaw division created a tool named Perspective to identify toxic social media comments. They created a large training dataset of comments, labeled them as toxic or not, and used a supervised learning regression algorithm to train the system. The first broad test of the system analyzed 92 million comments by more than two million authors and found that commenters in Vermont issued a higher percentage of toxic comments than commenters in any other state.

However, when Vermont librarian Jessamyn West dug into the details by typing various comments of her own into Perspective, she found the reason. When she typed, “People will die if they kill Obamacare,” she received a 95% toxicity score (higher scores indicate higher toxicity). When she typed, “I am a gay man,” she received a 57% toxicity score. The supervised learning algorithm had learned to key in on words like “kill” and “gay.” The algorithm had probably found comments like “I’d like to kill you” and “You are gay” that raters judged as having malignant intent. Not only did the resulting system perform poorly, but it apparently learned some racial and gender biases that were inherent in the data: while “I am a man” received only a 20% score, “I am a woman” received a 40% score and “I am a black woman” received an 85% score. As a result, Google recommended that the tool only be used as a way of sorting through massive numbers of comments to highlight possible toxic comments that should then be reviewed by people to determine if they are actually toxic.

8.2.2.1 Semi-supervised learning approaches

One difficulty with supervised learning approaches is that a fully-annotated corpus is required. The creation of annotated corpora for new areas can be expensive. As a result, researchers have developed semi-supervised learning methods in which only a small annotated corpus is required.

For example, suppose we wanted to develop an information extraction system to read news articles and extract the names of all the cities and towns in the world. The supervised learning approach would be to annotate a large number of news articles in a way that identifies the patterns of words that indicate cities. The custom, rule-based approach would be to read numerous news articles and identify all the patterns that indicate cities and towns such as

…located in <city>

…mayor of <city>

The semi-supervised approach is to seed the system with a small number of examples of syntactic patterns, phrases, and/or regular expressions that indicate cities. Then, every time it sees one of these patterns, it can hypothesize that the noun phrase following it is a city name. After reading many articles, it learns many city names. Then it can re-read those articles, recognize the just-learned city names, and learn new word patterns like those above. Using these new patterns, it can learn more city names and use those to learn more patterns.

One difficulty with semi-supervised methods is semantic drift. For example, while “located in” will often signal a city name, it’s not always the case, e.g.

…located in flood zones

Now, if we take “flood zones” as the name of a city and then learn other patterns involving “flood zones”, our list of cities and towns can contain a lot of bad data.
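The bootstrapping loop and the drift problem can be sketched as follows. This is a simplified illustration: patterns are restricted to the two words preceding a candidate, city names are single tokens, and the `require_capitalized` check is an assumed validation step added for the example, not part of any published method.

```python
def bootstrap(sentences, seed_patterns, rounds=3, require_capitalized=True):
    """Alternate between finding city names from patterns and patterns from names."""
    patterns = set(seed_patterns)
    cities = set()
    tokenized = [sentence.split() for sentence in sentences]
    for _ in range(rounds):
        # Step 1: a token that follows a known pattern is hypothesized to be a city.
        for tokens in tokenized:
            for i in range(2, len(tokens)):
                context = " ".join(tokens[i - 2:i]).lower()
                candidate = tokens[i]
                if context not in patterns:
                    continue
                if require_capitalized and not candidate[0].isupper():
                    continue  # simple validation that limits semantic drift
                cities.add(candidate)
        # Step 2: the two words before a known city become a new pattern.
        for tokens in tokenized:
            for i in range(2, len(tokens)):
                if tokens[i] in cities:
                    patterns.add(" ".join(tokens[i - 2:i]).lower())
    return cities, patterns

# Hypothetical mini-corpus.
SENTENCES = [
    "The museum is located in Boston",
    "She was elected mayor of Boston",
    "He became mayor of Denver",
    "Flights to Denver were delayed",
    "The houses are located in flood zones",
]

print(bootstrap(SENTENCES, {"located in"}))
print(bootstrap(SENTENCES, {"located in"}, require_capitalized=False))
```

With the check enabled, the seed “located in” finds Boston, Boston yields the pattern “mayor of”, and that pattern finds Denver. With the check disabled, “flood” is accepted as a city, which is exactly the kind of bad data described above.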

Another problem is that the learned patterns lead to problems when applied blindly. For example, suppose we use a simplified version of some of the Hearst patterns (Hearst, 1992) as a starting point to identify ISA relationships:

NP such as NP

such NP as NP

In these regular expression patterns, NP stands for “Noun Phrase”. Now, consider these sentences (Wu et al, 2012):

…household pets other than animals such as reptiles…

…household pets other than dogs such as cats…

In the first example, people have no problem interpreting “such as” to mean that reptiles are an instance of the animal category. An IE system using this pattern would correctly infer that reptiles are animals. In the second example, however, people don’t interpret “such as” to mean that cats are instances of dogs. An IE system using this pattern would at least initially infer the wrong conclusion. When using semi-supervised learning, it is important to validate inferences.
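A naive implementation of the “NP such as NP” pattern shows the problem directly. In this sketch a noun phrase is approximated by a single word, which is a simplifying assumption; the name `isa_pairs` is made up for the example.

```python
import re

# Simplification: each NP is approximated by one word.
SUCH_AS = re.compile(r"(\w+) such as (\w+)")

def isa_pairs(text):
    """Return (instance, category) pairs proposed by the 'NP such as NP' pattern."""
    return [(instance, category) for category, instance in SUCH_AS.findall(text)]

print(isa_pairs("household pets other than animals such as reptiles"))
print(isa_pairs("household pets other than dogs such as cats"))
```

The pattern proposes that reptiles are animals, which is correct, and that cats are dogs, which is not. Both sentences look identical to the pattern, so the error can only be caught by a separate validation step.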

8.2.2.2 Distant supervised learning

A classic distant supervision technique was created by Stanford researchers (Mintz et al, 2009). They started with 1.8 million instances of 102 relations connecting 940,000 entities in Freebase. They used 1.87 million Wikipedia articles as unlabeled text and analyzed the sentences in each article. If two entities with a Freebase relation appeared in a sentence, it was assumed that the sentence might be an instance of the Freebase relation. They trained a logistic regression classifier for each Freebase relation using features based on syntactic rules and lexical features including:

- The sequence of words between the two entities

- The part-of-speech tags of these words

- A flag indicating which entity came first in the sentence

- A window of k words to the left of Entity 1 and their part-of-speech tags

- A window of k words to the right of Entity 2 and their part-of-speech tags

Using this classifier, they were able to create 10,000 new instances of the 102 relations with a precision of 67.6% (i.e. 67.6% were actually instances of the relation).
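The labeling heuristic and the lexical features can be illustrated with a small sketch. The one-fact knowledge base, the three sentences, and the function name `distant_examples` are hypothetical; part-of-speech tags are left out because they would require a tagger, and the left and right windows are taken around whichever entity appears first and second in the sentence.

```python
def distant_examples(sentences, kb, k=2):
    """Label every sentence containing both entities of a KB fact with its relation."""
    examples = []
    for sentence in sentences:
        for (entity1, entity2), relation in kb.items():
            start1, start2 = sentence.find(entity1), sentence.find(entity2)
            if start1 < 0 or start2 < 0:
                continue  # both entities must appear in the sentence
            spans = sorted([(start1, start1 + len(entity1)),
                            (start2, start2 + len(entity2))])
            (first_start, first_end), (second_start, second_end) = spans
            examples.append({
                "relation": relation,  # assumed label; it may be wrong
                "between": sentence[first_end:second_start].split(),
                "entity1_first": start1 < start2,
                "left_window": sentence[:first_start].split()[-k:],
                "right_window": sentence[second_end:].split()[:k],
            })
    return examples

# Hypothetical knowledge base with a single fact.
KB = {("Star Wars", "George Lucas"): "produced_by"}

SENTENCES = [
    "In 1977 Star Wars was produced by George Lucas for Fox",
    "George Lucas thanked the cast of Star Wars",
    "George Lucas was born in Modesto",
]

for example in distant_examples(SENTENCES, KB):
    print(example)
```

The second sentence is labeled `produced_by` even though it says nothing about producing the film. Noise of this kind is inherent in the heuristic and is one reason the reported precision is well below 100%.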

8.2.3 Large language models

Fine-tuned language models have become almost universally preferred over supervised learning with a large set of labeled data because far fewer training examples are required. Large language models (LLMs) can also often be used without fine-tuning by providing examples in the prompt. It is possible to use an LLM to scan a large set of documents, pull out specific pieces of information, and use that information to create a structured database that supports reporting. A big disadvantage of LLMs is hallucination: the resulting structured database may contain data that the model invented rather than extracted. As a result, other methods of information extraction remain relevant.
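One way to picture this workflow is the sketch below, which builds a prompt containing worked examples and then checks whether each extracted value actually appears in the source text. No real LLM is called: `FAKE_REPLY` is a hard-coded stand-in for a model response, and `build_prompt` and `ungrounded_fields` are hypothetical helper names. The check is crude and only catches values that are absent from the text verbatim.

```python
import json
import re

def build_prompt(examples, document):
    """Assemble an instruction, worked examples, and the new document."""
    parts = ["Extract the rental listing as JSON with keys "
             "neighborhood, bedrooms, price."]
    for text, record in examples:
        parts.append(f"Text: {text}\nJSON: {json.dumps(record)}")
    parts.append(f"Text: {document}\nJSON:")
    return "\n\n".join(parts)

def ungrounded_fields(record, document):
    """Names of fields whose value does not occur as a whole token in the document."""
    missing = []
    for field, value in record.items():
        pattern = r"(?<!\w)" + re.escape(str(value)) + r"(?!\w)"
        if not re.search(pattern, document, re.IGNORECASE):
            missing.append(field)
    return missing

EXAMPLES = [(
    "Ballard \u2013 2 br apt. W/D $1800.",
    {"neighborhood": "Ballard", "bedrooms": 2, "price": 1800},
)]
DOCUMENT = "Capitol Hill \u2013 1 br twnhme. fplc D/W W/D. Undrgrnd pkg incl $2675."

prompt = build_prompt(EXAMPLES, DOCUMENT)

# Stand-in for a model reply; the price here is deliberately wrong.
FAKE_REPLY = '{"neighborhood": "Capitol Hill", "bedrooms": 1, "price": 2700}'
record = json.loads(FAKE_REPLY)
print(ungrounded_fields(record, DOCUMENT))
```

Records with ungrounded fields can be routed to a human reviewer or to one of the other extraction methods instead of being written straight into the database.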

8.2.4 Off-the-shelf tools

There are also several open source tools that do a good job of NER such as the NER component of Stanford’s CoreNLP system (Manning et al, 2014), spaCy, and Flair. The Stanford NER tool was trained using a conditional random field classifier on labeled data from academic competitions. This tool can be used out of the box to identify entities for texts in English. It also offers out of the box Arabic, French, German, Spanish, and Chinese models. Pre-trained entities recognized include:

- People

- Locations

- Organizations

- Numbers (currency, numeric, ordinal, percent)

- Time (dates, times, durations, sets)

The system also contains regular expression patterns that can be used to extract email addresses, URLs, cities, states, countries, nationalities, religions, job titles, ideologies, criminal charges, and causes of death. You can also train your own models with CoreNLP for specific application domains and/or languages if you have annotated corpora. CoreNLP also contains the Stanford SUTime module (Chang and Manning, 2012), which is widely used to convert natural language time references to a canonical representation. It uses handcrafted rules to recognize time and date range references and translate them to a normalized (canonical) format. Last, CoreNLP contains functions for the extraction and normalization of quantities, currency, and other numeric expressions.

HeidelTime is another widely used free temporal normalization tool. Natural language processing systems can then use these normalized representations to perform a limited form of reasoning. For example, systems that answer questions based on reading news articles can use temporal reasoning to compare normalized forms and determine whether two events are contemporaneous. The various forms of temporal reasoning are detailed in Fisher (2008).

spaCy has more pre-trained entity types than CoreNLP, including:

- PERSON People, including fictional

- NORP Nationalities or religious or political groups

- FAC Buildings, airports, highways, bridges, etc.

- ORG Companies, agencies, institutions, etc.

- GPE Countries, cities, states

- LOC Non-GPE locations, mountain ranges, bodies of water

- PRODUCT Vehicles, weapons, foods, etc. (not services)

- EVENT Named hurricanes, battles, wars, sports events, etc.

- WORK_OF_ART Titles of books, songs, etc.

- LAW Named documents made into laws

- LANGUAGE Any named language

- DATE Absolute or relative dates or periods

- TIME Times smaller than a day

- PERCENT Percentage (including “%”)

- MONEY Monetary values, including unit

- QUANTITY Measurements, as of weight or distance

- ORDINAL Ordinals (e.g., “first”, “1st”)

- CARDINAL Numerals that do not fall under another type

Off-the-shelf NER systems are generally quite effective at identifying entities. However, all NER systems can have difficulty with named entities that were not seen as part of the system’s training set. These novel entities can be found in web text, domain-specific text (e.g. neurology texts), and on social media.

That said, if a domain-specific training set is available, a good NER system can easily be built using a tool like CoreNLP. Also, while training sets are available for major languages, they are rarely available for low-resource languages.

© aiperspectives.com, 2020. Unauthorized use and/or duplication of this material without express and written permission from this site’s owner is strictly prohibited. Excerpts and links may be used, provided that full and clear credit is given with appropriate and specific direction to the original content.