1.0 Introduction

AI has blasted its way into the public consciousness and our everyday lives. It is powering advances in medicine, weather prediction, factory automation, and self-driving cars. Even golf club manufacturers report that AI is now designing their clubs.

Every day, people interact with AI. Google Translate helps us understand foreign-language webpages and talk to Uber drivers in foreign countries. Vendors have built speech recognition into many apps. We use personal assistants like Siri and Alexa daily to help us complete simple tasks. Face recognition apps automatically label our photos. And AI systems are beating expert game players at complex games like Go and Texas Hold ’Em. Factory robots are moving beyond repetitive motions and starting to stock shelves.

When OpenAI released ChatGPT in November 2022, it reached 100 million users in two months.

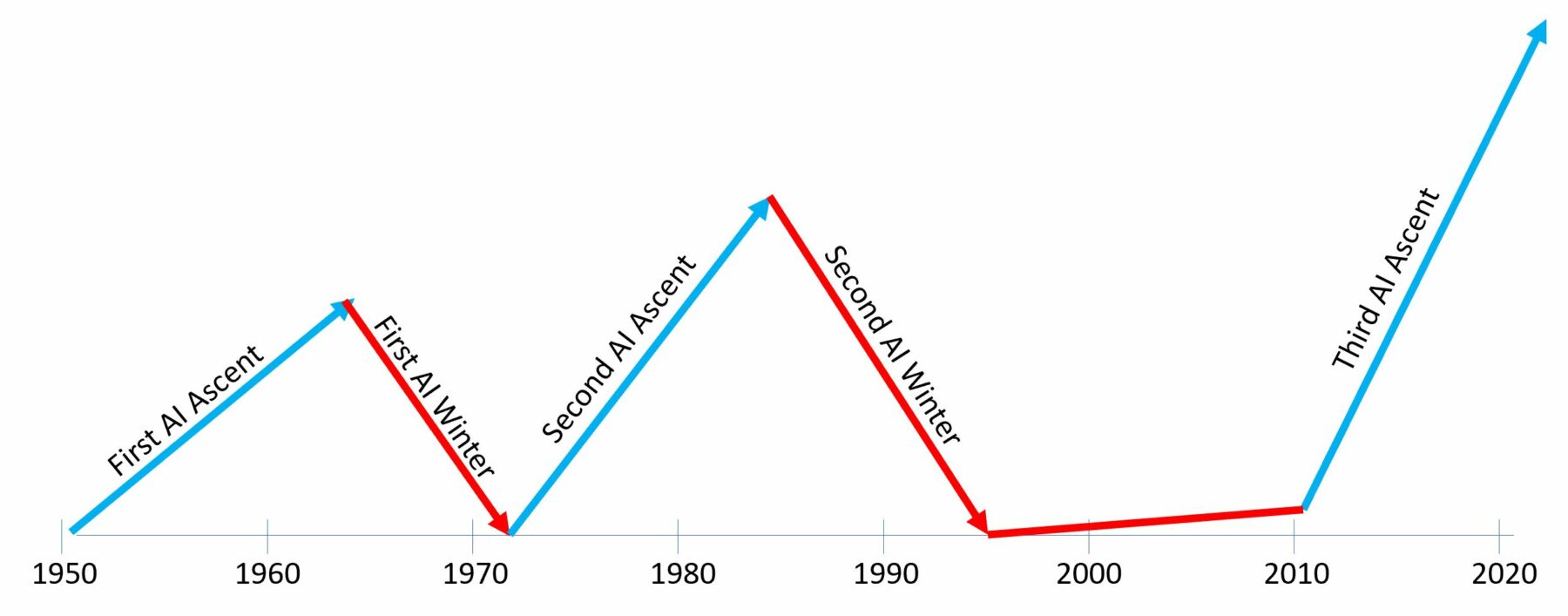

AI has captured the public imagination, but this is not the first time; we are actually in a third wave of AI progress and interest. The first two waves occurred in the early 1960s and the early 1980s. In both cases, expectations about progress toward artificial general intelligence (AGI) systems (systems with humanlike intelligence) far exceeded reality. Both periods were followed by AI winters, in which investment and interest in AI plummeted, as shown in the timeline below:

1.1 The first AI hype cycle

The first AI system was built in 1951 by Marvin Minsky and Dean Edmonds at Harvard University. It was built with 3,000 vacuum tubes and simulated a rat learning to search a maze for food. It was based on a paper by Warren McCulloch and Walter Pitts (1943) that characterized the human brain as a neural network.

John McCarthy coined the term artificial intelligence in 1956. The first AI conference was a two-month workshop held at Dartmouth College that same year. The goal was to bring together researchers in automata theory, neural networks, and the study of intelligence. The participants included several individuals who would go on to become leaders in the field of AI, including Minsky, McCarthy, Allen Newell, and Herbert Simon.

1.1.1 Early successes

Early work in AI showed tremendous promise. In 1956, at the RAND Corporation, Allen Newell and Herbert Simon created the Logic Theorist, which was able to prove many of the theorems in Whitehead and Russell’s Principia Mathematica.

In 1960, when he was at MIT Lincoln Labs, Bert Green (who became my PhD thesis advisor 18 years later) wrote BASEBALL, a computer program that could answer questions about the 1959 baseball season, like these:

- Who did the Red Sox lose to on July 5?

- Where did each team play on July 7?

- Which teams won 10 games in July?

- Did every team play at least once in each park?

For his PhD thesis at MIT in 1964, Daniel Bobrow developed the STUDENT system, which accepted a restricted subset of English in which it was possible to express a wide variety of algebra word problems such as this one:

If the number of customers Tom gets is twice the square of 20 percent of the number of advertisements he runs, and the number of advertisements he runs is 45, what is the number of customers Tom gets?

STUDENT transformed the input question into a set of simultaneous equations that it then solved. The success of STUDENT, along with other early successes, led Herbert Simon to proclaim in 1965 that

“Machines will be capable, within twenty years, of doing any work that a man can do.”
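To make the STUDENT example concrete, here is how the customers problem reduces to two simultaneous equations and solves by hand. The variable names c (customers) and a (advertisements) are our own, and this is an illustration of the arithmetic, not a reproduction of STUDENT’s internal representation:

```latex
\begin{aligned}
c &= 2\,(0.20\,a)^2 \\
a &= 45 \\
c &= 2\,(0.20 \times 45)^2 = 2 \times 9^2 = 2 \times 81 = 162
\end{aligned}
```

The hard part for STUDENT was not this algebra but parsing phrases like “twice the square of 20 percent of” into the first equation.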

1.1.2 Early failures

During this early achievement period, there were also some significant failures, the most notable of which was machine translation—computer systems that translate text from one human language to another. Researchers first attempted machine translation in the 1950s by programming a computer to act like a human translator who had a pocket translation dictionary but did not know the target language. The technique was to first store the equivalent of a pocket dictionary in the computer. Then, the program processed the source text word by word, placing the corresponding word in the target language into the output text.

Some of the more sophisticated systems also used syntactic analyses to correct for differences in word order between the two languages. For example, English usually places an adjective before the noun, while French usually places it after: “the blue house” is “la maison bleue” in French (literally, “the house blue”). Without syntactic analysis, a word-by-word system would produce the incorrect “la bleue maison.”
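The dictionary-lookup approach, and the word-order fix, can be sketched in a few lines of Python. This is a toy illustration under stated assumptions: the three-word DICTIONARY, the ADJECTIVES set, and the crude “swap an adjective with the word after it” rule are hypothetical stand-ins, not the contents of any historical system.

```python
# Hypothetical toy "pocket dictionary" (English -> French), three entries only.
DICTIONARY = {"the": "la", "blue": "bleue", "house": "maison"}
# Hypothetical part-of-speech knowledge used by the reordering rule.
ADJECTIVES = {"blue"}


def word_by_word(sentence):
    """Replace each English word with its dictionary equivalent, keeping English word order.

    Assumes every word is in DICTIONARY (a KeyError is raised otherwise).
    """
    return " ".join(DICTIONARY[w] for w in sentence.lower().split())


def with_reordering(sentence):
    """Same lookup, but first swap each adjective with the (assumed) noun that follows it."""
    words = sentence.lower().split()
    i = 0
    while i < len(words) - 1:
        if words[i] in ADJECTIVES and words[i + 1] not in ADJECTIVES:
            words[i], words[i + 1] = words[i + 1], words[i]
            i += 2  # skip past the pair we just swapped
        else:
            i += 1
    return " ".join(DICTIONARY[w] for w in words)


print(word_by_word("the blue house"))     # la bleue maison
print(with_reordering("the blue house"))  # la maison bleue
```

Note what the sketch cannot do: each English word maps to exactly one French word, which is precisely the one-to-one assumption that undermined these early systems.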

These early machine translation attempts produced disappointing results. The major problem was the mistaken assumption of a one-to-one correspondence between words in the source and target languages. Because of word ambiguity, a substantial proportion of the words in the source language had too many potential equivalents in the target language for the system to produce an accurate translation.

When forced to choose among the possible target-language alternatives for each source-language word, these systems often produced humorous results. Several plausible, though possibly apocryphal, anecdotes have become part of the lore of AI. For example, one system reportedly translated the English phrase “water buffalo” into the equivalent of “hydraulic goat” in the target language.

Idiomatic and metaphorical material caused big problems. One system reportedly translated the English “the spirit is willing, but the flesh is weak” into the equivalent of “the vodka is good, but the meat is rotten” in Russian. Another system reportedly translated “out of sight, out of mind” into “invisible idiot.”

In a 1960 review of machine translation efforts, an Israeli linguist named Yehoshua Bar-Hillel asserted that human translators could not only understand the meaning of the source language but could also reason based on their knowledge of the world—abilities that computers had yet to demonstrate. For computers to be able to translate language, he argued, they would have to do the same thing in the same way.

1.2 The first AI winter

Bar-Hillel’s point of view was reiterated six years later in the infamous ALPAC (Automatic Language Processing Advisory Committee) report, which convinced the US government to reduce its funding for this type of research dramatically. The ALPAC report concluded that machine translation was slower, less accurate, and twice as expensive as human translation, and it stated that there was no point in further investment in machine translation research. Following the report, the US government canceled nearly all of its funding for academic machine translation projects. In 1973, Sir James Lighthill’s report to the UK Science Research Council criticized the failure of AI to achieve its “grandiose” objectives. As a result, the UK government canceled its funding for most AI research in the country.

Neural networks like the ones that power most of today’s high-profile AI applications were described in 1958 by Frank Rosenblatt, at Cornell University. His pioneering paper explained how a neural network could compute a function relating inputs to outputs. The approach received a lot of press, including this quote from a 1958 New York Times article:

“The Navy revealed the embryo of an electronic computer today that it expects will be able to walk, talk, see, write, reproduce itself, and be conscious of its existence.”

Research on neural networks continued through the 1960s but was stopped in its tracks by the 1969 book Perceptrons, written by Marvin Minsky and Seymour Papert, which appeared to prove that further progress in neural networks was impossible. We now know that Minsky and Papert’s criticism, although it was accurate for the type of perceptron used by Rosenblatt, does not apply to neural networks in general. Nonetheless, the book was responsible for stopping most neural network research at the time.
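The limitation Minsky and Papert analyzed can be seen in a small experiment. The sketch below uses the textbook perceptron learning rule on a two-input, single-layer perceptron (a simplified modern rendering, not Rosenblatt’s hardware). It learns AND, but it can never learn XOR (two-input parity), because no single straight line separates XOR’s 1 outputs from its 0 outputs. The epoch count and learning rate are arbitrary choices for illustration.

```python
def predict(w, b, x):
    """Fire (output 1) if the weighted sum of the inputs plus the bias is positive."""
    return 1 if w[0] * x[0] + w[1] * x[1] + b > 0 else 0


def train_perceptron(samples, epochs=100, lr=1):
    """Perceptron learning rule: nudge the weights toward each misclassified example."""
    w, b = [0, 0], 0
    for _ in range(epochs):
        for x, target in samples:
            error = target - predict(w, b, x)  # -1, 0, or +1
            w[0] += lr * error * x[0]
            w[1] += lr * error * x[1]
            b += lr * error
    return w, b


def accuracy(w, b, samples):
    """Fraction of samples the trained perceptron classifies correctly."""
    return sum(predict(w, b, x) == t for x, t in samples) / len(samples)


INPUTS = [(0, 0), (0, 1), (1, 0), (1, 1)]
AND = list(zip(INPUTS, [0, 0, 0, 1]))  # linearly separable
XOR = list(zip(INPUTS, [0, 1, 1, 0]))  # not linearly separable

for name, data in [("AND", AND), ("XOR", XOR)]:
    w, b = train_perceptron(data)
    # AND reaches 1.0; XOR stays below 1.0 no matter how many epochs are run.
    print(name, accuracy(w, b, data))
```

Adding a hidden layer of units removes this limitation, which is why the criticism does not apply to neural networks in general.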

1.3 The second AI hype cycle

For the next decade, research funding diminished, but some research continued at universities. In the late 1970s and early to mid-1980s, excitement about AGI once again built to a frenzied peak, primarily as a result of renewed research into natural language processing and expert systems.

Rather than giving up, these later AI researchers took Bar-Hillel’s critique as a head-on challenge. They started to think about how to represent the meaning of a natural language utterance and, more generally, how to represent commonsense knowledge in a computer system in a way that can support human-like reasoning.

For example, my former colleagues at Yale University, led by Roger Schank, developed a theory of how to use hand-coded symbols to represent meaning in a computer system. Schank and his students developed several impressive AI systems, such as FRUMP (DeJong, 1979) and SAM (Schank et al, 1975). FRUMP could read stories about train wrecks, earthquakes, and terrorist attacks directly from the UPI newswire and could then produce summaries of those stories. SAM read short stories such as

John went to a restaurant. He ordered lobster. He paid the check and left.

The computer could then answer questions such as

Question: “What did John eat?”

SAM: “Lobster.”

These systems, along with other natural language processing systems developed at research institutions throughout the world, left a strong impression that the development of computers that could understand language at an AGI level was right around the corner.

Other researchers focused on building what came to be known as expert systems. They built systems that performed tasks that previously required human intelligence by processing large bodies of hand-coded symbolic rules. For example, an auto repair expert system might have this rule:

IF the temperature gauge indicates overheating and the radiator is cool to the touch

THEN the problem is a sticky thermostat.
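The way a system applies a rule like this can be sketched with simple forward chaining: keep firing any rule whose conditions are all known facts until no new conclusions appear. This is a minimal sketch, not how any particular historical system such as MYCIN worked. The first rule comes from the example above; the second rule is hypothetical, added only to show one conclusion triggering another.

```python
# Each rule is (set of conditions that must all hold, conclusion to add).
RULES = [
    # Rule from the auto repair example in the text.
    ({"temperature gauge indicates overheating", "radiator is cool to the touch"},
     "the problem is a sticky thermostat"),
    # Hypothetical follow-on rule, for illustration only.
    ({"the problem is a sticky thermostat"},
     "recommend replacing the thermostat"),
]


def forward_chain(facts, rules):
    """Repeatedly fire rules whose conditions are satisfied until no new facts are added."""
    facts = set(facts)
    changed = True
    while changed:
        changed = False
        for conditions, conclusion in rules:
            if conditions <= facts and conclusion not in facts:
                facts.add(conclusion)
                changed = True
    return facts


observed = {"temperature gauge indicates overheating", "radiator is cool to the touch"}
print(sorted(forward_chain(observed, RULES)))
```

Every fact and rule here had to be written by hand, which is exactly the knowledge-encoding bottleneck that later limited expert systems.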

Researchers such as Stanford University’s Edward Feigenbaum achieved significant recognition for early expert systems, such as the MYCIN system, which diagnosed blood infections. With 450 rules, MYCIN performed as well as some experts.

Governments poured vast amounts of money into AI during the early 1980s. In 1982, Japan announced its Fifth Generation Project, intended to create intelligent computers within ten years. In response, DARPA (the US Defense Advanced Research Projects Agency) committed $1 billion in 1983 to the Strategic Computing Initiative, an effort to build AGI in ten years. At the same time, a group of twenty-four large US corporations banded together to start MCC (Microelectronics and Computer Technology Corporation). Its flagship project, headed by Douglas Lenat, was Cyc, a system intended to hand-code all commonsense knowledge. The commonsense knowledge they hand-coded included symbolic facts such as “birds have wings,” “airplanes have wings,” and “if it has wings, it can fly.” Unless, of course, it is a chicken. The UK had its own effort named Alvey, and Europe formed ESPRIT (European Strategic Program on Research in Information Technology).

Our company, Cognitive Systems, became a hot property. At one point, I recall deciding that I had to stop doing newspaper and magazine interviews to get any work done. Cognitive had an IPO in 1986, despite not having much revenue. Nestor, a sister company to Cognitive Systems that was commercializing neural network technology, had already gone public in 1983, and Feigenbaum’s expert systems company, Teknowledge, went public in 1984.

AI was so hot in the 1980s that researchers created two companies with significant venture capital backing, Symbolics and LMI, to build computers specially designed to run the Lisp programming language, which was the preferred programming language of the AI community.

1.4 The second AI winter

In the early to mid-1980s, AI was featured on the covers of many publications. For example, the March 1982 cover of Business Week proclaimed “Artificial intelligence: The second computer age begins.”

Company after company rebranded its products as “AI-based.” It seemed that AI researchers had figured out how to make AGI systems and to represent human knowledge symbolically. It appeared that the only thing standing in the way of AGI was a bit of elbow grease to encode all human knowledge and the types of reasoning needed for intelligent behavior.

I admit to having been a significant contributor to the hype of the time. Our Cognitive Systems brochure headline was grandiose: “The vision to change the world.” We were able to make a successful public offering in February 1986, without significant revenues, on the strength of the general hype around AI.

My next company, Intelligent Business Systems, raised over $50 million in venture capital ($120 million in 2019 dollars). Raising venture capital was as difficult then as it is now. But we eventually put together a consortium of VCs and scheduled the closing for noon on a Friday at a country club. The idea was to take care of the final contractual issues in the morning and play golf in the afternoon while the lawyers were preparing the final drafts. However, we did not resolve those final issues until midnight, and we did not get to play golf. I was so tired that I ran into the back of my cofounder’s car at the first stoplight. He was 6’5” and had played tight end in the NFL. The policeman who arrived on the scene commented that we must be friends because otherwise I would be in worse shape than my car.

We sold a natural language–based accounting system. Users could ask questions such as “Who owes me money for over 90 days?” However, the company ended up failing when the second AI winter hit, and we could not raise a follow-on round of funding. The good news was that the skills of my employees were in high demand, and no one stayed unemployed for long.

My third company, Esperant Technologies, developed a natural language system that became one of the leading business intelligence products of the early 1990s. However, because AI had fallen into disfavor again, even though the product was successful, I had to strike all references to AI from the product and marketing literature.

AI failed for the second time because the symbolic hand coding of knowledge was time-consuming, expensive, and did not perform well in the real world. Expert systems could perform only very narrowly defined tasks, and natural language interfaces had limited coverage that required users to learn what they could and could not say. Symbolic AI came to be known as “good old-fashioned AI” (GOFAI), fell into disfavor by 1990, and stayed out of favor for thirty years. But it might make a comeback in the 2020s.

The AI bubble of the 1980s deflated gradually, and by the end of the 1980s, the second AI winter had begun. The Japanese government disbanded its Fifth Generation project without it having produced any significant AI technology. DARPA significantly cut back on its AI funding. MCC wound down in 2000. The market for Lisp machines imploded. Professor Stuart Russell said that his AI course at the University of California at Berkeley had 900 students in the mid-’80s and had shrunk to only 25 students in 1990.

1.5 The third AI hype cycle

During the second AI winter, DARPA continued to support AI research in universities. However, there was relatively little public awareness of AI, few products branded themselves as AI, and there was certainly no fear of it.

AI started to heat up again in the late 1990s and early 2000s. During the first decade of the new millennium, the US National Institute of Standards and Technology (NIST) held a periodic competition for machine translation systems. In 2005, Google participated for the first time and blew away the competition. Rather than using symbolic, hand-coded translation systems based on grammar and other linguistic features, Google used only automated processes. It employed large computer systems to process massive databases of news stories and other text to determine which words and phrases occurred most often before or after other words and phrases. “Just like that, they bypassed thirty years of work on machine translation,” said Ed Lazowska, the chairman of the computer science department at the University of Washington.
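Counting which words follow which other words is easy to sketch. The toy example below counts adjacent word pairs (bigrams) in a tiny made-up corpus and picks the most frequent follower of a word. It illustrates only one ingredient of statistical machine translation; real systems combined counts like these with translation statistics learned from large collections of parallel bilingual text, and this is not Google’s actual system. The corpus and the helper names are hypothetical.

```python
from collections import Counter


def count_next_words(corpus):
    """Count how often each word is immediately followed by each other word (bigram counts)."""
    counts = Counter()
    for sentence in corpus:
        words = sentence.lower().split()
        counts.update(zip(words, words[1:]))
    return counts


def most_likely_next(counts, word):
    """Return the word seen most often right after `word` in the corpus."""
    followers = {nxt: n for (prev, nxt), n in counts.items() if prev == word}
    return max(followers, key=followers.get)


# A tiny made-up corpus standing in for "massive databases of news stories".
corpus = [
    "the house is blue",
    "the blue house is old",
    "the house is old",
]
counts = count_next_words(corpus)
print(counts[("house", "is")])          # 3
print(counts.most_common(1))            # [(('house', 'is'), 3)]
print(most_likely_next(counts, "is"))   # old
```

No grammar rules appear anywhere: the preferences emerge entirely from the data, which is the key difference from the hand-coded systems of the earlier cycles.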

Especially since 2010, companies, government agencies, nonprofits, and other organizations have once again been pouring money into AI R&D. This time, however, it is China that is making a gigantic push into AI. In July 2017, China’s State Council issued the Next-Generation AI Development Plan, which aims for China to become the “premier global AI innovation center” by 2030 and calls for China’s AI business to be worth $148 billion. Russian president Vladimir Putin has said that whichever country takes the lead in AI will rule the world.

Companies, once again, are rebranding products as having AI inside. A study of European startups that advertise their AI capabilities found that almost 45% do not even use AI. As The Atlantic said, AI is “often just a fancy name for a computer program.”

Private companies have recruited many of the leading academic researchers in the AI field. Google, Facebook, Microsoft, Apple, Netflix, Uber, Amazon, Nvidia, Baidu, IBM, Tencent, Alibaba, Salesforce, and many others have AI teams that publish significant numbers of academic papers and blog posts.

Jeff Dean, head of Google AI, noted that the number of AI papers published had followed Moore’s Law, more than doubling every two years, from about 1,000 per year in 2009 to over 20,000 per year in 2017. Tickets to the 2018 Conference on Neural Information Processing Systems reportedly sold out 15 minutes after registration opened on the website. The 2019 conference had 13,000 registered participants and 6,743 papers submitted.

In early 2020, it is fair to say that AI has become hot once again, that it is all over the press, and that interest in AI is reaching new peaks every year. Are we in another bubble? Or is this time for real?

This time, it is clear that AI is succeeding. Search engines answer a lot of our questions intelligently. We can go to a webpage in a language we do not know, click translate, and easily understand the page’s contents. AI can identify the people in our photos. And new and exciting AI applications are being released daily.

1.6 No third winter in sight

There have been three periods of high exuberance around the prospects for AGI. Each of the first two cycles ended in extended periods of low interest in AGI. The current cycle has staying power because of its many successful commercial AI applications.

1.7 What is AI?

During the first two cycles of AI, researchers focused on hand coding symbolic knowledge and reasoning rules. This proved to be brittle and required far too much manual effort. The current cycle of AI relies on automated learning, which overcomes the difficulty of hand coding symbolic rules.

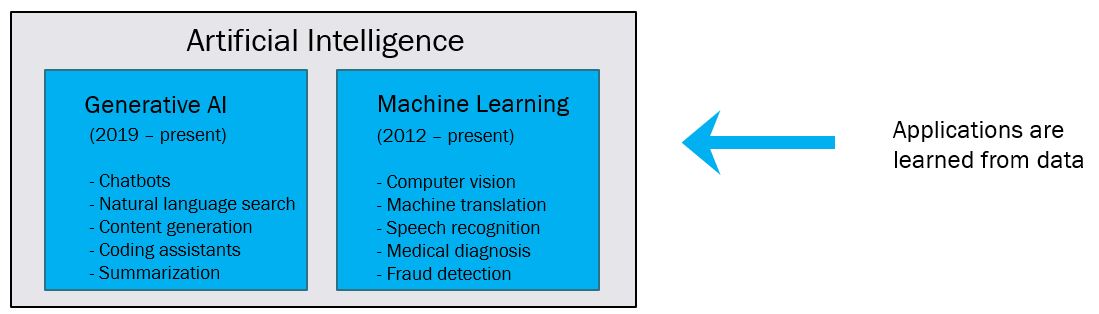

There are two main types of AI systems as of late 2023. They are illustrated below:

Generative AI started making waves in the academic community with the 2019 release of OpenAI’s GPT-2 (Radford et al, 2019) and captured the public imagination with OpenAI’s release of ChatGPT in November 2022. The primary applications of generative AI are chatbots (e.g., for customer service), natural language search, content generation (e.g., writing assistance), coding assistants, and document summarization.

Machine learning had its breakthrough moment in 2012, when a team of University of Toronto researchers (Krizhevsky et al, 2012) used a deep learning neural network named AlexNet to win the 2012 ImageNet image recognition contest. They did not just win the contest: AlexNet’s top-5 error rate of 15.3% was far below the 26.2% of the second-best system, after years of 1% or 2% improvements. This result shifted the field’s focus from older AI techniques to deep learning neural networks and led to the speech recognition, machine translation, and facial recognition systems we all use today.

The primary characteristic of these AI systems is that they learn from data rather than having rules explicitly coded by people.

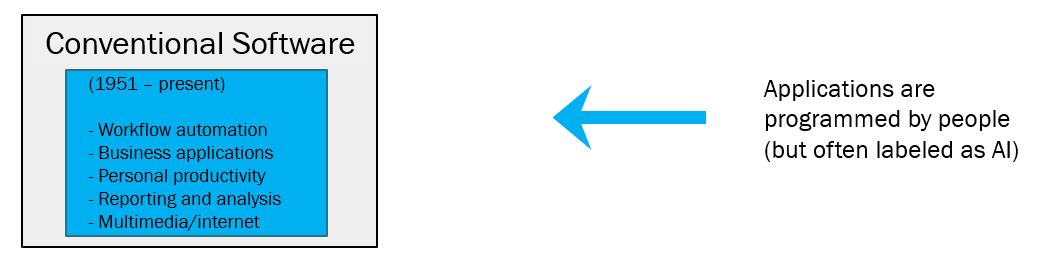

It’s important to distinguish AI systems that learn from conventional software:

Conventional software systems are developed with rules and procedures that are hand-coded by human programmers. Because these systems often perform tasks associated with intelligence, they are frequently labeled as AI, but they are not really AI.

This book has chapters covering a variety of AI topics:

- Chapter 2 examines supervised learning

- Chapter 3 explains deep learning

- Chapter 4 describes transfer learning

- Chapter 5 provides an overview of large language models

- Chapter 6 reviews thinking and reasoning research on large language models

- Chapter 7 discusses natural language processing

- Chapter 8 explains information retrieval

- Chapter 9 discusses machine translation

- Chapter 10 provides an overview of speech recognition

- Chapter 11 covers computer vision

- Chapter 12 explains reinforcement learning

- Chapter 13 discusses robotics

- Chapter 14 explains unsupervised learning

The material in this book is intended as an AI tutorial for readers who want to understand how AI works but don’t have the background or patience for the advanced mathematics found in many AI textbooks. Most textbooks and journal articles on AI contain a great deal of advanced mathematics (multivariate calculus, numerical optimization, linear algebra, graph theory, set theory, symbolic logic, and probability theory). This math is necessary for computer scientists and statisticians who develop new AI algorithms, and even for data scientists whose job is to apply AI algorithms. However, it represents a barrier to everyone else who would just like to understand how AI works.

This book provides an in-depth explanation of how AI works without any advanced math. Throughout the book, you’ll see hyperlinks to the original research articles (which do contain advanced math), and you can use them as an index to the research literature to find even more in-depth information. However, the material in this book is dense and research-oriented and is not intended to be read straight through like a typical book. Please help support this effort by commenting. If you see any inaccuracies, find portions difficult to understand, or have suggestions, please leave them as comments and I’ll do my best to address them.