7.0 Overview

A huge number of artificial intelligence use cases require natural language processing technology, because natural language processing is needed for any interaction that involves voice or machine-readable text. Some example use cases include:

- Searching unstructured data in files and/or databases

- Chatbots that interact via voice or text

- Translating from one language to another

- Answering questions

- Finding information on the internet and/or from news sources

- Analyzing or summarizing news commentary

- Converting medical notes to structured data

- Analyzing the sentiment of posts and/or news articles

- Searching legal documents

Big data has made these use cases even more important. There are almost half a billion tweets per day on Twitter. How does a company sort through all those to determine how customers are reacting to a new product introduction? How does a political organization analyze voter reaction to a recent speech? In the world of finance, regulatory filings contain massive amounts of text. How can financial analysts sort through the mountains of information in multiple languages to find key pieces of data? Natural language processing is now dominated by large language models.

The field of natural language processing has seen multiple paradigm shifts over the last 70 years. The dominant paradigms have been:

Symbolic NLP: ~1955 to 1990

Statistical NLP: ~1990 to 2013

Deep learning / Transfer learning: ~2013 to 2020

Large language models: ~2020 to present

Deep learning approaches were discussed in the chapter on transfer learning. They started with word embeddings around 2013 and progressed to fine-tuning of smaller language models such as BERT in 2018.

Large language models were discussed extensively in the chapters on generative AI and reasoning.

Symbolic NLP dominated the early years of AI from the 1960s through the 1980s and is still in wide use today in commercial applications. Symbolic NLP is the primary topic of this chapter.

Statistical NLP (Manning and Schütze, 1999) became the dominant paradigm in universities and research labs in the 1990s and is briefly discussed at the end of this chapter.

7.1 Natural language processing challenges

7.1.1 Literal vs. implied meaning

Three-year-old children understand language. We have computers that can beat chess champions. Why is building systems that understand natural language difficult? It’s natural to think that the meaning of a sentence is the composite of the individual meanings of the words in the sentence and the meaning of a paragraph is the composite of the meanings of the sentences in the paragraph.

The principle of compositionality was first put forth by philosopher Gottlob Frege in 1882 and states that the meaning of a sentence (or text) is determined by the meanings of its individual words plus the syntactic rules for combining them. But this literal meaning is just the tip of the iceberg in human understanding. Language understanding involves far more than knowing the dictionary meanings of the words and applying grammatical rules. Even children make extensive use of world knowledge in understanding language. For example, consider this statement:

The police officer held up his hand and stopped the truck

As psychologists Allan Collins and Ross Quillian (Collins and Quillian, 1972) pointed out, people’s understanding of this sentence goes far beyond the literal meaning and includes a great deal of implicit meaning. The understanding of this sentence includes facts such as:

- Trucks have drivers

- People obey police officers

- Trucks have brakes that will cause them to stop

- Drivers can step on the brake to stop the truck

Even an eight-year-old would include these bits of world knowledge in their understanding of the sentence. In contrast, consider this very similar statement:

Superman held up his hand and stopped the truck

Our understanding of this sentence is very different (Schank and Abelson, 1977). Here, we draw on our knowledge of a science fiction character and we understand that Superman applied physical force to stop the truck. Our understanding of these two sentences goes far beyond the meanings of the individual words plus the grammatical rules (which are basically the same in both sentences). Similarly, if we hear someone say

I like apples

we know they are talking about eating even though eating was never mentioned (Schank, 1972). If you hear that

John lit a cigarette while pumping gas

our commonsense knowledge tells us that this is a bad idea, and we expect the next sentence to tell us whether there was an explosion. Along the same lines, consider the examples below:

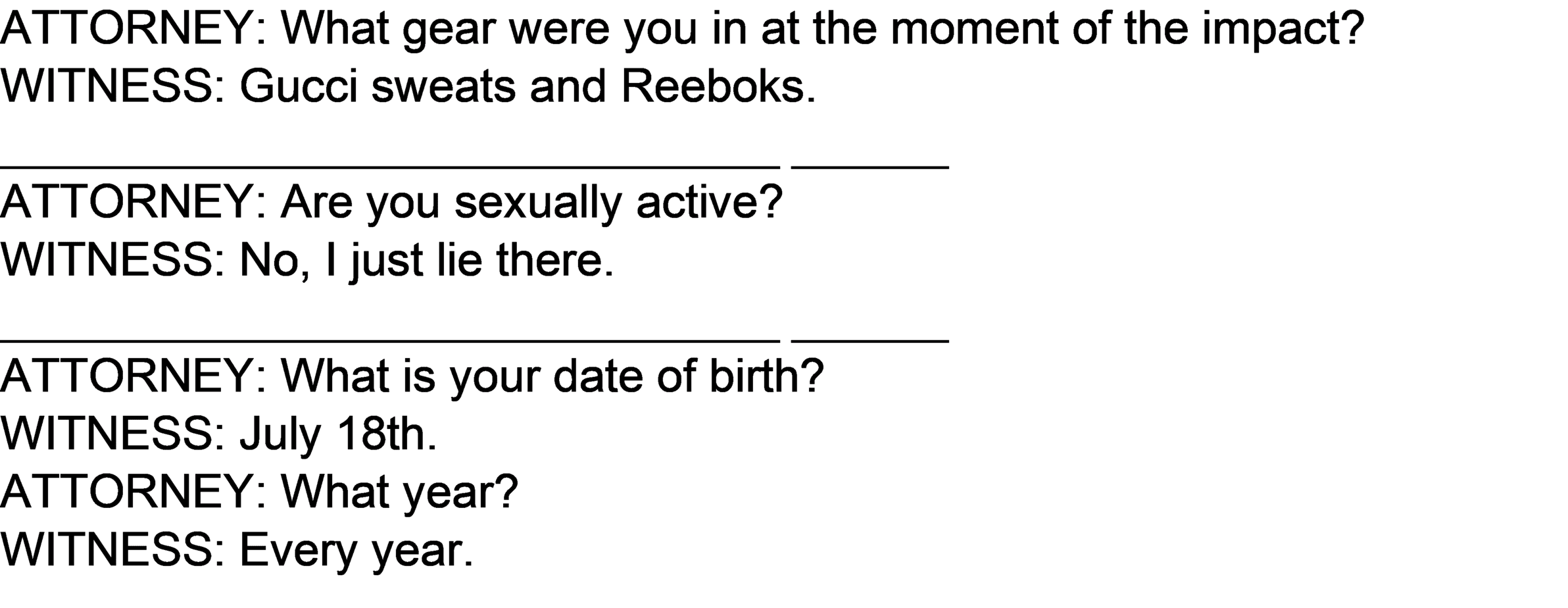

Source: Disorder in the Court

In each of these examples, we laugh because the witness misunderstood the attorney’s question. But think about how much world knowledge we had to apply to correctly understand the attorney’s intent. In the first example, the word “gear” has multiple meanings and the witness chose the wrong meaning. Without any other context, most of us reading the attorney’s question will infer that this was a court case that had something to do with a car accident. We could go on ad infinitum about all the world knowledge we need and all the inferences we need to make in order to understand the attorney’s questions in each example.

7.1.2 Commonsense world knowledge

People have a great deal of knowledge about the world that is used to understand natural language by inferring the implied meaning of natural language utterances. Some examples of world knowledge include:

Entities. We know a lot about entities – people, places, and things. We know facts about Barack Obama, Tiger Woods, Paris, London, the Taj Mahal, the Super Bowl, and much more. Just as importantly, we know how to find information when we need it.

Concepts. We know that German Shepherds are dogs, that dogs are mammals, and that mammals are animals. We also know that dogs have four legs and a tail and that they (usually) bark.

Relationships. We know a lot about relationships between entities. We know that Orson Welles produced the movie, Citizen Kane. Or, if we don’t know it, we know how to look it up.

Events. We know a lot about events such as the crash of the Hindenburg.

Numbers. We know that 10 is more than 5. We understand fractions, percentages, and currency.

Geography. We can picture in our heads the relative locations of cities, states, countries, bodies of water, and mountains.

Time. People understand clocks, calendars, the number of hours in a day, and birthdays.

Aging. People know to expect different cognitive capabilities and behaviors from a newborn, a toddler, a child, a teenager, an adult, and a senior.

Scripts. We know the typical patterns of events of eating in a restaurant, buying goods in a store, or lending money to a friend (Schank and Abelson, 1977).

Daily living. We know how to eat, bathe, and use a cell phone.

Psychology. We know about emotions, moods, relationships, attitudes, and beliefs.

Physics. We know that if we drop a glass, it will fall, contact the ground, and shatter into pieces. We have at least a rudimentary understanding of concepts like gravity, friction, condensation, evaporation, erosion, elasticity, inertia, support, containment, light, heat, electricity, magnetism, conduction, and many more principles and concepts that can be collectively termed “intuitive physics” (e.g. Lake et al, 2017).

Biology. We understand that people and most animals need to eat food, breathe, sleep, and procreate. We know that lions eat antelopes, birds eat worms, and small fish eat plankton.

Statistics. We know that if we roll a die many times, we’ll get roughly equal numbers of ones, twos, threes, fours, fives, and sixes.

Rules of thumb. We know that most but not all dogs bark. We know that most but not all birds fly. We know to avoid snakes and alligators.

Visual information. When people are asked the shape of a German Shepherd’s ear, most report visualizing a German Shepherd and inspecting their mind’s eye for its shape (Kosslyn et al, 1979).

Space. We know that the world exists in three dimensions and can process references to “above”, “near”, and “to the left of…”.

Math. We understand quantities and know how to perform mathematics on numbers.

Procedures. We know many procedures. For example, “First, jack up the car. Then take off the old tire. Then replace it with the new tire.”

Emotions. We understand anger, fear, joy, sadness, and many other emotions.

Moods. We recognize the mood of a speaker (e.g. cheerful, irritable, depressed).

Attitudes. We recognize the beliefs, preferences, and biases of a speaker.

Personality. We recognize a speaker’s personality traits (e.g. nervous, anxious, jealous).

Causality. We understand cause-and-effect relationships. For example, if I flip a light switch, the light will turn on.

Specialized knowledge. A banker has specific knowledge of banking. A pediatric ophthalmologist has specific knowledge of childhood eye diseases.

7.1.3 Word ambiguity in natural language processing

Consider this headline that appeared in a newspaper (Shwartz, 1987):

VILAS, UPSET AND UPSET, COMPLAINS TO A FAULT

Vilas refers to Guillermo Vilas, a famous tennis player in the mid-1980s with a bit of a temper. An adult human reading this story infers that the first instance of the word UPSET refers to someone who loses a sports event when they were favored to win. People also realize that the second instance of the word UPSET doesn’t refer to a favorite losing at all. Someone who plays or follows tennis but doesn’t know or remember Vilas would likely infer that Vilas was a tennis player from the double entendres in the words FAULT and UPSET. The word “upset” has at least two different meanings, and both are required to understand the Vilas headline.

This is not unusual in English or in most other natural languages. Many words have multiple meanings. For example, the word “make” in English has 32 different meanings in the Merriam-Webster dictionary plus 41 idiomatic uses (e.g. make a face, make do). In fact, a very high percentage of the most frequently used words and phrases in natural languages are ambiguous.

Identifying the intended word sense for each word and/or phrase in a sentence is a difficult task. Going back to our tennis example, to make this inference, a person (or machine) must know what a sporting event is. They must know that tennis is a sporting event. They must know that people often gamble on sporting events. They must know that gambling establishments set the odds of a person or team winning the sporting event and that the predicted winner is known as the favorite. They must know that if the favorite loses, it is known as an upset.

The second use of the word upset has a different word sense and refers to the state of mind of the loser. This requires intuitive psychological knowledge of the range of human emotions. To interpret “COMPLAINS TO A FAULT”, it would be wrong to interpret the word FAULT in its usual tennis context, where it means a missed serve. The reader may consider that interpretation initially. Even to consider it, the reader must know how tennis matches are scored, that each point starts with one player making a serve, which means that the player makes an overhand motion to strike the ball, and that a legal serve must land in a certain area of the court. And, of course, they must know what a tennis court is and how it is marked. But even if that interpretation is briefly considered, it is quickly discarded in favor of the interpretation that Vilas complained too much. To make that interpretation, one needs to understand what it means to complain in general, what it means to complain about a tennis call, that a judge made the call, and most of all that questioning a judge’s call in moderation can be considered reasonable behavior. However, too much complaining is bad manners, which is a personality fault. Of course, we must understand what a personality is and what personality faults are, and on and on and on.
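A classic (and far cruder) machine approach to choosing a word sense is the simplified Lesk algorithm, which picks the sense whose dictionary gloss shares the most words with the surrounding text. The sketch below assumes a two-sense inventory for “upset” whose glosses are written by hand for illustration (they are not taken from any real dictionary); `SENSES`, `tokenize`, and `simplified_lesk` are hypothetical names. Word overlap is a poor stand-in for the chain of world knowledge described above, but it shows the basic mechanics of picking a sense from context.

```python
# Hypothetical sense inventory: glosses written for illustration only,
# not copied from WordNet or any real dictionary.
SENSES = {
    "upset": {
        "upset.defeat": "an unexpected defeat in a sports match or contest of a favored player or team",
        "upset.distressed": "feeling worried unhappy angry or emotionally disturbed",
    }
}

STOPWORDS = {"a", "an", "the", "of", "or", "in", "to", "and", "was", "by"}


def tokenize(text):
    """Lowercase, strip surrounding punctuation, and drop stopwords."""
    words = (w.strip(".,!?;:\"'").lower() for w in text.split())
    return {w for w in words if w and w not in STOPWORDS}


def simplified_lesk(word, context):
    """Return the sense whose gloss overlaps most with the context words.

    Ties (including zero overlap everywhere) go to the first sense listed.
    """
    context_words = tokenize(context)
    best_sense, best_overlap = None, -1
    for sense, gloss in SENSES[word].items():
        overlap = len(tokenize(gloss) & context_words)
        if overlap > best_overlap:
            best_sense, best_overlap = sense, overlap
    return best_sense


print(simplified_lesk("upset", "The favored player lost the match in a stunning upset"))
print(simplified_lesk("upset", "He was angry and upset and complained to the umpire"))
```

On the Vilas headline itself, neither gloss shares a word with the context, so the sketch falls back to the first listed sense for both occurrences of UPSET. Telling the two apart requires exactly the world knowledge discussed above.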

7.1.4 Coreference resolution

Words such as pronouns often refer to other parts of the sentence and/or previous sentences. For example,

John gave the toddler ice cream.

He liked it.

People don’t have a lot of trouble determining that “He” refers to the toddler and “it” refers to ice cream but this is a major issue for computers.
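One naive machine strategy is to resolve each pronoun to the most recent earlier mention that agrees with it in gender and number. The sketch below hand-codes the mentions and their agreement features (the class, table, and function names and the feature values are all hypothetical; a real system would have to extract them) and applies that recency heuristic.

```python
from dataclasses import dataclass


@dataclass
class Mention:
    text: str
    gender: str  # "male", "female", "neuter", or "unknown"
    number: str  # "sg" (singular) or "pl" (plural)


# Hypothetical agreement features required by each pronoun.
PRONOUNS = {
    "he": ("male", "sg"),
    "she": ("female", "sg"),
    "it": ("neuter", "sg"),
    "they": ("unknown", "pl"),
}


def agrees(mention, gender, number):
    """Gender matches (or either side is unknown) and number matches."""
    gender_ok = mention.gender == gender or "unknown" in (mention.gender, gender)
    return gender_ok and mention.number == number


def resolve(pronoun, mentions):
    """Return the most recent earlier mention that agrees with the pronoun."""
    gender, number = PRONOUNS[pronoun.lower()]
    for mention in reversed(mentions):
        if agrees(mention, gender, number):
            return mention.text
    return None


# Mentions from "John gave the toddler ice cream.", in order of appearance.
mentions = [
    Mention("John", "male", "sg"),
    Mention("the toddler", "unknown", "sg"),
    Mention("ice cream", "neuter", "sg"),
]

print(resolve("He", mentions))
print(resolve("it", mentions))
```

The heuristic gets this example right, but only because the toddler happens to be the most recent compatible mention. For “John gave the toddler ice cream. He told him to eat it slowly.”, the same rule would again pick the toddler for “He”, while most readers take John to be the speaker, a judgment that rests on world knowledge about who gives instructions to toddlers.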

7.1.5 Other linguistic natural language processing phenomena

To extract meaning, a parser not only needs to be able to resolve word ambiguities and references as discussed above but also needs to handle many linguistic phenomena such as:

Idioms: Idiomatic phrases such as “a hot potato” and “at the drop of a hat” have meanings that are unrelated to the literal meanings of the individual words.

Metaphors: Statements such as

John is a couch potato

are metaphors. The meaning of the statement is not that John is a member of the potato family. Rather, John spends a lot of time sitting around.

Metonymy: The use of the name of one thing for that of another of which it is an attribute or with which it is associated. For example, the use of “Vietnam” in “Bill Clinton’s presidency was haunted by sex, drugs, and Vietnam” (Markert and Nissim, 2007). The meaning that must be extracted and represented here is quite complex.

Parts of speech: At a very high level, English contains nine well-known Parts Of Speech (POS), listed below:

Noun: A person, place, thing, or idea

Pronoun: Used in place of a noun, e.g. “he”, “she”, “they”, “it”

Verb: Expresses an action or a state of being

Adjective: Modifies or describes a noun

Adverb: Modifies or describes a verb, adjective, or another adverb, e.g. “carefully”, “very”

Preposition: A word that indicates the relationship of the word or phrase following it to the words that precede it, e.g. “the dog with the brown nose”. Example prepositions include “with”, “to”, “on”, “in”, “from”, and “by”

Conjunction: Joins words, phrases, or clauses, e.g. “and”, “or”, “but”

Interjection: A word that expresses emotion, e.g. “Oops!”

Determiner: Indicates definiteness vs. indefiniteness, e.g. “a”, “an”, “the”

However, computational linguists have proposed several finer-grained categorizations, such as the 36-category Penn Treebank (Marcus et al, 1993) tag set (Santorini, 1991) shown below:

1. CC Coordinating conjunction
2. CD Cardinal number
3. DT Determiner
4. EX Existential there
5. FW Foreign word
6. IN Preposition or subordinating conjunction
7. JJ Adjective
8. JJR Adjective, comparative
9. JJS Adjective, superlative
10. LS List item marker
11. MD Modal
12. NN Noun, singular or mass
13. NNS Noun, plural
14. NNP Proper noun, singular
15. NNPS Proper noun, plural
16. PDT Predeterminer
17. POS Possessive ending
18. PRP Personal pronoun
19. PRP$ Possessive pronoun
20. RB Adverb
21. RBR Adverb, comparative
22. RBS Adverb, superlative
23. RP Particle
24. SYM Symbol
25. TO to
26. UH Interjection
27. VB Verb, base form
28. VBD Verb, past tense
29. VBG Verb, gerund or present participle
30. VBN Verb, past participle
31. VBP Verb, non-3rd person singular present
32. VBZ Verb, 3rd person singular present
33. WDT Wh-determiner
34. WP Wh-pronoun
35. WP$ Possessive wh-pronoun
36. WRB Wh-adverb
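To relate the fine-grained tags to the nine coarse categories, a system can use a simple lookup table. The grouping below is our own illustrative assumption, not part of the Penn Treebank guidelines: tags without a clean coarse equivalent (such as CD, EX, FW, LS, POS, RP, SYM, and TO) map to “Other”, and IN is mapped to Preposition even though it also covers subordinating conjunctions. The names `PENN_TAGS`, `PENN_TO_COARSE`, and `coarsen` are hypothetical.

```python
# The 36 Penn Treebank tags listed above.
PENN_TAGS = set(
    "CC CD DT EX FW IN JJ JJR JJS LS MD NN NNS NNP NNPS PDT POS PRP PRP$ "
    "RB RBR RBS RP SYM TO UH VB VBD VBG VBN VBP VBZ WDT WP WP$ WRB".split()
)
assert len(PENN_TAGS) == 36

# Illustrative grouping into the nine coarse categories (an assumption, lossy).
PENN_TO_COARSE = {
    "NN": "Noun", "NNS": "Noun", "NNP": "Noun", "NNPS": "Noun",
    "PRP": "Pronoun", "PRP$": "Pronoun", "WP": "Pronoun", "WP$": "Pronoun",
    "VB": "Verb", "VBD": "Verb", "VBG": "Verb", "VBN": "Verb",
    "VBP": "Verb", "VBZ": "Verb", "MD": "Verb",
    "JJ": "Adjective", "JJR": "Adjective", "JJS": "Adjective",
    "RB": "Adverb", "RBR": "Adverb", "RBS": "Adverb", "WRB": "Adverb",
    "IN": "Preposition",
    "CC": "Conjunction",
    "UH": "Interjection",
    "DT": "Determiner", "PDT": "Determiner", "WDT": "Determiner",
}


def coarsen(tagged):
    """Replace each Penn tag with its coarse category ("Other" if none fits)."""
    return [(word, PENN_TO_COARSE.get(tag, "Other")) for word, tag in tagged]


# A sentence tagged by hand for illustration.
sentence = [("The", "DT"), ("dog", "NN"), ("with", "IN"), ("the", "DT"),
            ("brown", "JJ"), ("nose", "NN"), ("barked", "VBD"), ("loudly", "RB")]
print(coarsen(sentence))
```

Going from fine to coarse is easy; going the other way is not, which is one reason annotated corpora use the finer tag set.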

In addition to POS categories for words, computational linguists assign categories to phrases such as:

- Noun Phrase: A noun plus modifiers (e.g. “men’s small white shirt”)

- Verb Phrase: A verb plus its direct and/or indirect objects (e.g. “gave Mary a present” in “John gave Mary a present”)

- Prepositional Phrase: A preposition and its object (e.g. “the dog with the brown nose”)

The Penn Treebank has 20 phrase-level categories for English.

Synonyms: Many words have the same or very similar meanings such as “brave” and “courageous”.

Relational nouns: Relational nouns define a relationship. For example,

Dave is John’s brother.

Here, “brother” defines a relationship between Dave and John.

Collocations: It is often necessary to look at pairs of words to determine meaning. For example, “saving time” has one meaning and “saving money” has another meaning. A collocation can also be more than two words such as “making the bed”.
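Statistical systems often detect collocations with pointwise mutual information (PMI), which compares how often two words appear side by side with how often they would co-occur by chance: PMI(x, y) = log2(P(x, y) / (P(x)P(y))). The four-sentence corpus below is made up for illustration, and `pmi_table` is a hypothetical helper; real collocation finders use far larger corpora and frequency cutoffs, because PMI is unreliable for rare words.

```python
import math
from collections import Counter


def pmi_table(sentences):
    """Count unigrams and adjacent bigrams; return a PMI (in bits) function."""
    unigrams, bigrams = Counter(), Counter()
    for sentence in sentences:
        tokens = sentence.lower().split()
        unigrams.update(tokens)
        bigrams.update(zip(tokens, tokens[1:]))  # bigrams stay within a sentence
    n_words = sum(unigrams.values())
    n_bigrams = sum(bigrams.values())

    def pmi(x, y):
        joint = bigrams[(x, y)] / n_bigrams
        if joint == 0:
            return float("-inf")  # the pair was never seen side by side
        return math.log2(joint / ((unigrams[x] / n_words) * (unigrams[y] / n_words)))

    return pmi


CORPUS = [
    "that issue is a hot potato",
    "the budget is a hot potato",
    "the potato is in the oven",
    "the soup is hot",
]
pmi = pmi_table(CORPUS)
# "hot potato" co-occurs more than chance predicts; "the potato" less so.
print(pmi("hot", "potato") > pmi("the", "potato"))
```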

Out of vocabulary words: Natural language processing systems often encounter words not in the system’s lexicon. Common examples are proper names, technical terms, and newly coined terms. For example, if I see a sentence that says

The gorf was visibly upset

even though I don’t know what a gorf is, I can infer that it is an entity type that belongs to the animal hierarchy.

Elliptical references: Sentences with omitted words. For example, “John washed and ironed his clothes” is equivalent to “John washed his clothes and John ironed his clothes”.

Compound sentences: Sentences with two independent clauses such as “My favorite team is the Patriots but I also like the Giants”.

Copulas: Also known as “linking verbs”, copulas are words (usually verbs) whose purpose is to link a subject and a complement (i.e. the part of the sentence immediately following the verb). The verb “is” is commonly used as a copula (e.g. “The dog is brown”). Oftentimes, however, this more common copula reading must be set aside (e.g. in “John is taking a test”, “is” serves as an auxiliary verb).

Modality: Modal language constructions express the speaker’s rhetorical stance (i.e. the speaker’s attitude towards the facts expressed in the sentence). Examples of modal expressions include probabilities and possibilities (e.g. John might be coming to the party), time (“An iPad is always on”), conditionals (e.g. If the light is on, John is home), permissions and requirements (John should give his brother a ride), and necessity (John must give his brother a ride).

Sentence type: A sentence can be a statement, a question, a command, or an exclamation.

Conditionals: Sentences expressing conditional actions or events such as

If it’s cloudy, it might rain

Quantifiers: The use of quantifiers such as “every” (e.g. John has crashed every car he has owned).

Sets: For example,

John won 2 of the 3 games.

Themes and character development: The meaning of a text is more than the sum of the meanings of the individual sentences. For example, in a novel, there are themes and character development.

Expectations: When one starts to read a news article about a train wreck, the understanding of the story includes expectations about what will come next in the article. For example, one would expect to hear if people were injured.

Negatives: A good meaning representation should include a representation of negatives in sentences such as

John did not go to the store yesterday

Stance: The interpersonal stance taken by the speaker (e.g. distant, warm, contentious, supportive).

Other frequently occurring phenomena that complicate the process of understanding language for both humans and computers include misspellings, abbreviations, and ungrammatical utterances. Also, some languages, such as Chinese, don’t use spaces between words. Additionally, punctuation marks such as commas and periods must be accounted for as tokens. Some other linguistic phenomena that are especially troublesome for machine translation include:

Word order: In English, French, and Italian, the basic word order of a sentence is SVO (SUBJECT VERB OBJECT). Subjects are typically noun phrases that appear before the verb, and objects appear after the verb. In contrast, the typical word order in German and Japanese is SOV (SUBJECT OBJECT VERB), although in German main clauses the finite verb comes second. For example, in English one would say “John hit the ball”. In German one would say the equivalent of “John has the ball hit” (“John hat den Ball getroffen”), with the main verb at the end. Other languages are OVS, VOS, or VSO, and many languages tolerate multiple word orders.
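A toy sketch of what the word-order difference means for a text generator: given the same subject, verb, and object, the surface order depends only on the language’s basic word order. The `linearize` helper is hypothetical, and it deliberately ignores everything else a real generator must handle (inflection, auxiliaries, case marking, and German’s verb-second main clauses).

```python
def linearize(subject, verb, obj, order="SVO"):
    """Arrange subject (S), verb (V), and object (O) in the given basic word order."""
    if sorted(order) != sorted("SVO"):
        raise ValueError(f"order must be a permutation of 'SVO', got {order!r}")
    slots = {"S": subject, "V": verb, "O": obj}
    return " ".join(slots[role] for role in order)


# The word orders mentioned in the text.
for order in ("SVO", "SOV", "OVS", "VOS", "VSO"):
    print(order, "->", linearize("John", "hit", "the ball", order))
```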

Word alignment: Words do not always align one-to-one across languages. The Russian word for “hand” is “ruka”. However, “ruka” is also the Russian word for “arm”. The English language has one word for “snow”, whereas the Alaskan Yupik and Inuit languages have numerous words for snow.

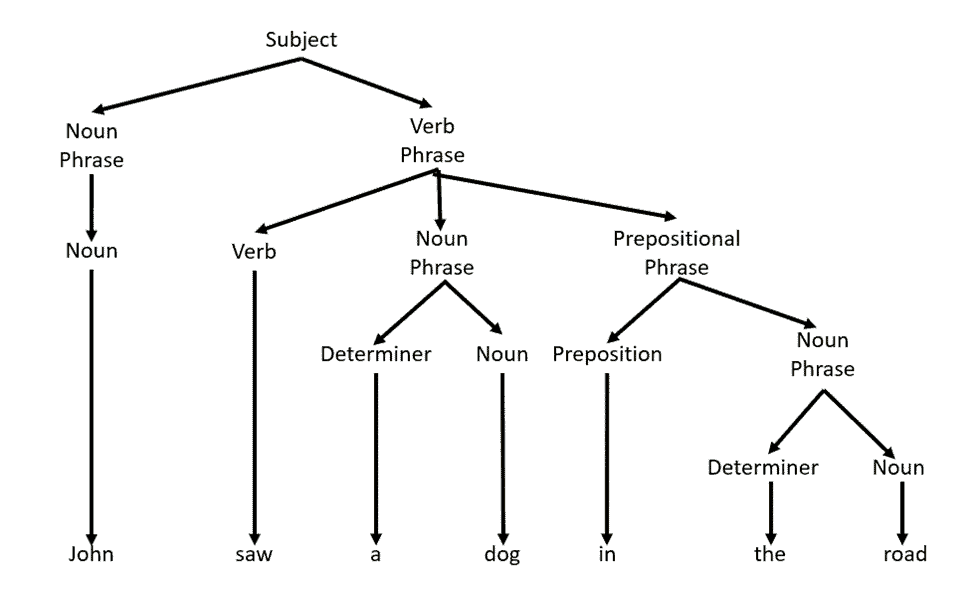

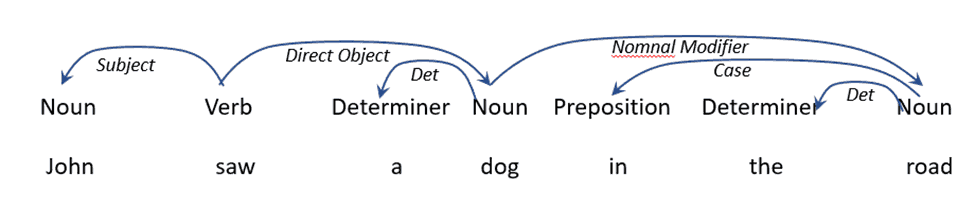

Language syntax: The syntax (or grammar) of a language defines how POS categories are arranged to constitute a well-formed sentence in that language. An example of a syntactic parse tree is shown below:

Computational linguists typically represent the syntax of a sentence in a parse tree such as the one above. Since each language has different POS categories and languages differ in sentence structure, it is not surprising that every language has its own syntax.
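In code, a constituency parse tree is commonly stored as a nested structure and printed in the bracketed notation used by the Penn Treebank. The sketch below writes the tree for “John hit the ball” by hand (it is not parser output) and uses hypothetical helper names; `productions` lists the grammar rules the tree implies, which is one way to see how a syntax arranges POS categories into phrases.

```python
# A parse tree as nested tuples: (label, child, child, ...); leaves are strings.
TREE = ("S",
        ("NP", ("NNP", "John")),
        ("VP", ("VBD", "hit"),
               ("NP", ("DT", "the"), ("NN", "ball"))))


def to_brackets(tree):
    """Render a tree in Penn Treebank-style bracket notation."""
    if isinstance(tree, str):
        return tree
    label, *children = tree
    return "(" + label + " " + " ".join(to_brackets(c) for c in children) + ")"


def leaves(tree):
    """Recover the sentence's words from left to right."""
    if isinstance(tree, str):
        return [tree]
    return [word for child in tree[1:] for word in leaves(child)]


def productions(tree):
    """List the grammar rules (parent -> children) used by the tree, top-down."""
    if isinstance(tree, str):
        return []
    label, *children = tree
    rhs = " ".join(c if isinstance(c, str) else c[0] for c in children)
    rules = [label + " -> " + rhs]
    for child in children:
        rules.extend(productions(child))
    return rules


print(to_brackets(TREE))
print(productions(TREE))
```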

Sentence lengths: A problem specific to machine translation is that the correct translation from a source language to a target language will often have a different number of words in the source and target languages.

Discontiguous translations: For example, the word “not” in English translates to the discontiguous French pair “ne … pas”.

Function words: Function words like “a” and “the” appear in some languages but not others.

Morphology: A morpheme is the smallest unit of meaning in a language. For example, the English word “indistinguishable” has three morphemes: “in-”, “distinguish”, and “-able”. The word “distinguish” is known as a “free morpheme” because it can stand on its own. The two affixes (the prefix “in-” and the suffix “-able”) are known as “bound morphemes” because they must be bound to a free morpheme. Compound words like “chairman” are concatenations of two or more free morphemes.

Inflectional and derivational forms provide valuable clues to the syntactic and semantic function of a word, phrase, or another part of a sentence. For example, the verbs “likes” and “liked” convey different meanings (present vs. past tense). The adjective “inefficient” has a very different meaning than the adjective “efficient”.
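A toy affix-stripping segmenter makes the free/bound distinction concrete. It assumes a tiny hand-made lexicon (the names `FREE`, `PREFIXES`, `SUFFIXES`, and `segment` are hypothetical) and ignores spelling changes at morpheme boundaries, such as the dropped “e” in “like” + “-ed”, which real morphological analyzers must handle.

```python
# Hypothetical toy lexicon of free morphemes and bound affixes.
FREE = {"distinguish", "efficient", "kind", "read"}
PREFIXES = ["in", "un", "re"]
SUFFIXES = ["able", "ness", "s"]


def segment(word):
    """Split a word into [prefix-, root, -suffix] using the toy lexicon, or return None."""
    for prefix in [""] + PREFIXES:
        if not word.startswith(prefix):
            continue
        rest = word[len(prefix):]
        for suffix in [""] + SUFFIXES:
            if not rest.endswith(suffix):
                continue
            root = rest[: len(rest) - len(suffix)]
            if root in FREE:  # the root must be a free morpheme
                parts = [prefix + "-"] if prefix else []
                parts.append(root)
                if suffix:
                    parts.append("-" + suffix)
                return parts
    return None


print(segment("indistinguishable"))
print(segment("inefficient"))
```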

Agglutination: Many languages have words that are created by stringing together morphemes. For example, the German word “Handschuhschneeballwerfer” (literally “glove snowball thrower”) means a snowball thrower who wears gloves.
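Splitting such a word back into known parts is a segmentation problem. The sketch below uses dynamic programming over a small hand-made German lexicon (an assumption; real systems use large dictionaries) and prefers the split with the fewest parts. `LEXICON` and `decompound` are hypothetical names, and the sketch ignores linking elements such as the “s” in “Arbeitsamt”, which real German decompounders must handle.

```python
from functools import lru_cache

# Hypothetical mini lexicon of German words (lowercased).
LEXICON = {"hand", "schuh", "handschuh", "schnee", "ball", "schneeball", "werfer"}


def decompound(word):
    """Split word into the fewest lexicon entries, or return None if impossible."""
    word = word.lower()

    @lru_cache(maxsize=None)
    def best(start):
        # Best segmentation of word[start:] as a tuple of parts, or None.
        if start == len(word):
            return ()
        candidates = []
        for end in range(start + 1, len(word) + 1):
            piece = word[start:end]
            if piece in LEXICON:
                rest = best(end)
                if rest is not None:
                    candidates.append((piece,) + rest)
        return min(candidates, key=len) if candidates else None

    result = best(0)
    return list(result) if result is not None else None


print(decompound("Handschuhschneeballwerfer"))
```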

Parts of speech: What makes POS categories difficult for machine translation is that each language can have different POS categories. As a result, when translating, a source language POS category might not exist in the target language.

Speech recognition systems also have trouble with homophones, i.e. words that sound the same but differ in meaning (and often in spelling), such as “night” and “knight”.

See Ponti et al (2019) for a comprehensive analysis of linguistic typology, i.e. the structural and semantic variations across the world’s languages.

7.2 Representing meaning

For symbolic NLP researchers, understanding language at a human level was thought to require an AI system that translated natural language inputs into an internal representation (i.e. a data structure) that captures the full implied meaning of the sentence. There are well over a hundred different theoretical frameworks that define the representation of meaning (Schubert, 2015). NYU professors Brenden Lake and Gregory Murphy (2020) have defined a minimum set of five basic language functions that any meaning representation must support:

- Describe a perceptually present scenario, or understand such a description. Examples: That knife is in the wrong place. The orangutan is using a makeshift umbrella.

- Choose words on the basis of internal desires, goals, or plans. Examples: I am looking for a knife to cut the butter. Book a flight from New York to Miami.

- Produce and understand novel conceptual combinations. Examples: That’s a real apartment dog. The apple train left the orchard.

- Change one’s beliefs about the world based on linguistic input. Examples: Sharks are fish but dolphins are mammals. Umbrellas fail in winds over 18 knots.

- Respond to instructions appropriately. Examples: Pick up the knife carefully. Find an object that is not the small ball.

This notion of an internal meaning representation is likely still relevant for deep learning and large language models. However, these models are black boxes, and we cannot yet see inside them to determine the nature of the information they have learned.

The process of transducing a natural language text into a meaning representation is termed parsing.

7.2.1 Representational challenges

Let’s look at some of the characteristics of meaning that need to be captured in a human-level meaning representation.

7.2.1.1 Lexical vs. canonical representation

A meaning representation can be canonical or lexical or somewhere in between. A canonical meaning representation is one in which the representation is the same for any two textual strings with the same meaning. This type of representation has two key characteristics (Schank, 1975):

- For any two sentences that are identical in meaning, regardless of language, there should be only one representation.

- Any information in a sentence that is implicit must be inferred and made explicit in the representation of the sentence.

For example, the following surface forms (i.e. the words used in a sentence) all refer to the same entity:

- Bill Clinton

- William Jefferson Clinton

- The 42nd President of the US

- President Clinton

Relationships between entities also have multiple surface forms. For example, the following surface forms express the same relationship from a meaning perspective:

- President Clinton was born in Arkansas

- Arkansas was the birthplace of President Clinton

- President Clinton’s place of birth was Arkansas

- President Clinton’s parents lived in Arkansas when he was born
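A minimal sketch of how a system might collapse these surface forms into one canonical fact, assuming the (subject, relation, object) phrases have already been extracted from text. The alias tables, the identifiers such as `Bill_Clinton`, and the helper names are hypothetical; the canonical fact has the same shape as the Born_In (Bill_Clinton, Arkansas) form used later in this section.

```python
# Hypothetical alias tables: lowercased surface form -> canonical identifier.
ENTITY_ALIASES = {
    "bill clinton": "Bill_Clinton",
    "william jefferson clinton": "Bill_Clinton",
    "the 42nd president of the us": "Bill_Clinton",
    "president clinton": "Bill_Clinton",
    "arkansas": "Arkansas",
}
# Relation phrase -> (canonical relation, whether the arguments appear reversed).
RELATION_ALIASES = {
    "was born in": ("Born_In", False),
    "place of birth was": ("Born_In", False),
    "was the birthplace of": ("Born_In", True),
}


def canonicalize(subject, relation, obj):
    """Map an extracted (subject, relation, object) phrase triple to a canonical fact."""
    name, reversed_args = RELATION_ALIASES[relation.lower()]
    s = ENTITY_ALIASES[subject.lower()]
    o = ENTITY_ALIASES[obj.lower()]
    return (name, o, s) if reversed_args else (name, s, o)


surface_facts = [
    ("Bill Clinton", "was born in", "Arkansas"),
    ("President Clinton", "place of birth was", "Arkansas"),
    ("Arkansas", "was the birthplace of", "William Jefferson Clinton"),
    ("The 42nd President of the US", "was born in", "Arkansas"),
]

lexical_store = set(surface_facts)                                 # one entry per wording
canonical_store = {canonicalize(*fact) for fact in surface_facts}  # one entry per meaning


def birthplace(name):
    """Answer 'Where was <name> born?' for any known alias of the person."""
    person = ENTITY_ALIASES[name.lower()]
    return sorted(o for (rel, s, o) in canonical_store if rel == "Born_In" and s == person)


print(len(lexical_store), canonical_store)
print(birthplace("William Jefferson Clinton"))
```

Any alias in a question now reaches the same single fact. The hard part, which this sketch assumes away, is the parser that extracts the phrase triples and recognizes the aliases in the first place.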

Suppose there are 10 sets of words that refer to the entity Bill Clinton (there are almost certainly more than that), 10 sets of words that refer to the entity Arkansas, and 10 ways of expressing the “born in” relationship between the two entities. This means there are at least 10 x 10 x 10 = 1000 different ways of expressing this fact in English (not to mention other languages). If the fact

Born_In (Bill_Clinton, Arkansas)

is expressed with a canonical relationship (e.g. each entity is a link to its Wikipedia page, and the relationship name and form are the same regardless of the words used), then this fact will be stored only once in the database. If the fact is stored lexically (i.e. using the words themselves for the entities and the relationship), there could be 1000 different versions of the fact stored. Why is this a problem? Suppose someone asks the question:

Where was Bill Clinton born?

Based on the analysis above, there are 1000 different ways to express this question. This is not a problem if all 1000 variations of this fact are in the database and each matches a question variant exactly (e.g. the exact words “Bill Clinton” and the exact word “born”). However, suppose only 500 of the variations are stored as facts. Then only half the ways of asking the question would produce an answer. In contrast, if a canonical form is used, the task for a parser (i.e. the program that converts a text input string to a meaning representation) is to link all the different ways of expressing this question to this one fact. Aside from answering simple factual questions with direct answers in the database, a canonical representation has another major advantage. Suppose the question is:

Was Bill Clinton born in the US?

If we have one fact that says Arkansas is in the US, then we can marry it with the one fact that Bill Clinton was born in Arkansas and respond affirmatively. However, this is not straightforward if the facts are stored lexically because there are so many facts that all mean the same thing. If there are 1000 lexical representations of each of two facts, there could be 1000 x 1000 = one million different combinations of the two facts which would make it very difficult to answer the question. The examples above are for simple factual statements that don’t require much in the way of inference. Most natural language statements require accessing world knowledge and making inferences based on that world knowledge. For example, if someone says

I like apples

the meaning representation produced for that utterance will include the fact that the sentence refers to eating, even though this is inferred (i.e. not explicitly stated).
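The two-fact inference behind “Was Bill Clinton born in the US?” can be sketched over canonical triples. The `Located_In` relation, the `US` and `North_America` identifiers, and the helper names are assumptions for illustration, and the sketch assumes the `Located_In` facts contain no cycles.

```python
# Hypothetical canonical facts, each stored once as (relation, subject, object).
FACTS = {
    ("Born_In", "Bill_Clinton", "Arkansas"),
    ("Located_In", "Arkansas", "US"),
    ("Located_In", "US", "North_America"),
}


def located_in(place, region, facts):
    """True if place is region or is (transitively) Located_In it. Assumes no cycles."""
    if place == region:
        return True
    return any(
        rel == "Located_In" and sub == place and located_in(obj, region, facts)
        for (rel, sub, obj) in facts
    )


def born_in(person, region, facts):
    """Answer 'Was <person> born in <region>?' by chaining Born_In with Located_In."""
    return any(
        rel == "Born_In" and sub == person and located_in(obj, region, facts)
        for (rel, sub, obj) in facts
    )


print(born_in("Bill_Clinton", "US", FACTS))
print(born_in("Bill_Clinton", "Canada", FACTS))
```

With lexical storage, the same chain would have to be attempted across every wording of both facts, which is the combinatorial problem described above.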

A canonical representation should also be language-independent. If the meaning representation is language-independent in addition to being canonical, then all that is needed for learning a new language is a target language parser that can transform words in the language to the meaning representation and a language generator that can transform answers in meaning representation form to the target language. One advantage of a language-independent meaning representation is that individual machine translation programs are not needed for every language pair.

Without a meaning representation, enabling translation between every pair of languages would require a separate system for each language pair. There are over 7000 languages in the world. Creating separate machine translation systems for every pair of languages would require the development of over 40 million translation systems. However, if a canonical meaning representation is used, we only need 7000 systems that translate text into a meaning representation and 7000 systems that generate text. Interestingly, pairwise translation systems that don’t use meaning representations dominate the machine translation landscape. Another advantage of a language-independent representation is that all the inferences that correspond to human thought will work unchanged. If a language-specific meaning representation is used, then all the inferences must be recreated in each new language. A person who knows that a purchase event transfers possession of an object from the seller to the buyer does not need to relearn that inference when learning a new language. Similarly, a bilingual or multilingual system will be much more efficient and more likely to perform at a human competence level if only one set of inferences is required.
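The counts in this paragraph follow from simple arithmetic. With n = 7000 languages, the “over 40 million” figure corresponds to one system per translation direction; counting each unordered pair once halves it, which is still far more than the meaning-representation approach requires:

```latex
\begin{aligned}
\text{pairwise systems (one per direction)} &= n(n-1) = 7000 \times 6999 = 48{,}993{,}000 \\
\text{pairwise systems (one per unordered pair)} &= \tfrac{n(n-1)}{2} = 24{,}496{,}500 \\
\text{meaning-representation systems (one parser + one generator per language)} &= 2n = 14{,}000
\end{aligned}
```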

7.2.1.2 Breadth of the meaning representation

If we were to build a computer system that could understand language as well as a person or a science-fiction system like C-3PO or HAL 9000, it would need to understand and be able to represent utterances that reference all of the types of world knowledge discussed above.

7.2.1.3 Breadth of topics and genres

Human-level AI systems like C-3PO or HAL 9000 also understand language about a wide range of subject areas. People understand history, sports, relationships, and many other subject areas. Similarly, people understand natural language utterances in a wide range of genres ranging from news articles to fiction books to user manuals.

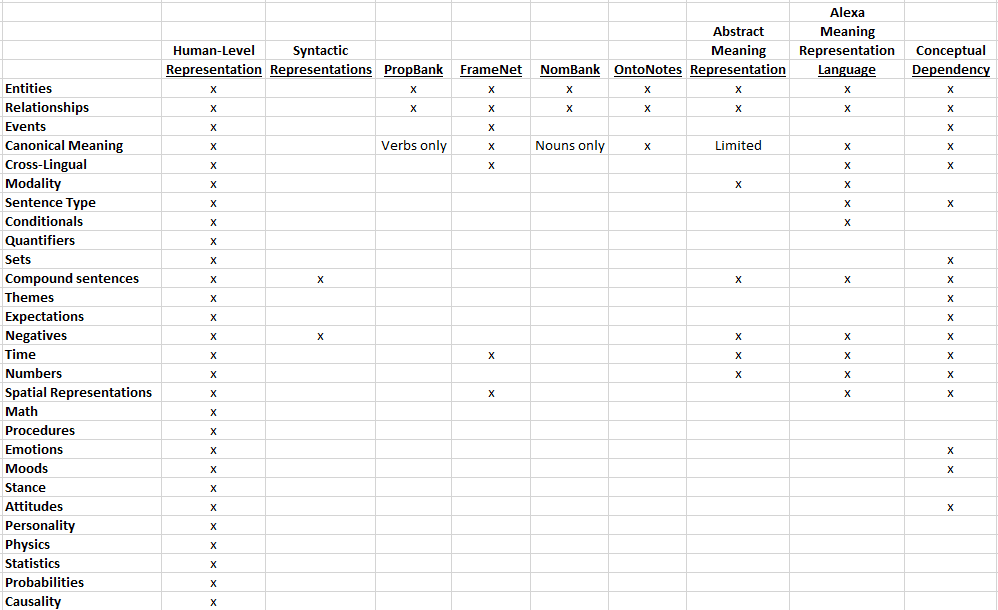

7.2.2 Symbolic representation schemes

To carry on a conversation at a human level, it is necessary to: (1) be able to represent the meaning of every natural language utterance that can be understood by an average person, and (2) parse each natural language utterance into a meaning representation. To date, no one has been able to define a representation that can capture the full meaning of every natural language utterance. Moreover, the more complex the representation, the harder it is to parse natural language utterances into it. That said, many less-than-comprehensive meaning representations and parsing schemes for them have been developed and have proven useful for various tasks. Some of these are shown below in this comparison of the expressivity of various meaning representations:

The meaning representations only capture a small slice of potential literal meanings and, except for Conceptual Dependency, don’t capture any of the implied meaning.

7.2.2.1 Syntactic processing

Most linguists agree that syntactic cues play an important role in the understanding of language by people. There are two broad types of syntactic parsing:

Constituency parsing: The goal of a syntactic constituency parse is to take an input sentence and produce a parse tree like the one above. The development of competent syntactic parsers was accelerated in the early 1990s by the release of the Penn Treebank (Marcus et al, 1993) corpus, which contained over seven million words of part-of-speech tagged text. Today, good out-of-the-box syntactic parsers are freely available, such as the one in Stanford’s CoreNLP system.

Dependency parsing: Dependency parsing focuses on which words modify which other words. Here is an example of a dependency parse in which all the words in the sentence modify the verb:

The verb has a subject, a direct object, and so on. A dependency parse provides some information about the meaning of a sentence. Syntactic parsing necessarily differs from language to language because each language has its own grammatical categories and structure. Universal Dependencies (universaldependencies.org) is an effort to create a cross-linguistically consistent annotation scheme to facilitate multilingual parser development. Many researchers believe that, to determine the true meaning of a sentence, we need to determine its syntactic structure and, more specifically, which elements depend on which other elements. Others, both in the deep learning camp and among those who use an explicit meaning representation, argue that an explicit syntactic representation is not a necessary step in the process. In any case, a syntactic representation does not capture even the full literal meaning of a natural language utterance, much less its full understanding (i.e. implied meaning).
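A dependency parse can be stored as one head index per word. The minimal sketch below hand-encodes a parse of “John broke the window” (the parse was written by hand, not produced by a parser, and the labels nsubj, obj and det follow Universal Dependencies naming as we understand it) and checks the tree property: exactly one root, and every word reaches it by following head links without a cycle.

```python
from dataclasses import dataclass

@dataclass
class Token:
    index: int     # 1-based position in the sentence
    word: str
    head: int      # index of the governing word; 0 means the artificial ROOT
    relation: str  # dependency label (hand-assigned, UD-style)

# Hand-written dependency parse of "John broke the window" (illustrative only).
parse = [
    Token(1, "John", 2, "nsubj"),
    Token(2, "broke", 0, "root"),
    Token(3, "the", 4, "det"),
    Token(4, "window", 2, "obj"),
]

def dependents(parse, head_index):
    """Return the words that directly modify the word at head_index."""
    return [t.word for t in parse if t.head == head_index]

def is_tree(parse):
    """One root, and following head links from any word reaches ROOT without a cycle.
    (Each Token stores a single head, so the one-head-per-word property holds by construction.)"""
    heads = {t.index: t.head for t in parse}
    if sum(1 for h in heads.values() if h == 0) != 1:
        return False
    for start in heads:
        seen, node = set(), start
        while node != 0:
            if node in seen:
                return False  # cycle detected
            seen.add(node)
            node = heads[node]
    return True

print(dependents(parse, 2))  # ['John', 'window']
print(is_tree(parse))        # True
```

Storing a single head per word is what makes a syntactic dependency parse a tree; the semantic dependency graphs discussed later relax exactly this constraint.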

7.2.2.2 Shallow meaning representations

Semantic role labeling (SRL) systems (also known as shallow semantic parsing systems) attempt to identify the predicate-argument relationships in sentences (and between sentences). For example, in the sentence

John broke the window

the relationship to be discovered is some form of breaking and the arguments are John and the window. These systems are typically based on lexical resources such as PropBank, NomBank, and FrameNet.

PropBank: The Proposition Bank (Palmer et al, 2005) extends the Penn Treebank syntactic annotations with hand-annotated shallow meaning representations. Specifically, each sense of each verb in the corpus is assumed to represent a predicate. For example, in the sentence

John broke the window

the predicate would be “break” and a shallow meaning for the sentence can be specified by the predicate-argument relationship

break (John, window)

Many verbs have multiple meanings and PropBank accommodates this by essentially making each verb sense its own predicate with its own set of arguments. However, these predicate representations are lexically-based rather than canonical and suffer from the issues discussed earlier in this chapter.

NomBank: NomBank (Meyers et al, 2004) is similar to PropBank but annotates the arguments of nouns rather than verbs. Like PropBank, NomBank annotates the Penn Treebank corpus, in this case with the arguments that are expected for nouns. PropBank can be used to extract predicate-argument relationships for sentences such as

IBM appointed John

but not for sentences where the predicate-argument relationship is triggered by a noun instead of a verb such as

…the appointment of John by IBM

NomBank fills in this gap by annotating the nominal (i.e. noun) forms of predicates. Like PropBank, the NomBank predicates are lexically-based and suffer from the problems discussed earlier in this chapter.
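To make the verb/noun distinction concrete, here is a minimal sketch that records a PropBank-style annotation of “IBM appointed John” and a NomBank-style annotation of “…the appointment of John by IBM”. The ARG0/ARG1 labels follow PropBank’s numbered-argument convention; the `PredicateArgument` class, its fields, and the bare lemma `appoint` as predicate name are hypothetical simplifications, not either corpus’s file format.

```python
from dataclasses import dataclass, field

@dataclass
class PredicateArgument:
    predicate: str   # lemma-based predicate, i.e. defined by the word itself
    trigger: str     # the word in the sentence that evokes the predicate
    pos: str         # "verb" (PropBank-style) or "noun" (NomBank-style)
    args: dict = field(default_factory=dict)  # numbered argument -> filler

# "IBM appointed John": a verb triggers the predicate.
verbal = PredicateArgument("appoint", "appointed", "verb", {"ARG0": "IBM", "ARG1": "John"})

# "...the appointment of John by IBM": a noun triggers the same relationship.
nominal = PredicateArgument("appoint", "appointment", "noun", {"ARG0": "IBM", "ARG1": "John"})

def as_logical_form(pa):
    """Render the annotation in the predicate(arg, arg) style used above."""
    fillers = [pa.args[key] for key in sorted(pa.args)]
    return f"{pa.predicate}({', '.join(fillers)})"

print(as_logical_form(verbal))   # appoint(IBM, John)
print(as_logical_form(nominal))  # appoint(IBM, John)
```

Both annotations yield the same relationship, which a verb-only resource would miss for the noun phrase. The predicate is still the lemma itself, though, so a synonym such as “name” would produce a different predicate: the lexical (non-canonical) limitation described above.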

FrameNet: FrameNet was created by Charles Fillmore and colleagues (Baker et al, 1998) at The University of California at Berkeley in the late 1990s.

An example of a FrameNet frame is the revenge frame (Fillmore and Baker, 2011). A revenge scenario is one in which an Offender commits an Offense that offends or injures an Injured Party. An Avenger (who could be the Injured Party) then engages in a Punishment event to punish the Offender. The revenge frame elements include Offender, Offense, Injured Party, Avenger, and Punishment.

These frames are identified independently of the lexical items that might evoke the frame. For example, the revenge frame might be evoked by lexical items including the following:

avenge, retaliate, revenge, get back at, get even with, pay back, payback, reprisal, retaliation, retribution, revenge, vengeful, vindictive, take revenge, wreak revenge, exact retribution, quid pro quo, tit for tat

Instead of each of these lexical items mapping to a different frame, at least one sense of each would map to the revenge frame. A given lexical item (e.g. the word “hot”) can map to multiple frames (e.g. temperature and taste).
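The frame-and-lexical-unit organization can be sketched as two lookup tables. This is a toy illustration, not data from the FrameNet database: only a few of the revenge-evoking words listed above are included, the revenge frame elements mirror the text, and the Temperature and Taste frames and their elements are hypothetical placeholders for the “hot” example.

```python
# Frames and their frame elements (roles). Names are illustrative.
FRAMES = {
    "Revenge": ["Offender", "Offense", "Injured_party", "Avenger", "Punishment"],
    "Temperature": ["Entity", "Degree"],  # hypothetical elements
    "Taste": ["Food", "Degree"],          # hypothetical elements
}

# Lexical units: (word, part of speech) -> frames that some sense of the word evokes.
LEXICAL_UNITS = {
    ("avenge", "v"): ["Revenge"],
    ("retaliate", "v"): ["Revenge"],
    ("get even with", "v"): ["Revenge"],
    ("retribution", "n"): ["Revenge"],
    ("vindictive", "a"): ["Revenge"],
    ("hot", "a"): ["Temperature", "Taste"],  # one word, several frames
}

def frames_for(word, pos):
    """Return the frames a word may evoke (empty list if unknown)."""
    return LEXICAL_UNITS.get((word, pos), [])

def words_evoking(frame):
    """Invert the table: every lexical unit that maps to the given frame."""
    return sorted(w for (w, _pos), frames in LEXICAL_UNITS.items() if frame in frames)

print(frames_for("retaliate", "v"))  # ['Revenge']
print(frames_for("hot", "a"))        # ['Temperature', 'Taste']
print(words_evoking("Revenge"))      # ['avenge', 'get even with', 'retaliate', 'retribution', 'vindictive']
```

Many different words lead to the single Revenge entry, which is what makes the frame canonical rather than lexical; choosing between Temperature and Taste for “hot” remains a word-sense disambiguation problem.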

OntoNotes (Hovy et al, 2006; Weischedel et al, 2011) was designed to provide a scaffolding for a broader representation of the meaning of sentences than PropBank. It extends PropBank to additional genres, including broadcast news, broadcast conversation, and web text, and to Arabic and Chinese in addition to English. It also links lexical items to concepts in an ontology (see below) so that multiple words and phrases with the same meaning share the same reference point, and it includes named entities and NomBank data for nouns that trigger predicates.

In PropBank and NomBank, the underlying predicates are defined by the lexical items (verbs and nouns) themselves. As a result, there may be multiple lexical items (or senses of those lexical items) that map to different predicate frames that have the same meaning. In contrast, FrameNet is designed so that there is only one predicate frame for a given meaning, i.e. the frames are designed to be canonical and language-independent.

7.2.2.3 Semantic dependency representations

Semantic dependency parsing adds semantic information to a syntactic dependency parse. Specifically, it adds predicate-argument relationships, primarily those found in SRL representations such as PropBank and NomBank. Semantic dependency parses are graphs, which means that a word can depend on more than one head. In contrast, a syntactic dependency parse is a tree in which each word (other than the root) depends on exactly one head. However, there is no attempt to capture either the full literal meaning or any of the implied meaning of a sentence. Moreover, the semantic dependency relationships are lexically-based: although there is some attempt to resolve word ambiguity in service of identifying the predicate-argument structure, the dependency diagrams show how the specific words found in the input sentence relate to one another. Examples of semantic dependency corpora include the Prague Czech-English Dependency Treebank 2.0 (Hajič et al, 2012) and DeepBank (Flickinger et al, 2012).
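The tree-versus-graph difference shows up clearly in a sentence like “John wants to leave”, where John is both the one who wants and the one who leaves. The sketch below compares two hand-written edge lists; the labels are illustrative and not taken from any particular annotation scheme. As is common in semantic dependency representations, the semantically empty word “to” receives no semantic edge.

```python
from collections import defaultdict

# Hand-written (head, label, dependent) edges for "John wants to leave".
syntactic_edges = [
    ("wants", "nsubj", "John"),
    ("wants", "xcomp", "leave"),
    ("leave", "mark", "to"),
]
semantic_edges = [
    ("wants", "ARG0", "John"),
    ("wants", "ARG1", "leave"),
    ("leave", "ARG0", "John"),  # John is also the one who leaves
]

def heads_of(edges):
    """Map each dependent word to the list of words it depends on."""
    heads = defaultdict(list)
    for head, _label, dependent in edges:
        heads[dependent].append(head)
    return dict(heads)

def is_single_headed(edges):
    """True if every word has exactly one head, as in a syntactic tree."""
    return all(len(h) == 1 for h in heads_of(edges).values())

print(heads_of(semantic_edges)["John"])   # ['wants', 'leave']
print(is_single_headed(syntactic_edges))  # True
print(is_single_headed(semantic_edges))   # False
```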

7.2.2.4 Abstract Meaning Representation

Semantic parsing is similar to SRL but based on more expressive representations. Semantic parsing formalisms include abstract meaning representation (AMR) (Banarescu et al, 2013) and the universal conceptual cognitive annotation (Abend and Rappoport, 2013). The Penn Treebank corpus spawned a great deal of research on syntactic parsing that led to the strong off-the-shelf syntactic parsers available today, such as the constituency and dependency parsers in the Stanford CoreNLP tool. The purpose of the AMR corpus is to provide a similarly valuable resource for research on semantic parsing. The AMR corpus consists of 39,000+ sentences hand-annotated with a meaning representation. Concepts in the representation are English words, PropBank framesets, special entity types such as dates and world regions, quantities including money and units of measure, and logical conjunctions such as “and”, “or”, and “but”. AMR also has one hundred relations that become slots in the framesets. Examples of general semantic relations include accompanier, age, beneficiary, cause, compared to, concession, condition, consist-of, degree, destination, direction, and domain. There are also relations for quantities such as number, units of measure, currency, and scale and for dates such as day, month, year, weekday, time, timezone, quarter, season, decade, and century. In addition to relations, AMR contains 139 fine-grained entity types such as person, country, and sports-facility. AMR representations abstract away syntactic information: the goal of AMR parsing is to produce the same meaning representation for different syntactic forms of sentences that mean the same thing. For example, the following two sentences would have the same AMR representation:

John offered to buy the car

John made an offer to buy the car

In contrast, in PropBank, these would invoke two different predicates because one is a verb form and one is a noun form though PropBank is moving towards a more unified representation (Bonial et al, 2014). The AMR representation overcomes some weaknesses in SRL representations. However, AMR is still very limited in terms of its expressiveness and does not capture any implied meaning.
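To show what the shared representation looks like, the sketch below stores a hand-written AMR for “John offered to buy the car” as variable/concept pairs plus role edges, then prints it in PENMAN notation, the bracketed text format used in the AMR corpus. The concept names offer-01 and buy-01 and the name structure follow AMR conventions as we understand them; the graph was written by hand, not produced by a parser, and `to_penman` is our own minimal serializer.

```python
from collections import defaultdict

# Variable -> concept, for "John offered to buy the car" (hand-written).
instances = {"o": "offer-01", "p": "person", "n": "name", "b": "buy-01", "c": "car"}

# (source, role, target) edges; a target that is not a variable is a constant.
edges = [
    ("o", ":ARG0", "p"),
    ("o", ":ARG1", "b"),
    ("p", ":name", "n"),
    ("n", ":op1", '"John"'),
    ("b", ":ARG0", "p"),  # re-entrancy: John is also the would-be buyer
    ("b", ":ARG1", "c"),
]

def to_penman(root, instances, edges):
    """Serialize the graph depth-first; a variable seen before is printed bare."""
    children = defaultdict(list)
    for source, role, target in edges:
        children[source].append((role, target))
    seen = set()

    def render(node):
        if node not in instances or node in seen:
            return node  # a constant, or a variable already introduced
        seen.add(node)
        parts = [f"({node} / {instances[node]}"]
        for role, target in children[node]:
            parts.append(f"{role} {render(target)}")
        return " ".join(parts) + ")"

    return render(root)

print(to_penman("o", instances, edges))
# (o / offer-01 :ARG0 (p / person :name (n / name :op1 "John")) :ARG1 (b / buy-01 :ARG0 p :ARG1 (c / car)))
```

The bare `p` inside the buy-01 node is the re-entrancy that makes AMR a graph rather than a tree. Because “John made an offer to buy the car” should be annotated with this same graph, nothing in the output records whether “offer” appeared as a verb or a noun.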

7.2.2.5 Alexa Meaning Representation Language

A variant of AMR, the Alexa Meaning Representation Language (AMRL), is used by Amazon in its Alexa personal assistant. AMRL is cross-lingual, and it explicitly represents fine-grained entity types, logical statements, spatial prepositions and relationships, and type mentions.

7.2.2.6 Conceptual dependency theory

Roger Schank and his students and colleagues at Yale University (Schank, 1975; Schank and Abelson, 1978; Schank and Riesbeck, 1981) created Conceptual Dependency theory, which contained both a canonical meaning representation and an approach to parsing surface forms into meaning representations that included the implied meaning.

7.2.2.6.1 Primitive acts in conceptual dependency theory

As the basis of conceptual dependency theory, Schank proposed fourteen Primitive ACTs intended to be able to capture the meaning of most sentences describing human actions. These ACTs were:

PTRANS: The transfer of physical location of an object. PTRANS is the primary concept in the representation of meaning for sentences such as

John moved the table to the wall. John picked up the table.

ATRANS: The transfer of an abstract relationship such as possession, ownership or control. ATRANS is the primary concept in the representation of meaning for verbs such as:

- “give”: an ATRANS of an object from the actor to a recipient.

- “take”: an ATRANS of an object from someone to the actor.

- “buy”: an ATRANS of an object from actor1 to actor2 combined with an ATRANS of something of value from actor2 to actor1.
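The benefit of mapping several verbs onto one primitive can be sketched as a tiny lexicon for “give”, “take”, and “buy”, in which each verb builds one or more ATRANS structures from a sentence’s participants. The function names, the argument order, and the use of "money" as the thing of value are hypothetical simplifications; Schank’s systems used far richer lexical entries.

```python
def atrans(actor, obj, source, recipient):
    """A bare-bones ATRANS conceptualization stored as a dictionary."""
    return {"act": "ATRANS", "actor": actor, "object": obj,
            "from": source, "to": recipient}

def give(agent, obj, other):
    # "AGENT gave OBJ to OTHER": the actor is the donor.
    return [atrans(agent, obj, agent, other)]

def take(agent, obj, other):
    # "AGENT took OBJ from OTHER": the actor is the recipient.
    return [atrans(agent, obj, other, agent)]

def buy(agent, obj, other):
    # "AGENT bought OBJ from OTHER": goods go one way, payment the other.
    return [atrans(other, obj, other, agent),
            atrans(agent, "money", agent, other)]

LEXICON = {"give": give, "take": take, "buy": buy}

gave = LEXICON["give"]("Mary", "book", "John")  # Mary gave the book to John.
took = LEXICON["take"]("John", "book", "Mary")  # John took the book from Mary.
for label, cd in (("gave", gave[0]), ("took", took[0])):
    print(label, cd["actor"], cd["from"], "->", cd["to"])
# gave Mary Mary -> John
# took John Mary -> John
```

Both sentences produce an ATRANS of the book from Mary to John and differ only in who the ACTOR is, so a question such as “Who has the book now?” can be answered the same way for either wording.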

PROPEL: The application of a physical force to an object (e.g. “throw”, “push”).

MOVE: The movement of a body part of a person or animal.

INGEST: The taking in of something by a person or animal, as in eating.

EXPEL: The forcing out of something from inside the body that was previously INGEST-ed.

GRASP: The grasping of an object by an actor.

CONC: Focusing attention on something and performing mental processing on it.

MTRANS: A change in the mental control of a conceptualization. MTRANS underlies verbs like recall, commit to memory, perceive, sense, and communicate. It is similar to PTRANS but differs in two ways: First, the object that is TRANSed does not leave the control of the donor but is copied into the control of the recipient. Second, the donor and recipient are not two different people but two different mental processors (e.g. consciousness, long-term memory, and sensory systems).

MBUILD: The results of thinking, such as drawing conclusions and proving something to oneself.

SPEAK: The act that produces sounds; its objects are always ‘sounds’.

LOOK-AT: Takes physical objects as its objects and is the instrument of seeing.

LISTEN-TO: Takes ‘sounds’ as its objects and is the instrument of hearing.

SMELL: The act of smelling; it takes only smells as its objects.

Schank’s theory starts with a set of primitive ACTs (Schank, 1975) shown above. Each of the primitives had a set of slots and restrictions on how those slots could be filled. For example, PTRANS had slots for

- The ACTOR that initiated the transfer

- The OBJECT of the transfer (restricted to PHYSICAL OBJECTs)

- The FROM and TO locations

What do we mean by a set of slots? If you are a little familiar with computer software, you can think of a primitive ACT and its slots as a data structure that looks a lot like a class or table with fields. The ACT is the class or table and the slots are the fields. Marvin Minsky (1975) coined the term “frame” for class-like (and table-like) data structures that are used in AI. In this context, the primitive ACTs can be considered as a specific type of frame.
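Following that class analogy, here is a minimal sketch of the PTRANS frame as a Python dataclass whose fields are the slots and whose slot restriction (the OBJECT must be a PHYSICAL OBJECT) is checked when the frame is filled in. The `PHYSICAL_OBJECTS` set is a hypothetical stand-in for a real semantic type hierarchy.

```python
from dataclasses import dataclass
from typing import Optional

# Hypothetical stand-in for a type hierarchy: fillers that count as PHYSICAL OBJECTs.
PHYSICAL_OBJECTS = {"table", "rock", "book", "John", "Mary"}

@dataclass
class PTRANS:
    actor: str                           # ACTOR slot: who initiates the transfer
    obj: str                             # OBJECT slot: restricted to PHYSICAL OBJECTs
    from_location: Optional[str] = None  # FROM slot
    to_location: Optional[str] = None    # TO slot

    def __post_init__(self):
        # Enforce the slot restriction when the frame is instantiated.
        if self.obj not in PHYSICAL_OBJECTS:
            raise ValueError(f"PTRANS OBJECT must be a physical object, got {self.obj!r}")

moved = PTRANS(actor="John", obj="table", to_location="wall")  # John moved the table to the wall.
went = PTRANS(actor="John", obj="John", to_location="Europe")  # John went to Europe.

try:
    PTRANS(actor="John", obj="idea")  # violates the slot restriction
except ValueError as err:
    print(err)  # PTRANS OBJECT must be a physical object, got 'idea'
```

In the `went` instance, John fills both the ACTOR and the OBJECT slot, which is exactly the kind of unstated information a meaning representation has to make explicit.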

7.2.2.6.2 Reasoning and inference

One of the most important contributions of conceptual dependency theory is the idea of how to capture implied meaning in addition to literal meaning. Capturing implied meaning requires reasoning (termed “inference” during the 1970s and 1980s, though deep learning has since co-opted that term). For a phrase like “John went…”, John is obviously the ACTOR. However, what isn’t explicitly stated, and so must be inferred and included in the meaning representation, is that John is also the object of the action. One of Schank’s students, Chuck Rieger (Schank et al, 1973), defined sixteen categories of inference that people make when understanding language:

Specification Inference

John picked up a rock. John hit Bill. INFERENCE: John hit Bill with the rock.

Causative inference

John hit Mary with a rock. INFERENCE: John is probably mad at Mary.

Resultative inference

Mary gave John a car. INFERENCE: John has the car.

Motivational inference

John hit Mary. INFERENCE: John wanted to hurt Mary.

Enablement inference

John went to Europe. INFERENCE: Where did he get the money?

Function inference

John wants the book. INFERENCE: John wants to read the book.

Enablement-prediction inference

John looked in his cookbook to find out how to make a roux. INFERENCE: John will now begin to make a roux.

Missing enablement inference

Mary couldn’t see the horses finish. She cursed the man in front of her. INFERENCE: The man blocked her vision.

Intervention inference

The baby ran into the street. John ran after him. INFERENCE: John wants to prevent the baby from getting hurt.

Action prediction inference

John wanted some nails. INFERENCE: He went to the hardware store.

Knowledge propagation inference

John told Bill that Mary hit Pete with a bat. INFERENCE: Bill knows that Pete was hurt.

Normative inference

Does John have a liver? INFERENCE: Yes.

State duration inference

John handed a book to Mary yesterday. Is Mary still holding it? INFERENCE: Probably not.

Feature inference

Andy’s diaper is wet. INFERENCE: Andy is probably a baby.

Situation inference

John is going to a masquerade party. INFERENCE: He will probably wear a costume.

Utterance-intent inference

Mary couldn’t jump the fence. INFERENCE: Why did she want to?

Each ACT carried with it certain types of inference. For example, for the PROPEL ACT:

A PTRANS is implied if the object is not fixed.

If one human propels the object towards another human, then the first human may have been angry.

If the object is rigid and brittle and the speed of the PROPEL is high, then the object may be negatively affected.
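The PROPEL inferences above are condition-action rules. The sketch below encodes them over a dictionary describing one PROPEL event. The feature names (`fixed`, `rigid`, `brittle`, `speed`, `actor_is_human`, `target_is_human`) and the output tuples are hypothetical; a real system would look these properties up in its knowledge about the objects involved rather than receive them hand-filled.

```python
def propel_inferences(event):
    """Apply the three PROPEL inference rules from the text to one PROPEL event."""
    inferences = []
    obj = event["object"]
    # Rule 1: propelling an object that is not fixed implies a PTRANS of it.
    if not obj.get("fixed", False):
        inferences.append(("PTRANS", obj["name"], event.get("to")))
    # Rule 2: one human propelling something toward another may have been angry.
    if event.get("actor_is_human") and event.get("target_is_human"):
        inferences.append(("MAYBE-ANGRY", event["actor"], event["target"]))
    # Rule 3: a rigid, brittle object propelled at high speed may be damaged.
    if obj.get("rigid") and obj.get("brittle") and event.get("speed") == "high":
        inferences.append(("MAYBE-DAMAGED", obj["name"]))
    return inferences

# "John threw a vase at Bill." (features filled in by hand)
vase_throw = {
    "actor": "John", "actor_is_human": True,
    "target": "Bill", "target_is_human": True, "to": "Bill",
    "object": {"name": "vase", "fixed": False, "rigid": True, "brittle": True},
    "speed": "high",
}
for inference in propel_inferences(vase_throw):
    print(inference)
# ('PTRANS', 'vase', 'Bill')
# ('MAYBE-ANGRY', 'John', 'Bill')
# ('MAYBE-DAMAGED', 'vase')
```

Pushing against a wall (a fixed object with no human target) would trigger none of the rules, which is why the conditions matter as much as the conclusions.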

7.2.2.6.3 Scripts in conceptual dependency theory

Schank and his students recognized that conceptual dependency by itself was not sufficient to capture the full meaning of a text, and they went on to define many other forms of meaning representation. To expand the capture of meaning beyond primitive actions, they postulated a set of higher-order data structures including scripts, plans, goals, MOPs, and others. They theorized that people have scripts that they use to make sense of the world. For example, the restaurant script encodes the basic stages of eating in a restaurant:

ENTERING -> ORDERING -> EATING -> PAYING/LEAVING

So, when we hear that

John went to a restaurant. He ordered chicken. He paid and left.

we can infer that John was seated and that he ate the chicken. Note that the description above is simplified for the purpose of illustration. The actual scripts had detailed conceptual dependency representations including roles. For example, the customer signals the waiter (represented as an MTRANS), the waiter comes to the table (represented as a PTRANS), and so on. One of Schank’s students, Rich Cullingford (1978), created a program named the Script Applier Mechanism (SAM) that could read newspaper articles, create internal conceptual dependency representations augmented by inferences made from scripts, and then answer questions about the articles. Because the conceptual dependency representation is language-independent, SAM was also able to generate summaries in other languages.
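The gap-filling inference in the restaurant example can be sketched in a few lines, assuming a script is simply an ordered list of events. The event names are a hypothetical flattening of the restaurant script; SAM’s actual scripts were conceptual dependency structures with roles.

```python
# Hypothetical flattened restaurant script: events in their expected order.
RESTAURANT_SCRIPT = ["enter", "be seated", "order", "eat", "pay", "leave"]

def fill_in(script, mentioned):
    """Return script events implied but not stated: every event between the
    first and last mentioned events that the story skipped over."""
    positions = [script.index(event) for event in mentioned]
    first, last = min(positions), max(positions)
    return [event for event in script[first:last + 1] if event not in mentioned]

# "John went to a restaurant. He ordered chicken. He paid and left."
story = ["enter", "order", "pay", "leave"]
print(fill_in(RESTAURANT_SCRIPT, story))  # ['be seated', 'eat']
```

This sketch only fills gaps between mentioned events; it does not guess what happened before the first or after the last one, and it assumes the story follows the script’s normal sequence.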

7.2.2.6.4 Memory organization packets in conceptual dependency theory

One of the limitations of scripts was that the set of scripts had no structure. For example, there should be some notion of similarity between a doctor visit script and a dentist visit script (Lytinen, 1992). Similarly, the ordering scene in a restaurant script should have some overlap with the ordering scene in a store shopping script. Memory Organization Packets (MOPs) were created by Schank and his team to account for this phenomenon. The idea of MOPs was to abstract common aspects of scripts into a separate knowledge structure. For example, the doctor and dentist scripts would be organized by a professional office visit MOP.
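One way to picture the MOP idea: the shared scenes live in one structure, and each script records only what is specific to it. The scene names and the contents of the professional office visit are hypothetical examples, not Schank’s actual MOP definitions.

```python
# Scenes shared by any professional office visit (hypothetical contents).
PROFESSIONAL_OFFICE_VISIT = ["make appointment", "check in", "wait in waiting room",
                             "receive service", "pay", "leave"]

# Each script names its MOP and overrides only the scenes that differ.
SCRIPTS = {
    "doctor visit": {"mop": PROFESSIONAL_OFFICE_VISIT,
                     "specialize": {"receive service": "get examined by doctor"}},
    "dentist visit": {"mop": PROFESSIONAL_OFFICE_VISIT,
                      "specialize": {"receive service": "get teeth cleaned"}},
}

def expand(script_name):
    """Build the full scene list for a script from its MOP plus its overrides."""
    script = SCRIPTS[script_name]
    return [script["specialize"].get(scene, scene) for scene in script["mop"]]

def shared_scenes(a, b):
    """Scenes two scripts have in common (their similarity, made explicit)."""
    scenes_b = expand(b)
    return [scene for scene in expand(a) if scene in scenes_b]

print(expand("dentist visit"))
print(shared_scenes("doctor visit", "dentist visit"))
# ['make appointment', 'check in', 'wait in waiting room', 'pay', 'leave']
```

A change to the shared MOP (say, adding an insurance-card scene) would automatically apply to both scripts, which is the kind of structure that an unorganized collection of scripts lacks.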

7.2.2.6.5 Themes, plans and goals in conceptual dependency theory

To understand a story about an event, it is usually necessary to understand the motivations (goals) of the actors in the story and the specific plans they chose to achieve those goals. Understanding a story often requires the reader to make inferences such as why an actor chose a particular goal, what goals might conflict with this goal, what other goals might be inferred for the actor, and what might make the actor abandon the goal. Schank and Abelson (1977) defined a codification of goals including:

Satisfaction goals (S-goals) arise from recurring biological needs. Examples include Satisfy-hunger, Satisfy-sex, and Satisfy-sleep.

Enjoyment goals (E-goals) are optionally pursued for pleasure. Examples include Enjoy-entertainment, Enjoy-travel, and Enjoy-eating.

Achievement goals (A-goals) include the establishment of abilities, possessions, and social positions. Examples include Achieve-good-job and Achieve-skill.

Preservation goals (P-goals) involve the preservation or maintenance of some desirable state. Examples include Preserve-health and Preserve-possessions.

Instrumental goals (I-goals) are goals that are realized in the pursuit of other goals.

Delta goals (D-goals) are actually a special case of I-goals that occur frequently and can be achieved in many ways. Examples include D-prox (changing one’s location) and D-know (changing one’s knowledge state).

Schank and colleagues also developed a codification of plans that could be used to achieve goals and developed programs such as Robert Wilensky’s (Wilensky, 1978) Plan Applier Mechanism (PAM) that could apply knowledge of plans and goals to make inferences vital to story understanding. These plans were called “planboxes”. For example, planboxes applicable to D-goals included:

ASK: Make a request.

INVOKE-THEME: A variant of ASK. The planner reminds the person to be persuaded of an existing thematic relationship between them (e.g. “as a friend…”).

INFORM-REASON: Giving someone a reason to perform the requested action.

BARGAIN-OBJECT: Offering something in exchange for fulfilling the request.

BARGAIN-FAVOR: Offering to do something in exchange for fulfilling the request.

THREATEN: Threatening to do something undesirable if the other person does not agree.

Goals also tend to be dominated by broader themes (e.g. the parent-child relationship, friendship, …). By knowing a theme, one can infer what other goals will be generated and what other themes are likely to coexist and/or be in conflict.

7.2.2.6.6 Question answering in conceptual dependency theory

The Yale prototype systems were able to process paragraphs like this one (Dyer and Lehnert, 1980):

John left his office early Friday afternoon so he could attend a going-away party for his friend Bill. Bill had been transferred to California. When he arrived there were lots of people and plenty of beer. Three years ago Bill had borrowed $50 from John which John hoped to get back before Bill left. He was about to remind Bill about it when he was called to the phone. It was his wife, Mary, and she was hysterical. Mary had just received a call from a stranger who claimed to have kidnapped their daughter Susan. He said he would call again. John told Mary to stay at home, keep off the phone, and wait until he got there. John drove home immediately. Just as he walked in the door, the phone rang. John picked it up. But it was only Karen, one of Mary’s friends. John told her Mary was out and hung up. Mary wanted to call the police but John thought they should wait. They were discussing this when Susan walked in the door. She had stayed after school for a gymnastics meet and taken a late bus home. Nothing had happened to her. It was all a hoax.

One of these systems, BORIS, could then answer questions like:

Q: Why did John go to the party? Boris: He wanted to have a good time and he was Bill’s friend and he wanted to get 50 dollars back from him.

Q: Why did John leave the party? Boris: He was worried about Susan.

Q: Why? Boris: Susan was kidnapped.

Q: What happened to John at the party? Boris: He got a call from Mary.

Q: Why did Mary call John at the party? Boris: She wanted to tell him about Susan’s kidnapping.

Q: Why did John want money from Bill? Boris: He borrowed 50 dollars from him.

Q: Why didn’t John talk to Bill? Boris: He was more worried about Susan.

Q: Who called John at home? Boris: Karen.

Q: Why? Boris: She wanted to talk to Mary.

Q: Was Karen upset? Boris: No. She did not know about Susan’s kidnapping.

Q: Did John kidnap Susan? Boris: No. He was her father.

Q: Where was Susan? Boris: She was attending a gymnastics meet.

Q: What happened when Susan came home? Boris: John was relieved that she was safe.

As the Yale researchers noted, there are huge complexities in understanding the paragraph above. For example, the paragraph never states that John left the party because he believed Susan had been kidnapped; that has to be inferred from Mary’s hysterical call and from John’s relationship to Susan. The primary innovation of the Yale research efforts was to demonstrate what it means to represent a deep level of meaning in a computer and how meaning representations can support reasoning processes that fill in the gaps of the “literal” meaning. The actual primitives and higher-order data structures have been criticized as being non-comprehensive (e.g. Schubert, 2015). However, Schank never claimed that they were comprehensive. He was more interested in setting forth a general approach.

7.2.2.6.7 Limitations of the conceptual dependency approach

The primary limitation of Schank’s approach was the amount of hand-coding required. Defining a comprehensive meaning representation that covers all subjects and all forms of text would have been a huge (perhaps impossible) effort. Worse, that effort would have been dwarfed by the effort required to build a complete lexicon. For every word in the English language, a lexicon would need to contain enough information to enable the natural language processing system to perform all the reasoning and inference that people perform. A separate lexicon would be needed for each language so that all sentences in any language that have the same meaning result in the same meaning representation. And that effort would likely have been dwarfed by the effort to build all the scripts and other forms of knowledge necessary to support the reasoning process. Today’s AI approaches are mostly centered on machine learning in order to avoid these massive hand-coding requirements. Machine learning would be hard to apply to Schank’s approach because it would require a large corpus of sentence/meaning-representation pairs, and every example in that corpus would need to be hand-coded by specially trained individuals.

7.2.3 Symbolic NLP research tools

Over the years, symbolic NLP researchers have developed many language resources and tools that facilitate both NLP research and natural language processing engineering.

7.2.3.1 Corpora

Corpora are large sets of text and speech data that can be used to train natural language processing algorithms. Just like other machine learning systems, natural language processing systems require large amounts of data to support the learning process. Unsupervised natural language processing systems require huge sets of text from newspaper articles, Wikipedia articles, web pages, social media posts, and other sources. Supervised natural language processing systems make use of corpora of annotated (i.e. labeled) text. For example, the Penn Treebank (Marcus et al, 1993) contains over 7 million words of part-of-speech tagged text, and its most widely used portion consists of 2,499 Wall Street Journal articles that were annotated by University of Pennsylvania linguistics graduate students. This corpus is by far the most widely used corpus for syntactic parsing. Both the AMR and AMRL representations discussed above have associated corpora that map natural language sentences to the correct meaning representation. These corpora can then be used to train natural language processing systems to parse natural language utterances into AMR or AMRL representations.
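Treebank-style corpora store each sentence as a bracketed tree in which every word is wrapped together with its part-of-speech tag, as in `(NN window)`. The minimal sketch below pulls (word, tag) pairs out of one such string with a regular expression. The sentence is our own example written in Treebank-style bracketing, not a record from the corpus, and the sketch ignores corpus details such as escaped brackets.

```python
import re

# A hand-written sentence in Penn Treebank-style bracketing.
tree = "(S (NP (NNP John)) (VP (VBD broke) (NP (DT the) (NN window))) (. .))"

# A preterminal looks like "(TAG word)": two tokens with no spaces or parentheses.
PRETERMINAL = re.compile(r"\(([^\s()]+) ([^\s()]+)\)")

def tagged_words(bracketed):
    """Return (word, tag) pairs in sentence order."""
    return [(word, tag) for tag, word in PRETERMINAL.findall(bracketed)]

print(tagged_words(tree))
# [('John', 'NNP'), ('broke', 'VBD'), ('the', 'DT'), ('window', 'NN'), ('.', '.')]
```

Collecting such pairs across an entire annotated corpus yields the labeled examples from which a supervised part-of-speech tagger can be trained.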

7.2.3.2 Knowledge representations

Knowledge representations capture relationships between entities that can be used by natural language processing systems.

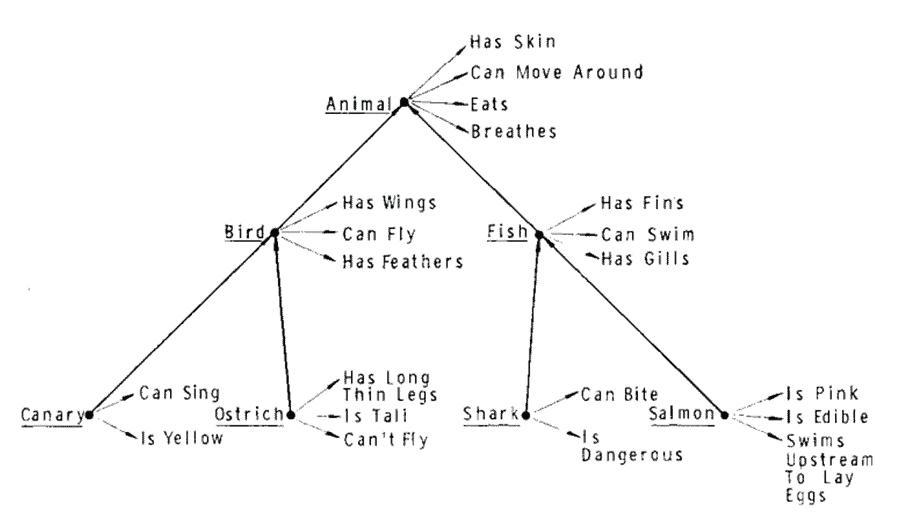

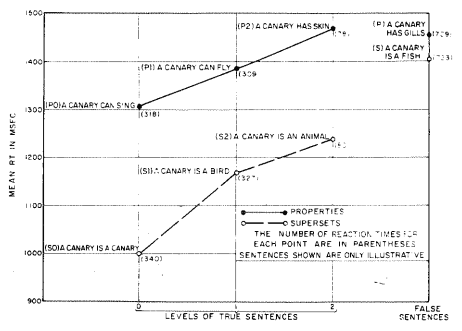

In the late 1960s, two Bolt Beranek and Newman researchers, Allan Collins and Ross Quillian (1969), proposed a model of how people represent word meanings (concepts) in memory, known as a semantic network. Their theory was based on concepts structured as a taxonomy, i.e. a hierarchical representation of concepts plus facts for each concept. An example portion of such a taxonomy is shown below:

Source: Collins and Quillian (1969)

In psychological experiments, they showed that the time it took human subjects to verify statements like

A canary is a bird

A canary can fly

was a function of the number of hops in the hierarchy required to find the answer as illustrated below:

Source: Collins and Quillian (1969)

However, this theory was shown to be incomplete by a number of researchers, including a Stanford group (Rips et al, 1973), who showed that it was faster to determine that

A dog is an animal

than it was to determine that

A dog is a mammal

despite the fact that mammal is positioned in the hierarchy between dog and animal. As a result, semantic networks lost traction as a theory of human memory. Even so, taxonomies support a specific but very important form of reasoning, property inheritance, which can be expressed in first-order logic. Referring back to the semantic network diagram above, if we’re told that Harry is a canary, we can make a number of other inferences about Harry based on the knowledge of the world embedded in the semantic network. We know that Harry

is yellow, can sing, is a bird, has wings, can fly, has feathers, is an animal, has skin, can move around, eats, and breathes air

even though we’ve never been explicitly told any of these things about Harry.
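The inheritance reasoning in the Harry example can be sketched as a walk up ISA links. The network below encodes only the canary/bird/animal part of the taxonomy with the properties listed for Harry. The `lookup` function also reports how many ISA hops were needed, the quantity Collins and Quillian related to verification time. The dictionary layout and function names are our own illustration.

```python
# Each node: its parent (ISA link) and the properties stored at that node.
NETWORK = {
    "animal": {"isa": None, "props": ["has skin", "can move around", "eats", "breathes air"]},
    "bird": {"isa": "animal", "props": ["has wings", "can fly", "has feathers"]},
    "canary": {"isa": "bird", "props": ["can sing", "is yellow"]},
    "Harry": {"isa": "canary", "props": []},  # an individual canary
}

def lookup(concept, prop):
    """Return the number of ISA hops needed to find prop, or None if absent."""
    hops = 0
    while concept is not None:
        if prop in NETWORK[concept]["props"]:
            return hops
        concept = NETWORK[concept]["isa"]
        hops += 1
    return None

def all_properties(concept):
    """Everything a concept inherits by following its ISA chain."""
    props = []
    while concept is not None:
        props.extend(NETWORK[concept]["props"])
        concept = NETWORK[concept]["isa"]
    return props

print(lookup("canary", "can sing"))   # 0
print(lookup("canary", "can fly"))    # 1
print(lookup("canary", "has skin"))   # 2
print(lookup("canary", "has gills"))  # None
print(len(all_properties("Harry")))   # 9
```

Nothing is stored on the Harry node itself, yet all nine properties are available to him through inheritance.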

The semantic web was defined in a paper by Tim Berners-Lee, who is often considered the inventor of the World Wide Web, and two other academic researchers, Jim Hendler and Ora Lassila (Berners-Lee et al, 2001). The power of the web then, and still today, is that it stores a huge amount of human-readable content. The first website was put online in 1991. By 2001, there were 26 million websites. As of 2018, that number was just under two billion websites. However, it was very difficult for computer programs to make use of all that content. These researchers proposed a means of annotating the web so that it could be used by computer programs. They proposed to extend the traditional Web of Documents to a Web of Data that could be consumed by software agents. As an example, they described a hypothetical personal assistant that could find an open medical clinic:

At the doctor’s office, Lucy instructed her Semantic Web agent through her handheld Web browser. The agent promptly retrieved information about Mom’s prescribed treatment from the doctor’s agent, looked up several lists of providers, and checked for the ones in-plan for Mom’s insurance within a 20-mile radius of her home and with a rating of excellent or very good on trusted rating services. It then began trying to find a match between available appointment times (supplied by the agents of individual providers through their Web sites) and Pete’s and Lucy’s busy schedules.

The basic idea was for creators of websites to include more than just human-readable text. Website creators would annotate their sites with information that could be used by software agents. Berners-Lee had founded the World Wide Web Consortium (W3C) in 1994, and it became the de facto standards organization for the web. In the early 2000s, the W3C developed a number of standards to implement the vision of Berners-Lee and his colleagues. Three of the most important standards are RDF, OWL, and SPARQL.

RDF: The resource description framework (RDF) provides a standardized way for website authors to express facts about the world. The format of RDF is a set of subject, predicate, object triples. Because the format is standardized, it enables software agents to easily consume the information.

OWL: The Web Ontology Language (OWL) provides a means of expressing hierarchical relationships between the entities (subjects and objects) found in RDF triples.

SPARQL: A SQL-like query language for writing complex queries over data stored as RDF triples.
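To make the triple format concrete, the sketch below stores a few RDF-style (subject, predicate, object) triples and answers a query by matching patterns whose variables start with “?” and joining the results. This roughly mirrors what a SPARQL basic graph pattern does, but it is a toy matcher, not an RDF or SPARQL implementation; real applications would use an RDF library and a SPARQL engine. The `ex:` names are hypothetical shorthand for full URIs, while `rdf:type` and `rdfs:subClassOf` are standard RDF vocabulary terms.

```python
# Toy triple store of (subject, predicate, object) tuples.
TRIPLES = [
    ("ex:Harry", "rdf:type", "ex:Canary"),
    ("ex:Tweety", "rdf:type", "ex:Canary"),
    ("ex:Canary", "rdfs:subClassOf", "ex:Bird"),
    ("ex:Harry", "ex:livesIn", "ex:London"),
]

def match(pattern, triple, bindings):
    """Unify one pattern with one triple; return extended bindings or None."""
    result = dict(bindings)
    for term, value in zip(pattern, triple):
        if term.startswith("?"):                     # a variable
            if result.setdefault(term, value) != value:
                return None                          # conflicts with an earlier binding
        elif term != value:                          # a constant that does not match
            return None
    return result

def query(patterns, triples):
    """Return every variable binding that satisfies all patterns (a join)."""
    solutions = [{}]
    for pattern in patterns:
        extended = []
        for bindings in solutions:
            for triple in triples:
                candidate = match(pattern, triple, bindings)
                if candidate is not None:
                    extended.append(candidate)
        solutions = extended
    return solutions

# Roughly: SELECT ?x WHERE { ?x rdf:type ex:Canary . ?x ex:livesIn ex:London }
print(query([("?x", "rdf:type", "ex:Canary"), ("?x", "ex:livesIn", "ex:London")], TRIPLES))
# [{'?x': 'ex:Harry'}]
```

Because every entity is an identifier rather than a free-text name, two sites that use the same URI for Harry’s species can be joined mechanically by such a query.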

Berners-Lee also proposed the idea of linked open data (LOD). One of the key LOD ideas is that metadata references to entities should use URIs (uniform resource identifiers). So, for example, if a web page about birds uses the DBpedia URI for birds, then it can be related not only to other websites that discuss birds but also to pages discussing superordinate concepts such as animals and subordinate concepts such as robins. Today the Linked Open Data Cloud has 1231 datasets with more than 1000 records. Using URIs also eliminates the ambiguities introduced by using names. For example, the Wikipedia disambiguation page for the word “head” has over 60 entries. If, instead of marking a page with the word “head”, one marks it with one of the 60+ URIs, there is no ambiguity.

The BBC news network published a news article (Raimond et al, 2010) explaining how LOD made the feeds the BBC provided to third-party developers more effective. Before the feeds were organized around LOD, developers could get news stories from them but could not find all stories about a particular topic or execute more complex search queries.

The high-level goals of the semantic web community are lofty ones. Unfortunately, the expectation that people would correctly mark up their websites has turned out to be unrealistic for several reasons:

- People lie. People want their websites to appear on the first page of search results. This leads to massive numbers of sites with web meta tags that are at best overstatements or are simply untrue. The pornography industry is probably the best example of this phenomenon.

- People are lazy. Everyone seems time-constrained these days, and most website creators don’t have time to create meta tags to benefit software agents.

- People have a profit motive. Websites that contain lots of data that is valuable to software agents are often behind a paywall.

As a result, rather than using the semantic web directly, many AI researchers have focused on extracting well-structured data from the web in the form of ontologies and knowledge bases, which are discussed in the next section.

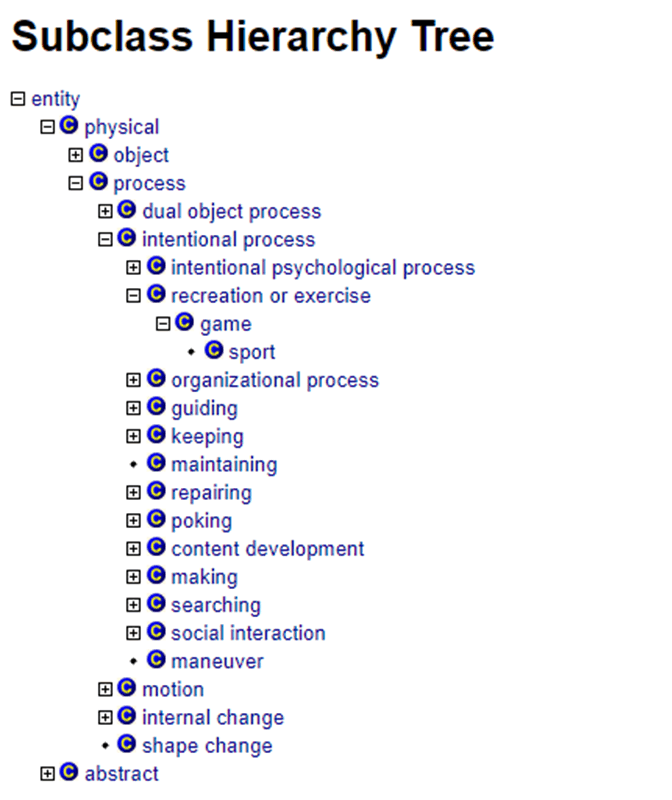

Ontologies are computer databases that organize knowledge as a set of related concepts plus properties of each concept. Ontologies link concepts with relationships. For example, the semantic network discussed above is an example of concepts linked by ISA relationships (e.g. a canary is a bird and a bird is an animal). In ontologies, concepts can also have properties (e.g. a bird has feathers). This is an example of a portion of the SUMO ontology:

Ontologies gained prominence as a means of organizing factual knowledge found on the World Wide Web. An ontology is a set of entity concepts and the relationships between them. Many ontologies, such as YAGO and SUMO, also serve as lexicons by mapping words to concepts.
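The ISA-plus-properties structure described above can be sketched in a few lines of Python. This is a minimal illustration, assuming a toy ontology whose concept and property names are made up (they are not taken from SUMO); it shows how a property attached to a general concept, such as "a bird has feathers", is inherited by more specific concepts through ISA links.

```python
# Toy ontology: each concept lists its ISA parents and its own properties.
# Concept and property names are illustrative, not taken from SUMO.
ONTOLOGY = {
    "animal": {"isa": [], "properties": {"is_alive"}},
    "mammal": {"isa": ["animal"], "properties": {"has_fur"}},
    "bird": {"isa": ["animal"], "properties": {"has_feathers"}},
    "dog": {"isa": ["mammal"], "properties": {"barks"}},
    "robin": {"isa": ["bird"], "properties": {"has_red_breast"}},
}


def ancestors(concept):
    """Return every concept reachable by following ISA links upward."""
    seen = set()
    stack = list(ONTOLOGY[concept]["isa"])
    while stack:
        parent = stack.pop()
        if parent not in seen:
            seen.add(parent)
            stack.extend(ONTOLOGY[parent]["isa"])
    return seen


def all_properties(concept):
    """Return a concept's own properties plus those inherited from ancestors."""
    props = set(ONTOLOGY[concept]["properties"])
    for parent in ancestors(concept):
        props |= ONTOLOGY[parent]["properties"]
    return props


print(sorted(all_properties("robin")))
```

Storing each property once, on the most general concept it applies to, is what lets an ontology stay compact: a robin "has feathers" without that fact being repeated for every bird species.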

A knowledge base (KB) is a set of facts and relationships about the world. A knowledge graph (KG) is a knowledge base of entities in which the relationships between the entities are captured in an ontology. A KG can be thought of as an ontology plus instances of the ontology. For example, a movie ontology might include the entities producer and film joined by the relationship produced_by; a movie knowledge graph would additionally contain instances. The Internet Movie Database (IMDB), for instance, is a set of facts about films and other video media, and the individual movies and other media are the instances. The IMDB can also be considered an ontology that organizes the relationships between entities such as actors, films, and locations. For example, the IMDB knowledge graph has a produced_by relationship that links instances of the producer entity and the film entity. An example instance of this relationship is

produced_by (Citizen_Kane, Orson_Welles)
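To make the ontology-versus-instances distinction concrete, here is a minimal Python sketch, assuming a hypothetical schema built around the produced_by example: the schema layer says which entity types a relation connects, and the instance layer holds individual facts such as produced_by(Citizen_Kane, Orson_Welles). The helper names `conforms` and `query` are illustrative and not part of any real KG API.

```python
from collections import namedtuple

Triple = namedtuple("Triple", "subject relation object")

# Ontology (schema) layer: each relation's (domain type, range type).
# Hypothetical names modeled on the produced_by example in the text.
RELATION_SCHEMA = {"produced_by": ("Film", "Producer")}

# Instance layer: the type of each individual entity...
ENTITY_TYPES = {"Citizen_Kane": "Film", "Orson_Welles": "Producer"}

# ...and the facts that connect individual entities.
FACTS = [Triple("Citizen_Kane", "produced_by", "Orson_Welles")]


def conforms(triple):
    """Check a fact against the schema: known relation, matching types."""
    if triple.relation not in RELATION_SCHEMA:
        return False
    domain, range_ = RELATION_SCHEMA[triple.relation]
    return (ENTITY_TYPES.get(triple.subject) == domain
            and ENTITY_TYPES.get(triple.object) == range_)


def query(relation, subject=None, obj=None):
    """Return facts matching the pattern; None acts as a wildcard."""
    return [t for t in FACTS
            if t.relation == relation
            and (subject is None or t.subject == subject)
            and (obj is None or t.object == obj)]


print(query("produced_by", subject="Citizen_Kane"))
```

Keeping the schema separate from the facts is what lets a KG reject a malformed fact such as produced_by(Orson_Welles, Citizen_Kane), whose arguments have the wrong types.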

It should also be noted that the distinction between an ontology and a KG is a bit fuzzy because many ontologies contain instances (see for example YAGO below). The idea of a KG was first put forth by a group of Yahoo researchers in 2009 (Dalvi et al, 2009). In mid-2018, the Gartner Group identified knowledge graphs as a new key technology in their Hype Cycle for Artificial Intelligence and in their Hype Cycle for Emerging Technologies.

The IBM Watson team made extensive use of KGs in building the computer system that beat the Jeopardy champions in 2011. The purpose of a KG is to enable searching documents for “things, not strings”, as Google put it when it introduced its KG. Having a lot of information about each entity (the thing) in a single place enables Google to present a box of relevant information about the entity to the right of the search results rather than just a list of articles containing the search words. Knowledge bases can be used in many different types of natural language processing tasks. For example, researchers at Microsoft Research Asia (H. Wang et al, 2018) showed how news recommendation systems can be improved by recommending articles that reference similar KB entries. If articles are related only by the words they contain, the recommendations can’t take relationships between concepts into account and are watered down by the problem of word ambiguity.
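The difference between relating articles by words and relating them by KB entries can be sketched with Jaccard overlap. This is a much simpler stand-in for the neural model in Wang et al., assuming the articles have already been annotated with entity IDs by some entity linker (not shown). The article texts and entity IDs are hypothetical.

```python
def jaccard(a, b):
    """Size of the intersection over size of the union (0.0 for two empty sets)."""
    if not a and not b:
        return 0.0
    return len(a & b) / len(a | b)


# Hypothetical articles. The "entities" sets stand in for the output of an
# entity linker that maps mentions to KB entries.
ARTICLES = {
    "orchard": {"text": "apple harvest begins early this year in orchards",
                "entities": {"Apple_(fruit)", "Orchard"}},
    "iphone": {"text": "apple shares rise after the new iphone launch",
               "entities": {"Apple_Inc.", "IPhone"}},
    "mac": {"text": "cupertino company unveils faster laptops",
            "entities": {"Apple_Inc.", "MacBook"}},
}


def word_similarity(x, y):
    """Overlap of the raw words in two articles."""
    return jaccard(set(ARTICLES[x]["text"].split()),
                   set(ARTICLES[y]["text"].split()))


def entity_similarity(x, y):
    """Overlap of the KB entries two articles reference."""
    return jaccard(ARTICLES[x]["entities"], ARTICLES[y]["entities"])


for pair in [("orchard", "iphone"), ("iphone", "mac")]:
    print(pair, word_similarity(*pair), entity_similarity(*pair))
```

Word overlap links the orchard story to the iPhone story only because both contain "apple", while entity overlap links the two Apple Inc. stories even though they share no words.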

7.3.2.4.1 Proprietary knowledge bases for natural language processing

Google’s Knowledge Graph (GKG) was first released in 2012 as an under-the-hood component of its search engine. GKG has millions of entries that describe real-world entities like people, places, and things; these entities form the nodes of the graph. When users asked about people, places, or things, rather than just displaying a long list of relevant articles, the Google search engine started showing a detailed infobox on the right-hand side of the page displaying key information about the queried entity. Google’s KG is also used by the Google Assistant on Android smartphones and Google Home devices to answer factual questions. Google also offers a publicly available API (the Knowledge Graph Search API) through which users can retrieve lists of entities matching a query. However, the API does not return much data about each entity beyond a short description and a link to a webpage for the entity. The facts (entities and relationships between entities) in the Google KG come from multiple sources including Wikipedia, the CIA World Factbook, Wikidata (see below), and licensed data (e.g. weather.com). Google researchers also published a paper in 2014 (Dong et al, 2014) describing a methodology for extracting facts from the web and rating their accuracy. This methodology is likely incorporated in GKG, but Google has been largely silent on this point.
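As a rough sketch of calling the public Knowledge Graph Search API from Python, assuming its `entities:search` endpoint with `query`, `key`, and `limit` parameters and a JSON-LD response holding an `itemListElement` list: `YOUR_API_KEY` is a placeholder, and `SAMPLE_RESPONSE` is a hand-written stand-in in the response's shape with illustrative values, not real API output.

```python
import json
import urllib.parse
import urllib.request

KG_ENDPOINT = "https://kgsearch.googleapis.com/v1/entities:search"


def build_kg_url(query, api_key, limit=5):
    """Build a search request URL (parameter names assumed from the API docs)."""
    params = {"query": query, "key": api_key, "limit": limit}
    return KG_ENDPOINT + "?" + urllib.parse.urlencode(params)


def fetch_entities(query, api_key, limit=5):
    """Call the API; needs network access and a real API key."""
    with urllib.request.urlopen(build_kg_url(query, api_key, limit)) as resp:
        return json.load(resp)


def summarize(response):
    """Reduce the response to (name, description, score, url) tuples."""
    rows = []
    for element in response.get("itemListElement", []):
        result = element.get("result", {})
        detail = result.get("detailedDescription", {})
        rows.append((result.get("name"), result.get("description"),
                     element.get("resultScore"), detail.get("url")))
    return rows


# Hand-written sample in the response's shape; values are illustrative only.
SAMPLE_RESPONSE = {
    "itemListElement": [{
        "result": {
            "name": "Orson Welles",
            "description": "Illustrative description",
            "detailedDescription": {
                "url": "https://en.wikipedia.org/wiki/Orson_Welles"},
        },
        "resultScore": 1234.5,
    }],
}

print(summarize(SAMPLE_RESPONSE))
```

With a real key, `summarize(fetch_entities("Orson Welles", "YOUR_API_KEY"))` would produce the same kind of tuples. The small amount of data per entity is consistent with the limitation noted above.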

Similarly, Microsoft has a proprietary KG codenamed Satori that powers its Bing search engine. It also powers its Cortana virtual assistant and provides Excel data types such as airlines, companies, and cities of the world. According to the Microsoft website, as of 2018 it had more than two billion entities and 50 billion facts.

The Cyc project grew out of MCC (Microelectronics and Computer Technology Corporation), which a group of 24 large US corporations banded together to form in 1982 as an American response to a large Japanese AI initiative. MCC’s flagship project, begun in 1984 and headed by the late Douglas Lenat, was to hand-code all “common-sense” knowledge in a system named Cyc. Common-sense knowledge includes facts such as “birds have wings” and “airplanes have wings” as well as rules like “if it has wings it can fly”. Lenat and his team started out by going through an encyclopedia and encoding not just the facts but also what a reader must assume, based on knowledge of the world, in order to understand the sentences (Lenat and Feigenbaum, 1987). For example, if coke is used to turn ore into metal, then the value of both the coke and the ore must be less than that of the resulting metal; more generally, the products of commercial processes have a higher value than the raw materials.

They estimated that fewer than one million concepts would be required in the KB (30,000 articles × 30 concepts per article = 900,000). They noted that this roughly matched an earlier estimate by Marvin Minsky, who calculated that there are about 200,000 hours between birth and age 21 and that if people learned four concepts per hour, that would be 800,000 concepts. In 1994, the project was spun out of MCC into a small company named Cycorp; MCC itself folded in 2000. As of 2011, $100 million had been invested to define 600,000 concepts and five million rules in 6,000 knowledge areas (termed “microtheories”) (Sowa 2011). As of 2018, according to Wikipedia, it contained 1.5 million terms, one million concepts, and 24 million rules. Commercial versions of Cyc have also been offered through a small company named Lucid.ai. Unfortunately, despite the massive effort, the Cyc project has not produced any significant publicly disclosed AI systems. This might be because it is proprietary, or because its conceptual representations and logic are hard to use or not generic enough.
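Cyc expresses its knowledge in its own logical language (CycL), but the basic idea of combining facts with rules such as “if it has wings it can fly” can be sketched as forward chaining in Python. The predicates and individuals below are hypothetical, and this is not how Cyc is actually implemented.

```python
# Hypothetical facts, stored as (predicate, individual) pairs.
WING_FACTS = {
    ("bird", "tweety"),
    ("airplane", "boeing_747"),
}

# Each rule maps the current set of facts to the facts it licenses.
WING_RULES = [
    # Birds have wings; airplanes have wings.
    lambda kb: {("has_wings", x) for (p, x) in kb if p in ("bird", "airplane")},
    # If it has wings it can fly.
    lambda kb: {("can_fly", x) for (p, x) in kb if p == "has_wings"},
]


def forward_chain(facts, rules):
    """Apply every rule repeatedly until no new facts appear (a fixpoint)."""
    known = set(facts)
    while True:
        new = set()
        for rule in rules:
            new |= rule(known)
        new -= known
        if not new:
            return known
        known |= new


print(sorted(forward_chain(WING_FACTS, WING_RULES)))
```

Adding `("bird", "penguin_1")` to the facts would also derive `("can_fly", "penguin_1")`, which shows why a common-sense KB needs some way to express exceptions to general rules.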

7.3.2.4.2 Open source knowledge bases for natural language processing

Wikidata (Vrandečić and Krötzsch, 2014) is a Wikimedia project whose goal is to store crowdsourced facts. The project went live in 2012 and, as of the end of 2018, had over 53 million facts and had received over 815 million crowdsourced edits. Wikidata requires all facts to have source information to enable fact-checking. In Wikidata, “items” represent entities, and each item can have one or more key-value pairs. For example, the “Albert Einstein” item includes the following key-value pairs:

- instance of: human

- sex: male

- date of birth: 14 March 1879

- place of birth: Ulm

- …
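Items like this can be retrieved as JSON. The sketch below assumes Wikidata’s `Special:EntityData/<id>.json` export and its statement layout (`claims`, then `mainsnak`, then `datavalue`); `EINSTEIN_EXCERPT` is a hand-abbreviated excerpt in that shape, and the helper names are hypothetical. Values come back as IDs such as Q5 rather than labels, so a second lookup would be needed to display “human”.

```python
import json
import urllib.request

# Labels for the properties listed above. P21's official label is
# "sex or gender"; the text shortens it to "sex".
PROPERTY_LABELS = {
    "P31": "instance of",
    "P21": "sex or gender",
    "P569": "date of birth",
    "P19": "place of birth",
}


def fetch_entity(qid):
    """Download one item's JSON from Wikidata (requires network access)."""
    url = f"https://www.wikidata.org/wiki/Special:EntityData/{qid}.json"
    with urllib.request.urlopen(url) as resp:
        return json.load(resp)["entities"][qid]


def key_value_pairs(entity):
    """Flatten an item's statements into (property label, value) pairs."""
    pairs = []
    for pid, statements in entity.get("claims", {}).items():
        for statement in statements:
            datavalue = statement["mainsnak"].get("datavalue")
            if datavalue is None:  # "unknown value"/"no value" snaks carry none
                continue
            value = datavalue["value"]
            if datavalue["type"] == "wikibase-entityid":
                value = value["id"]    # an item ID such as "Q5"
            elif datavalue["type"] == "time":
                value = value["time"]  # an ISO-like timestamp string
            pairs.append((PROPERTY_LABELS.get(pid, pid), value))
    return pairs


def entity_value(qid):
    """Build a datavalue that points at another item."""
    return {"type": "wikibase-entityid",
            "value": {"entity-type": "item", "id": qid}}


# Abbreviated, hand-written excerpt in the shape of Q937 (Albert Einstein).
EINSTEIN_EXCERPT = {
    "id": "Q937",
    "claims": {
        "P31": [{"mainsnak": {"datavalue": entity_value("Q5")}}],        # human
        "P21": [{"mainsnak": {"datavalue": entity_value("Q6581097")}}],  # male
        "P569": [{"mainsnak": {"datavalue": {
            "type": "time",
            "value": {"time": "+1879-03-14T00:00:00Z", "precision": 11}}}}],
        "P19": [{"mainsnak": {"datavalue": entity_value("Q3012")}}],     # Ulm
    },
}

print(key_value_pairs(EINSTEIN_EXCERPT))
```

With network access, `key_value_pairs(fetch_entity("Q937"))` would return many more pairs, since the live item has far more statements than this excerpt.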

Wikidata is translated into many languages. For some languages (not including English), Wikidata is used as the source for Wikipedia infoboxes.

Freebase (Bollacker et al., 2008) was a crowdsourced knowledge base created by a private company named Metaweb and released in 2007. Metaweb was acquired by Google in 2010, and Freebase presumably became the foundation of Google’s Knowledge Graph. Freebase was one of the primary KBs used by natural language researchers from about 2008 to 2015. In 2014, Google announced that it would discontinue Freebase and offered to support moving all the Freebase data to Wikidata. Unfortunately, due to a large number of incompatibilities between the two KBs (see Tanon et al, 2016), only about 10% of the data was actually moved.

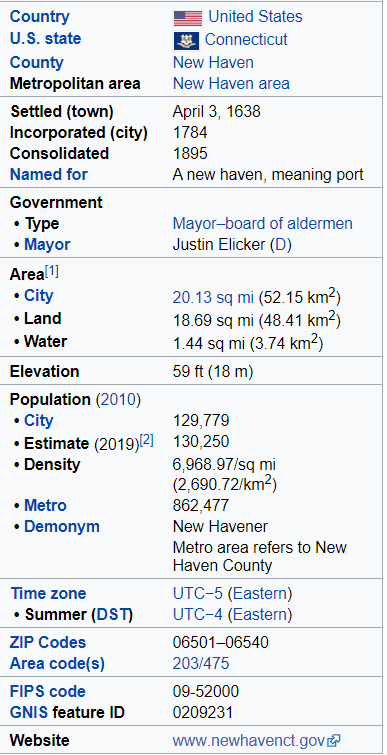

Wikipedia is the fifth most frequently accessed website in the world and is the go-to encyclopedia for most of us. In 2023, the English-language edition alone had over 6.7 million articles, and Wikipedia was available in 339 languages. It is very easy to find information about any prominent entity. However, it is very difficult to search Wikipedia using more complex queries. For example, it would be very difficult to find all rivers that flow into the Rhine and are longer than one hundred miles, or all Italian composers who were born in the eighteenth century (Lehmann et al, 2012). DBpedia (Auer et al, 2007) facilitates complex queries by extracting a multilingual knowledge base from Wikipedia. It extracts data by processing Wikipedia templates such as infoboxes using basic natural language processing techniques such as regular expression parsers (Auer and Lehmann, 2007). For example, the Wikipedia infobox for the city of New Haven, CT is shown below:

The underlying Wikipedia template (which you can see by going into Edit mode) is

{{Infobox settlement | name = New Haven, Connecticut | official_name = City of New Haven | settlement_type = [[City]] | nickname = The Elm City … | established_title = Settled (town) | established_date = 1638 | established_title2 = Incorporated (city) | established_date2 = 1784 | established_title3 = Consolidated | established_date3 = 1895 … }}
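The regular-expression approach can be sketched for the abbreviated template above. This is a minimal Python illustration, not DBpedia’s actual extraction framework: each field’s value is assumed to run until the next `|` or `}`, simple `[[links]]` are unwrapped, and the “…” elisions are left out. It would break on nested templates or piped links such as `[[A|B]]`.

```python
import re

# The New Haven infobox from the text, with the "…" elisions removed.
INFOBOX = """{{Infobox settlement
| name = New Haven, Connecticut
| official_name = City of New Haven
| settlement_type = [[City]]
| nickname = The Elm City
| established_title = Settled (town)
| established_date = 1638
| established_title2 = Incorporated (city)
| established_date2 = 1784
| established_title3 = Consolidated
| established_date3 = 1895
}}"""

# One "| key = value" field; the value runs until the next "|" or "}".
FIELD_RE = re.compile(r"\|\s*(\w+)\s*=\s*([^|}]*)")
# A simple wiki link such as [[City]]; piped links are not handled.
LINK_RE = re.compile(r"\[\[([^\]|]+)\]\]")


def parse_infobox(text):
    """Return the infobox fields as a dict of cleaned-up strings."""
    fields = {}
    for key, value in FIELD_RE.findall(text):
        fields[key] = LINK_RE.sub(r"\1", value).strip()
    return fields


def founding_events(fields):
    """Pair established_titleN with established_dateN (N = '', '2', '3', ...)."""
    events = []
    for key, title in fields.items():
        match = re.fullmatch(r"established_title(\d*)", key)
        if match:
            date = fields.get("established_date" + match.group(1))
            if date:
                events.append((title, int(date)))
    return events


fields = parse_infobox(INFOBOX)
print(fields["name"], founding_events(fields))
```

The pairing step is the part a generic parser cannot know about: it relies on the naming convention of this particular template, which is why DBpedia maintains mappings from each infobox template to its ontology.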

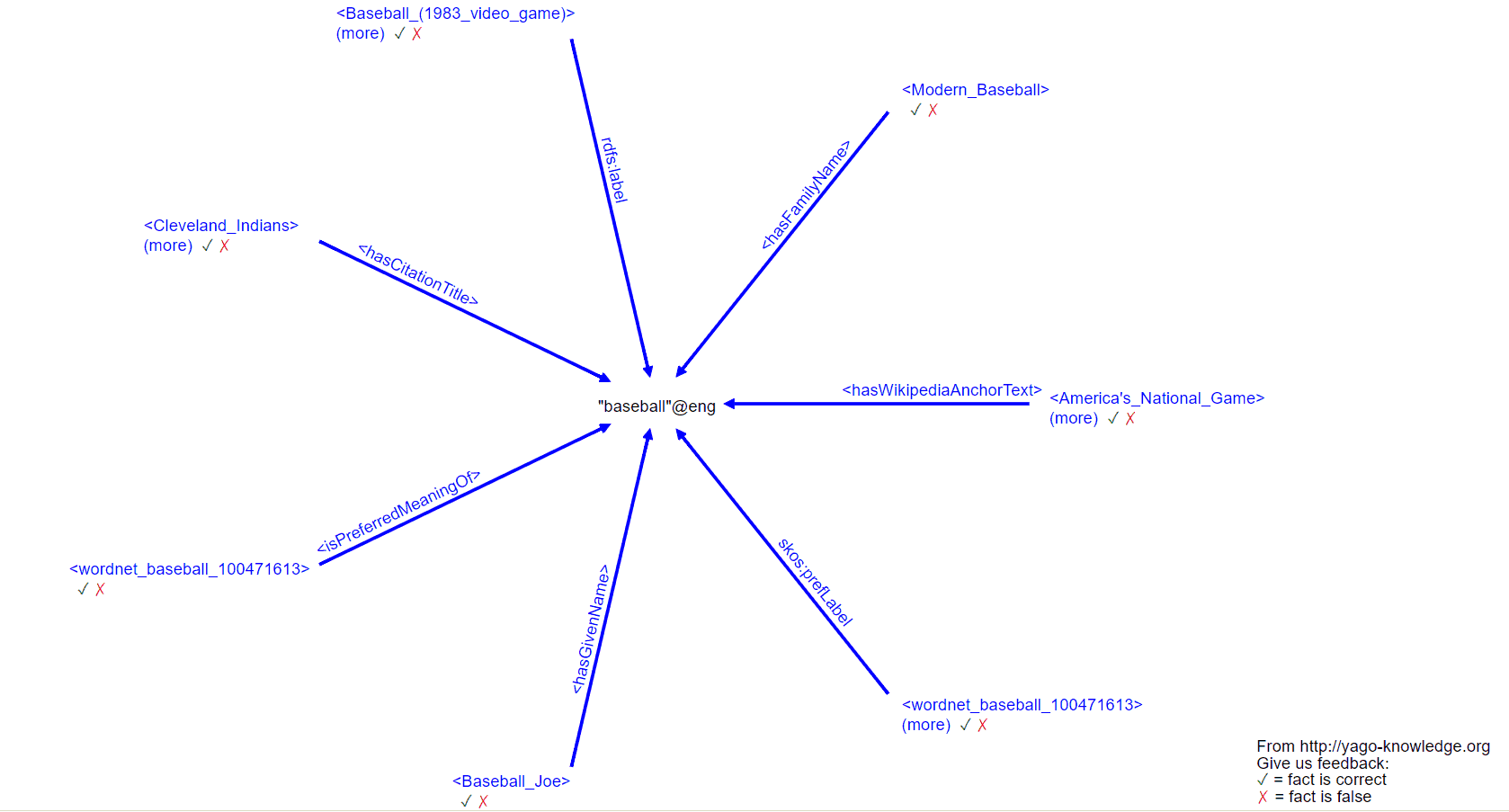

Even without any understanding of natural language processing techniques, it’s not hard to imagine how a computer program could extract critical facts about New Haven. In 2010, the New York Times published a number of articles describing its use of DBpedia for organizing its media assets. DBpedia has 1.8B facts, including information on 4.587 million entities (among them 1.45 million persons, 735,000 places, 411,000 creative works, 241,000 organizations, 251,000 species, and 6,000 diseases) in 125 languages. DBpedia extracts Wikipedia data from infobox templates, titles, abstracts, geo-coordinates, categories, images, links (e.g. to the web and/or other wiki pages), redirects, and disambiguations. It has the advantage of changing as Wikipedia changes. DBpedia (dbpedia.org) enables query-based search of Wikipedia: using SPARQL, an SQL-like query language, one can search for articles with certain properties (e.g. genre, author, …).

YAGO (Suchanek et al, 2007), which stands for Yet Another Great Ontology, is an ontology and KG managed by the Max Planck Institute in Germany. YAGO has ten million entities and 120 million facts. It extracts these facts from Wikipedias in ten different languages and also encodes time and location as independent dimensions for many facts and entities. An example portion of the KG is shown below:

One advantage of YAGO for natural language processing tasks is that it contains a mapping from words to entities and relationships. There will be further discussion about this type of KG below.

SUMO (Niles and Pease, 2001) is an open source ontology created by an IEEE working group that claims to be the largest formal public ontology. An example portion of its hierarchy is shown in the tree above. SUMO is the only formal ontology mapped to all of WordNet. SUMO contains 80,000+ axioms and 25,000+ terms. It also has language mappings for Hindi, Chinese, Italian, German, Czech, and English.

ConceptNet is a KG, first described in 2004, that grew out of an MIT project named the Open Mind Common Sense (OMCS) project. The goal of OMCS was to collect commonsense knowledge from everyday people. The OMCS researchers gave volunteers simple stories such as

“Bob had a cold. Bob went to the doctor.”

and asked what facts they could infer from the story or from their knowledge of the world. For these stories, volunteers responded with facts like:

- Bob was feeling sick

- Bob wanted to feel better

- The doctor made Bob feel better

- People with colds sneeze

- The doctor wore a stethoscope around his neck

- A stethoscope is a piece of medical equipment

- The doctor might have worn a white coat

- A doctor is a highly trained professional

- You can help a sick person with medicine

- A sneezing person is probably sick

The first-generation system enabled the construction of 400,000 assertions from 8,000 people and has since been augmented with data from OpenCyc, Wiktionary, DBpedia, and other sources. As of 2017, ConceptNet v5.5 (Speer et al, 2017) contained 8 million nodes, 36 types of relations, and 21 million relationships in 83 languages. In contrast to most KGs, whose relations link two entities, ConceptNet relations can link two arbitrary phrases. For example, the relation CAUSES could link the phrases “soak in hot spring” and “get pruny skin” (Li et al, 2016). Of course, one downside of this scheme is that each entry in ConceptNet matches only the exact words in the entry. So, for example, “bathe in hot spring” would not match “soak in hot spring”. However, neural networks can be trained to make this match by comparing word embeddings (Li et al, 2016). They can also be trained to extract additional entries from raw text like Wikipedia (Jastrzębski et al, 2018). Like YAGO, ConceptNet maps words and phrases to concepts in sources including WordNet, Wiktionary, OpenCyc, and DBpedia.
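The exact-match problem and the embedding-based fix can be sketched in Python. This is a minimal illustration, not the model of Li et al. (2016): it averages hand-picked, hypothetical 3-dimensional word vectors and compares phrases by cosine similarity, whereas a real system would use trained embeddings with hundreds of dimensions. The second edge is made up for contrast.

```python
import math

# Hypothetical hand-picked word vectors, for illustration only.
EMBEDDINGS = {
    "soak": [0.9, 0.1, 0.0], "bathe": [0.8, 0.2, 0.0],
    "in": [0.1, 0.1, 0.1], "hot": [0.1, 0.9, 0.0], "spring": [0.0, 0.8, 0.3],
    "buy": [0.0, 0.1, 0.9], "car": [0.1, 0.0, 0.9],
}

# ConceptNet-style edges keyed by start phrase: start -> [(relation, end)].
EDGES = {
    "soak in hot spring": [("Causes", "get pruny skin")],
    "buy car": [("HasPrerequisite", "have money")],  # made-up contrast edge
}


def phrase_vector(phrase):
    """Average the vectors of the words that have embeddings."""
    vectors = [EMBEDDINGS[w] for w in phrase.split() if w in EMBEDDINGS]
    if not vectors:
        return None
    return [sum(dim) / len(vectors) for dim in zip(*vectors)]


def cosine(u, v):
    """Cosine of the angle between two non-zero vectors."""
    dot = sum(a * b for a, b in zip(u, v))
    return dot / (math.sqrt(sum(a * a for a in u)) * math.sqrt(sum(b * b for b in v)))


def lookup(phrase, threshold=0.9):
    """Try an exact match first, then fall back to the most similar start phrase."""
    if phrase in EDGES:
        return phrase, EDGES[phrase]
    query = phrase_vector(phrase)
    if query is None:
        return None
    best = max(EDGES, key=lambda start: cosine(query, phrase_vector(start)))
    if cosine(query, phrase_vector(best)) >= threshold:
        return best, EDGES[best]
    return None


print(lookup("bathe in hot spring"))
```

The threshold guards against returning an unrelated edge when nothing in the KB is close; its value here is arbitrary and would need tuning with real embeddings.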

Probase (Wu et al, 2012) is a Microsoft-developed massive taxonomy of over 2.7 million concepts. A taxonomy is a limited form of an ontology that only contains ISA relationships.