9.0 Overview

Language translation is difficult even for human translators. Lingualinx.com has a large collection of signs in various countries that were so poorly translated into English that they are amusing. A few examples:

In a cocktail lounge in Norway: “Ladies are requested not to have children in the bar”

At a hotel in Acapulco: “The manager has personally passed all the water served here”

In a hotel in Bucharest: “The lift is being fixed the next day. During that time we regret that you will be unbearable.”

In 1977, in an address in Poland, US President Jimmy Carter said that he

“…wanted to understand the Polish people’s desires for their future”.

His translator was a bit lazy and used the wrong translation of “desires”. What was communicated in Polish was the equivalent of “I wish to have sexual relations with the Polish people”.

More recently, in 2020, Facebook translated Xi Jinping, the name of the Chinese leader, as “Mr. Shithole”.

There are over 7000 languages in the world and within a specific language, there are often many dialects. Yet people from different parts of the world need to communicate.

In the 1880s, an attempt was made to create a common language, Esperanto, that would be reasonably easy for people to learn. The dream was for everyone in the world to speak the same language. Unfortunately, Esperanto never really took hold. There would be many advantages to a single language spoken by everyone in the world. But there would also be distinct cultural disadvantages. There is a significant amount of culture built into the different languages of the world.

Different languages represent different ways of thinking and perceiving the world. For example, there is a Philippine tribe with 92 words for rice. If all those languages fell into disuse, a great deal of culture and ways of thinking would be lost. In any case, the plethora of languages will likely remain for the foreseeable future and this means that, in order to communicate, people who speak different languages must do so via either a human or machine translator.

If you have a smartphone or web browser, there is a good chance that you’ve used Google Translate over the years to translate web pages and other documents. According to Google (Turovsky, 2016), there were 500 million Google Translate users and 100B words translated per day by Google Translate in 2016.

Facebook (Constine, 2016) claimed 800 million users and 2B text translations (presumably more than single words) per day in 2016. Today’s machine translation systems do a great job of translating web pages for major world languages.

Translations are especially good for everyday uses such as news articles, emails, directions, and so on. They tend to be not as good for academic texts and for technical areas such as IT manuals and medical notes because the training data tends to be made up of everyday words and phrases rather than academic and technical terms. Translations of creative works like poetry are poor.

Google Translate was launched in 2006, and for many years, the translations were good enough to provide the gist of the text on the web page but it was often hard to fully understand the translated text. That changed in dramatic fashion at the end of 2016 when Google changed the underlying paradigm from statistical phrase-based translation (see Section 9.2.3.6) to a neural network-based paradigm and reduced translation errors by 55-85% on several major language pairs.

The New York Times, reporting on the new system, wrote that a Japanese professor, Jun Rekimoto, translated a few sentences from “The Great Gatsby” into Japanese and back to English using Google Translate and was astonished at how well it performed. He then translated parts of Hemingway’s “The Snows of Kilimanjaro”. Using the old phrase-based system, the first line of the translation read:

Kilimanjaro is 19,710 feet of the mountain covered with snow, and it is said that the highest mountain in Africa.

Like most internet text translated by Google Translate to this point, one could make a fairly accurate guess at the meaning, but the translation was nowhere near human quality. The day after the switch to the neural translation model, the first line read:

Kilimanjaro is a mountain of 19,710 feet covered with snow and is said to be the highest mountain in Africa.

Since that switch, text translated with the neural network model has been of near-human quality. Unfortunately, as good as Google Translate has become, it supports only 133 of the world’s 7000+ languages as of May 2022. Further, as will be explained below, this situation might not improve rapidly because the newer technology requires a very large set of sentences in the source language with their corresponding translations in the target language.

9.1 Relationship of machine translation to automatic speech recognition

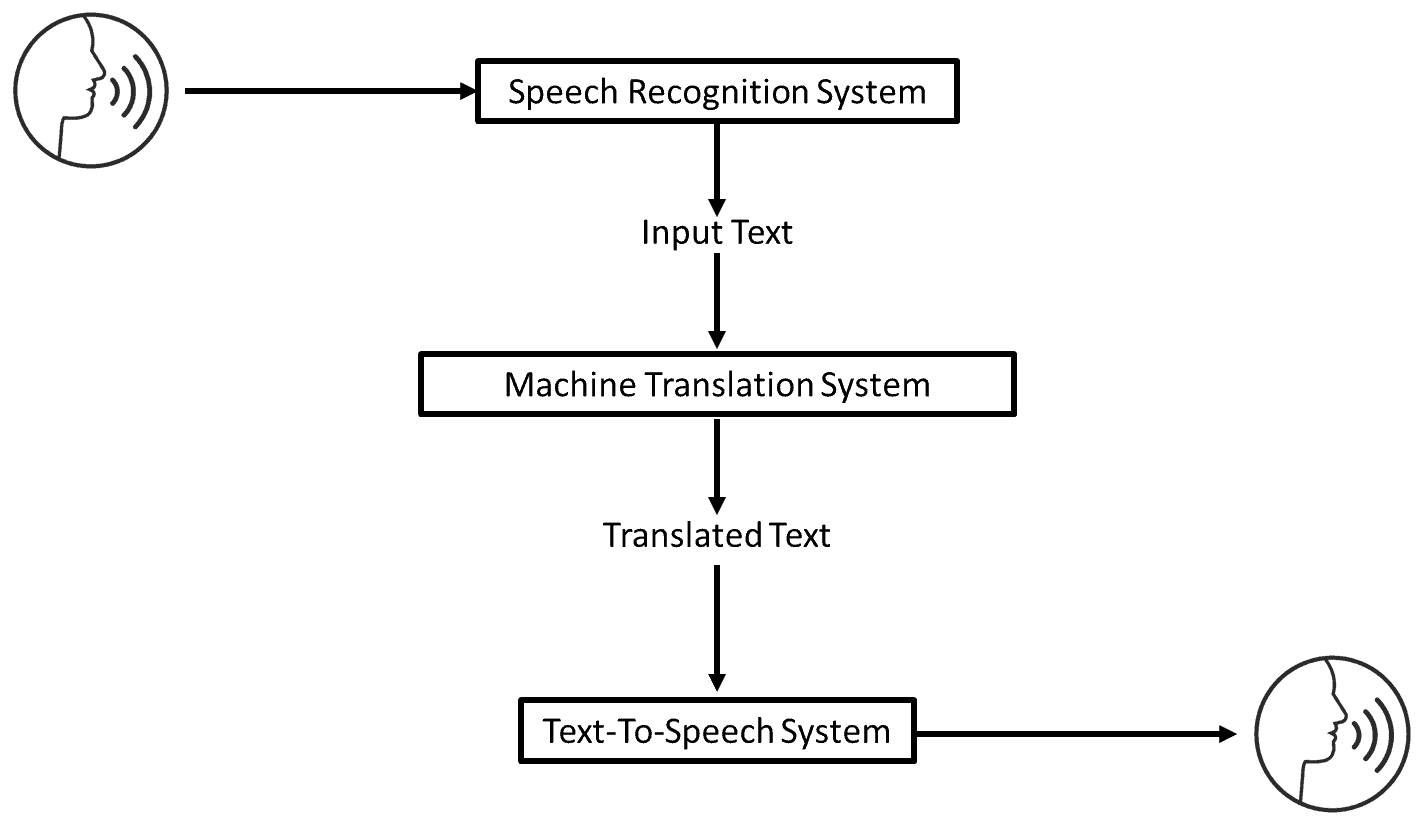

When using Google Translate, one can either type in a source language input or speak it. If it’s spoken, the speech signal is first routed to an automatic speech recognition (ASR) system that produces text output. Then it is processed by Google Translate just as if it was typed. This is the logical marriage of automatic speech recognition and machine translation, i.e. automatic speech recognition produces electronic text which can then serve as the input to a machine translation system.

While this is how most of the systems in use today operate, this is not the optimal approach for two reasons (Matusov et al, 2006):

- The output of ASR systems doesn’t have sentence boundaries or punctuation marks. Optimal identification of the boundary markers can require both ASR (e.g. intonation) and textual cues.

- ASR systems produce a set of hypotheses and the output is the highest-scoring hypothesis. One could imagine an approach in which more than one hypothesis is passed from the ASR system to the machine translation system and the machine translation system is used not only to translate but to decide on the best ASR output.
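The second point can be sketched as N-best rescoring. This is a minimal illustration, not the method of Matusov et al: the hypotheses, the scores, and the `mt_score` lookup are all invented, and a real system would obtain both scores from trained models.

```python
# Hypothetical N-best list from an ASR system: (transcript, ASR log-score).
nbest = [
    ("recognize speech", -1.2),
    ("wreck a nice beach", -1.0),
]

def mt_score(transcript):
    """Stand-in for how confidently the MT system can translate a transcript.

    Assumption: a hand-set lookup table replaces a real translation model.
    """
    toy_scores = {"recognize speech": -0.5, "wreck a nice beach": -3.0}
    return toy_scores[transcript]

def pick_transcript(hypotheses, mt_weight=1.0):
    """Choose the hypothesis with the best combined ASR + MT log-score."""
    return max(hypotheses, key=lambda h: h[1] + mt_weight * mt_score(h[0]))[0]

# Pipeline approach: the ASR system decides alone.
asr_only = max(nbest, key=lambda h: h[1])[0]
# Joint approach: the MT score is allowed to overrule the ASR ranking.
joint = pick_transcript(nbest)
print(asr_only)
print(joint)
```

With these invented scores, the ASR system alone prefers the second hypothesis, while the combined score prefers the first.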

For a speech-to-speech system, a third component that produces text-to-speech is required. Adequate text-to-speech systems have been available for years and have become more and more natural-sounding over the years.

9.2 History of machine translation systems

9.2.1 Machine translation competitions

Research into machine translation has been greatly aided by government-sponsored competitions including:

TRANSTAC: Translation System for Tactical Use was a DARPA-sponsored program that ran from 2006 to 2010 and was intended to develop speech-to-speech technology to enable military personnel to converse with civilians in non-English speaking countries.

MADCAT: The Multilingual Automatic Document Classification Analysis and Translation program ran from 2008 to 2013 with the goal of translating Arabic document images into English. This program had both an optical character recognition and machine translation component.

GALE: Global Autonomous Language Exploitation was a DARPA-sponsored competition that ran from 2006 to 2011 and included both automatic speech recognition and machine translation competitions.

BOLT: Broad Operational Language Translation was a DARPA-sponsored program that ran from 2011 to 2015 and was designed to further progress in both machine translation and information extraction. Informal data sources were used including discussion forums, text messaging, and chat. The languages studied were Chinese, Arabic, and English.

IWSLT: The International Workshop on Spoken Language Translation is an annual competition that started in 2003 and is still running. The goal is spoken language translation (i.e. automatic speech recognition plus machine translation).

WMT: The Workshop on Machine Translation is an annual competition that has been running since 2006 and takes place during computational linguistics conferences (NAACL, ACL, EACL, or EMNLP meetings).

9.2.2 Rule-based machine translation

Rule-based machine translation systems dominated machine translation research from the 1950s through the early 1990s. Rule-based machine translation systems generally fell into three categories:

- Direct Translation Systems

- Interlingua Systems

- Transfer Systems

Below is a brief discussion of each type. All three categories use handcrafted rules to create translations, and all three apply several kinds of processing, both in the Analyzers that process the Source Language (SL) text and in the Generators that create the Target Language (TL) text. These kinds of processing include:

Morphological analysis transforms the surface form of a word (i.e. the way it appears in text) into a base form (i.e. its lemma) plus grammatical tags. For example, the surface form “children” has a lemma of “child”, the number is plural, and the part of speech is a noun.

Other types of inflections that can be tagged include gender, tense, person, case, noun class, verb form, voice, negative, definite, and clitic. Different languages can have different inflections. Last, morphological analysis can split apart compound words like “underestimate”. Morphological generation does the reverse of morphological analysis.
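As a rough illustration of morphological analysis and generation, the sketch below uses a tiny hand-written lexicon. The lexicon entries, tag names, and function names are assumptions made for this example; real analyzers use rules or finite-state machinery rather than a lookup table.

```python
# Toy lexicon mapping surface forms to (lemma, grammatical tags).
# The "went" entry is an extra illustrative item, not from the text.
LEXICON = {
    "children": ("child", {"pos": "noun", "number": "plural"}),
    "child": ("child", {"pos": "noun", "number": "singular"}),
    "went": ("go", {"pos": "verb", "tense": "past"}),
}

def analyze(surface):
    """Morphological analysis: surface form -> (lemma, tags).

    Unknown words fall back to the lowercased form with no tags.
    """
    key = surface.lower()
    return LEXICON.get(key, (key, {}))

def generate(lemma, tags):
    """Morphological generation: (lemma, tags) -> surface form."""
    for surface, (entry_lemma, entry_tags) in LEXICON.items():
        if entry_lemma == lemma and entry_tags == tags:
            return surface
    return lemma  # no matching entry: return the lemma unchanged

print(analyze("children"))
print(generate("child", {"pos": "noun", "number": "plural"}))
```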

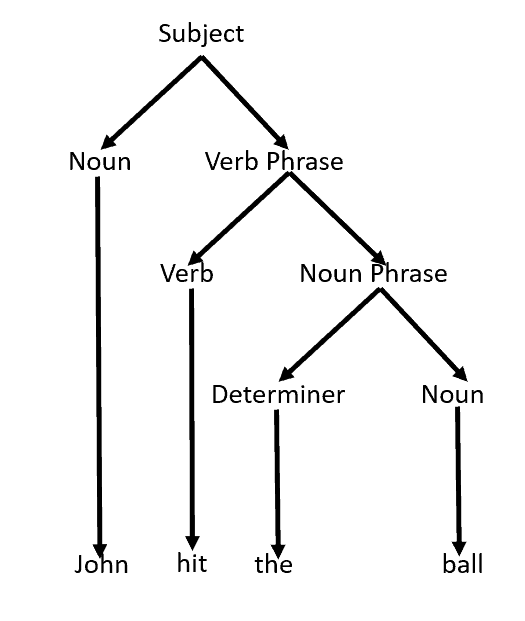

Syntactic analysis transforms sentences into syntax trees such as the one shown below. There are many different types of syntactic analysis that can occur including identification of phrase structures, dependencies, subordination, and many others. The reverse process occurs in syntactic generation.

Semantic analysis can be limited to the resolution of word and/or structural ambiguities or can be as complex as identifying the full meaning of an input text including any inferences that need to be made based on world knowledge.

9.2.2.1 Direct dictionary systems

On my bookshelf, I have a whole row of language dictionaries that I used to use for travel. As an English speaker, if I traveled to Germany, I would bring the German dictionary with me. But it really wasn’t much use. Yes, there were some common phrases for questions such as “where is the train station?”. However, for the most part, the process of looking up word translations one-by-one and pasting them together not only was time-consuming but it just didn’t work very well.

That is how direct dictionary systems work. They use word-for-word and sometimes phrase-for-phrase dictionaries for each language pair. For example, an English to Russian translation system would have a dictionary that lists each English word and its Russian counterpart. These systems also typically include some rules that do things like rearrange the words to account for different word orders and resolve ambiguities.
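A direct dictionary system can be sketched in a few lines. The example below is a toy under stated assumptions: it uses English to Spanish rather than English to Russian, the three-word dictionary is invented, and the single reordering rule stands in for the much larger rule sets that real systems used.

```python
# Hypothetical English-to-Spanish dictionary with part-of-speech tags.
DICTIONARY = {
    "the": ("el", "DET"),
    "red": ("rojo", "ADJ"),
    "car": ("coche", "NOUN"),
}

def translate_direct(sentence):
    """Word-for-word lookup followed by one local reordering rule."""
    # Unknown words are copied through with an "UNK" tag.
    words = [DICTIONARY.get(w, (w, "UNK")) for w in sentence.lower().split()]
    # Reordering rule: Spanish adjectives usually follow the noun.
    i = 0
    while i < len(words) - 1:
        if words[i][1] == "ADJ" and words[i + 1][1] == "NOUN":
            words[i], words[i + 1] = words[i + 1], words[i]
            i += 2
        else:
            i += 1
    return " ".join(word for word, _ in words)

print(translate_direct("the red car"))
```

The sketch ignores gender agreement, word sense ambiguity, and everything else that made real direct systems hard to build.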

The first demonstration of a machine translation system was the GAT system at Georgetown University in 1954. The GAT system remained under development in the lab until 1964 when it was delivered to the US Atomic Energy Commission at Oak Ridge National Laboratory and to EURATOM in Ispra, Italy. Both organizations used GAT until the mid-70s to help them scan Russian physics texts to determine their content.

The translations were also enhanced by ad hoc rules that were added document by document. Overall, the translation quality was very poor but it enabled researchers to determine which passages and texts might benefit from expensive human translation.

In 1970, one member of the Georgetown GAT team left academia to found a commercial company. The company’s product, Systran, was initially developed for Russian to English translations. In 1976, the Commission of the European Communities used a Systran English to French system. This led to the creation of the Eurotra project to develop a multilingual system for all EC languages. Systran became available on the Minitel system in 1984 and on the internet in 1994 and the company Systran remains one of the prominent machine translation companies today.

Word-for-word translations can be unintelligible and often humorous, even when produced by human translators, as the Jimmy Carter anecdote in Section 9.0 illustrates.

9.2.2.2 Interlingua systems

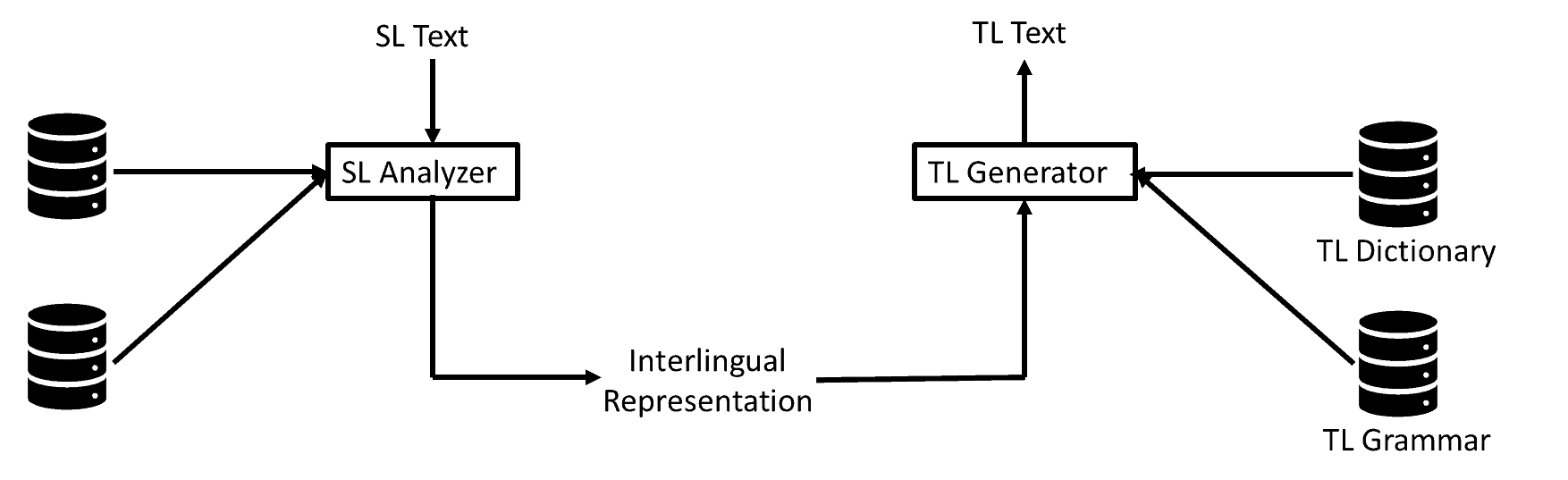

In the 1970s and 1980s, many researchers believed that the proper architecture for a machine translation system was to first translate the source input into an interlingua or intermediate meaning representation that would contain the same representation for a given meaning regardless of the source language (Shwartz, 1987). Such a representation would necessarily be language-independent.

There were two primary rationales for this approach. First, if one needs to translate among 100 languages, there are 9900 directional pairs of languages (counting, for example, English to French and French to English as two pairs), so 9900 individual translation systems would have to be developed. This meant developing separate dictionaries, grammars, knowledge bases, and rules for each individual translation system.

It’s not quite as massive a task for today’s AI because everything is learned automatically. However, it still requires a parallel language corpus for each pair. And we’re still far away from being able to translate most of the world’s 7000+ languages.

The alternative, termed the interlingua approach, is illustrated in the figure above.

The use of an interlingual representation would dramatically reduce the number of individual translation systems that need to be developed. One would only need to develop an Encoder for each language and a Decoder for each language. So, for 100 languages, one would need 100 encoders and 100 decoders as opposed to 9900 individual systems (each of which has an encoder and a decoder).
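The arithmetic behind this comparison is simple enough to check directly. The function names below are made up for this illustration.

```python
def pairwise_systems(n_languages):
    """Directional pairs: each language translated into every other language."""
    return n_languages * (n_languages - 1)

def interlingua_components(n_languages):
    """One encoder plus one decoder per language."""
    return 2 * n_languages

for n in (10, 100):
    print(n, pairwise_systems(n), interlingua_components(n))
```

The pairwise count grows quadratically with the number of languages, while the interlingua count grows linearly.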

A second advantage of an interlingual representation is that world knowledge and reasoning rules would only need to be coded once. Human understanding requires a great deal of reasoning based on world knowledge. If a language-independent meaning representation is used, then knowledge and reasoning rules can also be expressed in a language-independent format. The advantage would be that one does not need separate knowledge representations and reasoning rules for each language (or pair of languages).

In the 1970s, Roger Schank and his colleagues made a number of proposals about what such a meaning representation might look like. One component of that meaning representation was a knowledge structure they termed a script. For example, they hypothesized that most humans have a restaurant script that contains a sequence of steps: a person is seated, a waiter comes over, they order, they eat, they pay, and they leave. Suppose one hears a short narrative like “John ordered lobster. He paid the check and left.” According to Schank and colleagues, most people will use their restaurant script to infer that John ate the lobster.

One of the larger meaning representation efforts was the KBMT-89 system developed by Sergei Nirenburg and his colleagues at Carnegie Mellon University (Nirenburg, 2009). The IBM-sponsored KBMT-89 system was designed to translate PC manuals between English and Japanese in both directions. The system contained analyzers for English and Japanese that converted the source language inputs into an interlingua that was language independent. The system also had generators for English and Japanese that took the interlingua as input and produced English and Japanese as output.

The system required a massive amount of manually created “knowledge”, including a domain model of about 1,500 concepts. The domain model was specific to the domain of PC manuals. The concepts were either objects, events, or properties. Properties could be relations or attributes. So, for example, the relation

((part-of) disk_drive computer)

represented the fact that a disk drive is a part-of a computer. In the interlingua, this relation would have had the same representation whether it was expressed in English or Japanese and might have been expressed in those languages in many different ways. Examples of attributes are age and color.

The analyzer lexicons contained entries for 800 Japanese and 900 English words and phrases. Each entry defined how a word or phrase should be mapped to an interlingua concept. For example, in English one might say “hold down the key” where in Japanese one would say the equivalent of “press the key with a long duration”. However, both would result in the same interlingua representation of an event concept which itself was quite complex.

Similarly, the generator lexicons specified how to map interlingua concepts to Japanese and English words and phrases. Finally, there were sets of both analysis and generation grammatical rules for English and Japanese. The KBMT-89 system required a huge amount of manual coding of knowledge for a very small domain (PC manuals).

When one thinks about the amount of effort required to do this for all domains, it seems like an impossible task. And in fact, the effort to manually create knowledge structures and reasoning rules was eventually viewed by most researchers as a hill that was just too high to climb.

With the exception of projects such as Cyc, most researchers gave up on hand-coding of knowledge and rules. Fast forward to the present and the dominant paradigm for machine translation is to have separate systems for each pair of languages and each direction (e.g. English to French and French to English).

That said, there are a number of researchers working toward the development of systems that can automatically generate meaning representations (i.e. interlingua). The successful development of such systems would pave the way for an MT methodology that only requires a relatively small number of encoders and decoders rather than a separate system for each directional language pair.

Unfortunately, these systems seem a long way off and there is not yet any reason to be confident that we’ll ever get there.

9.2.2.3 Transfer approach

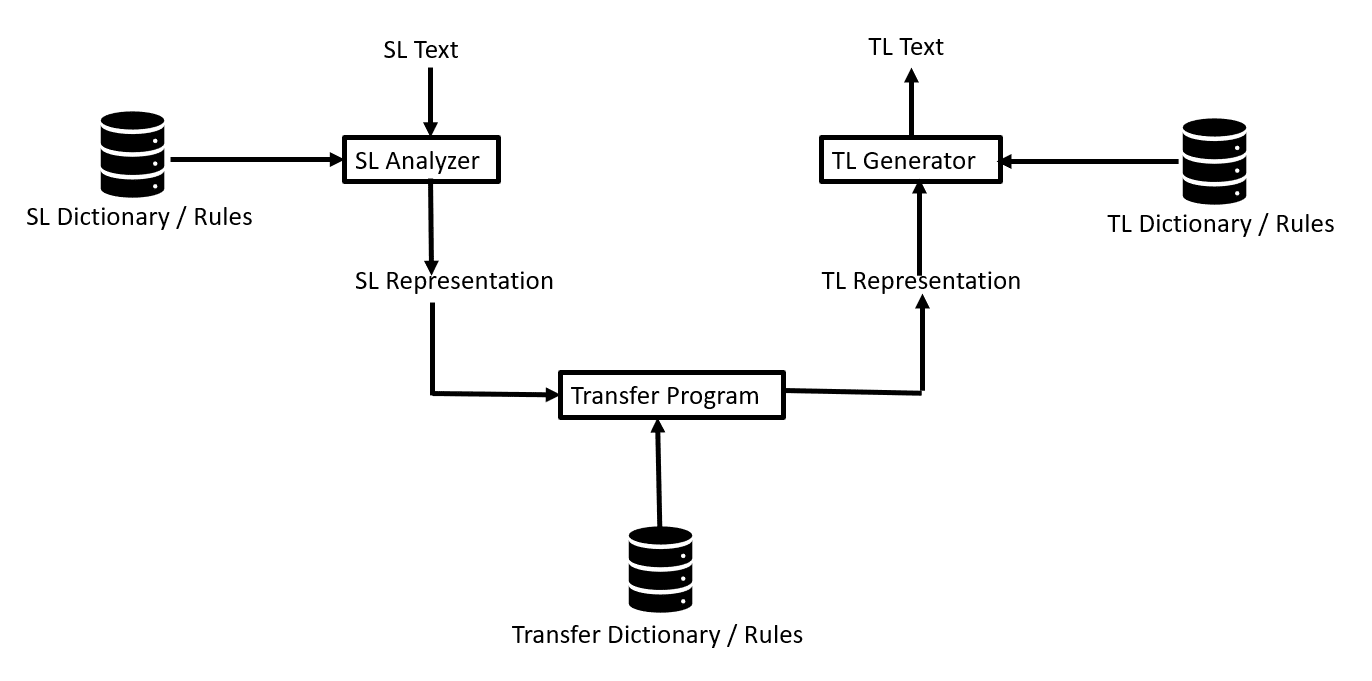

The transfer approach to machine translation is illustrated below:

A Source Language (SL) Dictionary is used that contains morphological, syntactic, and sometimes semantic information. This information is used by the SL Analyzer to create a parse tree such as the one shown below. The primary information used tended to be syntactic or grammar rules. Grammar rules define how parts of speech such as nouns, verbs, adjectives, adverbs, and prepositions can be combined to make grammatical sentences. These rules also define the typical word order for grammatical elements such as Subject and Object.

The structure of the parse tree can take many different forms and the parsing process itself has seen a huge number of variants. The Transfer Program then converts the SL Representation to a TL Representation. Here also, the Transfer Program has seen many variations.

One common theme, however, is the use of rules and processes for converting SL syntax to TL syntax. For example, a subject-verb-object (SVO) language parse tree is converted to a subject-object-verb (SOV) language parse tree. The Target Language Generator takes the TL syntax and generates the translation in the target language.
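A single transfer rule of this kind can be sketched as follows. The dictionary-based tree format and the function names are assumptions made for this example; real systems operated on full parse trees and had many such rules. Only the syntactic reordering is shown, so the words stay in the source language.

```python
# A minimal SL representation: one clause with labelled constituents.
sl_tree = {
    "order": ["subject", "verb", "object"],
    "subject": ["John"],
    "verb": ["hit"],
    "object": ["the", "ball"],
}

def transfer_svo_to_sov(tree):
    """Transfer rule: keep the constituents, change their order to SOV."""
    tl_tree = dict(tree)  # shallow copy so the SL tree is left untouched
    tl_tree["order"] = ["subject", "object", "verb"]
    return tl_tree

def linearize(tree):
    """Read the words off the tree in the order the tree specifies."""
    return [word for role in tree["order"] for word in tree[role]]

tl_tree = transfer_svo_to_sov(sl_tree)
print(linearize(sl_tree))
print(linearize(tl_tree))
```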

In principle, one should only need to create an SL Analyzer for each language and a TL Generator for each language, and the Analyzers and Generators should work for many different language pairs. Only the Transfer Program needs to be developed specifically for the language pair.

Unfortunately, it’s not that easy for a lot of reasons. At some point in the process, one still requires a language-to-language word dictionary. In many cases, the language-to-language word dictionary is used by the TL Generator. The biggest problem is word ambiguity.

There have been numerous schemes proposed to handle ambiguity. One way is to create a separate parse tree for each meaning of a word. Of course, if more than one word in a sentence has multiple meanings (and this is common), then a large number of possible parses might need to be created. One can winnow these down during the Analysis process by discarding parses that lead to ungrammatical sentences (which of course is risky because not all understandable utterances are grammatical). These alternatives can also be passed through to the Generator (e.g. Emele and Dorna, 1998) where they can each be transformed into the target language and a winner is chosen based on which makes sense in the target language.

Alternatively, semantic information can be attached to each possible parse and later used to choose the best translation. The most common form of semantic information is based on predicates and objects. Nouns and noun phrases usually refer to objects. Predicates define the relationship between two objects. For example, the ISA predicate defines hierarchical relationships: a canary is a bird and a bird is an animal. A HASA predicate might define the attributes of an object, e.g. a bird has wings. If enough semantic information is derived by the Analyzer, then the model becomes an interlingual model.
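The ISA and HASA predicates can be illustrated with a small lookup structure. This is a sketch only: the dictionaries and function names are assumptions for this example, and it uses just the canary, bird, and animal facts mentioned in the text.

```python
# ISA links point from a concept to its parent; HASA lists attributes.
ISA = {"canary": "bird", "bird": "animal"}
HASA = {"bird": {"wings"}}

def is_a(thing, category):
    """Follow ISA links upward until the category is found or the chain ends."""
    while thing in ISA:
        thing = ISA[thing]
        if thing == category:
            return True
    return False

def attributes(thing):
    """Collect HASA attributes from the thing and all of its ISA ancestors."""
    found = set(HASA.get(thing, set()))
    while thing in ISA:
        thing = ISA[thing]
        found |= HASA.get(thing, set())
    return found

print(is_a("canary", "animal"))
print(attributes("canary"))
```

The second call shows inheritance: nothing is stored about canaries having wings, but the fact follows from the ISA link to bird.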

9.2.3 Statistical machine translation

Rule-based machine translation requires so much hand-coding of dictionaries and rules that it is not practical for unrestricted machine translation. As a result, in the 1990s, the focus turned to statistical translation learned automatically from data. This approach was first suggested by Warren Weaver (1949) but didn’t get serious attention until the 1990s, partly because the rule-based approach seemed attractive, partly because sufficient computational resources were not available, and partly because of the lack of electronically available parallel texts. This section provides a very high-level overview of statistical machine translation. For a more in-depth understanding of statistical machine translation, see Koehn (2010).

9.2.3.1 Parallel texts

Until 1799, Egyptian hieroglyphs were frequently discovered but no one knew how to interpret them. That year, a Napoleonic expedition to Egypt discovered the Rosetta Stone, which carried a decree by King Ptolemy V written in Egyptian hieroglyphs. The same stone had an ancient Greek translation of the decree. It took more than 20 years but scholars finally figured out how to translate the Egyptian hieroglyphs.

Statistical machine translation paradigms also rely on the availability of translated texts for each language pair. Automated techniques analyze the parallel texts and derive statistics and rules for machine translation of new text input.

One of the first large-scale parallel text corpora that was electronically available was the Hansard corpus, which contained English and French translations of the proceedings of the Canadian Parliament. One of the most heavily used parallel texts is Europarl, a parallel corpus extracted from the proceedings of the European Parliament. It contains texts in 21 European languages including Romance (French, Italian, Spanish, Portuguese, Romanian), Germanic (English, Dutch, German, Danish, Swedish), Slavic (Bulgarian, Czech, Polish, Slovak, Slovene), Finno-Ugric (Finnish, Hungarian, Estonian), Baltic (Latvian, Lithuanian), and Greek.

The creation of this corpus was a massive effort (Koehn, 2005). The authors had to crawl the HTML files on the European Parliament website to collect 80,000 files per language. Then they had to manually align the documents so that there was one file per day per language. Next, they had to put each sentence on one line. For the most part, they relied on periods as end of sentence markers plus some minimal detection of periods that were just for abbreviations.

Google Translate started with this corpus and added many other parallel corpora including records of international tribunals, company reports, and all the articles and books in bilingual form that have been put up on the web by individuals, libraries, booksellers, authors and academic departments.

9.2.3.2 Sentence alignment

In their initial form, these corpora were not aligned. There was no indication of which sentences in the English translation corresponded to which sentences in the French translation or vice versa. Moreover, a paragraph in the one language might have different numbers of sentences than the corresponding paragraph in the other language.

A commonly-used sentence alignment algorithm (Gale and Church, 1994) was developed that worked by matching sentences of similar lengths and merging sentences based on the number of words in the sentence. After the alignment process, if a paragraph had different numbers of sentences in different languages, it was discarded. The result was a corpus of about one million sentences per language. This basic approach has been improved over the years (Moore, 2002) so that it achieves near-100% correct alignment.
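The idea of matching sentences by length, with merging where needed, can be sketched with a small dynamic program. This is a deliberately simplified stand-in, not the Gale and Church algorithm: it uses raw character-length differences plus an arbitrary merge penalty instead of their probabilistic cost, and it allows only 1-1, 2-1, and 1-2 groupings. The example sentences are invented.

```python
def align_by_length(src, tgt, merge_penalty=2.0):
    """Align two sentence lists by minimizing total length mismatch."""
    inf = float("inf")
    n, m = len(src), len(tgt)
    cost = [[inf] * (m + 1) for _ in range(n + 1)]
    back = [[None] * (m + 1) for _ in range(n + 1)]
    cost[0][0] = 0.0
    # (source sentences used, target sentences used, penalty)
    moves = [(1, 1, 0.0), (2, 1, merge_penalty), (1, 2, merge_penalty)]
    for i in range(n + 1):
        for j in range(m + 1):
            if cost[i][j] == inf:
                continue
            for di, dj, penalty in moves:
                ni, nj = i + di, j + dj
                if ni > n or nj > m:
                    continue
                s_len = sum(len(s) for s in src[i:ni])
                t_len = sum(len(t) for t in tgt[j:nj])
                c = cost[i][j] + abs(s_len - t_len) + penalty
                if c < cost[ni][nj]:
                    cost[ni][nj] = c
                    back[ni][nj] = (i, j)
    if (n, m) != (0, 0) and back[n][m] is None:
        raise ValueError("no alignment exists with the allowed groupings")
    # Walk back from the end to recover the aligned groups.
    groups, i, j = [], n, m
    while (i, j) != (0, 0):
        pi, pj = back[i][j]
        groups.append((src[pi:i], tgt[pj:j]))
        i, j = pi, pj
    return groups[::-1]

english = ["I go home.", "It is late and I am sad."]
french = ["Je rentre.", "Il est tard.", "Je suis triste."]
for group in align_by_length(english, french):
    print(group)
```

In this toy case the second English sentence is paired with the last two French sentences because their combined length is the closest match.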

9.2.3.3 Word alignment

In addition to aligning the sentences for the two languages, statistical machine translation also requires alignment of the words. Word alignment is more difficult than sentence alignment.

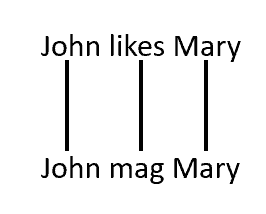

If all sentence pairs were directly aligned like the example above, alignment would be simple.

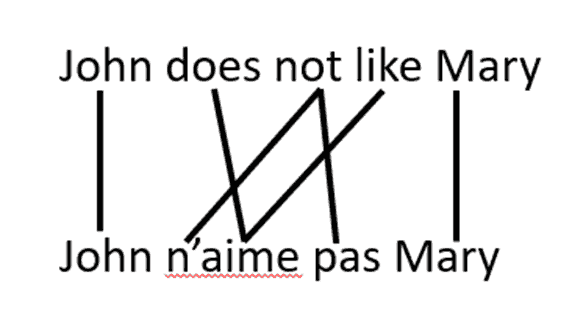

However, as you can see in this example, it’s usually not that simple. First, two English words must be aligned with one German word. Second, the two English words are in the second position in English but the fourth position in German.

In another example, the English word “not” maps to two words in French that are separated by another word, and one of the “words” is the beginning of a contraction. There are many additional linguistic phenomena that complicate alignment.

Nevertheless, several word alignment algorithms have been created that work well. These algorithms are typically generative in nature meaning that they attempt to generate the target language translation from the source language text by trying different combinations of word orders (known as distortion) and word translations and use the most likely result both for alignment and translation. These algorithms also try word insertion and deletion, one-to-many alignments (known as ‘fertility’), and other schemes.

GIZA++ is a well-known open-source alignment tool (Och and Ney, 2003) that is based on these ideas. As one can imagine, it becomes computationally challenging to try all possibilities, so researchers have developed various means of narrowing the search space.

Alignment can also be improved by symmetrization. The word alignment process described above is from one language into another. As a result, each word in the source language is aligned with zero, one, or many words in the target language. However, this doesn’t account for situations where a word in the target language needs to be aligned with zero or many words in the source language. To fix this, the symmetrization process runs the word alignment process in both directions and then takes the union or intersection of the results.
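Symmetrization reduces to set operations once both alignment runs are written as sets of links. In the sketch below the links are invented, and the reverse-direction run is assumed to have already been flipped so that every link reads (source index, target index).

```python
# Hypothetical alignment links, each written as (source_index, target_index).
src_to_tgt = {(0, 0), (1, 1), (2, 2)}
tgt_to_src = {(0, 0), (1, 1), (1, 2)}

# Intersection keeps only links both directions agree on (fewer, safer links).
intersection = src_to_tgt & tgt_to_src
# Union keeps links found in either direction (more links, more noise).
union = src_to_tgt | tgt_to_src

print(sorted(intersection))
print(sorted(union))
```

Note that the union lets source word 1 align with two target words and target word 2 align with two source words, which neither single-direction run could express.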

Discriminative word alignment models were also studied. The idea is to encode various features of the input sentences in labeled training data and then train a supervised algorithm (e.g. an SVM). However, these algorithms require large amounts of labeled data, and alignment performance was not as good as for the generative models.

9.2.3.4 How statistical machine translation works

Now, let’s talk about how we might use a corpus like Europarl to train a machine translation system given a word-aligned corpus. For example, suppose our task is to learn enough from this corpus to be able to automatically create a German translation for a new English sentence that is not found in the corpus.

The statistical machine translation system will generate multiple alternative German translations by taking each word in the English sentence, looking it up in a cross-language dictionary, and collecting all the German words and phrases that might be translations of that word. If we do that for all the English words, we will have a big collection of German words and phrases.

Next, we’ll figuratively put all those words and phrases in a bag, shake it up, and generate all possible sentences that are combinations of those words and phrases. Some of these sentences will be grammatical. Most will be ungrammatical. For example, for the sentence “John has hit the ball”, we would collect the following German words:

John –> John

has –> hat

hit –> angriff, anfahren, anschlagen, anstoßen, aufschlagen, brüller, erfolg, erzielen, erreichen, glückstreffer, geschlagen, getroffen, hauen, hinschlagen, knüller, kloppen, prallen, rammen, schlag, seitenhieb, stich, stoßen, schlagen, treffer, verbimsen

the –> der, das, die, den

ball –> kugel, knäuel, tanz, tanzball, tanzfest, ballen, ball, bällchen, kegelkugel, ei, leder, eier

If we generate possible sentences using the same word order, the closest we’ll get to the correct translation (“John hat den ball getroffen”) will be “John hat getroffen den ball”. However, if we generate all possible sentences using any word order, we’ll end up with a lot more candidates, but we’ll include the correct sentence.

We can then use a language model to choose which sentence is most likely to occur in actual texts and thereby arrive at the correct answer. In effect, these machine translation systems are using Bayes’ Rule in much the same way as the ASR systems do. The probability of each candidate German translation given the source English sentence is proportional to the product of:

- (1) The probability of the English sentence given the candidate German sentence

- (2) The probability of the German sentence

A translation model is used for estimating (1) and a language model is used for estimating (2).
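Written out, the computation looks like this. The symbols are assumptions introduced here: e stands for the English source sentence and g for a candidate German sentence. The denominator P(e) is the same for every candidate, so it can be dropped when comparing candidates.

```latex
\hat{g} \;=\; \arg\max_{g} P(g \mid e)
        \;=\; \arg\max_{g} \frac{P(e \mid g)\, P(g)}{P(e)}
        \;=\; \arg\max_{g} \underbrace{P(e \mid g)}_{\text{translation model}}
              \; \underbrace{P(g)}_{\text{language model}}
```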

A simplistic translation model would be to count the number of words in the German sentence that are translations of words in the English sentence. If we only used this simplistic translation model, we wouldn’t account for word order differences between the languages and a lot of the generated sentences wouldn’t make sense because the wrong word senses were generated. However, it will still work reasonably well because that probability will be multiplied by the probability of the German sentence, which will be low (or zero) for ungrammatical sentences. This latter probability would be estimated by using a language model. The result will be that only grammatical German sentences will have a high probability.
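The interplay between a crude translation model and a language model can be sketched as follows. This is a toy under stated assumptions: one German word per English word is fixed in advance (picked from the candidate lists above), the bigram probabilities are invented, and the translation model is a constant because every ordering uses the same word translations.

```python
from itertools import permutations

# One German candidate per English word in "John has hit the ball".
words = ["John", "hat", "getroffen", "den", "ball"]

# Toy bigram language model; all probabilities are invented.
BIGRAMS = {
    ("<s>", "John"): 0.5, ("John", "hat"): 0.5, ("hat", "den"): 0.4,
    ("den", "ball"): 0.5, ("ball", "getroffen"): 0.3,
    ("getroffen", "</s>"): 0.5, ("hat", "getroffen"): 0.1,
    ("getroffen", "den"): 0.1, ("ball", "</s>"): 0.2,
}
UNSEEN = 1e-4  # small probability for bigrams the toy model has never seen

def lm_prob(sentence):
    """P(German sentence) under the toy bigram model."""
    tokens = ["<s>"] + list(sentence) + ["</s>"]
    p = 1.0
    for left, right in zip(tokens, tokens[1:]):
        p *= BIGRAMS.get((left, right), UNSEEN)
    return p

def tm_prob(sentence):
    """Simplistic translation model: the same score for every ordering."""
    return 1.0

# Score all 120 orderings and keep the most probable one.
best = max(permutations(words), key=lambda s: tm_prob(s) * lm_prob(s))
print(" ".join(best))
```

Because the translation model cannot distinguish word orders, the language model alone decides which ordering wins, which is the point made in the paragraph above.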

The next two sections will discuss better translation models.

9.2.3.5 Word-based statistical machine translation

Word-based translation models look not only at whether each candidate sentence word is a translation of a source sentence word but also at the likelihood of each translation. Professor Philipp Koehn offers the example of the German word haus. In a parallel corpus, haus was found to have 5 different English counterparts:

- House was the translation 8000 times in the corpus

- Building was the translation 1600 times in the corpus

- Home was the translation 200 times in the corpus

- Household was the translation 150 times in the corpus

- Shell (as in snail shell) was the translation 50 times in the corpus

A word-based translation model would assign the following probabilities to the possible translations:

- House: .8

- Building: .16

- Home: .02

- Household: .015

- Shell: .005
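These probabilities are simply relative frequencies: each count divided by the total number of times haus was translated. A minimal check, using the counts listed above:

```python
# Counts of each English translation of "haus" in the parallel corpus.
counts = {
    "house": 8000,
    "building": 1600,
    "home": 200,
    "household": 150,
    "shell": 50,
}

total = sum(counts.values())
# Relative frequency of each translation.
probs = {word: count / total for word, count in counts.items()}

for word, p in probs.items():
    print(word, p)
```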

When a source sentence is encountered, each word in the source sentence has a set of counterparts in the target language, each with a different probability. If we have a sentence with 5 words and each word has 5 possible translations, the total number of candidate translations is 5 x 5 x 5 x 5 x 5 = 3125 sentences. The candidate sentences for which the product of the individual word translation probabilities is the highest are the most likely. However, they might still be ungrammatical, and that is why the translation model probability needs to be multiplied by the language model probability.

Another issue with this approach is that two translation models must be developed for each pair of languages. Having a good translation model of French to English is no help in translating English to French (Brown et al, 1993).

9.2.3.6 Phrase-based machine translation

Phrase-based machine translation was developed to overcome some of the limitations of word-based machine translation. Word-based machine translation will typically translate idioms (e.g. “jump the gun”) in a “literal” word-for-word fashion which doesn’t preserve the meaning. Phrase-based systems treat the contiguous words in the idiomatic expression as a unit and translate into a set of words that better captures the meaning.

A phrase like “green tea” can be translated correctly into French as “thé vert” or incorrectly as “vert thé”. However, if the phrase “green tea” is processed as a single unit instead of two words, a correct translation is more likely to result.

For machine translation purposes, a phrase is defined as any number of consecutive words in a sentence (a phrase can even span multiple sentences).

In a hierarchical phrase-based machine translation system, phrases can contain other phrases. The larger the parallel corpus, the longer the phrases that can be successfully learned.

Phrase-based machine translation systems first use a word alignment algorithm to align the words in each source and target language pair. Then a translation table of source language words and phrases and their translations is extracted from the newly-aligned parallel corpus. Because each word or phrase can have multiple translations, the translation table also contains the probability of occurrence of each translation.

For example, if a given phrase has three different translations, one of which occurs 5 times in the corpus, one occurs 3 times, and one occurs 2 times, then the translation probabilities in the translation table will be set to .5, .3, and .2. The machine translation system can’t just take the highest probability word or phrase for each position in the input sentence because the result will often be an ungrammatical sentence. Choosing something other than the highest probability word or phrase at one or more output positions might produce a much better translation.

So, like the word-based approach, phrase-based systems figuratively put all those possible translations of words and phrases in a bag, shake it up, and generate all possible sentences that are combinations of those words and phrases. From there, one approach would be to try every possible sentence. However, this is often impractical. Suppose, for example, there are 8 output positions (i.e. we’re translating sentences with 8 words or phrases) and 10 possible translations per position. There would be 100 million total possible sentences to evaluate.

As a result, phrase-based machine translation systems use heuristic techniques to evaluate the translations at the first position in the sentence and eliminate the least likely translations for that word or phrase. They then move on to the next position, evaluate the translations of the first two positions, eliminate the least likely, and so on. At each position in the output sentence, the possible translations are evaluated both on the frequency of occurrence listed in the translation table and on the likelihood of occurrence of the sentence up to the current position based on a language model.
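The position-by-position pruning described above can be sketched as a simple left-to-right beam search. This is an illustrative simplification: the phrase options, their probabilities, and the toy language model are made-up values, and real decoders also handle reordering and overlapping phrase segmentations.

```python
import math

def beam_search(options_per_position, lm_logprob, beam_width=3):
    """Left-to-right beam search over output positions.

    options_per_position: list of lists of (phrase, translation_probability).
    lm_logprob(prefix, phrase): log-probability of appending phrase to prefix.
    Returns (log score, list of phrases) for the best surviving hypothesis.
    """
    beam = [(0.0, [])]  # (cumulative log score, phrases chosen so far)
    for options in options_per_position:
        expanded = []
        for score, prefix in beam:
            for phrase, p in options:
                new_score = score + math.log(p) + lm_logprob(prefix, phrase)
                expanded.append((new_score, prefix + [phrase]))
        # Prune: keep only the beam_width most likely partial translations.
        expanded.sort(key=lambda item: item[0], reverse=True)
        beam = expanded[:beam_width]
    return beam[0]

def toy_lm(prefix, phrase):
    """Made-up language model: favors a handful of 'fluent' transitions."""
    fluent = {("<s>", "the"), ("the", "house"), ("house", "is small")}
    previous = prefix[-1] if prefix else "<s>"
    return math.log(0.5) if (previous, phrase) in fluent else math.log(0.01)

options = [
    [("the", 0.6), ("it", 0.4)],
    [("house", 0.7), ("home", 0.3)],
    [("is small", 0.5), ("small is", 0.5)],
]
best_score, best_phrases = beam_search(options, toy_lm, beam_width=2)
print(" ".join(best_phrases))
```

With a beam width of k and m options per position, only about k x m hypotheses are scored at each position, instead of every possible full sentence. The price is that the search can discard a prefix that would have led to the best overall translation.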

The most common pruning technique is beam search (Koehn et al 2003). One major advantage of phrase-based machine translation is that phrasal translation inherently captures many of the local word order differences between languages. For example, the phrase “the blue house” in English might translate to the equivalent of “the house blue” in another language (e.g. “la maison bleue” in French).

Because of the direct phrase-to-phrase substitution in the translation process, this local word order difference is captured automatically. However, longer-distance orderings will not be captured automatically. For example, the ordering of two phrases might be different between languages. Several researchers have shown that rules can be developed for reordering adjacent phrases.

9.2.3.7 Statistical machine translation toolkits

The Moses open source toolkit (Koehn et al 2007) contains a wide range of standard tools and capabilities for phrase-based machine translation such as:

- The SRILM (Stolcke et al 2017) language modeling tool

- The GIZA++ word alignment tool (Och and Ney 2003)

- A translation evaluation tool using BLEU scores (Papineni et al, 2002)

It also contains several other finer-grained capabilities.

Phrase-based machine translation systems do a much better job of generating grammatically correct output in the target language than word-based statistical machine translation systems because the phrases themselves contain word order information. When a phrase in the target language that has one word order is substituted for a phrase in the source language that has a different word order, the resulting translation has the correct word order for that phrase. And, as mentioned above, phrasal reordering algorithms provide incremental improvements to grammaticality.

That said, the results are still often lacking, especially when the source and target languages have major syntactic differences. In syntax-based machine translation approaches, the system first learns a set of rules that can transform the syntactic structure of one language into the syntactic structure of another language (e.g. using the GHKM scheme proposed by Galley et al, 2004).

Then, a parser creates a parse tree like the one above for a sentence in the source language, and rules are used to create a parse tree in the target language. Then dictionaries are used to translate the words and phrases with alternate word senses disambiguated using the syntactic knowledge embodied in the target language parse tree. So, for example, if a word (e.g. “bank”) has one translation as a noun and another translation as a verb, the position in the parse tree can determine the correct translation. See Williams et al (2016) for a more detailed explanation of syntax-based machine translation.

9.2.4 Neural machine translation

From the mid-1990s until recently, phrase-based machine translation was the dominant paradigm in machine translation. Google Translate exclusively used phrase-based machine translation until late 2016. Phrase-based translations, even for major languages, typically suffered from disfluencies such as incorrect grammar, bad word choices, and other issues. Translations for major languages were adequate but far from the level of human translators.

All that changed with the advent of neural machine translation. The job of a neural machine translation (NMT) system is to take an input sentence and find the most probable sentence in the target language. Unlike phrase-based systems, neural machine translation systems do not start with a bilingual dictionary of either words or phrases. They aren’t provided a language model. They aren’t given any pre-defined linguistic features. The neural machine translation system must learn all of these and whatever else it needs to perform that translation.

The neural machine translation system is simply trained with pairs of sentences (one from the source and one from the target language) and minimizes a cost function that measures how far its output is from the reference translation.

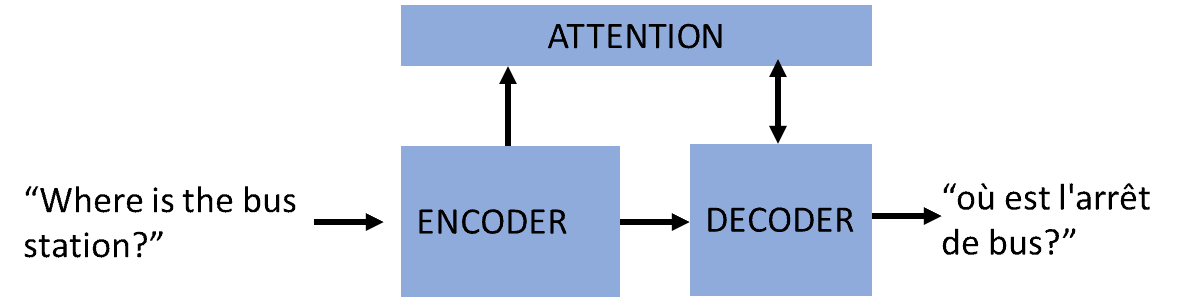

Neural machine translation systems use the encoder-decoder with attention (Cho et al, 2014; Sutskever et al, 2014; Bahdanau et al, 2015) architecture to translate text. The output of the encoder is the input to the decoder and must be sufficient to generate target language translations with the help of the attention mechanism. Neural machine translation systems, like phrase-based machine translation systems, generate several alternatives at each output position and use pruning techniques like beam search to evaluate the alternatives.
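To make the role of attention more concrete, here is a minimal sketch of a single attention step. It uses dot-product scoring, which is a simplification (Bahdanau et al compute the scores with a small feed-forward network), and the vectors are made-up two-dimensional values rather than learned representations.

```python
import math

def softmax(scores):
    """Turn arbitrary scores into weights that sum to 1."""
    highest = max(scores)
    exps = [math.exp(s - highest) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def attend(decoder_state, encoder_states):
    """One attention step: weight each encoder state by its relevance to
    the current decoder state and return (weights, context vector)."""
    scores = [sum(d * e for d, e in zip(decoder_state, h)) for h in encoder_states]
    weights = softmax(scores)
    dim = len(encoder_states[0])
    context = [sum(w * h[i] for w, h in zip(weights, encoder_states))
               for i in range(dim)]
    return weights, context

# One made-up encoder vector per source word, and one decoder state.
encoder_states = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
decoder_state = [0.0, 2.0]
weights, context = attend(decoder_state, encoder_states)
print([round(w, 3) for w in weights])
```

The context vector is a weighted average of the encoder states, so at each output step the decoder can focus on the source words that matter most for the next target word.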

9.2.5 Comparing neural machine translation performance with phrase-based machine translation

Junczys-Dowmunt et al (2016) compared phrase-based machine translation and neural machine translation for 30 translation directions using the UN Parallel Corpus of 11 million sentences that are fully-aligned over 6 languages. They found that their neural machine translation models beat their phrase-based machine translation models on 29 of the 30 directions (French to Spanish being the exception). Where Chinese was involved, the BLEU increase was 7 to 9 points.

They also compared hierarchical phrase-based machine translation and neural machine translation on 10 translation directions with neural machine translation outperforming on 9 of 10 directions (Russian to English was the exception). Bentivogli et al (2016) compared multiple phrase-based machine translation approaches (including hierarchical) with neural machine translation on a corpus of TED talks and found neural machine translation outperformed the phrase-based machine translation systems across the board.

Additionally, they looked at sentence length and found that, while all systems got worse as sentences got longer (performance on 35-word sentences was half that of 15-word sentences), neural machine translation systems degraded faster than phrase-based machine translation systems. Last, they found that neural machine translation systems made fewer morphology errors, lexical (word choice) errors, and word order errors.

9.2.6 Google neural machine translation

The Google neural machine translation system produces much better translations than the older phrase-based machine translation system. Google Translate is trained separately for each language pair and in each direction. Researchers train one Google Translate system on English to French. They train another on French to English, another on French to Japanese, and so on. When you type a sentence in a source language into Google Translate and request a translation in a target language, Google Translate passes the source text to the appropriate system. The training dataset for each language and direction has one row per training sentence pair that contains the sentence in one language and the translated sentence in another language. Each row has a column for each word in the source language followed by a column for each word in the target language. For example, the source language sentence “Where is the bus station?” combined with the correct translation “où est l’arrêt de bus?” would constitute one row of the training table. The other rows would have different sentences.

Google does not disclose the size of its training datasets, but they likely have somewhere between tens of millions and billions of rows or more for each language pair. The job of the neural machine translation algorithm is to take as input a training dataset for a language pair and direction and learn to translate the source words into the target words. You can see the architecture of the neural machine translation system below:

Google neural machine translation is based on an encoder-decoder network model (Cho et al, 2014; Sutskever et al, 2014). It has 8 LSTM layers in both the encoder and the decoder in order to capture subtle irregularities in the source and target languages. The attention mechanism (Bahdanau et al, 2015) connects the last layer of the encoder to the first layer of the decoder for computational efficiency.

You can think of this architecture like this: the encoder passes along the gist, the attention system helps put it together word-by-word, and the decoder computes the translation in the target language. Together, these three deep neural networks learn a function that can translate from one language to another. The details of how this happens are beyond the scope of this book.

The Google team (Wu et al, 2016) that developed Google neural machine translation faced several challenges and developed several innovations: First, given the number of languages (and language pairs) supported by Google Translate, the team recognized that both training and inference were going to be potential bottlenecks. So, Google designed hardware (Google Tensor Processing Units) specifically to run Google’s TensorFlow neural network framework.

Second, rare words were a big concern. To solve the problem, they used wordpieces for input and output (see the next section on out of vocabulary words).

Third, they were finding too many sentences that were only partially translated. To fix this issue, they added a coverage penalty to the beam search scoring to encourage an output sentence that covers all the words in the input sentence.
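A sketch of how a coverage penalty can enter the beam search score, following the general form given in Wu et al (2016): the log-probability of a hypothesis is divided by a length penalty, and a coverage term penalizes source words that received little total attention. The alpha and beta values, the attention matrices, and the small epsilon guard against log(0) are illustrative assumptions, not the production settings.

```python
import math

def length_penalty(target_len, alpha=0.2):
    """Length normalization term; longer outputs are penalized less harshly."""
    return ((5 + target_len) ** alpha) / ((5 + 1) ** alpha)

def coverage_penalty(attention, beta=0.2, eps=1e-9):
    """attention[j][i] is the attention weight on source word i at output step j.
    Source words whose total attention is below 1 contribute a negative term."""
    num_source = len(attention[0])
    total = 0.0
    for i in range(num_source):
        mass = sum(step[i] for step in attention)
        total += math.log(min(max(mass, eps), 1.0))  # eps guard is an addition
    return beta * total

def beam_score(log_prob, attention, alpha=0.2, beta=0.2):
    """Score of a hypothesis: normalized log-probability plus coverage term."""
    target_len = len(attention)
    return log_prob / length_penalty(target_len, alpha) + coverage_penalty(attention, beta)

# A hypothesis that attends to all three source words...
full = [[0.5, 0.25, 0.25], [0.25, 0.5, 0.25], [0.25, 0.25, 0.5]]
# ...and a shorter one that largely ignores the third source word.
partial = [[0.75, 0.125, 0.125], [0.125, 0.75, 0.125]]

print(beam_score(-4.0, full), beam_score(-4.0, partial))
```

With equal log-probabilities, the hypothesis that covers every source word scores higher, which is the behavior the coverage penalty is meant to encourage.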

The result was a system that reduced translation errors by an average of 60% compared to Google’s phrase-based translations.

9.2.7 Ensemble models

Neural machine translation systems can be constructed in many different ways. For example, the encoder might be an LSTM-based RNN or a convolutional network. It is logical to assume that each different neural machine translation system learns different features. So, it makes sense to try ensemble models where the output is based on multiple models. The decoder in an ensemble model typically averages the probabilities assigned by each model at each output step.
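The averaging step can be sketched in a few lines. The three "models" here are just hypothetical next-word probability distributions for a single output step; in a real ensemble each distribution would come from a separately trained network.

```python
def ensemble_step(distributions):
    """Average the next-word probabilities assigned by several models.
    Each distribution is a dict mapping word -> probability."""
    vocab = set().union(*distributions)
    n = len(distributions)
    return {w: sum(d.get(w, 0.0) for d in distributions) / n for w in vocab}

# Hypothetical outputs of three models at one output position.
model_a = {"house": 0.6, "home": 0.3, "building": 0.1}
model_b = {"house": 0.2, "home": 0.7, "building": 0.1}
model_c = {"house": 0.5, "home": 0.4, "building": 0.1}

averaged = ensemble_step([model_a, model_b, model_c])
best_word = max(averaged, key=averaged.get)
print(best_word, round(averaged[best_word], 3))
```

Note that two of the three models prefer "house", yet the averaged distribution prefers "home" because one model is very confident. Averaging probabilities is therefore not the same as majority voting.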

9.2.8 Out of vocabulary words

Out of vocabulary (OOV) words are rare words, technical terms, and named entities that may be present in the test set (or real-world) but are not present in the training set. These present difficulties for training neural machine translation systems. Even if rare words are present in the training set, neural machine translation systems typically limit their output vocabularies to the 30,000 to 80,000 most frequent words in each language because training time and computational resources increase with the size of the vocabulary.

Rare words, technical terms, and named entities, even when present in the training set, may not appear frequently enough in the training data to make the 30,000 to 80,000 word cutoff. Moreover, if they do occur, they will occur infrequently and the learning algorithm will not have much opportunity to learn anything significant about the word.

According to Zipf’s Law, the most frequent word in a language will occur twice as often in texts as the second most frequent and will occur about 80,000 times for each occurrence of the 80,000th most frequent word.
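The arithmetic behind this statement is straightforward if we take the idealized form of Zipf’s Law, in which a word’s frequency is inversely proportional to its rank. The corpus size below is a made-up number used only to show how few examples a rare word would get.

```python
def expected_occurrences(rank, top_word_count):
    """Idealized Zipf's Law: frequency is proportional to 1 / rank."""
    return top_word_count / rank

# Suppose (hypothetically) the most frequent word occurs 1,000,000 times.
top = 1_000_000
for rank in (1, 2, 1000, 80_000):
    print(rank, expected_occurrences(rank, top))
```

Under this assumption, the 80,000th most frequent word would appear only about a dozen times in a corpus where the most frequent word appears a million times, which leaves very little evidence from which to learn its translation.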

Morphology is another complicating factor. As Chung et al (2016) point out, an output vocabulary for an English corpus could easily contain the words “run”, “runs”, “ran”, and “running” as separate entries despite the fact that they share a common lexeme.

This issue is compounded in highly inflected languages with numerous morphemes and in languages with numerous compound words, like German. Sennrich et al (2016) offer the example of the German word Abwasser|behandlungs|anlage, which essentially means ‘sewage water treatment plant’.

9.2.8.1 Copying out-of-vocabulary words

One approach is to simply copy the source language word to the target language. This works well for named entities but fails if the target language word is different or has a different alphabet. It is also problematic for compound words where one word is translated into multiple words or vice versa.

9.2.8.2 Dictionary back-off

A group of Google researchers (Luong et al, 2015) proposed a clever solution: They augmented the training of the neural machine translation by creating a dictionary with word pair combinations derived from running a word alignment algorithm. In a post-processing step, they replace unknown words with the dictionary entry. If no word pair is available, the source word is copied.
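The post-processing step can be sketched as follows. This is an illustration of the idea rather than the authors’ implementation: the alignment between unknown output tokens and source positions is assumed to be given, and the sentence, dictionary, and `<unk>` token name are made up.

```python
def replace_unknowns(target_tokens, source_tokens, alignment, dictionary, unk="<unk>"):
    """Replace each unknown output token with the dictionary translation of
    its aligned source word, or copy the source word if there is no entry.

    alignment maps target position -> source position (assumed to be given).
    """
    output = []
    for j, token in enumerate(target_tokens):
        if token != unk:
            output.append(token)
            continue
        source_word = source_tokens[alignment[j]]
        output.append(dictionary.get(source_word, source_word))
    return output

source = ["Dr.", "Okonkwo", "studies", "nephrology"]
target = ["Dr.", "<unk>", "étudie", "la", "<unk>"]
alignment = {1: 1, 4: 3}                      # hypothetical alignment
dictionary = {"nephrology": "néphrologie"}    # hypothetical dictionary entry

print(" ".join(replace_unknowns(target, source, alignment, dictionary)))
```

The name is copied because it has no dictionary entry, while the technical term is looked up. Both behaviors depend on the alignment being correct.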

A group of Montreal researchers (Jean et al, 2015) improved on this method by using the alignments discovered by the attention mechanism to identify which source word should be looked up in the dictionary. Although these dictionary back-off models were quite successful, their rare word processing still had limitations. Most importantly, if the unknown word was not in the training set, it won’t be in the dictionary.

9.2.8.3 Subword approaches

The earlier neural machine translation models were mostly word-based rather than character-based because the resulting sequences are much shorter and it’s much easier for a neural machine translation system to learn short-range dependencies than it is to learn long-range dependencies. However, word-based approaches struggle with out of vocabulary words. As a result, several subword approaches have been developed:

Byte pair encoding: University of Edinburgh researchers (Sennrich et al, 2015) created a subword dictionary by breaking the training corpus into a sequence of tokens using a compression technique known as byte pair encoding (BPE). Initially the tokens are the individual characters plus a special character to mark the end of words. Then tokens that occur together frequently are merged to create a new token, and this process is repeated until the set of tokens reaches a predetermined size (e.g. 30,000).
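The merge procedure can be sketched in a few lines of Python. This is a simplified illustration of the idea, not the authors’ released code: it works on a tiny made-up word-frequency table, uses `</w>` as the end-of-word marker, and stops after a fixed number of merges rather than at a target vocabulary size.

```python
from collections import Counter

def merge_pair(symbols, pair):
    """Replace every adjacent occurrence of pair in symbols with one merged symbol."""
    merged, i = [], 0
    while i < len(symbols):
        if i + 1 < len(symbols) and (symbols[i], symbols[i + 1]) == pair:
            merged.append(symbols[i] + symbols[i + 1])
            i += 2
        else:
            merged.append(symbols[i])
            i += 1
    return tuple(merged)

def learn_bpe(word_counts, num_merges):
    """Learn BPE merge operations from a word -> frequency table."""
    # Start from individual characters plus an end-of-word marker.
    vocab = {tuple(word) + ("</w>",): count for word, count in word_counts.items()}
    merges = []
    for _ in range(num_merges):
        pair_counts = Counter()
        for symbols, count in vocab.items():
            for pair in zip(symbols, symbols[1:]):
                pair_counts[pair] += count
        if not pair_counts:
            break
        best = max(pair_counts, key=pair_counts.get)  # most frequent adjacent pair
        merges.append(best)
        vocab = {merge_pair(symbols, best): count for symbols, count in vocab.items()}
    return merges, vocab

word_counts = {"low": 5, "lower": 2, "newest": 6, "widest": 3}
merges, vocab = learn_bpe(word_counts, num_merges=4)
print(merges)
print(list(vocab))
```

On this toy table the frequent suffix "est" plus the end-of-word marker becomes a single token after three merges. A new word such as "lowest" could then be represented from known pieces even though it never appeared in the training data.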

There are several advantages of this technique for machine translation and also for large language models. First, it captures rare words (e.g. entity names) that aren’t included in the source or target language vocabulary. Second, it caps the size of the input and output vectors which is computationally advantageous.

One interesting aspect of this process is that common words will get re-constituted as new tokens. The Edinburgh researchers found that a neural machine translation model with BPE tokens as inputs outperformed the same model with word inputs.

Character-based neural machine translation: Researchers have also studied neural machine translation algorithms that take characters instead of words as input. The primary difficulty with character models is that it is much harder to learn long-term dependencies. A sentence of 8 words might have 48 characters resulting in more and longer-term dependencies to learn.

Chung et al (2016) note two additional advantages of character-level models: First, the system can potentially learn to process morphological variants of words not seen in training. Second, they do not require segmentation or tokenization. Instead, a character-based encoder considers all possible multi-character patterns and selects only the most relevant patterns.

Seoul National University researchers (Lee et al, 2017) note three challenges for character-to-character neural machine translation systems:

- The computational complexity of the attention mechanism grows quadratically with respect to the sentence length because it needs to attend to every source token for every target token.

- It is hard enough to extract meaning from word inputs because there are so many senses for each word. It is much harder to do this from character inputs.

- Long-range dependencies are more difficult with character inputs simply because they are 5-6 times longer on average than word inputs.

Ling et al (2015) proposed, instead of using words as the input layer, using an LSTM network to convert the characters in each word into word representations which then serve as the initial hidden layer of the encoder. The advantage is that morphological variants are encoded together rather than separately. Similarly, on the decoder side, an LSTM network converts characters to words.

Luong and Manning (2016) trained a word-based encoder-decoder in parallel with a character-based encoder-decoder but used the character-based network only for rare words. This led to a state-of-the-art BLEU score of 20.7 for the WMT 2015 English to Czech translation task. However, the computation time required for the character-to-character network was prohibitive for the reasons outlined by Lee et al (2017) and discussed above.

Chung et al (2016) compared a neural machine translation encoder-decoder system that used Sennrich et al BPE subwords for both the encoder and decoder to a neural machine translation system that used BPE subwords for the encoder but used character-level decoding and found that character-level decoding improved performance.

Lee et al (2017) designed an encoder to overcome the shortcomings they noted, which were discussed above. The encoder uses a convolutional layer followed by a max pooling layer and then a highway layer to dramatically shorten the input length and to capture local patterns. The result is then passed through a bidirectional GRU-based recurrent layer to help capture long-range dependencies. The max pooling layer is responsible for learning which character sequences are important. They found that the character-to-character system outperformed both a subword-to-subword and a subword-to-character system (all subword systems were BPE-based).

Google Wordpieces: As mentioned above, Google neural machine translation uses wordpieces, which are sub-word units that are in between characters and words. The wordpiece segmentation algorithm leaves common words intact and splits up rare words. This enables Google neural machine translation to function with a much smaller vocabulary (fewer than 40,000 wordpieces per language) than would otherwise be necessary. The result is a nice balance between the flexibility of character models and the efficiency of word models.

Consider, for example, the sentence

_J et _makers _fe ud _over _seat _width _with _big _orders _at _stake

The underscore symbol indicates a new word. In this example, the word “jet” is broken into two wordpieces “_J” and “et”. This scheme enables easy reconstruction of the words by simply first removing spaces and then transforming the underscores into spaces. The wordpieces scheme enables Google neural machine translation to handle rare words more naturally because most will be broken into wordpieces. Wu et al (2016) compared wordpiece-based translation to both word- and character-based translation and found that the wordpiece-based system added about one BLEU point to the translation capability of the Google neural machine translation system.
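The reconstruction rule described above (remove the spaces, then turn the underscores into spaces) can be written directly. This sketch assumes the plain underscore shown in the example is the word-boundary marker and that no literal underscores occur in the text.

```python
def wordpieces_to_text(wordpiece_string):
    """Rebuild the original sentence from a space-separated wordpiece sequence."""
    no_spaces = wordpiece_string.replace(" ", "")   # step 1: remove spaces
    with_spaces = no_spaces.replace("_", " ")       # step 2: underscores -> spaces
    return with_spaces.strip()                      # drop the leading space

pieces = "_J et _makers _fe ud _over _seat _width _with _big _orders _at _stake"
print(wordpieces_to_text(pieces))
```

Because the word boundaries are carried by the markers themselves, no separate detokenization table is needed to recover the sentence.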

The neural machine translation paradigm has become the dominant paradigm for machine translation. A team of Microsoft researchers (Hassan et al, 2018) developed an enhanced neural machine translation system that achieved parity with human translators on Chinese to English translation and exceeded the translation performance of crowdsourced translators.

9.2.9 Initialization of machine translation networks with language models

Google Brain researchers (Ramachandran et al, 2017) found that initializing both the neural machine translation encoder and decoder using pre-trained language models significantly improved neural machine translation performance.

9.2.10 Challenges for neural machine translation systems

Koehn and Knowles (2017) analyzed the state of the art of neural machine translation systems compared to the older phrase-based machine translation systems and found seven significant challenges for neural machine translation systems:

- Neural machine translation systems are trained on a specific domain represented by the training dataset (e.g. medical). A neural machine translation system trained on one domain does not perform well on new source language sentences from another domain (e.g. sports).

- Neural machine translation systems require more training data than phrase-based machine translation systems to perform well. As a result, for major languages, they outperform phrase-based machine translation systems. However, for low-resource language pairs, phrase-based machine translation systems do better. To understand why this is the case consider a set of neural machine translation translations of the following sentence into Spanish:

A Republican strategy to counter the re-election of Obama

Using a 375,000 word parallel corpus, the English equivalent of the Spanish translation is:

A coordinating body for the announcement of free determination

Not a very useful translation. Using a 1.5 million word parallel corpus, the English equivalent is

Explosion performs a divisive strategy to fight against the author’s elections

Not much better. It’s not until a 12 million word parallel corpus is used that we finally get a reasonable translation:

A republican strategy to counteract Obama’s reelection

- Neural machine translation systems that use sub-words (e.g. wordpieces) do better than phrase-based machine translation systems (60% correct vs 53% correct) on previously unseen words but still don’t do well on highly inflected words (e.g. verb forms).

- Neural machine translation systems outperform phrase-based machine translation systems on sentences up to about 60 words in length but underperform on very long sentences.

- The neural machine translation attention mechanisms sometimes do not produce a correct word alignment.

- Beam search decoding only improves translation quality for narrow beams for both phrase-based machine translation and neural machine translation systems. The optimal beam size ranges from 4 (Czech—English) to 30 (English—Romanian).

- Neural machine translation systems are much less interpretable than phrase-based machine translation systems.

Additionally, misspellings and other types of noise dramatically reduce performance of NMT systems (Belinkov and Bisk, 2018).

9.2.11 Transformer-based machine translation

Transformer architectures have taken over from the recurrent NMT architectures described above in both machine translation research and practice. The Google Translate team started using transformers in place of the earlier NMT system in 2020 (Caswell et al, 2020). Rather than training a large number of pairwise language translation systems, the transformer-based Google Translate now employs multilingual training. All languages are trained simultaneously and each input is marked with a language token (Manning et al, 2022).
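As a rough illustration of what marking an input with a language token can look like, the sketch below prepends a token naming the desired target language to each source sentence, so that one model can be trained on all pairs at once. The `<2xx>` token format and the example sentences are assumptions made for illustration; the source does not specify the exact format Google Translate uses.

```python
def tag_example(source_sentence, target_language):
    """Prepend a (hypothetical) target-language token to the source sentence."""
    return f"<2{target_language}> {source_sentence}"

# Training pairs from different language directions share one dataset.
raw_pairs = [
    ("Where is the bus station?", "fr", "Où est l'arrêt de bus ?"),
    ("Where is the bus station?", "es", "¿Dónde está la estación de autobuses?"),
]
training_rows = [(tag_example(src, lang), tgt) for src, lang, tgt in raw_pairs]
for row in training_rows:
    print(row)
```

With this kind of setup, the same English sentence yields different training rows depending on the requested target language, and the model learns to condition its output on the token.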

9.3 Machine translation for low-resource languages

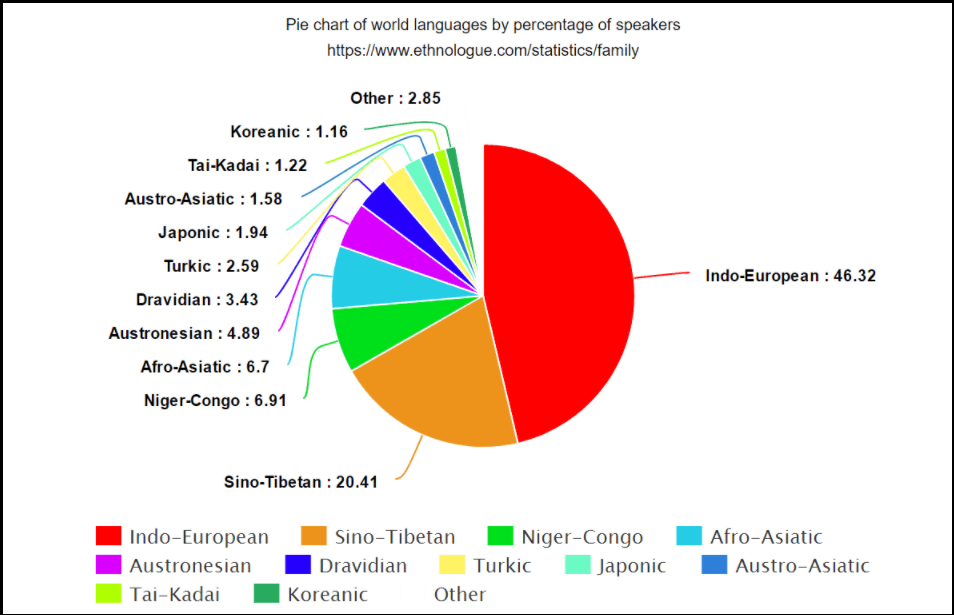

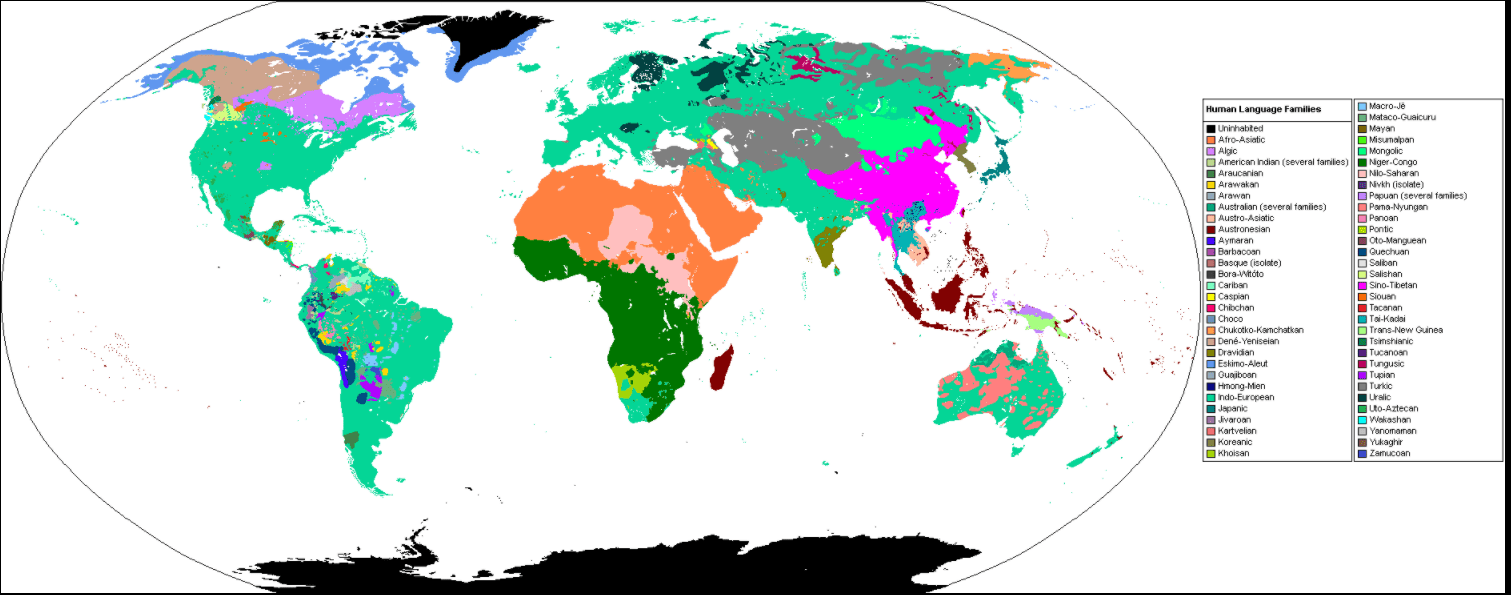

As discussed above, training a neural machine translation system requires a large body of parallel text. However, there are 7000+ languages in the world and over 40 million language pairs. Google Translate only supported 133 languages as of October 2023. In September 2019, it supported 104 languages. This is fairly slow progress toward a goal of providing translation capabilities for the entire world. The map below shows the geographical pattern of the major languages of the world:

Source: Wikipedia.

The ethnologue.com website (Eberhard et al, 2022) lists 7151 languages in the world as of May 2022. The definition of what constitutes a language (as opposed to a dialect of a language) is not straightforward. However, the publisher of ethnologue.com has pushed through an international standard, ISO 639-3, that defines the following three criteria:

- Two related varieties are normally considered varieties of the same language if speakers of each variety have an inherent understanding of the other variety at a functional level (that is, can understand based on knowledge of their own variety without needing to learn the other variety).

- Where spoken intelligibility between varieties is marginal, the existence of a common literature or of a common ethnolinguistic identity with a central variety that both understand can be a strong indicator that they should nevertheless be considered varieties of the same language.

- Where there is enough intelligibility between varieties to enable communication, the existence of long-standing distinctly named ethnolinguistic identities coupled with well-developed standardization and literature that are distinct can be treated as an indicator that they should nevertheless be considered to be different languages.

These 7000+ languages can be broken down into 147 language families (ethnologue.com):

The largest language family is Indo-European languages which has almost 3B speakers and 437 languages. Indo-European languages include Spanish, English, Hindi-Urdu, and Russian. The second-largest language family is Sino-Tibetan languages which has approximately 1.25B speakers and 453 languages of which Chinese has by far the greatest number of speakers. The Niger-Congo family has the most languages (1524):

Source: Wikipedia.

If 7000+ languages is not enough of a headache for systems that need to process natural language, many languages have multiple dialects that differ both in pronunciation and in the actual words used by speakers. Each language also has a different number of words, and there is not even agreement on how many words are in each language. Estimates of the number of words in English range from 171,000+ words in the Oxford Dictionary to over 1 million words according to The Global Language Monitor. According to The Economist, a typical English-speaking adult knows 20,000 to 35,000 words. Eight-year-old children know 10,000 words. Four-year-old children know 5,000 words.

Google Translate is a great resource for world travelers. I can speak or type almost anything and get a usable translation. However, as of October 2023, Google Translate supports only 133 languages. What about the other 6900+ languages? What if I’m in a part of the world where one of these other languages is spoken? More importantly, how do people whose primary language is one of these languages communicate, search the web, and so on?

9.3.1 Characteristics of low-resource languages

The development of natural language technology depends on having available large numbers of language resources such as text and speech. These language resources include corpora of labeled text, large bodies of unlabeled text (e.g. internet articles and posts), and tools such as tokenizers, parsers, morphological analyzers, and named entity recognizers. High-resource languages have massive amounts of data and readily available tools for processing it. The distinction between high-resource and low-resource languages should really be seen as a continuum. English and Mandarin Chinese have the highest numbers of resources. Languages like Arabic, French, German, Portuguese, Spanish, Japanese, Finnish, Italian, Dutch, and Czech all have adequate resources. Other languages have fewer resources.

One specific characteristic of low-resource languages is that they have little presence on the internet. For example, Wikipedia has documents in only 298 languages. That means there are over 6800 languages without any Wikipedia articles. Google researchers (Bapna et al, 2022) found sentences in 1586 languages on the internet. However, some had as few as one sentence. They found 1140 languages with at least 25,000 sentences.

Unfortunately, the vast majority of NLP research uses high-resource languages. NLP systems have relatively little exposure to the typological variations that are unique to low-resource languages. Advances in NLP technology therefore tend to be specific to high-resource languages (Joshi et al, 2020). Low-resource languages tend to lack a number of categories of linguistic resources (Hedderich et al, 2021) including:

- Availability of task-specific labels to facilitate supervised learning

- Availability of unlabeled language- or domain-specific text

- Availability of auxiliary data such as gazetteers

One major issue for machine translation systems is that there is substantial bitext (parallel corpora with word-level alignment) for only a few hundred languages. As a result, the vast majority of language pairs do not have a sufficient body of parallel text to enable successful supervised training (Lopez and Post, 2013). Probably the largest parallel sentence dataset was created by Google researchers (Bapna et al, 2022). The Google dataset contains 25 billion sentence pairs. However, it only spans 112 languages.

9.3.2 Multilingual machine translation

Training bilingual systems on language pairs that have little available data will generally result in poor translations. One aspect of multilingual translation research is to determine if some of the translation capability of high-resource languages can be transferred to low-resource languages.

Multilingual translation is also a critical capability for commercial providers of machine translation because training a separate model for every language pair in each direction can be very expensive. For 100 languages, there are 9,900 directed language pairs to be trained. For 7000 languages, there are about 49 million.
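The pair counts follow from simple arithmetic: with n languages, each language can be translated into each of the other n − 1 languages, giving n × (n − 1) directed pairs. That is 9,900 for 100 languages (roughly 10,000) and just under 49 million for 7000 languages. The short sketch below only illustrates this calculation; the function name is made up for the example.

```python
def directed_pairs(n_languages: int) -> int:
    """Count ordered (source, target) pairs, excluding a language paired with itself."""
    return n_languages * (n_languages - 1)


for n in (100, 7000):
    # Each bilingual system covers one direction of one language pair.
    print(f"{n} languages -> {directed_pairs(n):,} bilingual systems")
```

A single multilingual model replaces this quadratic number of bilingual systems, which is why the approach is attractive commercially.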

Most of the recent progress on machine translation for low-resource languages has resulted from the development of multilingual models. These are models that are trained on parallel and monolingual corpora from multiple languages. Multilingual models learn a joint representation for both high- and low-resource languages and this benefits performance on the low-resource languages.

A group of Baidu researchers (Dong et al, 2015) looked at translating English into Spanish, French, Dutch, and Portuguese. They created individual models using a neural machine translation architecture for each language. They compared performance to training with a shared encoder and separate decoders and found better performance for all four output languages in the shared encoder architecture. They also simulated how this model would work for low-resource languages by running one version of the study using only 15% of the training sentences for two of the language pairs. They still found improvement in the multi-task architecture over the single-task versions. However, overall performance on the 15% language pairs was less than on the full language pairs.

It should also be noted that the reduced language pairs still had just under 300,000 sentence pairs, which is far more than many low-resource language pairs have, so one can’t conclude this would work for languages with, say, fewer than 1000 sentence pairs. Also, Spanish, French, and Portuguese are all Romance languages and, while Dutch is a Germanic language, it shares a character set and European roots with them. Many low-resource language pairs have none of these shared characteristics.

A group of Google researchers (Johnson et al, 2017) expanded on this research using the Google Neural Machine Translation (GNMT) system. However, rather than using separate decoders, all languages were run through the same model. The only change was that a token was prepended to each input sentence to specify the target language. The Google team studied several scenarios:

(1) Two input languages –> One output language

The model was trained with both German to English sentence pairs and French to English sentence pairs combined into one corpus. Using the industry-standard BLEU measure, they compared its translation results to those of one neural machine translation model trained separately on German to English and another trained separately on French to English.

Multi-language training actually improved BLEU scores slightly in this scenario, by up to 3.5%. One possible hypothesis explaining the gains is that the model has been shown more English data on the target side, and that the source languages belong to the same language families, so the model has learned useful generalizations.

(2) One input language –> Many output languages

The results varied here. Some output languages were very slightly better and some were very slightly worse. But performance was essentially equivalent to the scores on individual language pairs.

(3) Many input languages –> Many output languages

On this test, the average loss per language pair was about 2.5%.

Lee et al (2017) found that character-based multilingual models have an advantage over word- and subword-based models because those models require a separate word (or subword) vocabulary for each language. They found that character-based multilingual translation performed significantly better than character-based bilingual translation when translating from German, Russian, Czech and Finnish to English. (For Russian, the Cyrillic characters were first transliterated into Latin characters.)
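Returning to the single-model approach of Johnson et al (2017): the only change to the input is a token naming the target language. The sketch below illustrates that preprocessing step under stated assumptions. The token format ("<2es>" for Spanish) is modeled on the example in the paper, while the function names and the tiny corpus are hypothetical and not part of any real system.

```python
from typing import Iterable, List, Tuple


def add_target_token(source_sentence: str, target_lang: str) -> str:
    """Prepend a token that tells the model which language to produce."""
    return f"<2{target_lang}> {source_sentence}"


def build_mixed_corpus(
    examples: Iterable[Tuple[str, str, str]]
) -> List[Tuple[str, str]]:
    """Turn (source, target, target_lang) triples into one shared training corpus."""
    return [(add_target_token(src, lang), tgt) for src, tgt, lang in examples]


# Hypothetical examples: two target languages trained through the same model.
corpus = build_mixed_corpus([
    ("Hello", "Hola", "es"),
    ("Hello", "Bonjour", "fr"),
])
for model_input, expected_output in corpus:
    print(model_input, "=>", expected_output)
```

Because the target language is part of the input, the model architecture and vocabulary can stay the same no matter how many languages are mixed into the corpus.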

A group of Carnegie Mellon researchers (Neubig and Hu, 2018) showed that multilingual training on a broad set of high-resource languages resulted in a model that could be used to initialize a network for fine-tuning on low-resource languages with only a small amount of training data available.

Another set of Google researchers (Arivazhagan et al, 2019) studied a system that learned to translate among 103 languages, where each language pair constituted two tasks (one for each direction), as a means of creating translation systems for low-resource languages. They found that when the training set contained as many low-resource examples as high-resource examples, low-resource language translation was improved but high-resource translation was significantly impaired. When examples were sampled in proportion to their occurrence for each language in the training set, performance for the low-resource languages was poor but the high-resource languages were not impaired.
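One common way to move between these two extremes is temperature-based sampling, in which each language is sampled with probability proportional to its corpus size raised to the power 1/T. The sketch below is an illustration of that idea rather than the authors' exact procedure; the corpus sizes and function name are invented for the example. With T = 1 sampling is proportional to the data, and as T grows the distribution approaches equal sampling across languages.

```python
from typing import Dict


def sampling_probs(sizes: Dict[str, int], temperature: float) -> Dict[str, float]:
    """Return per-language sampling probabilities proportional to size ** (1 / T)."""
    if temperature <= 0:
        raise ValueError("temperature must be positive")
    weights = {lang: count ** (1.0 / temperature) for lang, count in sizes.items()}
    total = sum(weights.values())
    return {lang: weight / total for lang, weight in weights.items()}


# Hypothetical corpus sizes: one high-resource and one low-resource language.
corpus_sizes = {"fr": 1_000_000, "yo": 1_000}

proportional = sampling_probs(corpus_sizes, temperature=1.0)    # mirrors the data
near_uniform = sampling_probs(corpus_sizes, temperature=100.0)  # close to 50/50

print("T=1:  ", proportional)
print("T=100:", near_uniform)
```

Intermediate temperatures trade some high-resource quality for better low-resource coverage, which is the tension described above.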

As part of its No Language Left Behind project (Costa-jussà et al, 2022), Meta has developed a system that can translate among 200 languages. Moreover, Meta has open-sourced the code for this model to encourage faster progress.

Another group of researchers (Xu et al, 2020) showed that transfer to low-resource languages can be effected by adding bilingual dictionaries to the training. No text in the low-resource languages, aside from the dictionaries, was required.

Multilingual models have also become the basis for cross-lingual machine translation in which models are trained on high-resource languages and used to effect translation into low-resource languages with zero or just a few training examples in those languages.

Google researchers (Bapna et al, 2022) investigated zero-shot machine translation, i.e. learning to translate languages using only monolingual text (no parallel corpora). They developed a machine translation system for 1000 languages based on bitext for 112 languages and only monolingual text for the other languages. The results were encouraging but not good enough to use in Google Translate. They found several types of weaknesses in these models:

- They often confused distributionally similar words and concepts, e.g. “tiger” became a “miniature crocodile”

- Translation efficacy decreased for less frequent tokens

- Inadequate translations of short or single-word input

- A tendency to magnify biases present in the training data

One big issue for zero-shot translation is that models struggle with the different word orders of different languages and tend to produce an output word order that mirrors the input language word order instead of the target language word order. Meta researchers (Liu et al, 2021) found that by making a tweak to the NMT architecture (removing residual connections), they were able to overcome this issue and dramatically improve zero-shot performance.

Cross-lingual transfer has also become the go-to methodology for creating multilingual task-oriented dialogue systems (Razumovskaia et al, 2022). Amazon’s AlexaTM model (Fitzgerald et al, 2022) used an encoder-decoder sequence-to-sequence model to achieve state-of-the-art performance in multilingual few-shot machine translation and summarization.

Multilingual models have made great progress in a relatively short period of time. In 2021, a multilingual model from Meta (Tran et al, 2021) beat bilingual models for the first time in the annual Workshop on Machine Translation conference challenge.

For an in-depth survey of multilingual machine translation, see Doddapaneni et al (2021). See also this Microsoft survey (Wang et al, 2021) of approaches to neural machine translation for low-resource languages and this survey of all types of approaches (Hedderich et al, 2021).

9.3.3 Machine translation using pivot languages

When a translation model is needed for a language pair that doesn’t have a large corpus of parallel text, one alternative is to bridge the translation using two language pairs that do have large corpora of parallel text. For example, if there is not enough parallel text for Spanish to Turkish, one can use a model that first translates from Spanish to English and then feeds the output into a model that translates English to Turkish.
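The bridging idea amounts to composing two translators. The sketch below shows that composition, assuming each translator is simply a function from text to text. The toy word-lookup translators stand in for real trained models and are purely hypothetical.

```python
from typing import Callable

Translator = Callable[[str], str]


def make_pivot_translator(src_to_pivot: Translator, pivot_to_tgt: Translator) -> Translator:
    """Chain two translators through a shared pivot language."""
    def translate(text: str) -> str:
        pivot_text = src_to_pivot(text)   # e.g. Spanish -> English
        return pivot_to_tgt(pivot_text)   # e.g. English -> Turkish
    return translate


# Toy stand-ins for trained models (single-word lookups only).
def toy_es_to_en(text: str) -> str:
    return {"gato": "cat"}.get(text, text)


def toy_en_to_tr(text: str) -> str:
    return {"cat": "kedi"}.get(text, text)


es_to_tr = make_pivot_translator(toy_es_to_en, toy_en_to_tr)
print(es_to_tr("gato"))
```

Any error made by the first translator is passed unchanged to the second, which has no access to the original sentence.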

There are two primary issues with this method: First, for many low-resource languages, there won’t exist enough parallel text to build the bridge.

Second, there can be a loss of fidelity in the resulting translations because translation issues for the single language pairs are magnified (Ozdowska and Way, 2009). To see why this happens, consider translating from English to German and back to English. Consider one English word, “hit”. This word has 25 possible German translations (angriff, anfahren, anschlagen, anstoßen, aufschlagen, brüller, erfolg, erzielen, erreichen, glückstreffer, geschlagen, getroffen, hauen, hinschlagen, knüller, kloppen, prallen, rammen, schlag, seitenhieb, stich, stoßen, schlagen, treffer, verbimsen). However, if one takes each of these 25 words and looks up its possible English translations, one finds 224 English words. Each translation step therefore multiplies the number of candidate meanings, and a wrong choice in the first step carries into the second.
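The fan-out can be reproduced on a small scale. The sketch below uses a tiny hand-made dictionary (three of the German words listed above, each with a few plausible English glosses) rather than the full 25-word and 224-word data, so the entries and counts are illustrative assumptions only.

```python
from typing import Dict, List, Set

# Toy dictionaries: a small, hand-picked subset for illustration.
EN_TO_DE: Dict[str, List[str]] = {
    "hit": ["schlagen", "treffer", "erfolg"],
}
DE_TO_EN: Dict[str, List[str]] = {
    "schlagen": ["hit", "beat", "strike"],
    "treffer": ["hit", "goal", "match"],
    "erfolg": ["success", "hit", "achievement"],
}


def round_trip(word: str) -> Set[str]:
    """Collect every English word reachable via English -> German -> English."""
    candidates: Set[str] = set()
    for german in EN_TO_DE.get(word, []):
        candidates.update(DE_TO_EN.get(german, []))
    return candidates


back = round_trip("hit")
print(len(EN_TO_DE["hit"]), "German candidates ->", len(back), "English candidates")
print(sorted(back))
```

Even with three German candidates, the round trip yields seven distinct English words, most of which are not synonyms of the original.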

The Google research described earlier (Johnson et al, 2017) showed how using the Google neural machine translation architecture can dramatically improve the pivot language approach. The Google team trained the neural machine translation system separately on Portuguese to English and English to Spanish. They then created a bridge from Portuguese to Spanish by running Portuguese sentences through the first translator to get English output and then feeding the English into the Spanish translator. They compared the results to machine translation systems that directly learned to translate Portuguese to Spanish.

The neural machine translation bridged model showed only a 2% loss compared to the neural machine translation direct Portuguese to Spanish model. This is not surprising since the individual neural machine translation models perform so well. The loss was even less when they trained the model with Portuguese to English, English to Portuguese, English to Spanish, and Spanish to English. However, when they tried a less related language, Japanese, there was a significant loss in accuracy.

© aiperspectives.com, 2020. Unauthorized use and/or duplication of this material without express and written permission from this site’s owner is strictly prohibited. Excerpts and links may be used, provided that full and clear credit is given with appropriate and specific direction to the original content.