Will Self-Driving Cars Really Prevent Accidents?

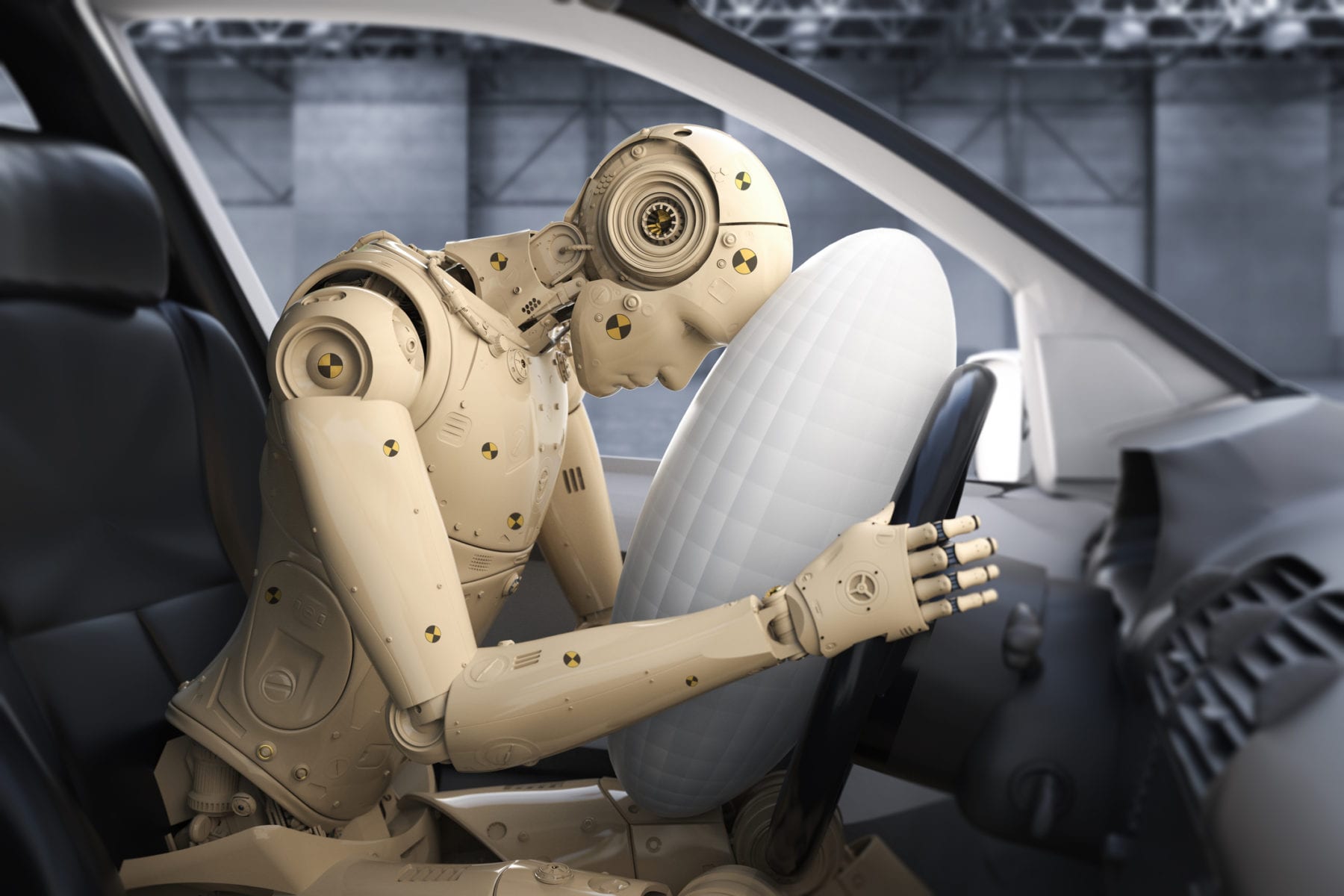

Photo: PhonlamaiPhoto / iStockPhoto


The NHTSA website’s section on self-driving vehicles starts off with some compelling statistics:

- 36,560 people killed in motor vehicle accidents in the US in 2018

- 90% of motor vehicle accidents are caused by driver error

Moreover, according to the website, today’s driver assistance technologies such as emergency braking and pedestrian detection are already saving lives. And I absolutely believe this is true. But where I part company with the NHTSA is the conclusion that we should therefore throw caution to the wind and allow fully autonomous (i.e., driverless) vehicles onto our streets and highways without adequate testing.

Data Fundamentalism

There is a psychological phenomenon known as data fundamentalism, a term coined by MIT professor Kate Crawford, who studies the impact of AI on society. Data fundamentalism refers to the tendency to assume that computers speak the truth. When I was a postdoc at Yale, one of the professors took a group of students to Belmont Park to watch thoroughbred racing. Before the first race, he stood in front of the bleachers and gave a lecture on handicapping. Of course, all the New York pundits in the stands rolled their eyes and elbowed each other: What does this guy know about handicapping? However, when the professor pulled out some notes written on green-and-white computer printout paper (this was back in 1979), the pundits stopped laughing and crowded around to hear what he had to say. The point is that people often assume computers are always right. In the case of self-driving vehicles, this could be a fatal error. Aside from this tendency, there are very good reasons to question the assumption that self-driving cars will prevent accidents.

Edge Cases

In 2009, Captain Sully Sullenberger had just piloted his plane into the air when a flock of Canada geese took out the engines. The plane was only 2,900 feet above the ground, and Sullenberger and his copilot had only a few minutes to maneuver before the plane hit the ground. They had received no training on this specific scenario; they could only apply a few basic rules and common sense. To decide the best course of action, they factored in the likelihood of their passengers surviving various crash alternatives, the likelihood of injuring people on the ground, where rescue vehicles would be quickly available, and many other factors. Then they heroically landed in the Hudson River. All 155 people on board survived, and no one on the ground was hurt.

Pilots receive extensive training, but it is impossible to train them for every possible situation. For those edge cases—situations similar to but not exactly like their training—they must use their commonsense knowledge and reasoning capabilities. The same is true for automobile drivers. A Clearwater, Florida, high school student noticed a woman having a seizure while her car was moving. The student pulled her car in front of the woman’s car and stopped it with no injuries and only minor bumper damage.

Most of us have encountered unexpected phenomena while driving: A deer darts onto the highway. A flood makes the road difficult or impossible to navigate. A tree falls and blocks the road. The car approaches the scene of an accident or a construction zone. A boulder falls onto a mountain road. A section of new asphalt has no lines. You notice or suspect black ice. The car might fishtail when you try to get up an icy hill. We all have our stories. We do not learn about all these possible edge cases in driving school. Instead, we use our commonsense reasoning skills to predict actions and outcomes. If we hear an ice cream truck in a neighborhood, we know to look out for children running toward the truck. When the temperature is below 32 degrees, there is precipitation on the road, and we are going down a hill, we know that we need to drive very slowly. We change our driving behavior when we see the car in front of us swerving, knowing that the driver might be intoxicated or texting. If a deer crosses the road, we are on the lookout for another deer, because our commonsense knowledge tells us they travel as families. We know to keep a safe distance and handle passing a vehicle with extra care when we see a truck with an “extra wide load” sign on the back. When we see a ball bounce into the street, we slow down because a child might run into the street to chase it. If we see a large piece of paper on the road, we know we can drive over it, but if we see a large shredded tire, we know to stop or go around it. No one knows how to build commonsense reasoning into cars or into computers in general.

Because autonomous vehicles lack the commonsense reasoning capabilities to handle these unanticipated situations, their manufacturers have only two choices. They can try to collect data on human encounters with rare phenomena and use machine learning to build systems that can learn how to handle each of them individually. Or they can try to anticipate every possible scenario and create a conventional program that takes as input vision system identification of these phenomena and tells the car what to do in each situation. It will be difficult, if not impossible, for manufacturers to anticipate every edge case. It may be possible for slow-moving shuttles on corporate campuses, but it is hard to imagine for self-driving consumer vehicles. If cars cannot perform commonsense reasoning to handle all these edge cases, how can they even drive as well as humans? How many accidents will this cause?
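To make the second choice concrete, here is a minimal sketch in Python of what such a conventional program might look like. Everything in it is hypothetical: the object labels, the action names, and the `choose_action` function are invented for illustration and do not come from any real vehicle software. The point is only that a hand-written table can cover the cases someone thought of in advance, and everything else falls through to a single default.

```python
from typing import Dict

# Hypothetical labels that a vision system might emit, mapped to
# hypothetical actions. None of these names come from a real vehicle stack.
RULES: Dict[str, str] = {
    "paper_on_road": "continue",
    "shredded_tire": "stop_or_steer_around",
    "ball_in_street": "slow_down",
    "deer_crossing": "slow_down",
}

# Assumed default for anything the programmers did not anticipate.
FALLBACK_ACTION = "stop"


def choose_action(detected_label: str) -> str:
    """Return the programmed action for a label, or the fallback if unknown."""
    return RULES.get(detected_label, FALLBACK_ACTION)


if __name__ == "__main__":
    for label in ["paper_on_road", "shredded_tire", "stalled_car_at_light"]:
        print(label, "->", choose_action(label))
```

In this sketch the unanticipated label `stalled_car_at_light` gets the same blanket response as any other unknown input. A human driver would reason about the specific situation; the table cannot.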

Self-Driving Cars Do Not See Like People

Computer vision systems are prone to incorrect classifications, and they can be fooled in ways that people are not. For example, researchers showed that minor changes to a speed limit sign could cause a machine learning system to think the sign said 85 mph instead of 35 mph. Similarly, some Chinese hackers tricked Tesla’s Autopilot into changing lanes. In both cases, these minor changes fooled cars but did not fool people, and a bad actor might devise similar ways of confusing cars or trucks into driving off the road or into obstacles. The differences in how self-driving cars perceive the world lead to concerns far beyond hackers. For example, in real-world driving, many Tesla owners have reported that shadows, such as those of tree branches, are often treated by their cars as real objects. In the case of the Uber test car that killed a pedestrian, the car’s object recognition software first classified the pedestrian as an unknown object, then as a vehicle, and finally as a bicycle. I don’t know about you, but I would rather not be on the road as a pedestrian or a driver if vehicles cannot recognize pedestrians with 100 percent accuracy!

Safety vs. Traffic Jams

Accidents are just one concern. In early 2020, Moscow hosted a driverless vehicle competition. Shortly after it began, a vehicle stalled out at a traffic light. Human drivers would reason about this edge case and decide to just go around the stalled car. However, none of the driverless cars did that, and a three-hour traffic jam ensued. We do not want autonomous vehicles to crash, but we also do not want them to stop and block traffic every time they encounter an obstacle. The Insurance Institute for Highway Safety analyzed five thousand car accidents and found that if autonomous vehicles do not drive more slowly and cautiously than people, they will only prevent one-third of all crashes. If manufacturers program cars to drive more slowly, the result will be more cars on the road at any given point in time. This will increase the already too-high levels of congestion on many of our roads.

We Need Testing Not Assumptions

The NHTSA appears ready to just assume that self-driving vehicles are safe and allow them on the road without proof of their safety. And it’s not just the NHTSA. Florida statute 316.85 specifically allows the operation of autonomous vehicles and explicitly states that a driver does not need to pay attention to the road in a self-driving vehicle (e.g., the driver can watch movies). It also explicitly permits autonomous vehicle operation without a driver even present in the vehicle. And there are no requirements for manufacturers to pass safety tests beyond the safety requirements for non-self-driving cars. Whenever a car, truck, bus, or taxi company decides it is ready, it is free to test and sell driverless vehicles. I own a home in Florida, and this terrifies me. Many other states are also encouraging the rollout of self-driving vehicles without safety standards. I believe the NHTSA has fallen prey to data fundamentalism and is operating under the improper assumption that self-driving vehicles are safer than human drivers. This is likely to result in unnecessary accidents and traffic jams. Instead, the NHTSA should demand safety testing that proves these vehicles are in fact safer than human drivers.

Of course.

The main reason is that machines currently only process information. Humans do not. Humans take in information in various ways, but they naturally, and most often subconsciously, quickly convert (integrate) it into what most beings, including humans, naturally process: knowledge.

Common sense, consciousness, intelligence, and knowledge are all related and very poorly understood phenomena.

“Artificial Intelligence” (AI) combines two main concepts: Intelligence and Artificial. While it seems simple to understand that “Artificial” refers to implementing something in a machine or computer, there seem to be very few people, if any, who can define “Intelligence” in a precise, structured, and coherent enough way to make it possible to teach computers what to do and understand.

Until that can commonly be done, truly “smart” things cannot be built.

Automation can really be great, and Intelligence can use it properly, but automation and intelligence are two very different things. So are knowledge and information.

What is needed most now in this quest for effective artificial intelligence, common-sense, and consciousness, is not more implementations or faster computers. It is “Understanding”. Much more intellectual and scientific understanding of natural phenomena.