2.0 Overview

Supervised learning is responsible for most of the significant breakthroughs in AI, including machine translation, facial recognition, image classification, fraud detection, spam detection, and speech recognition.

Supervised learning systems learn to perform these tasks by analyzing tables of data and learning how to do one task from each table of data. If the same supervised learning system tries to learn to perform a task from a different table of data, it will forget everything it learned from the first table.

This is different from the way people learn. People learn through a variety of experiences, we learn how to do a lot of different things, and we can often apply what we learned from one experience to learn other things. Supervised learning is a type of machine learning. In 1997, Carnegie Mellon Professor Tom Mitchell defined machine learning as “the study of computer programs that improve automatically with experience“.

There are two other major types of machine learning, unsupervised learning, and reinforcement learning, which will be discussed in later chapters.

It is common knowledge that, to compute a Fahrenheit temperature value from a Celsius temperature value, one multiplies the Celsius value by 1.8 and then adds 32 degrees, i.e.

Fahrenheit = Celsius *1.8 + 32

This is an example of a function. Let’s call this the Fahrenheit function. The Fahrenheit function has one input variable, the Celsius value. It has one output value, the Fahrenheit value. The number 1.8 in this function will be referred to as the weight for that input variable and 32 as a constant. More complex functions will have many input variables and weights. Weights and constants are examples of parameters.

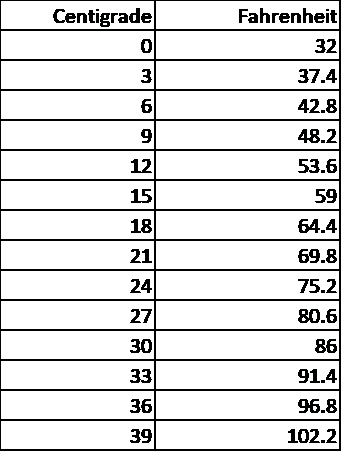

Suppose we have a table of data (i.e. a dataset) containing Fahrenheit and Celsius values. That is, each row in the data has a Fahrenheit value and its corresponding Celsius value. A portion of this dataset is shown below:

The columns are the input and output variables and the rows are the observations (also known as examples).

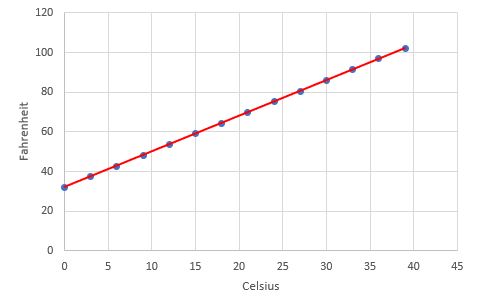

If we plot the dataset on a graph, all the data points in the dataset fall on a straight line in the graph as shown below:

The straight line in this graph is defined by the Fahrenheit function. The value of the output column for each row serves as a label for that row. The label holds the correct answer.

Now, suppose we didn’t know the Fahrenheit function and all we had was a table of data relating observed Fahrenheit values to Celsius values and that our job was to “learn” the function relating Fahrenheit to Celsius values. We could apply any one of a wide variety of supervised learning algorithms (see below) to compute this function from our table of observed values.

The learned function enables us to predict the Fahrenheit temperature for a given Celsius temperature that is not in the dataset. For example, if you look at the graph above, the Celsius value 22 is not in the dataset. But we can use the learned Fahrenheit function to predict that the Fahrenheit value will be 71.6.

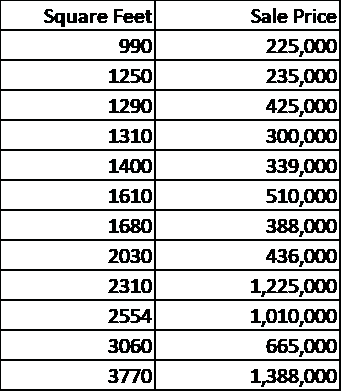

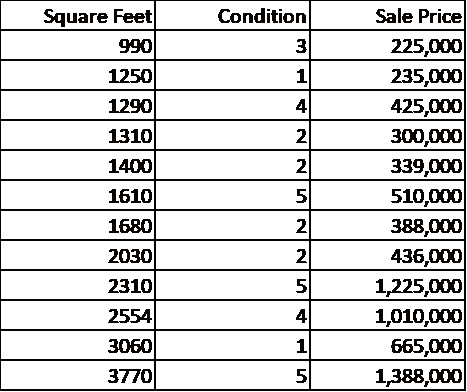

In the case of the temperature data, we already knew the function for Celsius to Fahrenheit conversion so we didn’t really have to learn it. But let’s take a slightly more complicated example where we don’t know the function in advance. For example, let’s look at a dataset composed of a random sample (i.e. a subset) of house sales in the Kings County area (which includes Seattle) from May 2014 to May 2015. The dataset contains the square footage and sale price of each house sold. A small portion of the dataset is shown below:

The full dataset is available on Kaggle.

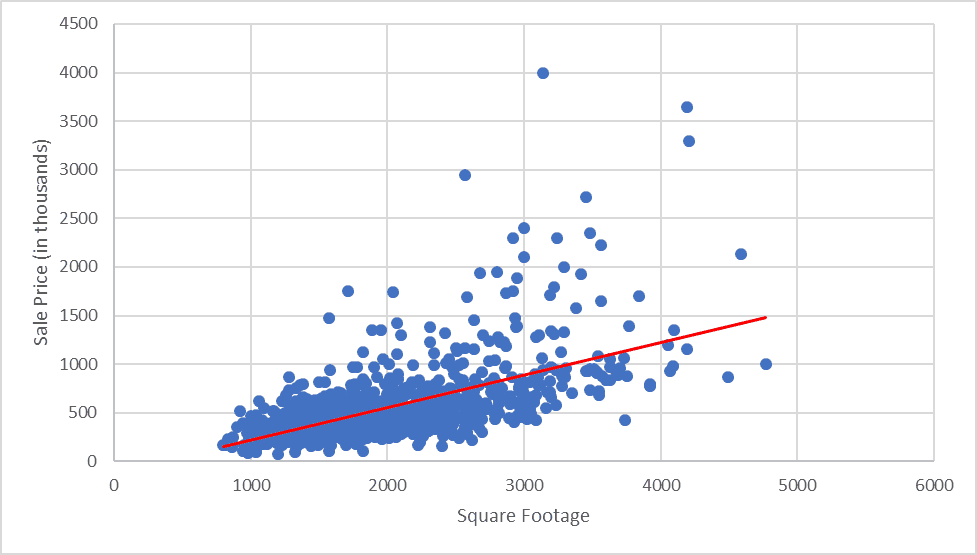

From this data, we can plot the sales on a graph as shown below:

In this dataset, we have one input variable (square footage) and one output variable (sale price). Mathematically, we can compute a function that defines the red line shown in the graph. The function that minimizes the average distance of each data point from the red line is

sale price = 29,283 * square footage – 63,164

This function provides a prediction of the house sale value given the square footage.

An algorithm is used to calculate the best-fitting line. An algorithm is simply a well-defined set of calculations (often instantiated in a computer program). What is interesting here is that, unlike our temperature example, the function is not a perfect predictor. For a given square footage, the actual data show a wide range of sale values. That isn’t surprising because there are many factors other than square footage that determine a house’s value. These factors might include the number of bathrooms, the proximity to water frontage, the school system rating, and many others.

Now, let’s look at a housing dataset with two input variables as shown below:

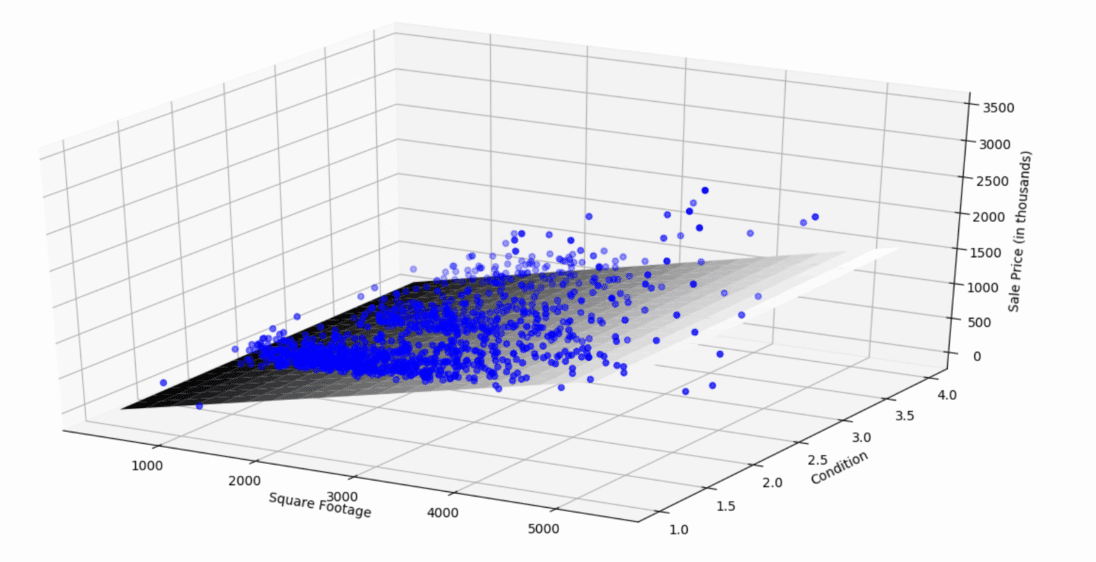

With two input variables, the function that best fits the data creates a plane and requires a 3-dimensional plot to visualize as shown below:

The plane that best fits the data is the one that minimizes the distances of the individual points from the plane and is defined by the function

Sale Price = 29,485 * Square Footage + 25,300 * Condition = 130,000

With three input variables, the function will define a hyperplane (rather than a plane) with four sides, each of which is a plane, though such an object is difficult to visualize. With more than three input variables, the resulting hyperplane is virtually impossible to visualize all at once. Both the two-variable and the three-variable functions are examples of linear functions. A linear function with two variables defines a line. A linear function with three variables defines a plane. A linear function with more than three variables defines a hyperplane. A function of two, three, or more variables that does not define a line, plane, or hyperplane is a non-linear function. When an underlying function is non-linear, visualization and function fitting become more complex.

This type of function is essentially what the online service Zillow uses, although the details of their process remain a secret. Zillow likely maintains a dataset containing one row for each house sale in the US. It also has columns for numerous house characteristics plus a column for the sale price. The Zillow team probably enters this table into a supervised learning algorithm that spits out a function relating the columns to the sale price. Zillow can then use this function to estimate the value of every house in the US.

Importantly, this does not mean that Zillow will necessarily make better sale price predictions that a human real estate agent. Despite the vast number of columns in the Zillow training table, a human agent might consider unique house features that are not in any of the Zillow columns. If these unique house features affect the selling price, the human real estate agent can theoretically make a better sale price prediction than Zillow.

In fact, in 2021, Zillow actually got into the home-buying business. They assumed that their algorithms could detect good values in homes. The reality: Zillow lost over a half billion dollars. We have to guess why because their data isn’t public. However, one can imagine that homeowners know many fact about their own house that aren’t in the Zillow dataset. Maybe their house is dark or there is evidence of water damage. These homeowners will gladly accept the price Zillow offers. In contrast, homeowners who own homes that they know will appeal to buyers are less likely to accept a low offer. So, Zillow probably ended up buying houses at higher cost than what real people would pay and ended up losing money as a result.

2.1 Models

In most cases, the input observations will only be a sample (usually a relatively small sample) of all the possible inputs. For the housing data, we were only looking at one year of data in a very small region. This makes it impossible to determine the true underlying function that defines the true data distribution. Instead, we compute the function that best fits the training data.

The learned function is typically instantiated in a computer program. These programs can be very small (e.g. as few as 5 lines of code). The programs typically make use of an external library of supervised learning algorithms via API calls.

As stated in the introduction, the goal of this reference material is to present machine learning concepts at a very high level without any complex mathematics. That said, many of the concepts are abstract and can sound quite complex. To bring them down to earth and make them more concrete, small snippets of code will be used to illustrate how the concepts are implemented. The coding language will be Python and the code presented will primarily be API calls to the scikit-learn library many machine learning algorithms themselves and to the Keras library (which is integrated with scikit-learn) for neural network algorithms. For those readers with a little bit of coding experience, the code should help clarify some of the concepts. For those readers with no coding experience, don’t be concerned about skipping the code discussions.

There are numerous supervised learning libraries from major vendors (e.g. Amazon, Microsoft, Google, IBM) and independent vendors and there are numerous open source libraries like scikit-learn. These programs take input values and predict the output value. These libraries contain many different supervised learning (and other types of machine learning) algorithms including neural networks that are used for deep learning.

Linear regression is a supervised learning algorithm that is often used to fit a linear function of two, three, or more variables to the data. Here is the sample source code for computing the temperature data using a linear regression algorithm:

If you want to predict the Fahrenheit values for a new set of Celsius values, the code is

If you want to determine how well the model fits either the training or test data, the code is

The .fit and .score methods also allow specification of an initial set of weights for the input variables. All learning classes in scikit-learn implement the .fit and .predict methods. Switching from one learning model to another can be as simple as swapping out one “model =” statement for another. The .score method allows the designation of a number of different scoring functions (e.g. mean squared error) or you can implement your own custom scoring function.

These programs will be referred to as models.

2.2 Assumptions

Each supervised learning algorithm carries with it certain assumptions about the data. Different algorithms require different assumptions. These assumptions include:

Linearity. This assumption specifies that all input variables have a linear relationship to the output variable (or to a transformation of the output variable such as the log of the output variable). To determine if the input variables have a linear relationship with the output variable, a scatter plot can be used. This is a plot of the data for each input variable vs the output variable and visually inspect the result to see whether or not the input variable has a linear relationship to the output variable. If the plot for an input variable is clearly not linear but has a recognizable shape, it may be transformable to a linear function. For example, if the function has a log shape, it can be transformed to a linear function with a log transformation. What this means is that, instead of using the input variable directly, one would use the log of the input variable instead. Other curvilinear trends may indicate that other transforms or a polynomial regression (see below) is required.

If outliers are present, i.e. individual data points that are so far from the rest of the data that they will skew the results, one can remove them. There is of course an inherent danger in removing outliers. They might not be just a bad data point in the training data. They might be found in test data and real-world data also. Detecting outliers can also be challenging. For datasets with a small number of variables, outlier detection can be performed by visual inspection of scatter plots. For high-dimensional datasets, algorithms are available to automatically detect outliers.

A second method for determining whether a linear relationship exists is by looking at plots of residual values. The residual values are the differences between the values predicted by the model and the actual values in the data.

If the true underlying model is non-linear, a plot of predicted values vs residuals will be non-linear. In a case like this, it may be better to move to a polynomial regression algorithm that results in a more linear plot of residuals.

Normal distribution. The relationship between each input variable and the output variable is a normal distribution. This can be determined by looking at a histogram. If the relationship is not normal, it is sometimes possible to transform the variable, often with a log transform. If the distribution deviates significantly from normal and cannot be transformed, it might be best to use a non-linear algorithm (see below).

Independence of input variables. The values of the input variables are not dependent on one another. This means there should be no correlation among input variables or any other type of dependency.

Independence and normal distribution of errors. The size of the errors around each input variable should be the same and they should be normally distributed.

Equal covariances. The covariances among the input variables are the same for all categories. You can think of the covariance between two input variables as more or less equivalent to the correlation between two input variables.

Unless you are working with a known relationship like our Fahrenheit/Celsius example, there is usually no way of determining with certainty that these assumptions are valid. It should also be noted that minor deviations from these assumptions won’t have a major effect on the usefulness of the model.

One can always compare the actual values in the training dataset to the values that are predicted by the learned function. One way to compare these values is to compute the sum of squared deviations divided by the number of observations. We square the differences to keep the magnitude but ignore the direction (positive vs negative) and divide by the number of observations to get a measure of performance known as a cost function.

This particular cost function is known as the Mean-Squared Error (MSE). It is a commonly used computation that measures how well the learned function (i.e. the model) fits the training data. If the normality assumptions are invalid, the MSE may be high. In general, linear models tend to have a low MSE if the assumptions are met and the ratio of the number of data points to the number of input variables is large.

Algorithms whose success depends on assumptions like these are termed parametric algorithms. Algorithms that make no assumptions about the underlying data are termed non-parametric algorithms. Parametric algorithms are easier to compute because we are estimating just a small number of parameters. The disadvantage is that parametric methods don’t work as well if data doesn’t conform to the assumptions about the underlying function.

2.3 Origin of the term “supervised learning”

If a software engineer writes a computer program that can add two numbers, the program performs the calculations using the exact instructions that were coded by the engineer. I will refer to coding exact instructions as conventional programming. Conventional programming implements an algorithm. We know precisely how a conventional program arrives at an answer because we (or someone we trust) hand-coded every step of the process.

In the 1980s, AI failed because it was too challenging to hand-code all the rules required for intelligent behavior using conventional programming. We can contrast conventional programming with machine learning, which has an element of learning on its own. In the temperature example earlier in this chapter, a program learned on its own how to convert Celsius to Fahrenheit. A human did not need to hand-code the temperature function. Calculating the function that defines the best-fitting line can be done with a simple but exact mathematical calculation. However, to create a model for data that have large numbers of input variables and/or require non-linear functions, exact mathematical calculations do not exist and the function can only be approximated.

The algorithms used to approximate these functions often work iteratively. Iterative algorithms take each observation in the dataset, look at the deviation between the actual output variable value and the predicted value, and then adjust the model so that it works well for all the observations that have been encountered to that point. This deviation is seen as a form of supervision (hence the term “supervised learning”). Just as a teacher’s feedback helps children learn, these deviations enable the algorithm to improve its estimates of the parameters in the model.

All supervised learning algorithms create a model (that computes a function) that optimally maps the input values to the output variable for the observations in the training data. This enables one to take a new observation (i.e. one not in the training set) and use the function to predict the value of the output variable for the new observation.

When a model has any degree of success in predicting values for new observations, it is said to have learned to generalize. The temperature function is a trivial example of supervised learning. However, applications like machine translation, computer vision, and speech recognition are non-trivial. Researchers tried for decades to hand-code rules for these applications and failed.

2.4 Model evaluation

The model we just created is our best estimate but how good an estimate is it? How well can we expect it to predict house prices given previously unseen observations? If you just train a model on a dataset and calculate a cost function like MSE, you will often be disappointed when you apply the model to predict real-world data. If you calculate the cost function on a new dataset, you will probably find that performance has decreased and maybe dramatically so. The reason is that, in any dataset, there will be real patterns of data plus random patterns of data.

Training a model on this dataset will cause the model to learn both the real patterns and the random patterns. When you apply the model to real-world observations, it won’t do as well because the random patterns aren’t applicable.

2.4.1 Splitting the data into training and test sets

Rather than training the model on the whole dataset, if we train on only part of the dataset, then we can test it on the remaining part in order to get a measure of how it will perform on new observations. The standard approach is to randomly partition the data into a set of training data and a set of test data (e.g. an 80-20, 70-30, or 60-40 splits). The model is created using the training data. Then the model is evaluated by how it performs on the test data.

To measure performance on the training and test sets, one computes a performance metric such as MSE for both datasets. If the MSE is high for the training data and low for the test data, it is likely the model will not perform well to predict new data. It is also important to note that even a good score on the test set does not necessarily mean the model will work well on new real-world observations.

The test set score only provides an estimate of how well it will work on new observations whose distribution of values is the same as in the test dataset. In many cases, the dataset will have biases. That is, the dataset will have more of some types of values than in the real world and less of others.

Splitting the data requires just one scikit-learn API call:

train_input, test_input, train_output, test_output = train_test_split(input_variables, output_variable, random_state = 80, train_size=.7, test_size=.3, shuffle=True)

This call also enables you to randomly assign samples to train and test, specify the percentage of samples that should go into the training vs test sets, and to shuffle the data so there are no order relationships. Specifying a random_state number enables one to recover the same train/test split in a future run if desired. |

2.4.2 Adding a validation dataset

Building supervised learning models is often an iterative process. The model is trained using the training set and is then tested. Then the model is adjusted, retrained, and re-tested. This process repeats until test performance stops improving. Re-testing multiple times on the test set introduces a bias into the model. Essentially, the model is tuned to fit the test set data and the final test isn’t a fair measure of the model’s performance.

To avoid this bias, it’s good practice to split the data into 3 sets: a training set, a validation set, and a test set. The validation set is used to iteratively test the model and the test set is only used for the final test of the model. Because the final model is based only on the performance of the training and validation performance on the test set, it provides an unbiased measure of performance against the dataset as a whole.

2.4.3 Cross-validation

If the size of the dataset is very large, then splitting the data into training and test (or training, validation, and test) works well. However, MSE’s tend to be larger for smaller datasets so breaking off a test set is likely to increase the resulting MSE because it makes the training and test sets smaller. Also, it is much more likely that different randomly selected test set will produce different MSE’s.

One solution to this problem is leave-out-one cross-validation. Using this method, instead of breaking the data into training and test sets, the test set is just one data point and the rest are used for training. The square of the distance from the test data point to the model is computed, then the process is repeated with all the other data points as single data point test sets. The sum of the squares of the distance for all the data points is divided by the number of data points to get the MSE.

Obviously, the computational requirements of this method increase with dataset size and becomes impractical for large datasets. A similar solution is k-fold cross-validation. Using this method, one randomly divides the dataset into k equal size datasets (or folds). Typically, 5 or 10 folds are used. Each data is used as the test set with the model trained on the remaining data and an MSE estimated for each fold. The average MSE for all the folds is used as the overall MSE. To use cross-validation, instead of

score = model.score(celsius, fahrenheit)

one uses

score = cross_val_score(lr, celsius, fahrenheit)

Here, the cross_val_score API call takes as input an estimator (i.e. an instance of a machine learning algorithm). In the example above, the estimator is a Linear Regression model. The input can also be a pipeline (a sequence of transformations such as scaling the data followed by an estimator). The cross_val_score API offers several optional arguments including a user-defined scoring algorithm, different splitting strategies, and whether or not to use multiple CPUs.

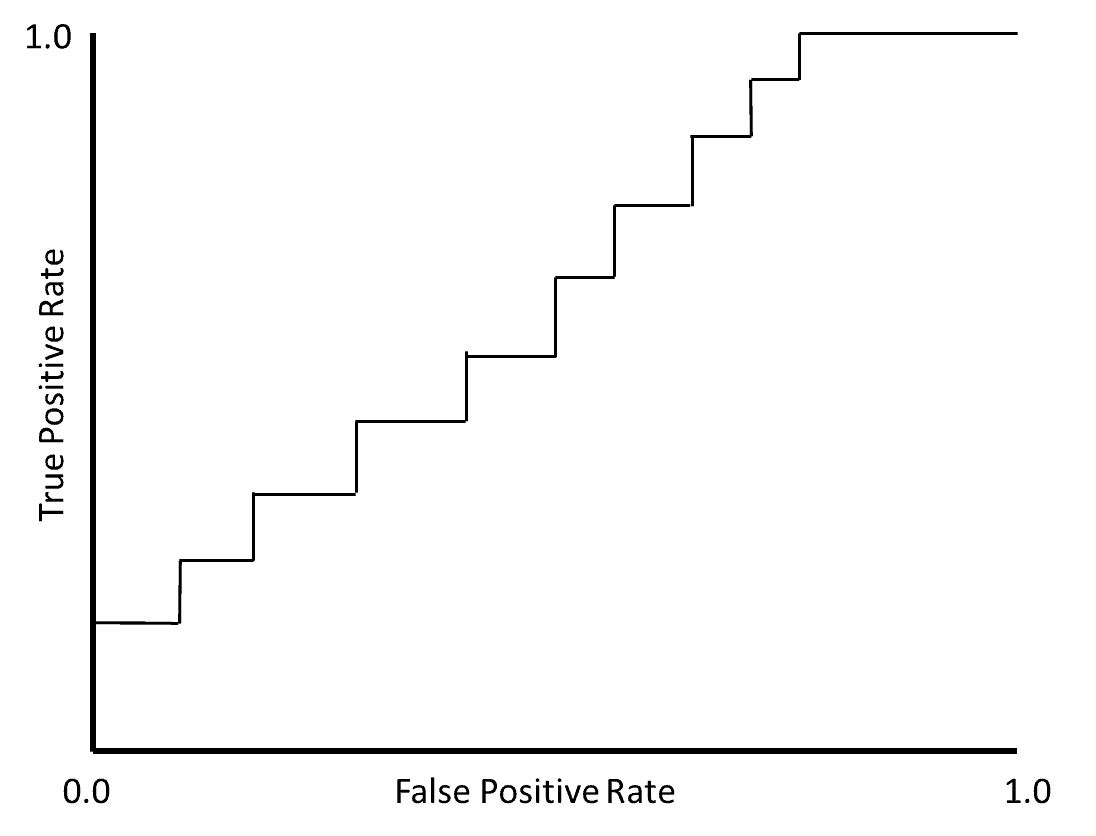

2.4.4 Area under the curve

For binary classification tasks such as determining whether or not an image is a picture of a dog, the area under the curve (AUC) of the Receiver Operating Characteristic (ROC) is often used. The ROC compares true positives (i.e. correct classifications such as correct determining that an image is a dog) to false positives (e.g. identifying an image as a dog when it’s really a cat). The ROC is a plot of the true positive rate i.e. the number of true positive classifications divided by the sum of the true positives plus the false negatives) on the y-axis and the false positive rate (i.e. the number of false positives divided by the sum of the false positives plus true negatives). A sample ROC curve is shown below:

The AUC is the area under the curve of the ROC curve. It ranges from 0 to 1 (perfect classification).

2.5 Underfitting and overfitting

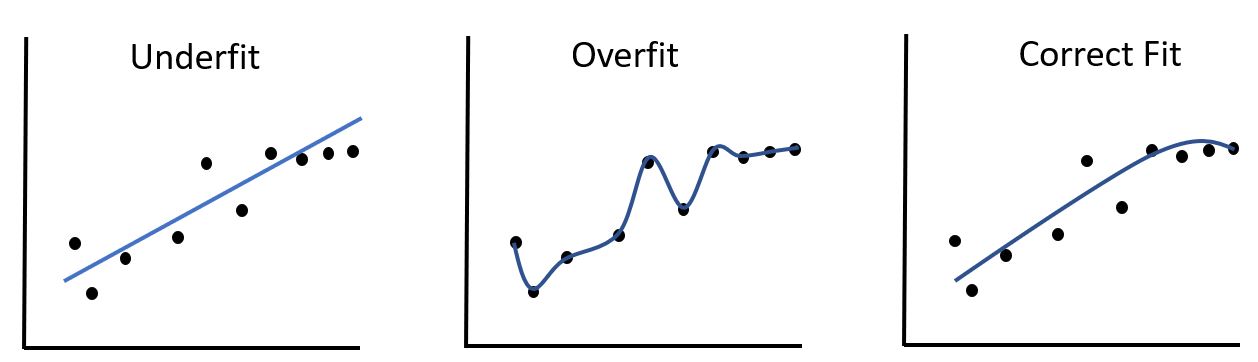

The goal of supervised learning is to create a model that estimates a function that works with real-world data. Let’s take a very simple example. Suppose we are trying to predict sales of a product based on the number of times a TV ad runs. Let’s suppose that the figure below plots some historical data on the effect on total sales (y-axis) of the number of times an ad runs (x-axis).:

The data seem to show that, up to a certain point, the more times the ad runs, the higher the sales. Then at a certain point, additional ad runs have little or no effect. (Note: the data points are the same in all three graphs).

In the left graph, we try to fit the data with a straight line. However, the straight-line predicts that we can increase sales forever by increasing the number of ad runs. A straight line underfits the data and is not a good predictor.

In the middle graph, we find a function that passes through every data point. This function fits the data we have perfectly. However, it is unlikely to do a good job of prediction on new data. This is because a lot of the variation in the data is probably random. If we try and use a function that predicts that random variation in the historical data, it probably won’t work well for predicting what will happen with a new set of TV ads. This is because the random patterns in the historical data probably won’t occur in the new data. This is an example of overfitting.

A better prediction function is shown on the right side. This function will probably do a reasonably good job of predicting what will happen in new TV ad campaigns.

2.6 Variance and bias

Variance is the amount the estimated function would change if we estimated with a different training dataset.

Bias is the error in the approximation method (e.g. using linear where real-life is non-linear).

As discussed earlier, it’s easy to find a low bias, high variance method – draw a curve that passes through every observation. Unfortunately, low bias, high variance methods don’t generalize well to test data and/or to real-world data. It is critical to find a method that has low variance and low bias.

2.7 Flexibility vs. interpretability

Data that can be fit with a linear regression algorithm produce a function that is easily understood. The result of applying a linear regression algorithm to a dataset if a function that specifies exactly how each input variable affects the output variable. However, as discussed below, the linear regression algorithm is not very flexible. Flexibility refers to the range of data distributions that can be fit by an algorithm. Linear regression is not flexible because, for it to work well, the data distribution must meet certain assumptions. Interpretability refers to the ease of understanding why an algorithm produces its predictions. The output of a linear regression algorithm is highly interpretable.

Even with linear models, the more input variables, the higher the dimensionality (each input variable can be considered a dimension), and the harder it is to understand how the model works. The additional variables add flexibility by increasing the fit to the data. However, they also make it harder to understand the resulting equation and therefore reduce interpretability.

Often, a linear regression model can be used but non-linear terms (e.g. squares, cubes, and other polynomial terms) are required to fit the data. These non-linear terms make the model more flexible but less interpretable. Non-linear regression algorithms express an algebraic relationship between the input and output variables. They tend to be more flexible but less interpretable.

Also, models produced with parametric algorithms are generally easier to understand than models produced with non-parametric algorithms.

Finally, deep learning neural networks tend to be very flexible but difficult to interpret.

Why do we care about interpretability? Sometimes, we don’t care. If we’re just building a model to automatically flag potential fraudulent credit card transactions, we might only care that it has a high hit rate (i.e. it finds a high percentage of fraudulent transactions) and a low false alarm rate (i.e. it flags a low percentage of non-fraudulent transactions). We might not care how the model figures it out.

On the other, if we want to make sure that the algorithm doesn’t discriminate against minorities, it’s very important to know how the model works. Or, if we build an AI system to approve or reject loans, we’ll probably want to be able to give an applicant the reason for the rejection. Similarly, if we build a model that predicts whether or not someone is likely to get diabetes, we need to understand how the model works so a doctor can use the model to counsel patients on what behaviors to avoid.

The European GDPR laws require explanations for any automated system that makes decisions that affect people such as loan or hiring decisions. Many other countries around the world, including the US, are putting similar requirements in place. Sometimes the choice between flexibility and interpretability can be difficult. It’s only natural to say “I want both”.

Researchers have developed a large of number of tools that analyze model inputs and outputs on a post hoc basis and attempt to explain how they work. In other words, even though a model might not be interpretable, it is still possible to make it explainable. In 2016, Marco Ribeiro and his colleagues developed LIME (Local Interpretable Model-Agnostic Explanations). Their system does not try to analyze the inner workings of prediction models. Instead, it tries to approximate the results of the model with an interpretable algorithm such as a linear regression algorithm or decision tree. For example, if a model predicts that a patient has the flu, LIME will try different inputs until it figures out that the model is focusing on sneezing and headaches and using ‘no fatigue’ as a contraindicator.

These authors subsequently developed a follow-on technique named Anchors. Another approach to interpretability is a technique developed at MIT termed Class Activation Mapping (Selvaraju et al, 2019) visually lights up the regions of an image that were critical in discriminating among image classes. ALE (Appley and Zhu, 2019) provides a means of visualizing feature impacts. See also this Google Research article on feature visualization. There are many other approaches to interpretability including SHAP (Lundberg et al, 2017), CEM (Jacovi et al, 2021), ProfWeight (Dhurandhar et al, 2018), DeepLift (Shrikumar et al, 2019), Global Importance Analysis (Koo, 2021) and LRP (Alexander Binder et al, 2016). DALEX (Biecek, 2018), InterpretML, Shapash, Explainer Dashboard, and DrWhy are open source sets of tools for interpreting models.

Finally, as researchers from IBM and Rochester Polytechnic Institute (Gruen et al, 2020) pointed out, there are many different types of explanations and defined 9 types:

1. Case-based, e.g. “To what other situations has this recommendation been applied?”

2. Contextual, e.g. “What broader information about the current situation prompted you to suggest this recommendation now?”

3. Contrastive, e.g. “Why administer this new drug over the one I would typically prescribe?”

4. Counterfactual, e.g. “What if the patient had a high risk for cardiovascular disease? Would you still recommend the same treatment plan?”

5. Everyday, e.g. “Why are gloves recommended when dealing with high-risk patients?”

6. Scientific, e.g. “What is the biological basis for this recommendation?”

7. Simulation-based, e.g. “What would happen if this recommendation is followed?”

8. Statistical, e.g. “What percentage of similar patients who received this treatment recovered?”

9. Trace-based, e.g. “What steps were taken by the system to generate this recommendation?”

These are all examples of local explanations.

However, global explanations are also important. Global explanations focus on the whole range of predictions made by a model and attempt to identify a single feature or handful of features that have the most influence on the model predictions.

That said, as Andrew Ng notes, different goals for explainability/interpretability muddy the waters. It is one thing to explain to a doctor the program’s decision rationale. It is quite another to explain to a regulatory agency why it’s not biased. And it is still another to explain the decision making process so an engineer can do an error analysis. People won’t use systems they cannot trust, especially in critical areas like medical decision-making.

The more transparent the decision-making process, the more likely it is that a model will be used. In the cult novel Hitchhiker’s Guide to the Galaxy, a supercomputer named Deep Thought took 7.5 million years to answer the ultimate question of life. The answer was 42 but it couldn’t explain its reasoning.

For an in-depth treatise on interpretability and explainability methods, see this online book by Christoph Molnar. For an overview of the frontiers of interpretability research as of October 2021, this article by Chris Olah, this NIST overview, and this Google Research overview.

2.8 Types of supervised learning

There are two categories of supervised learning algorithms known as regression and classification. Regression algorithms predict numeric values. Classification algorithms predict category values.

The temperature and housing data examples above are examples of regression algorithms. The sale price in the housing example is the output variable we are trying to predict and the square footage and condition are the input variables. When run on historical data, the regression algorithm creates a function that can predict the sale price of a new house coming on the market with varying degrees of certainty. Descriptions of other types of regressions algorithm can be found below.

The use of classification algorithms in AI is more prevalent than the use of regression algorithms. We used the temperature and housing regression examples because they are an easy way to illustrate how functions (and models) work. Both regression and classification algorithms require a dataset in which the correct output value is present for every observation. These output values essentially label the observation with the correct answer. Datasets composed of observations with labels are termed labeled data.

Some applications of supervised learning include:

- For a bank, predict whether a credit card transaction is fraudulent.

- In a hospital, predict if a patient will have a second heart attack.

- In an email system, predict if an email is spam.

- For an e-commerce system, predict what a consumer will want to purchase.

- In a photo app, predict whose face is in an image.

- To translate languages (e.g. Google Translate), predict the next word in the target language.

- For a driverless car, predict the correct next action (e.g. steering, braking, or acceleration).

- In a data center, predict power usage.

2.9 Classification algorithms

Classification algorithms require a labeled dataset and are used to compute functions. However, rather than predicting a quantitative variable like the regression algorithms, they are used to predict a qualitative or categorical variable. A classification task will have two or more categories and the learned classification model will map the input variables for an observation into one of the categories. The output variable has one value for each possible category.

For example, if you are trying to predict the weather, the possible categories might be rain, sun, or clouds. If you are trying to recognize handwriting, the categories are all the numbers and letters. If you are trying to find pictures of dogs, cats, and elephants, there would be four categories (dogs, cats, elephants, and none of the above).

The most common classification prediction tasks are binary: Is the transaction fraudulent or not? Will the loan applicant likely default or not? In contrast to regression where we are trying to find the line or hyperplane (or more complex surface) that minimizes the deviations of the examples from that line or hyperplane, the classification task is to find a line or hyperplane (or more complex surface) that maximizes the average distance between examples from the different categories.

2.9.1 Single vs multi-category classification

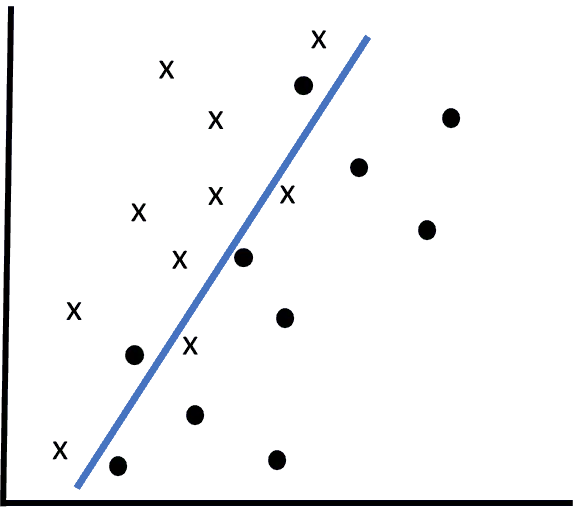

In the figure below, the x’s are training observations that are labeling as belonging to one category, and the o’s are another category.

In this example, we can draw a line that does a pretty good job of segmenting the two groups.

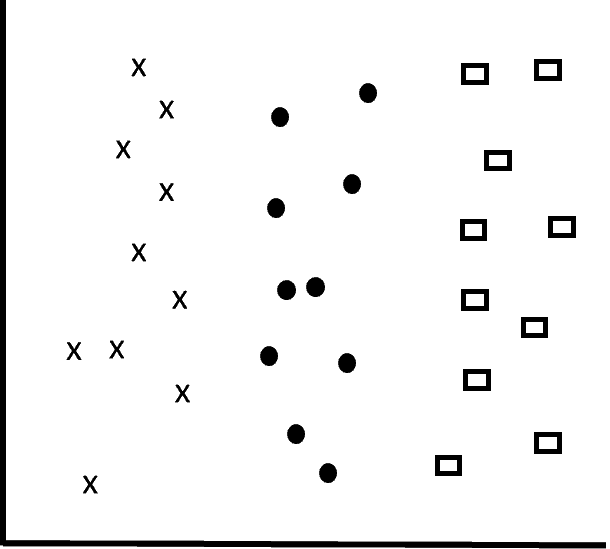

In contrast, in the figure below, there are three groups and two lines are needed to segment the three groups:

2.9.2 Linear vs non-linear classification

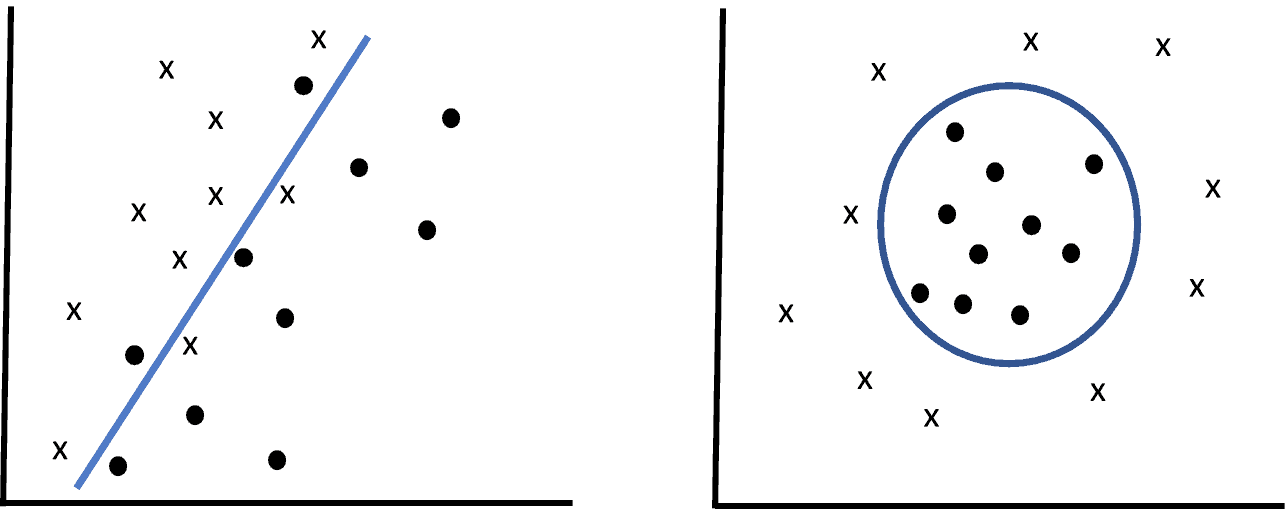

In many cases, it is possible to segment classes via a line or the hyperspace extensions of a line (e.g. a plane in 3-dimensions) as illustrated in the left side of the figure below:

In other cases, as illustrated on the right side of the figure above, a linear algorithm doesn’t work and a non-linear algorithm is required.

2.9.3 Classification algorithms

Below is a description of some widely-used classification algorithms.

Logistic regression is most commonly used for binary classification (even though it has the term ‘regression’ in its name). For example, one could use logistic regression to study the probability of getting diabetes (the output variable) based on input variables such as weight, age, gender, and other factors. Or one could use it to predict the likelihood of customer churn based on input variables such as the price paid for the software, the length of time the customer has been using the software, and the number of support calls.

Logistic regression assumes the input variables are independent and that there is a linear relationship between each input variable and the log of the odds of the output variable. It can also be used with more than two categories (known as either multinomial logistic regression). The models produced with multinomial logistic regression are often termed maximum entropy models.

Conditional random field (CRF) algorithms have been used extensively in natural language processing. CRFs are used when the output is a sequence of text predictions. For example, the best-known open source system for extracting named entities (i.e. people, places, and things) from text is the Stanford CoreNLP CRF algorithm. You can think of a CRF model as a logistic regression algorithm that is applied sequentially to different portions of the text. For a detailed explanation of CRFs, see Sutton and McCallum (2012).

Support vector machines (SVMs) find boundaries that separate two data classes by the maximum margin possible. The margin is distance between the boundary and the classes.

Linear SVMs find a line in two dimensions or a hyperplane in multiple dimensions that segments the observations into two classes. This methodology is primarily used for binary classification. Linear SVMs produce very similar results to logistic regression and both assume a normal data distribution and independence of input variables. However, SVMs are less prone to overfitting than logistic regression.

Two other advantages of SVMs are that they support non-linear classification and they are very effective when there are numerous input variables. A major disadvantage of SVMs is that it is hard to interpret the results because there are no weights that might be understandable.

SVMs use types of kernels that are essentially different ways of computing similarity (i.e. the inverse of distance) between two observations. Linear SVMs use a linear kernel that computes the similarity as the inner product of the input vectors.

Non-linear SVMs use different kernels including:

Radial basis function: Uses curves around data points to create a non-linear decision boundary so that data points that are within a certain radius of one another have high similarity and data points outside the radius have low similarity. This is probably the most popular non-linear kernel because it performs well in a wide variety of cases and doesn’t require any assumptions.

Gaussian: Similar to the inverse of a Euclidean distance compution. It results in similarity being high for objects that are close together in the high-dimensional space but similarity scores drop off quickly as the distance increases.

Polynomial: Similarity is the polynomial of the input vectors.

Sigmoid: Computes similarity using a sigmoid function.

Non-linear SVMs compute the boundary by finding a higher dimensional space in which there is a linear boundary. The kernel trick is an algorithm that efficiently performs this computation.

Linear discriminant analysis (LDA) is commonly used for classification tasks with more than 2 categories. The goal is to create a linear combination of input variables that will predict the correct category. It computes linear functions that segment the space by maximizing the distance between the centroids (i.e. the centers) of the distributions for each category. LDA assumes the input variables contain interval data that is normally distributed and that the input variables have equal covariances.

Quadratic discriminant analysis is used when the category boundaries are non-linear. The equal covariance assumption required for LDA is not required here. As with quadratic linear regression, this is a more flexible algorithm but has a higher variance and is computationally more expensive.

Log-linear regression is commonly used when the data represents counts for different variables. An example is counts of occurrences of cancer for different combinations of socio-economic and demographic variables. An example goal is to predict the likelihood of cancer given a specific combination of variable categorical values. In natural language processing, log-linear regression is often used to predict whether two sets of linguistic features represent the same or a similar underlying concept or relationship.

K-nearest neighbors finds the k closest training observations for each new observation and assigns the new example to the most common category for the k observations. For example, when k=3, if 2 of the 3 closest observations are in one category, assign the new observation to that category. K-nearest neighbors is actually a series of algorithms starting with 2-nearest neighbors, 3-nearest neighbors, and so on. Recall that if you have 2 input variables and one output variable, you have a 3-dimensional space that can be visualized in a 3-dimensional plot. For example, the 2-nearest neighbors algorithm takes each data point in the space and averages it with the point nearest in the space. The 5-nearest neighbors algorithm takes each data point and averages it with the 5 closest data points. These methods work well for low-dimensionality problems. For high-dimensionality problems, observations are often isolated and nearest neighbors are too far away. K-nearest neighbors requires no assumptions about the data.

Decision tree algorithms build an upside-down tree (leaves at the bottom). The first split (top of the tree) is based on the most important variable and iterates from there. So, if the first iteration splits the tree into two branches, then, for each branch we find the next most important variable (while factoring out any variability accounted for by the first variable) and split based on that variable. Decision trees have a number of advantages over other classification methods:

- They work well when there are non-linear and/or otherwise complex relationships between the input variables.

- They are very interpretable and can lead directly to decision-making paths.

- They are easy to display graphically.

- There is no need to determine which features are the most important. This is done automatically by applying the decision tree method.

- There is no need to transform data to meet the assumptions of the algorithm. For example, using linear regression, it is often necessary to apply some form of normalization or scaling of feature values. Using decision trees, the same tree will result without transformations.

- Outliers will not affect the result and do not need to be removed.

Neural network algorithms can be used to create classification models and are particularly effective for non-linear relationships and huge numbers of input variables.

2.9.4 Classification metrics

Performance metrics are more complicated for categorical output variables than for regression output variables. The most obvious measure is accuracy, i.e. the percentage of examples that are correctly classified by the model. But there are issues with accuracy when the category values are not evenly distributed.

For example, take a binary classification problem: fraud detection. Suppose that out of every 10,000 transactions, only 1 is truly fraudulent. Now suppose we build a model that classifies every transaction as non-fraudulent. The accuracy of the model will be 9,999/10,000 = 99.99% correct. Pretty good accuracy! Wait a second… We didn’t discover any fraudulent transactions.

The problem here is that we really want a model that minimizes false negatives (i.e. incorrectly classifying a transaction as non-fraudulent). On the other hand, a model that generates too many false positives (i.e. incorrectly classifying a transaction as fraudulent) isn’t so bad. Or, at least it’s not so bad for my credit card company but it’s still bad for me when they block or delay my non-fraudulent transactions.

Alternatives to accuracy as a performance metric include:

- Precision is the number of True Positives divided by the number of True Positives + False Positives. So, a model that always predicted that the transaction is non-fraudulent would score 0 on the precision measure. A model that correctly identifies all the fraudulent transactions but has no False Positives will score the highest value of 1. A model that has some True Positives and some True Negatives will score more than 0 and less than 1.

- Recall is the number of True Positives divided by the number of True Positives + False Negatives. So, a model that catches every fraudulent transaction will score 1 regardless of how many False Positives are produced.

- The F1 score is an accuracy measure that combines precision and recall.

- For natural language processing tasks, exact match accuracy is often used which simply means that the words in the answer produced by the system must exactly match the words in the correct answer.

2.9.5 Input variables for vision tasks

Classification tasks often include visual inputs such as pictures and/or videos. Examples include image classification, facial recognition, and image and video captioning. The most commonly used input variables for images are the individual pixels in the image. The term “pixel” is short for “picture element” and pixels can be thought of as a set of small dots that comprise an image. If an image is composed of a 28×28 array of pixels, then each pixel can be an input variable and there will be 784 input variables. Alternatively, there are many algorithms for preprocessing images and extracting features that can be used as input variables.

2.9.6 Input variables for natural language processing tasks

Classification algorithms are also commonly used for natural language tasks and use several types of input variables.

2.9.6.1 Individual words

Each word in an input sentence can be treated as a separate input variable. Parts of words can also be used. Google Translate uses parts of words termed word pieces. Other systems use parts of words termed tokens.

The form of the input variable is a vector of numbers. The different types of numbers used will be discussed below. In order to have equal numbers of input variables for all input observations, shorter sentences are filled with input variables that have vectors with 0 values. The number of input variables will be equal to the number of words in the longest input sentence (or document) in the dataset.

The same approach can be taken if it is desirable to treat each character as an input variable. This approach results in the number of input variables equal to the size of the maximum number of characters in a sentence (or document) which of course is much larger than the maximum number of words.

One-hot encoding is the simplest type of input variable for representing words and word pieces. One takes all the unique words in all the documents and numbers them 1 through n. Each word in an input sentence (or document) is simply the number of the unique word. The number is represented as a vector of length n that contains all zeroes except for a one in the position of the unique word.

(Note: If you are not familiar with the mathematical properties of a vector, you can think of a vector as a list).

One-hot encoding vectors can be overwhelmingly large. For example, Google researchers (Mikolov et al, 2013) identified 3 million different words and phrases in a Google News dataset of about 100 billion words total. A one-hot representation of words would require a vector with 3 million entries which would be very large for a machine learning algorithm.

2.9.6.2 Word embeddings

Word embeddings are much lower-dimensional representations of words that were first created by Yoshua Bengio and his colleagues at the University of Montreal (Bengio, 2003). The idea was to use neural networks to create a low-dimensional representation of each input word in a text. Bengio’s team showed how it is possible to take a corpus with 17,000 words and produce a 30, 60, or 100 slot vector for each word. The vector composed of these 30, 60, or 100 numbers was termed a word embedding. Using word embeddings instead of one-shot encoding is far more efficient than using one-hot word encodings.

A team of Google researchers (Mikolov et al 2013) created a an algorithm named Word2Vec that creates word embeddings. They used it to create a widely-used set of word embeddings that was created by running Word2Vec against a 100 billion word Google News extract. The set of word embeddings contain 300-dimensional word vectors for 3 million words and phrases.

In the chapter on Transfer Learning, we will discuss another advantage of using word embeddings: they been shown to contain semantic information about the words and this reduces training time on most NLP tasks.

2.9.6.3 Document embeddings

For natural language tasks such as topic identification and sentiment analysis, where the inputs are long documents instead of individual sentences and the goal is to extract high-level information about the document, one-hot and word embedding representations lead to unnecessary computation complexity. Instead, each input document (rather than each word) is represented by a vector of numbers. This type of aggregate representation for each document is known as a vector space model (VSM).

The numbers in the vectors can take several different forms:

In a bag-of-words representation of an input document, the size of the vector is the number of unique words in all the documents. The value of each vector entry is the number of times the word appears in the document. Here is an example of an Amazon review:

It feels flimsy and hollow. It is sad. It has dull and uneven lighting. It also feels cheaply made. It is a major disappointment.

The BOWs for this review would be:

| it | 5 |

| feels | 2 |

| and | 2 |

| is | 2 |

| flimsy | 1 |

| hollow | 1 |

| sad | 1 |

| has | 1 |

| dull | 1 |

| uneven | 1 |

| lighting | 1 |

| also | 1 |

| cheaply | 1 |

| made | 1 |

| major | 1 |

| disappointment | 1 |

Using BOW as the input variables can often result in reasonable performance on some natural language processing tasks such as topic identification (identifying the topic of a document), sentiment analysis (identifying whether a social media post or other text has a positive or negative sentiment), and emotion analysis (identifying the emotions expressed in a text). However, it should be noted that this feature set loses all information about word order, sentences, and even the frequency of word occurrence in a document. So, two completely different sentences could have the same representation if they have the same words. There is also no semantic information about the words that one has with word embeddings.

- Bag of n-grams

An n-gram is a sequence of words. Bigrams are two-word phrases. Trigrams have three-words. So, a bag of bigrams would have the frequency that each two-word phrase appeared in a document. Bag of n-grams suffers from similar issues to those just described for bag-of-words. Bag-of-n-grams has some word order information (i.e. the short word order context of the phrases themselves) but

- Term frequency-inverse document frequency (TF-IDF)

In a TF-IDF vector, each number is based on two components:

-

- Term frequency (TF): The frequency of occurrence of a word in the documents divided by the total number of words in the document.

- Inverse document frequency (IDF): The log of (the number of the documents in the corpus divided by the number of documents that have the word).

The TF-IDF value is the multiplication of the two terms.

2.10 Regression algorithms

This section discusses the most commonly used regression algorithms. These are algorithms that predict a continuous value. Linear regression with just one input variable is known as univariate linear regression. Multiple linear regression has multiple input variables. Linear regression (discussed above) requires assumptions of linear relationships between the input and output variables, a normal distribution of data, independence of input variables, and independence of errors.

2.10.1 Polynomial regression

When the underlying relationship between input variables and observed values is non-linear (perhaps discovered via scatter plots) and the data cannot be transformed (e.g. via a log transformation) to a linear relationship, polynomial regression is an option. A log transformation simply multiplies each data value by a log function. Instead of looking for a linear relationship (such as the linear y = ax + b equation we used to predict housing prices), one might add higher degree terms. For example, y = ax + bx² + c would fit a 2nd-degree polynomial.

This is still linear regression because a least squares algorithm determines the values of the weights for the polynomial variables. While the results of polynomial regression can be predictive, they are generally difficult to interpret. Polynomial regression assumes that there is a linear or curvilinear relationship between the output variable and each input variable, the input variables are independent, and the distribution of errors is independent.

In many cases, it will make sense to try adding interaction terms and/or non-linear transformations (e.g. x2 or x3) as input variables. For example, if there are two input variables x1 and x2, one might add the interaction term x1 * x2 (i.e. x1 times x2) and also add x12 and x22 (i.e. the squares of the variables) and perhaps also add x13 and x23 (i.e. the cubes of the variables). The scikit-learn API has a PolynomialFeatures class that will generate these terms for you.

2.10.2 k-nearest neighbors

k-nearest neighbors was described above under classification algorithms and works the same way for regression. It works well for low-dimensionality problems. For high-dimensionality problems, observations are often isolated and nearest neighbors are too far away.

2.10.3 General additive algorithms

These algorithms extend linear regression by supporting even more complex non-linear relationships than polynomial regression. For example, the weights for each input variable could be a function. These algorithms result in reasonably interpretable models but less so than simple linear regression. The primary limitation in the use of these algorithms is that the functions must be non-correlated to work well.

2.10.4 Decision trees

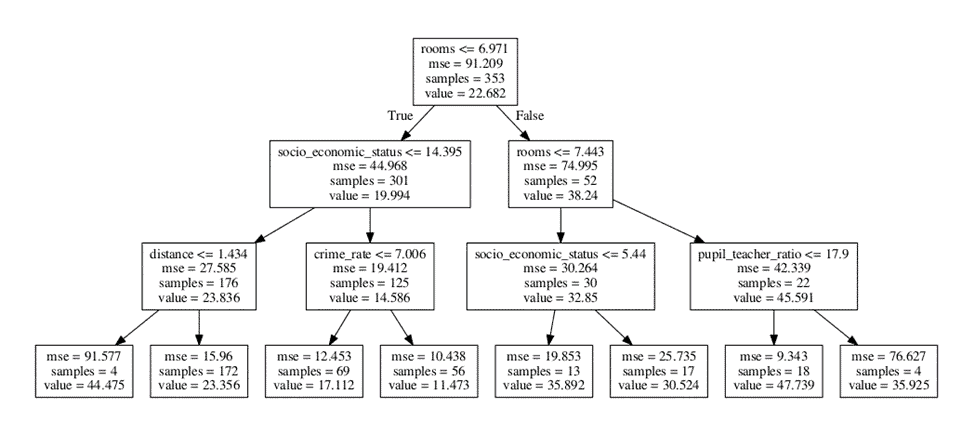

Decision trees are widely used for classification (see below) but can also be used for regression. An example of a regression decision tree for the housing dataset is shown below:

2.10.5 Neural networks

Neural networks are capable of solving the most complex regression problems. For example, as Alibaba researcher Rong Jin (2017) pointed out, product recommender systems contain information about consumer ages, genders, and locations, and vendors have categories, sellers, and brands. Since features are mostly binary features generated by one-hot encoding, it’s not unusual of have hundreds of millions of binary features. Linear models are ill-suited for high-dimensional problems. Neural networks are well-suited for such high-dimensional problems.

2.10.6 Regression algorithms in scikit-learn

In addition to the LinearRegression and SGDRegression classes mentioned above, the scikit-learn package has classes for other regression algorithms such as lasso, ridge, elastic net, LARS, OMP plus a number of other algorithms not discussed above including Bayesian, Huber, ARD, kernel ridge, and support vector machine regression. Many other algorithms (e.g. XGBoost) are available as add-ins.

2.11 Hyperparameters

Each supervised learning algorithm has a set of hyperparameters that affect the performance of the learned model. Unlike model parameters, hyperparameters are not learned by the model. Rather, they are set by the user of the algorithm.

For example, a binary classification algorithm might try to maximize the average distance between points in the two classes or it might try to minimize misclassifications. These two objectives are often at odds with one another. As a result, classifications algorithms like SVMs have a hyperparameter that determines how much to prefer one objective over the other. To build a model, it is often necessary to try multiple hyperparameter settings, a process known as hyperparameter tuning.

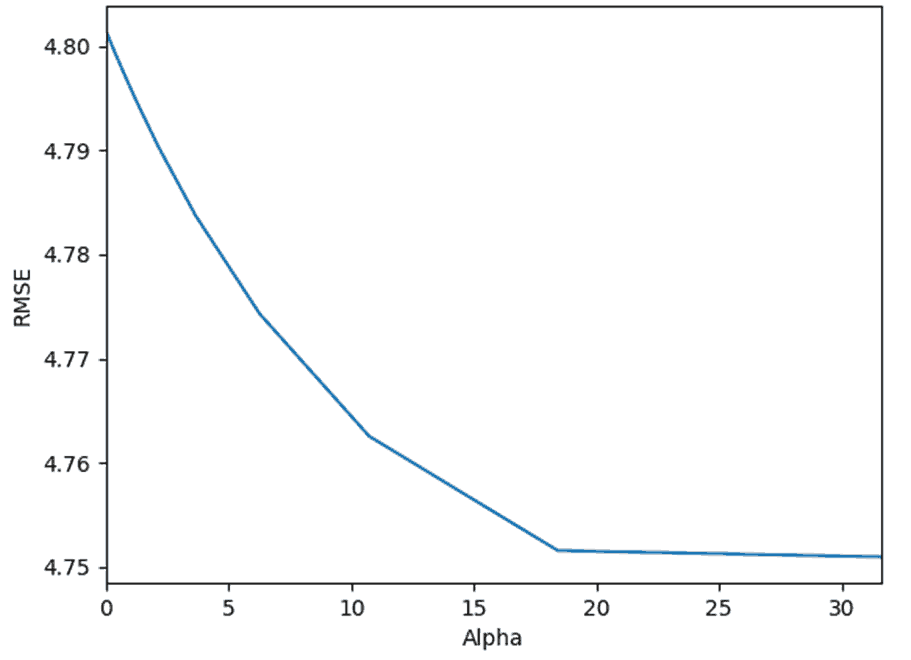

As another example, the random forest algorithm requires specification of the number of trees in the forest and the number of features to consider when looking for the best split. The lasso algorithm requires a hyperparameter, alpha, that controls the degree of shrinkage of the weights and therefore controls the number of variables whose weights become zero. In most cases, hyperparameter selection is done by trying a number of different values and seeing what works best.

Here is an example of code that finds the best alpha value for a lasso regression:

When you have no idea what value a hyperparameter should have, a simple approach is to try out a wide range of values as in the example above.

Looking at the plot of the results in the figure above, we can see that the error loss stops decreasing at around an alpha value of 20.

For many of the estimators in scikit-learn, there are two classes – one that accepts specific values for the hyperparameters and another that helps find the best hyperparameters. For example, the Lasso class used above requires a value for alpha. The LassoCV class accepts an array of alpha values and finds the best value of alpha.

Here is some example code for the LassoCV class:

LassoCV also uses cross-validation to improve the result (by default it uses 3-fold cross-validation but the method can be set as a hyperparameter).

The scikit-learn package also offers generic grid search classes that can be used for algorithms with multiple hyperparameters. GridSearchCV exhaustively generates all combinations of hyperparameters (based on ranges input to the class). RandomizedSearchCV does a randomized search which is of course a lot faster because it tries fewer hyperparameter combinations. Bergstra et al (2012) showed that random trials are far more efficient than grid search and produce good results.

2.12 Ensemble algorithms

Determining which regression or classification algorithm to use can be a bit of an art. The same holds true for choosing the hyperparameters. Because it can be hard to determine the best algorithm and hyperparameters for a given task, combining multiple models often works better than using a single model. These combinations of multiple models are termed ensemble models. Ensembles can also be used with a single model and multiple sets of hyperparameters. This can take the guesswork out of hyperparameter selection.

There are several types of ensemble models…

2.12.1 Voting ensemble models

Voting methodologies use multiple classification methods (e.g. k-nearest neighbors, neural networks, decision trees). The same training data is fed to each method to create a group of classifiers (e.g. one for each method or maybe multiple k-neural network methods with different k values). Then, for test or real-world data, each classifier “votes” their classification and the one with the most votes wins. For regression problems, the results of all the classifiers are averaged.

2.12.2 Bagging

Bagging is a technique for effectively increasing the sample size. It is used primarily in conjunction with decision trees but can be used for any regression or classification technique. Bagging, which is short for Bootstrap with Aggregation, doesn’t increase performance but does reduce variance and hence generalizes better from training to test datasets. Bagging creates additional training samples that are the same size as the original training sample by random sampling with replacement from the original. For regression problems, the results of all the training samples are averaged. For classification problems, a “voting” algorithm is used, i.e. each model votes on the classification and the one with the most votes wins.

2.12.3 Boosting

Boosting is an iterative technique in which each training record is given a weight. For the first iteration, all the weights are equal. Then, training records with incorrect classifications are given higher weights. As with bagging, a second iteration is done by creating a new sample of the same size as the original by selecting training records with replacement. In contrast to bagging, where selection is random, with boosting, the higher weighted training records are more likely to be selected. As a result, the iteration will produce a classifier that specializes in the incorrectly-classified cases from the first iteration. The process continues for k iterations and, like bagging, produces k classifiers. As with bagging, a “voting” algorithm is used, i.e. each model votes on the classification and the one with the most votes wins.

2.12.4 Random forest

Random Forest is a bagging algorithm that is used only for decision trees. The goal is to produce multiple decision tree classifiers from the training set and to have them vote on the correct classification. However, the full training sample is used for all classifiers. What varies between classifiers is the subset of input variables fed into the decision tree algorithm.

2.12.5 Stacked ensemble algorithms

Most winners of ML competitions (e.g. Kaggle.com and KDD Cup and the old Netflix Prize) use stacked ensemble algorithms. Like a voting algorithm, the first step is to train a number of different algorithms/hyperparameters. k-fold cross-validation is typically used to avoid overfitting. Then a new model is trained based on the predictions of the different algorithms. Instead of voting, supervised learning is used to find the optimal combination of these models. Since many of the predictions will be correlated, it is important to use some form of regularization to minimize the impact of highly correlated input variables (i.e. correlated model predictions).

2.13 Generative algorithms

Classification algorithm can be segmented into two categories: discriminative and generative classifiers. For an in-depth look at the difference between these two types of algorithms, see Ng and Jordan (2002).

Suppose we want to build a simple classifier that attempts to guess whether a person is a basketball player or not based on height and gender. The discriminative approach is to find a function that relates height and gender directly to the binary determination of whether that person is a professional basketball player.

The generative approach is to first identify the underlying distribution that shows the probability of playing professional basketball for a given gender and height. The generative approach would create a distribution based on the data that shows that for men most professional basketball players are over 6 feet tall and for women that most professional basketball players are over 5’8”.

So, the discriminative approach directly relates gender and height to playing basketball where the generative approach first infers the distribution from the data and then bases the decision on the distribution. The discriminative algorithms above essentially learn the boundaries between categories. Said another way, the algorithms learn how to best use the input variables to distinguish between categories. What they don’t do is learn anything about the underlying distribution of data by category.

In contrast, generative algorithms try to do exactly that. They estimate the distribution of data for each category and then, for a new test observation, they use the distribution to create the prediction classification or value. When presented with a new training example, the prior (i.e. the estimated distribution of data prior to seeing the new example) is updated to incorporate the new example and becomes a posterior distribution that can be used to recognize the next test observation. It also becomes the prior for the next training example.

If it sounds like the generative approach is harder than the discriminative approach, that’s because it is. In fact, for use cases where both discriminative and generative algorithms are tried, the discriminative algorithms typically perform better. However, generative algorithms can learn with much smaller sets of examples and they can learn incrementally.

One of the really interesting characteristics of many generative models is that the learned distributions can be used to generate new instances. For example, generative adversarial models can create images of people who don’t exist.

2.13.1 An example: building a spam filter

Consider the task of building an English language spam filter using a discriminative model. The input variables might be the words in the emails or it might be something like all possible bigrams (2-word combinations).

A discriminative algorithm would try to find a function that discriminates between the spam and non-spam classes. While this approach can be quite effective, it is computationally expensive and it suffers from an additional deficiency compared to generative models: It is very hard to continually add new spam examples to the model. Every time new examples are to be added, the entire model must be recalculated. Using the generative approach, we try to estimate the probability of a spam message given on each word in the message and then combine the probabilities. However, this probability is difficult to estimate directly.

Bayes Theorem is a mathematical theorem that tells us that the probability of spam given a word can be computed by knowing two other probabilities: the probability of the word occurring in any email text and the probability of the word occurring in a spam text. These two probabilities, however, are easy to estimate. We can do it directly from our dataset of emails that are labeled as spam and not spam. More importantly, it is easy to recalculate these probabilities as new emails are added to the dataset. There are a number of uses for generative algorithms especially in the area of natural language processing.

2.13.2 Naïve Bayes algorithm

Perhaps the most common form of generative model is known as Naïve Bayes. Early spam filters used this technique to estimate the true density of words like “Viagra” in legitimate emails compared to emails that had been marked as spam. A new email with words that appear more often in spam is then classified as spam.

As an aside, the technique is known as Naïve Bayes because it relies on an assumption that the probability of a spam message given one word is independent of the probability of a spam message given another word.

The reason this assumption is used is because it greatly simplifies the computational calculations required to compute probabilities by enabling each word to be its own predictor. Without the independence assumption, one would have to use combinations of words as predictors and there are way too many possible combinations of words to be computationally feasible. This assumption is considered naïve because it is often not true. In fact, we know this assumption is not correct for spam analysis because, for example, words like “refinance” and “loan” are both likely to occur in spam messages regarding loan refinancing. Naïve Bayes is widely used in natural language processing because the technique produces good results despite the violation of the independence assumption.

2.13.3 Probabilistic programming

Probabilistic programming is a methodology for implementing Bayesian methods. So what is a Bayesian method? Let’s look at an example: Suppose we are given a coin, flip it 2 times, and it comes up heads each time. What is the likelihood it will come up heads the 3rd time? One could assume that if it comes up heads both flips, it will always come up heads.

A Bayesian approach would start with a default underlying probability distribution such as a uniform distribution (i.e. one that assumes an unbiased coin that comes up heads half the time and tails half the time). The Bayesian approach is to assume the odds of the 3rd flip also being heads is (2+1)/(2+2) = 75%. If the 4th flip is also heads, the probability of the 5th flip being heads increases to 80%. As more and more heads appear without a tail, the probability will slowly trend to 100%. If tails start to appear, the probability will trend towards 50%.

Another description of what is happening is that we are incorporating the new information provided by every coin flip into our model of the distribution of coin flips. In Bayesian terms, we start with a prior model and incorporate what we learned from the coin flip into a posterior model and use the posterior model to make our predictions. The posterior model then becomes the prior model. When the data are structured into groups, hierarchical Bayesian modeling can be used. For example, if one is trying to predict housing prices based on the number of rooms for a number of different counties, the counties might be used as groups and will often produce better results than trying to model each country separately or ignoring counties and combining all the data.

Probabilistic Programming is a set of techniques for implementing Bayesian models. There are a number of probabilistic programming languages available. One of the first was Church. Perhaps the best known are Stan, PyMC3, and WebPPL. Uber recently released Pyro as open source code and Edward is another new open source library. Uber uses Pyro to match riders to drivers, find optimal routes, and find pool combinations. There are many other probabilistic programming languages. Probabilistic Programming models have a number of advantages over discriminative models:

- As mentioned above, new examples can be added without retraining the whole algorithm.

- Training requires far fewer examples

- The model is more interpretable and it is easier to explain the decision-making process

- They require much less computational power

- They do a better job of handling missing data values

2.13.4 Mixture models

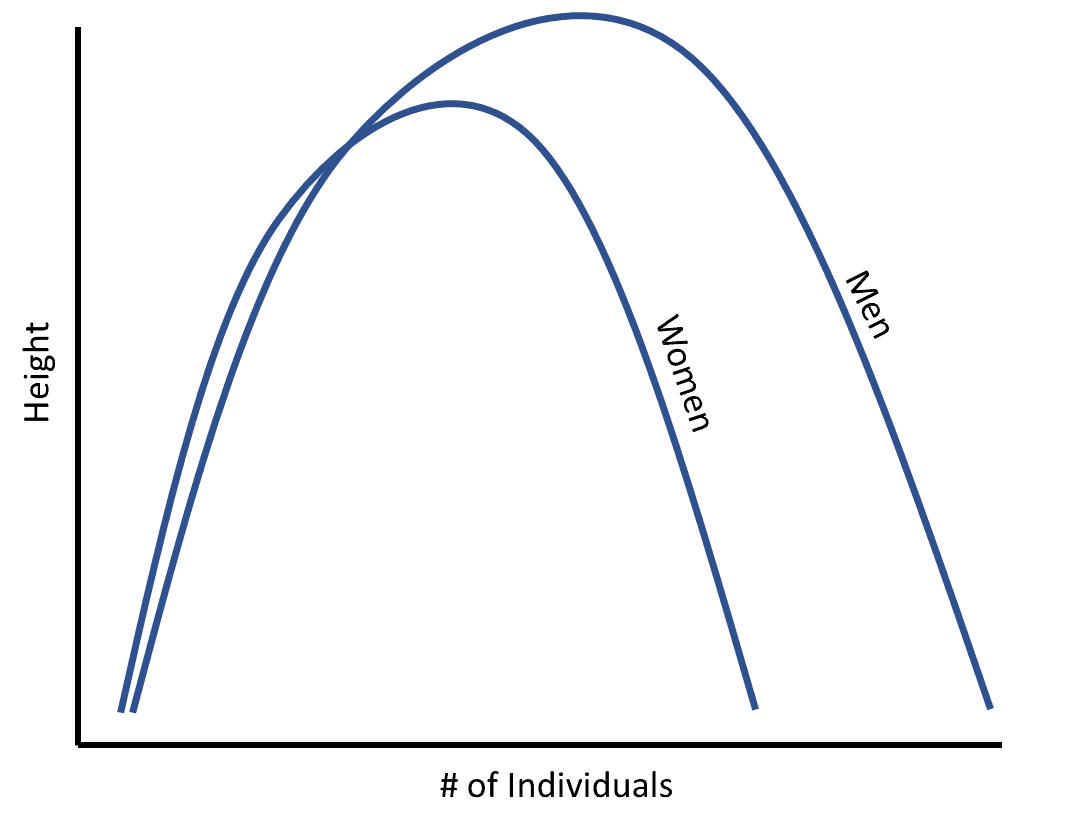

Mixture models are used to represent subpopulations that have different distributions within an overall population. For example, if one were to plot the heights of men and women in the US population, it would probably be a multi-modal normal (Gaussian) distribution like the one below:

Given a dataset containing only the heights of individuals in the US (and not the genders of the individual), one could use a Gaussian Mixture Model to identify the distributions of the two subpopulations. This example would be a form of unsupervised learning. Gaussian Mixture Models were also used heavily in early speech recognition research. Other types of mixture models involve other distributions such as binomial, poisson, and exponential distributions.

2.13.5 Energy-based models

One difficulty with the generative methods discussed so far is that they assume underlying normalization distributions. Energy-based models (LeCun et al, 2006) don’t require this assumption.

2.14 No free lunch theorem

Isn’t there one classification algorithm that is better than all the rest? The No Free Lunch theorem (Wolpert and Macready, 1997) states that for any two optimization algorithms, A and B, there are as many tasks for which A will do better than B as there are for which B will do better than A.

How do we know if a model created with one algorithm and one set of hyperparameters is a good fit? And how do we know that some other algorithm or another set of hyperparameters might not produce a better fit? It is hard to know in advance which optimization algorithm and hyperparameters will be the best fit for a specific prediction task. Machine learning practitioners tend to rely on both experience and trial-and-error to find the algorithm and parameters that will result in the best real-world performance.

The no free lunch theorem also means that there is a tradeoff between the breadth of a task and the performance on that task. When the problem domain is broadened, performance decreases.

2.15 Regularization

If a function has a single large weight, it will often disproportionately affect the predicted value. Often, large weights are an indication of overfitting. The large weight works well for the training data but not the test data. A common way to cause the learning algorithm to learn smaller weights is to add a regularization parameter. A regularization term is a modification to a cost function to achieve a particular objective.

Some commonly-used forms of linear regression with regularization terms include:

Ridge regression: This method, also known as L2 regularization, uses all the predictor values. However, rather than just using a cost function that minimizes least squares, it adds a variable that tends to penalize large values. When the resulting function is minimized, it tends to shrink some input variable weights to zero or near-zero and therefore effectively shrinks the dimensionality. It works particularly well when some input variables are correlated and when the model has high variance. As such, it tends to increase the MSE in the training set (versus least squares) but reduce it in the test set. It is also computationally less expensive than the least square approach just discussed because one doesn’t have to compute the result for every possible remaining input variable at each step.

Lasso regression: This method, which stands for Least Absolute Shrinkage and Selection Operator is also known as L1 regularization, is similar to ridge regression in that it adds a parameter to the cost function that shrinks the input variable weights. However, unlike ridge regression which only makes some weights small, lasso regression actually shrinks some weights to zero so that the final regression function does not contain all the input variables. However, this method cannot be used if the number of input variables is larger than the number of observations.

Elastic net: This method combines the L1 and L2 shrinkage penalties to overcome the lasso limitation just discussed and works well when the number of input variables is larger than the number of observations.

2.16 Dimensionality reduction

When there are too many input variables (i.e. too many dimensions), it can be very difficult to train a model without overfitting. This is known as the curse of dimensionality. For example, in our housing dataset, suppose that we use both “# of rooms” and “square footage” as input variables. These variables have a high correlation, in other words, when there are a large number of rooms, the square footage will usually (but not always) be large and vice versa. However, the correlation might not be as high in our training set as in the real world and the supervised learning algorithm might use these variables in different ways that work well in the training set but not in the test set and/or real world.

In other words, the learning algorithm might treat these as completely separate dimensions where really using just one or the other variable would lead to nearly equivalent results in the real world. As a result, data scientists (see below) often look to try and reduce the dimensionality of the solution. There are two general categories of dimensionality reduction: Variable selection and feature extraction.

2.16.1 Variable selection

The variable selection method is to discard selected features.

Variance-Based Selection: One can analyze the variance (i.e. predictive value) of each individual input variable on the output variable and discard input variables that do not meet a threshold of predictive value.

Correlation-Based Selection: Input variables are often highly correlated with one another. For example, in our housing dataset, the number of rooms is likely highly correlated with the square footage. Rather than use both, it is often advantageous to discard the one with the lesser predictive value.

Stepwise Regression and Classification: Stepwise methods start by trying all models with one input variable and starting with the model that minimizes a cost function. Next, it adds the input variable that minimizes the cost function for the two-variable model and continues until the cost function stops getting smaller. Unfortunately, if one continues this process, the error may never stop going down even though the added parameter may have no predictive value in the test dataset or in the real world.