Performance of Self-Driving Cars Can Be Improved With Deepfake Images

A self-driving car can, at least theoretically, do many things better than people. It can attend to multiple objects in the environment (pedestrians, stop signs, birds, and road hazards) simultaneously. It will never get tired, drunk, or distracted, and it can react much faster than humans to avoid accidents.

Machine Learning Systems For Self-Driving Cars

Autonomous vehicle software company Oxbotica has recently developed a technology that promises to speed up progress toward fully autonomous vehicles such as self-driving cars. The technology targets a basic limitation: machine learning systems require massive amounts of training data to learn. People can learn to recognize stop signs from a handful of examples. We can recognize a stop sign in the rain without ever having had a teacher or parent show us a stop sign in the rain and say “that’s a stop sign”. We can recognize a stop sign half occluded by a car without ever having seen an occluded stop sign before.

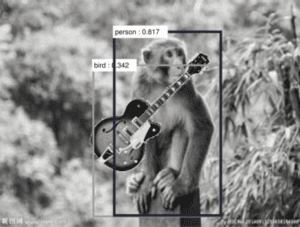

Machine learning systems are different. For example, a group of Johns Hopkins University researchers trained a machine learning system to recognize monkeys. Then they took an image of a monkey and added a guitar to the image. When asked to classify the animal in the image, the system labeled it a human. Why? It had never seen a monkey with a guitar. Its training set only included humans with guitars.

For a self-driving car to learn to identify stop signs, its training set must include stop signs in all kinds of weather, stop signs occluded by other objects, stop signs of different shapes and sizes, and many other variations. (See this video by Tesla Director of AI Andrej Karpathy for a discussion of stop sign detection in Teslas.) Collecting numerous examples of each variation is a daunting task.

Augmenting Self-Driving Car Datasets Using Deepfake Technology

Oxbotica has shown how deepfake image technology can be used to augment this training data.

As depicted in the image above, Oxbotica uses deepfake technology to create variants of the original image by changing the weather, changing road markings, and making other alterations. These augmented images should improve the robustness of the machine learning in self-driving cars because they add combinations of image characteristics that might not have been present in the original dataset. This will help avoid the monkey-with-a-guitar problem.
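The general idea of augmentation can be sketched in a few lines of Python. This is not Oxbotica's method: real deepfake augmentation uses learned generative models, while the sketch below swaps in simple hand-written pixel transforms on a tiny grayscale image. The function names (`darken`, `add_fog`, `occlude`, `augment`) and the parameter values are hypothetical, chosen only to show how one labeled image becomes several labeled variants.

```python
from itertools import product

# A tiny grayscale "image": rows of pixel intensities in the range 0..255.
Image = list[list[int]]


def darken(img: Image, factor: float) -> Image:
    """Scale every pixel by factor (1.0 leaves the image unchanged)."""
    return [[int(p * factor) for p in row] for row in img]


def add_fog(img: Image, strength: float) -> Image:
    """Blend every pixel toward white (0.0 leaves the image unchanged)."""
    return [[int(p + (255 - p) * strength) for p in row] for row in img]


def occlude(img: Image, fraction: float) -> Image:
    """Black out the leftmost fraction of columns in every row."""
    return [[0] * int(len(row) * fraction) + row[int(len(row) * fraction):]
            for row in img]


def augment(img: Image, label: str) -> list[tuple[Image, str]]:
    """Return every combination of the hypothetical settings below.

    The label is copied unchanged: a stop sign in fog is still a stop sign.
    """
    variants = []
    for dark, fog, occ in product([1.0, 0.5], [0.0, 0.4], [0.0, 0.5]):
        out = occlude(add_fog(darken(img, dark), fog), occ)
        variants.append((out, label))
    return variants


if __name__ == "__main__":
    original = [[10, 200], [30, 40]]
    for variant, label in augment(original, "stop_sign"):
        print(label, variant)
```

With two settings per transform, a single labeled image yields eight labeled variants, including combinations (dark, foggy, and half occluded) that may never have been photographed together.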

The Big Problem for Self-Driving Cars: Edge Cases

While these augmented datasets will likely improve training, they will not be the key technology that enables us all to pile into our self-driving minivans to visit grandma for the holidays.

In 2009, Captain Chesley "Sully" Sullenberger had just piloted his plane into the air when a flock of Canada geese took out the engines. The plane was only 2,900 feet above the ground, and the pilots had only a few minutes to maneuver before the plane would hit the ground. They had received no training for this specific scenario beyond a few basic rules, so they had to rely on common sense. To decide the best course of action, they factored in the likelihood of their passengers surviving various crash alternatives, the likelihood of injuring people on the ground, where rescue vehicles would be quickly available, and many other factors. Then they heroically landed in the Hudson River, and all 155 people on board survived.

Pilots receive extensive training, but it is impossible to train them for every possible situation. For those edge cases, they must use their commonsense knowledge and reasoning capabilities.

The same is true for automobile drivers. A Clearwater, Florida, high school student noticed a woman having a seizure while her car was moving. The student pulled her own car in front of the woman’s car and brought it to a stop with no injuries and only minor bumper damage.

Most of us have encountered edge cases while driving: A deer darts onto the highway. A flood makes the road difficult or impossible to navigate. A tree falls and blocks the road. The car approaches the scene of a car accident or a construction zone. A boulder falls onto a mountain road. A section of new asphalt has no lines. You notice or suspect black ice. Drivers are fishtailing, trying to get up an icy hill. We all have our stories.

People do not learn about all these possible edge cases in driving school. Instead, we use our commonsense reasoning skills to predict actions and outcomes. If we hear an ice cream truck in a neighborhood, we know to look out for children running towards the truck. We know that when the temperature is below 32 degrees Fahrenheit, there is precipitation on the road, and we are going down a hill, we need to drive very slowly. We change our driving behavior when we see the car in front of us swerving, knowing that the driver might be intoxicated or texting. If a deer crosses the road, we are on the lookout for another deer because our commonsense knowledge tells us they travel as families. We know to keep a safe distance and handle passing a vehicle with extra care when we see a truck with an “Extra Wide Load” sign on the back. When we see a ball bounce into the street, we slow down because a child might run into the street to chase it. If we see a large piece of paper on the road, we know we can drive over it, but if we see a large shredded tire, we know to stop or go around it.

Unfortunately, no one knows how to build commonsense reasoning capabilities into self-driving cars (or into AI systems in general). Without commonsense reasoning capabilities to handle these unanticipated situations, self-driving vehicle manufacturers have only two choices. They can try to collect data on human encounters with every one of these rare phenomena and use machine learning to build systems that learn how to handle each of them. Or they can try to anticipate every one of these phenomena in advance and explicitly program the vehicle's response to each.

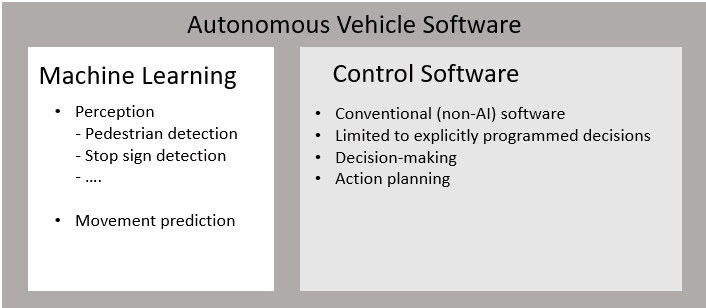

Augmenting training datasets with deepfake images might randomly produce images that depict some of these edge cases. However, these deepfake images will be of little use because self-driving car vendors, including Tesla and Waymo, do not use machine learning to make the driving decisions. Machine learning in self-driving cars is mostly used for identifying people and objects and predicting their future movements. The decision-making software is conventionally programmed. This means that every self-driving decision must be explicitly programmed rather than learned. As a result, even if images depict edge cases, every edge case still needs to be identified, and the vehicle response to each one needs to be explicitly programmed.
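To make the split between learned perception and programmed decisions concrete, here is a minimal sketch. It is not Tesla's or Waymo's software: the `Detection` class, the labels, the `RULES` table, and the `decide` function are all hypothetical, and the sketch assumes the perception model simply hands over a list of labeled objects.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Detection:
    """One object reported by the (learned) perception model."""
    label: str
    distance_m: float


# Hand-written rules (hypothetical): each situation the programmers
# anticipated is mapped to a response.
RULES = {
    "stop_sign": "stop",
    "pedestrian": "brake",
    "ball_in_road": "slow_down",
}

# Most cautious action first; used to pick one action from several.
PRIORITY = ["stop", "brake", "slow_down", "continue"]


def decide(detections: list[Detection]) -> str:
    """Return the most cautious action that any rule calls for.

    A label with no rule falls through to "continue": the programmed
    logic has nothing to say about a situation nobody anticipated.
    """
    actions = [RULES.get(d.label, "continue") for d in detections]
    return min(actions, key=PRIORITY.index, default="continue")


if __name__ == "__main__":
    print(decide([Detection("ball_in_road", 10.0), Detection("pedestrian", 5.0)]))
    print(decide([Detection("overturned_truck", 50.0)]))
```

In this sketch, better training data could help the perception model report an `overturned_truck`, but the car's behavior does not change until someone adds a rule for that label.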

What will happen when self-driving cars encounter unanticipated situations for which there is no training or programming? A scary video taken in 2020 illustrates what can happen when a self-driving car makes a bad decision. The video shows a Tesla on a Taiwanese highway approaching an overturned truck at high speed in Autopilot mode. A man is standing on the highway in front of the truck waving cars into another lane. The Tesla never slows down, the man has to jump out of the way, and the Tesla crashes into the truck at high speed.

It will be difficult, if not impossible, for self-driving car manufacturers to anticipate every edge case. This is why I have a hard time imagining self-driving cars dominating our roads anytime in the near future.
