13.0 Overview

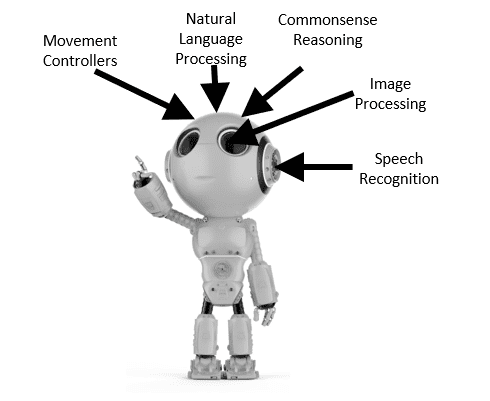

Robots can take on many shapes and forms, some of which are illustrated below:

IEEE defines 15 categories of robots:

- Aerospace: flying robots

- Consumer: robots for consumer use like Roomba and toy pets

- Disaster response: robots that perform dangerous jobs like post-earthquake building inspection

- Drones: UAVs (unmanned aerial vehicles) armed with computer vision can operate autonomously

- Education: robots designed to teach children

- Entertainment: humanoid robots that entertain such as comedian robots

- Exoskeletons: exoskeletons that help people walk

- Humanoids: like Sophia

- Industrial: warehouse arms, pick-and-place, and robots that move items around the shop floor

- Medical: surgical robots and robot prostheses

- Military and security: robots that scout for explosive devices and carry heavy gear

- Research: robotic research designed to create new abilities (e.g. running, jumping, searching)

- Self-driving cars: cars with computers that steer and control speed without driver intervention

- Telepresence: robots that enable remote work

- Underwater: deep sea submersibles

Robots are in heavy use today in industrial automation. For example, automotive production lines contain robots that weld, glue, and paint cars. There were 517,385 new industrial robots installed in 2021 in factories around the world.

Numerous startups are developing robots for imaginative uses. For example, AstroForge is using robotics to mine asteroids for rare minerals and Universe Energy is using robotics to do the dangerous work of battery disassembly.

Goldman Sachs predicted in 2022 that humanoid robots could be a $154 billion business in 15 years. And the global market for surgical robots is predicted to be $14.4 billion by 2026.

As illustrated above, a robot might look something like a human with arms and legs or it might just be an arm or a vacuum on a set of wheels. Robot limbs have varying numbers of components that can move in different directions and that are guided by some form of controller. Controllers issue joint torques to the various joint motors. Robots that weld, glue, and paint operate in a well-defined area and are far easier to program than robots that must navigate the real world. The latter rely on sensors that provide input about the environment and on a controller that can interpret that input and direct the robot components accordingly. Robots also have actuators (e.g. robotic hands) that can effect change on the world.
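
To make the idea of a controller issuing joint torques concrete, the following minimal sketch drives a single simulated joint toward a target angle using a proportional-derivative (PD) law. The `Joint` class, the gains `kp` and `kd`, and the unit inertia are illustrative assumptions and are not taken from any particular robot.

```python
from dataclasses import dataclass


@dataclass
class Joint:
    angle: float = 0.0     # radians
    velocity: float = 0.0  # radians per second
    inertia: float = 1.0   # hypothetical unit inertia


def pd_torque(joint, target_angle, kp=20.0, kd=6.0):
    """Torque command: pull toward the target, damp the current velocity."""
    return kp * (target_angle - joint.angle) - kd * joint.velocity


def simulate(joint, target_angle, steps=2000, dt=0.005):
    """Send the controller's torque to the joint motor at every time step."""
    for _ in range(steps):
        torque = pd_torque(joint, target_angle)
        joint.velocity += (torque / joint.inertia) * dt  # semi-implicit Euler
        joint.angle += joint.velocity * dt
    return joint


elbow = simulate(Joint(), target_angle=1.0)
print(round(elbow.angle, 3))
```

A real controller coordinates many joints at once and must respect motor limits. This sketch only shows the torque-command loop for one joint.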

These sensors and actuators give robots a physical embodiment that turns them into physical agents that perceive and react to the environment. The sensors provide input to a controller. Vision sensors such as cameras and lidar give controllers the ability to “see” the world.

Touch sensors enable a controller to manipulate the environment without vision. For example, a pressure sensor might tell the controller how much pressure a robotic hand is applying to some object. Audio sensors listen for speech and give the controllers the opportunity to react to verbal commands.

Robots perform two high-level categories of tasks: Locomotion involves navigating the environment. Some robots use wheels for locomotion. Quadruped robots have four legs. And bipedal robots use two legs for moving around the environment. Manipulation tasks use robot arms to push, pick, and place objects in the environment. Robots also weld, paint, and perform many other types of object manipulations.

13.1 Optimal control theory

Most of the robots in use commercially as of the end of 2022 have software controllers created using optimal control theory which was discussed in Chapter 12. Even the amazing Boston Dynamics robots, Spot and Atlas, are programmed using control theory. Spot is a commercially available robot shaped like a dog that retails for $75,000:

First released in 2020, Spot can walk at a pace of three mph, climb, avoid obstacles, and perform pre-programmed tasks. It can be operated remotely or can be programmed with autonomous routes and actions. For example, operators can use a joystick to maneuver Spot through a route and from then on Spot can “remember” the route and walk it autonomously. It can be used for tasks like documenting construction progress, monitoring remote or hazardous environments, and providing situational awareness in environments including power generation facilities, decommissioned nuclear sites, factory floors, and construction sites. Locomotion is a particularly difficult problem for robots.

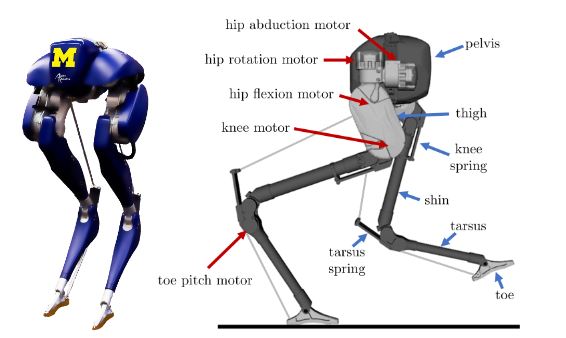

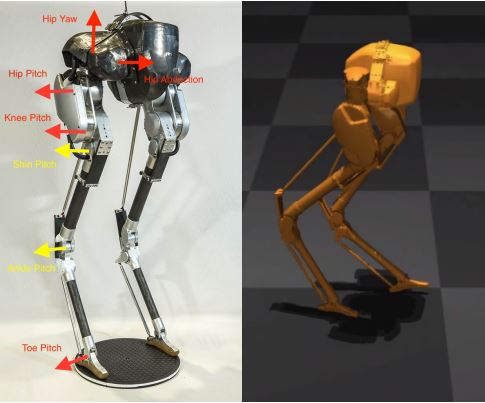

The Cassie Robot (pictured above left) has about 20 degrees-of-freedom created by the multiple joints pictured on the right. The motors that control these joints must all work in sync.

For a bipedal (two-legged) robot to walk, run, or jump, the controller must account for many different aspects of the interaction between the robot and the environment. For example, the ground impacts of the robot feet are challenging to model especially if the terrain varies.

The model needs to include force equations for the force the robot puts on each foot from different positions. It must have friction equations for the robot feet which are notoriously difficult to construct. Worse, any small error can cause the robot to be unstable. See Grizzle and Westervelt (2008) for a discussion of the challenges of creating bipedal robot control programs.

There are multiple methods of providing robots with the information they need to traverse an environment:

- Maps that provide navigation paths and provide information about the environment (e.g. locations of walkways, buildings, and other objects). The downside of using maps is that they are subject to inaccurate sensors and/or inaccurate processing of sensor data, slippage (i.e. cascading inability to know the current location), and unexpected environmental changes such as a cat in the walkway.

- Visual inputs from cameras and lidar can be processed to identify objects. However, these can be thrown off by bad weather, low light conditions, and objects that are occluded.

- Proprioceptive feedback can be used to assess the environment. For example, the robot foot pads can have sensors that help a robot maneuver through an environment.

Many robots will use more than one of the above methods. The robots must also maintain their balance, coordinate the movements of the actuators, and process both visual and proprioceptive feedback. And they face challenges like changing terrain, changing payloads, and wear and tear that degrades the performance of actuators over time.
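
The slippage problem mentioned for map-based navigation can be illustrated with a small dead-reckoning sketch. The robot assumes it walks straight ahead, while each real step picks up a small random heading error that is never corrected. The step length, noise level, and seed are hypothetical values chosen only for illustration.

```python
import math
import random


def dead_reckon(steps, step_len=0.5, heading_noise=0.02, seed=0):
    """Return the distance between believed and true position after each step."""
    rng = random.Random(seed)
    true_x = true_y = est_x = est_y = 0.0
    true_heading = 0.0
    errors = []
    for _ in range(steps):
        # The robot believes every step goes straight ahead (heading 0).
        est_x += step_len
        # In reality each step slips slightly, and the heading error accumulates.
        true_heading += rng.gauss(0.0, heading_noise)
        true_x += step_len * math.cos(true_heading)
        true_y += step_len * math.sin(true_heading)
        errors.append(math.hypot(est_x - true_x, est_y - true_y))
    return errors


errors = dead_reckon(1000)
print(errors[9], errors[-1])  # early error versus late error
```

Because the error compounds, a robot that relies on a map alone will eventually need another source of information, such as vision or proprioception, to re-establish where it is.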

They must also figure out how to traverse an environment. The problem of balance is even more pronounced for two-legged robots. Quadrupedal robots like Spot are inherently more stable than bipedal robots because they have twice the number of contacts with the walking surfaces. As a result, bipedal robots can fall much more easily than quadrupedal robots. And adding an arm to a quadrupedal robot makes control policy creation much more difficult because, as the arm swings right or left, the robot must adjust its balance (Fu et al, 2022).

That makes demonstrations of Boston Dynamics' two-legged Atlas robot even more impressive. It can run up stairs, leap onto a balance beam, vault over a beam, and perform handstands and back flips:

Every move in Atlas is part of a library of templated moves that are created using control theory. Atlas uses a dynamics model that enables it to predict how engagement of its actuators will impact the environment.

Each movement template gives the controller the ability to adjust force, posture, and behavior timing and the controller uses optimization software to compute the best way to engage its actuators to produce the desired motion. For example, this enables the controller to use the same template for jumping off a 15-inch platform as for a 20-inch platform and it enables the controller to operate on surfaces with different grip textures (e.g. wood, mats, asphalt, and grass).

More recent versions of Atlas incorporate computer vision capabilities so the controller can identify and navigate gaps, beams, and environmental objects. It also uses IMUs, joint positions, and force sensors to control its body and feel the ground for balance. The controller contains manually-programmed rules like “look for a box to jump on” and if there is a box, the jump program is executed. If there isn’t a box, the robot comes to a stop.

There are also many examples of manipulation tasks developed using control theory. Most factory robots that perform welding, painting, and other repetitive tasks are created using control theory (e.g. Podržaj, 2018) and/or tools that enable programming of exact trajectories.

Though commercial robots in 2022 are primarily programmed using control theory, considerable research is being done in academic and commercial laboratories around the world to create AI systems that can learn controllers by interacting with the world rather than needing to be manually programmed. The motivation is that manual, control-theory-based programming is resource-intensive, requires great creativity on the part of the human designer/programmer, and produces a robotic controller that will only work for a specific set of environmental conditions. Worse, the programmers need to have domain expertise.

This type of work is resource-intensive because it requires manual creation of a dynamics model. It must account for the impact of actuators on the environment. It must account for the degrees-of-freedom of the robotic parts. And it must incorporate equations about motion (i.e. a kinematics model) and other aspects of the real-world environment.

There are many toolkits that help roboticists with the complex non-linear programming calculations required including the open source FROST and DRAKE toolkits. Two promising approaches to solving some of these issues are reinforcement learning and imitation learning. Robots trained with reinforcement learning explore the environment in search of reward signals. Robots trained with imitation learning use a teacher who demonstrates how to perform a task.

13.2 Reinforcement learning for robotics

Reinforcement learning for robotics differs from reinforcement learning for game playing. Robotics applications create several issues for the use of reinforcement learning:

First, reinforcement learning, like supervised learning, requires huge amounts of data. The amount of data required to train a policy increases with task complexity. However, it is often difficult to obtain a large number of trials in a robotics setting because the robots and/or other industrial automation equipment are expensive to run and maintain.

Second, whereas a dynamics model is readily available in game playing, one is not available in most real-world environments. So a reinforcement learning algorithm must either learn a dynamics model (model-based reinforcement learning) or use model-free reinforcement learning.
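
As a rough illustration of the model-free option, the sketch below uses tabular Q-learning on a tiny five-state corridor. The learner only ever sees sampled transitions returned by `env_step` and never consults a dynamics model. The corridor, its reward, and all hyperparameters are hypothetical choices made for this example.

```python
import random

N_STATES, GOAL = 5, 4
ACTIONS = (-1, +1)  # move left, move right


def env_step(state, action):
    """Hypothetical environment; the learner never looks inside this function."""
    nxt = min(max(state + action, 0), N_STATES - 1)
    reward = 1.0 if nxt == GOAL else 0.0
    return nxt, reward, nxt == GOAL


def q_learning(episodes=500, alpha=0.5, gamma=0.9, seed=0):
    rng = random.Random(seed)
    q = [[0.0, 0.0] for _ in range(N_STATES)]
    for _ in range(episodes):
        state = 0
        for _ in range(100):
            a = rng.randrange(2)  # explore with uniformly random actions
            nxt, reward, done = env_step(state, ACTIONS[a])
            # Model-free update: uses only the observed (s, a, r, s') sample.
            target = reward if done else reward + gamma * max(q[nxt])
            q[state][a] += alpha * (target - q[state][a])
            state = nxt
            if done:
                break
    return q


q = q_learning()
greedy = [ACTIONS[row.index(max(row))] for row in q[:GOAL]]
print(greedy)
```

A model-based method would instead fit a predictor of `env_step` from the same samples and plan with it, which can reduce the number of real trials at the cost of model error.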

Third, it’s complicated to obtain the current state of the environment. The robot can provide the position of each actuator but it has to also process exteroceptive sensor input such as camera input to determine the position of objects in the environment.

Fourth, defining a reward function for many robotics tasks is challenging. What should the reward function be for turning on a light switch or preparing a meal? In the laboratory, human researchers can indicate when the light switch has been successfully turned on and can decide when a meal is ready and if it tastes good. That won’t work in a real-world environment where a researcher is not present to provide feedback. In contrast, in an Atari game, the reward function is simple. A reward is a scored point.

Fifth, unlike supervised learning, it’s difficult to create reusable datasets for robotic reinforcement learning because every robot has a different physical configuration. As a result, specialized data collection is required for each type of robot.

Sixth, many real-world tasks require completion of multiple sub-tasks. If you ask a future household robot to “clean and slice an apple”, it will need to break down that request into multiple sub-tasks such as “go to the island”, “pick up an apple from the basket on the island”, “go to the sink”, “run the water”, “put the apple under the water”, “rub the apple to clean it”, “tear off a paper towel”, “rub the apple with the paper towel to dry it”, “go to the island”, “open the bottom drawer”, “take out a cutting board”, “go to the sink”, “place the cutting board on the counter near the sink”, “go to the utensil drawer”, “pick up a knife”, and so on. Tasks that require completion of multiple sub-tasks are known as long-horizon tasks.

Finally, real-world robots need to be able to navigate changes in the environment such as different object colors and textures and layout changes. If the training environment doesn’t include all these variations, the training methodology needs to incorporate some means of generalization.

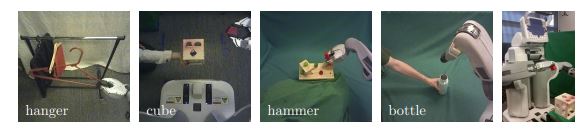

That said, it is possible to use reinforcement learning to directly learn simple real-world tasks. Model-based reinforcement learning techniques such as guided policy search have been successfully used to create controllers in laboratory settings for tasks such as inserting a block into a shape sorting cube, screwing a cap onto a bottle, fitting the claw of a toy hammer under a nail with various grasps, and placing a coat hanger on a rack (Levine et al, 2016) as illustrated below:

Model-free reinforcement learning techniques have been used for tasks such as opening doors (Gu et al, 2016) and stacking Lego blocks (Haarnoja et al, 2018). Consider the problem of training robots to grasp objects using camera images. This can be accomplished by manual labeling of the grasp points on images and then using supervised learning to identify grasp points on images (e.g. Lenz et al, 2014). However, it is expensive to label the images and this issue is compounded by the fact that there are often multiple possible grasp points on an object.

Alternatively, correct grasp points can be collected along with real images using self-supervised learning techniques. For example, Pinto and Gupta (2015) had a robot use trial-and-error to attempt to grasp a variety of objects on a table top and lift them up. When sensor readings indicated that the object was indeed lifted up, it recorded the location of the grasp points on the image. By doing so, these researchers were able to generate 50,000 data points for training. Then they used these data points to train a convolutional neural network that was deployed on a real-world robot. With this approach, the robot was able to grasp 73% of the objects that were used for training and 66% of new objects that had not been used in training.
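
The self-supervised data collection idea can be sketched as a loop in which the label for each grasp attempt comes from a sensor rather than from a human annotator. Everything here is a simplified stand-in: `lift_sensor`, the one-dimensional grasp location, and the hidden `handle_center` are hypothetical, and recording failed attempts as negative examples is an assumption of this sketch.

```python
import random


def lift_sensor(grasp_x, handle_center, tolerance=0.1):
    """Stand-in for the sensor reading that says the object left the table."""
    return abs(grasp_x - handle_center) <= tolerance


def collect_grasp_data(num_objects=200, attempts_per_object=10, seed=0):
    rng = random.Random(seed)
    dataset = []  # (object_id, grasp_x, lifted) triples
    for obj in range(num_objects):
        handle_center = rng.uniform(0.2, 0.8)  # unknown to the robot
        for _ in range(attempts_per_object):
            grasp_x = rng.random()  # trial-and-error grasp location
            lifted = lift_sensor(grasp_x, handle_center)
            dataset.append((obj, grasp_x, lifted))  # the sensor supplies the label
    return dataset


data = collect_grasp_data()
positives = [row for row in data if row[2]]
print(len(data), len(positives))
```

The resulting labeled attempts could then be fed to a supervised learner. On a real robot each row would pair a camera image patch with the outcome, which is why collecting tens of thousands of rows takes hundreds of robot hours.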

A group of Google researchers (Levine et al, 2015) used a similar trial-and-error data collection methodology. Instead of predicting grasp points, their system learned to predict whether or not a successful grasp would occur. They found some success in tasks such as inserting objects into containers and screwing caps onto bottles.

The primary limitations of this end-to-end approach are high training cost for each task and lack of generalization to new objects and settings. While effective, these real-world training methods are very expensive. The Pinto and Gupta research required 700 hours of robot grasping attempts. The Levine et al work required 800,000 grasp attempts over a two-month period.

Moreover, the resulting grasping capabilities are only effective for the specific objects and the specific table top environments. Unfortunately, collecting data in this fashion is mostly only practical in a laboratory setting and is much less practical in the real world. For example, real-world training techniques for tasks like locomotion are so expensive they are impractical. A robot that attempts to walk or run using trial-and-error will fall over and break after a relatively small number of attempts. Even for tasks like picking and placing objects, where breakage isn’t an issue, collecting sample data is very time-consuming and places a great deal of wear and tear on the robots.

13.3 Imitation learning

One way to manage the challenges discussed in the previous section is for the robot to learn from an expert who demonstrates how to do a task. When we teach a young adult to drive, we don’t give them a reward function. Instead, we demonstrate how to drive (Abbeel and Ng, 2004). This type of training involves observing a teacher agent that is assumed to be following an optimal policy and maximizing its rewards. Importantly, no explicit reward function is required.

Learning by copying the behavior of an expert (or “teacher”) is known as imitation learning (Schaal, 1999) or learning from demonstration (Argall et al, 2008). One important aspect of imitation learning is that the teacher agent is often a person who is a domain expert (i.e. knows how to do a task) but is not knowledgeable about machine learning. Data about the state of the environment and the state of the robot are gathered using the robot’s own sensors and data on the robot’s own state (e.g. the position and movement of its joints).

By recording this state and action information and feeding this data into a supervised learning algorithm, the robot can learn a policy that enables it to perform the behavior. Imitation learning can be much more efficient than reinforcement learning because there is no need for resource-consuming exploration. There is also no need to define reward functions which can be important in environments where reward functions are difficult to specify or cannot be used for training. For example, the best reward function for a surgical robot might involve patient outcomes where trial-and-error training is impossible. Imitation learning has been successfully used in a wide variety of tasks including robot arm tasks as diverse as picking-and-placing objects, playing ping pong, and simulating helicopter maneuvers.
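
The record-then-fit recipe can be shown in a few lines. In this minimal sketch a hypothetical teacher steers back toward a lane center, the state-action pairs are logged, and a linear policy is fitted with ordinary least squares. The one-dimensional state, the `expert_policy` function, and the linear policy class are all assumptions made for illustration.

```python
import random


def expert_policy(state):
    """Hypothetical teacher: steer back toward the lane center at 0."""
    return -0.8 * state


def record_demonstrations(n=200, seed=0):
    """Log (state, action) pairs while the teacher performs the task."""
    rng = random.Random(seed)
    states = [rng.uniform(-1.0, 1.0) for _ in range(n)]
    return [(s, expert_policy(s)) for s in states]


def fit_linear_policy(pairs):
    """Ordinary least squares for action = w * state + b."""
    n = len(pairs)
    mean_s = sum(s for s, _ in pairs) / n
    mean_a = sum(a for _, a in pairs) / n
    cov = sum((s - mean_s) * (a - mean_a) for s, a in pairs)
    var = sum((s - mean_s) ** 2 for s, _ in pairs)
    w = cov / var
    return w, mean_a - w * mean_s


w, b = fit_linear_policy(record_demonstrations())
cloned_action = w * 0.5 + b  # what the learned policy does in state 0.5
print(w, b, cloned_action)
```

No reward function and no exploration appear anywhere in the sketch, which is the source of the efficiency advantage described above.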

Finally, imitation learning is sometimes the entire learning algorithm and is sometimes used as part of a bigger reinforcement learning algorithm. One can imagine that training a robot to do a task using a policy-based or value-based algorithm that starts with a random initialization could be difficult. The robot would need to flail around quite a bit before starting to get rewards and that could be very time-consuming and very expensive. This is even true for model-based systems that learn a model. As a result, many robotics algorithms use imitation learning algorithms to create a starting point for the algorithm to prevent the initial flailing around.

13.3.1 Kinesthetic feedback and teleoperation

One method of avoiding the correspondence problem is using kinesthetic feedback. This method involves a human guiding a robot by physically helping the robot make the correct movements. Kinesthetic feedback often involves guiding a robot arm to perform a task such as pouring a glass of water (Pastor et al, 2009), playing ping pong (Muelling et al, 2007), or box opening, pick-and-place, and drawer opening (Rana et al, 2017).

One issue with kinesthetic feedback is that it can be physically demanding to guide a robot hand for a large number of interactions. It can also be unintuitive especially where the degrees of freedom of the robot arm differ from a human arm. This is known as the correspondence problem.

Finally, physically guiding the robot arm can block the teacher’s and/or the robot’s vision. Another approach is teleoperation (i.e. remote operation). For example, researchers (Rahmatizadeh et al, 2017) trained a robot arm using remote operation to perform multiple tasks using a visual image to specify which task the robot should perform. The tasks included picking up a towel, wiping an object, and then putting the towel back in its original location. They also showed that training on multiple tasks improved performance on all the tasks. This improvement likely resulted from higher levels of exposure to individual objects and a common structure for the tasks. Teleoperation can be enhanced by the use of virtual reality headsets that give the human operator more accurate control over the robot (e.g. Zhang et al, 2018).

One difficulty with imitation learning is that the resulting policy often only works for environmental states encountered during training. As a result, many demonstrations can be required to achieve robust performance. For example, Zhang et al (2018) used 200 demonstrations for a reaching task, 180 for a grasping task, and so on. One way to increase robustness is to inject small amounts of noise into the supervisor’s policy while demonstrating (Lee et al, 2017). This forces the supervisor to demonstrate how to recover from these errors.
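
The effect of noise injection can be seen in a toy demonstration loop. In this sketch the executed action is the supervisor's intended action plus Gaussian noise, while the recorded label is the intended (noise-free) action. That labeling choice, the `supervisor` function, and the noise level are assumptions of this example rather than details taken from the cited work.

```python
import random


def supervisor(state):
    """Hypothetical expert: drive the state back toward zero."""
    return -0.5 * state


def demonstrate(noise_std, steps=50, seed=0):
    rng = random.Random(seed)
    state, data = 0.0, []
    for _ in range(steps):
        intended = supervisor(state)
        data.append((state, intended))  # label is the corrective, noise-free action
        executed = intended + rng.gauss(0.0, noise_std)
        state += executed  # injected noise pushes the state off course
    return data


def state_spread(data):
    """Width of the range of states covered by a demonstration."""
    states = [s for s, _ in data]
    return max(states) - min(states)


clean = demonstrate(noise_std=0.0)
noisy = demonstrate(noise_std=0.2)
print(state_spread(clean), state_spread(noisy))
```

The clean demonstration never leaves the ideal state, so it contains no examples of recovery. The noisy one visits off-course states and records what the supervisor would do in each of them.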

That said, for many commercial applications, generalization is not required. For example, to train a robot arm to perform welding on a specific part, the part to be welded will be in exactly the same position every time and the robot only needs to learn the exact welding trajectories.

13.3.2 Behavioral cloning

In behavioral cloning, a teacher provides a demonstration and the system learns the expert’s policy directly using supervised learning. For example, in 1992, a group of Australian and UK researchers (Sammut et al, 1992) trained an autopilot to fly a plane in a simulator. The method used was to record an expert taking actions such as moving the control stick and changing the thrust or flaps settings. Each time the pilot took an action, 20 data points were logged and a supervised learning algorithm (a decision tree algorithm) was used to predict which action the pilot would take based on the data points. This algorithm was then used to fly the plane in the simulator.

An even earlier example of this technology was the ALVINN program (Pomerleau, 1989) which was an early self-driving vehicle that learned to stay in a lane by observing a human driver’s actions.

Similarly, LeCun et al (2006) trained a convolutional network using supervised learning by recording driving simulator images and the steering angle chosen for each image by a human driver. The system was trained to make the same steering angle choices. The studies mentioned above as examples of kinesthetic feedback and remote operation all used behavioral cloning.

Google researchers (Florence et al, 2021) used behavioral cloning with an implicit model in which the inputs are both observations and actions rather than an explicit model in which the inputs are just observations. In both types, the output is the predicted actions. The implicit model is also energy-based. It computes a single number that is low for expert actions and high for non-expert actions. On six of seven D4RL tasks, implicit behavioral cloning outperformed the best previous method for offline reinforcement learning.
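
The difference between the two model types can be sketched as follows. The explicit policy maps an observation straight to an action, while the implicit policy scores (observation, action) pairs with an energy function and acts by searching for the lowest-energy candidate. The hand-written `energy` function stands in for a trained network, and the grid of candidate actions is a simplifying assumption.

```python
def explicit_policy(obs):
    """Explicit model: observation in, action out."""
    return 2.0 * obs


def energy(obs, action):
    """Implicit model: (observation, action) in, one scalar out.

    Hand-written stand-in for a trained network; low means expert-like.
    """
    return (action - 2.0 * obs) ** 2


def implicit_policy(obs, candidates):
    """Act by picking the candidate action with the lowest energy."""
    return min(candidates, key=lambda a: energy(obs, a))


candidates = [i / 10 for i in range(-30, 31)]  # grid from -3.0 to 3.0
chosen = implicit_policy(0.7, candidates)
print(chosen, explicit_policy(0.7))
```

In this toy case both policies agree. The practical difference is that an energy function can assign low values to several distinct actions, which a single-output explicit model cannot represent.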

Berkeley and Stanford researchers (Luo et al, 2024) developed and open-sourced a behavior cloning methodology that is highly sample efficient.

One limitation of these approaches is that they assume that the training and test examples are drawn from the same distribution (Ross et al, 2011). When test and/or real-world states deviate from those experienced during training, these systems tend to fail. In other words, the system can only perform the training task under the environmental conditions present during training.

To circumvent this limitation, Meta researchers (Liu et al, 2024) are exploring the use of vision-language models (VLMs) to bridge the gap between the training and real-world environments. Their OK-Robot robotics framework contains a VLM that recognizes objects in the environment and maps them to objects from the training environment. Then, when the robot’s VLM is given a natural language command like “Move the soda can to the box”, it can successfully execute the command.

13.3.3 Inverse reinforcement learning

Inverse reinforcement learning (IRL), which was discussed in Chapter 12, can also be used for imitation learning. An expert demonstrates the movements that should be made by the robot. The IRL system assumes that the expert’s control policy is optimal and sets out to first learn the rewards that drive this optimal policy. It learns the policy based on the rewards. Then it compares the learned policy with the expert’s policy and iterates until the result is satisfactory.
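
The learn-rewards, derive-policy, compare, and iterate loop can be sketched on a five-state corridor. This is a deliberately simplified feature-matching update with one reward weight per state, swapped in for illustration and not the algorithm of any specific paper. The corridor, the always-move-right expert, and the unit step size are all hypothetical.

```python
N, GAMMA, HORIZON = 5, 0.9, 50
ACTIONS = (-1, +1)  # move left, move right


def move(s, a):
    return min(max(s + a, 0), N - 1)


def feature_expectations(policy):
    """Discounted state-visit counts when following `policy` from state 0."""
    mu, s = [0.0] * N, 0
    for t in range(HORIZON):
        mu[s] += GAMMA ** t
        s = move(s, policy[s])
    return mu


def greedy_policy(w, sweeps=200):
    """Value iteration under reward w[s], then act greedily (ties go left)."""
    v = [0.0] * N
    for _ in range(sweeps):
        v = [w[s] + GAMMA * max(v[move(s, a)] for a in ACTIONS) for s in range(N)]
    return [max(ACTIONS, key=lambda a: v[move(s, a)]) for s in range(N)]


expert = [+1] * N  # demonstrated behavior: always move right
mu_expert = feature_expectations(expert)
w = [0.0] * N  # initial reward guess
for iteration in range(20):
    policy = greedy_policy(w)       # learn a policy from the current rewards
    if policy == expert:            # compare with the expert and stop if matched
        break
    mu = feature_expectations(policy)
    # Raise the reward of states the expert visits more often than the learner.
    w = [wi + (e - m) for wi, e, m in zip(w, mu_expert, mu)]

print(policy, [round(x, 2) for x in w])
```

The recovered weights are only one of many reward functions consistent with the demonstration, which is a general property of IRL and not a flaw of this particular sketch.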

One advantage of IRL is that it avoids the correspondence problem because it is learning the reward function rather than directly learning a policy. Also, in many cases, it can be easier to learn a reward function because there are relatively small numbers of rewards compared to the numbers of states and actions.

One limitation of IRL systems is that if the real-world environment dynamics vary from the training environment dynamics, IRL often will fail to learn the reward function that produces optimal behavior. IRL systems also tend to be less efficient than behavioral cloning systems, i.e. they require more data, and do not scale as well as behavioral cloning systems to complex tasks.

13.3.4 Adversarial imitation learning

A different approach to extracting policies via imitation learning was taken by two Stanford researchers (Ho and Ermon, 2016) who used an architecture similar to a generative adversarial network. Their generative adversarial imitation learning (GAIL) system contained a generator and a discriminator. During each iteration, the current policy is used to generate samples of state-action pairs. These samples are passed to the discriminator that also receives samples from an expert’s trajectories. The job of the discriminator is to distinguish between samples produced by the expert and samples produced by the generator. After each iteration, the generator policy is updated in a way that causes it to generate samples that are more and more indistinguishable from the expert samples. Eventually, the generator will learn the expert’s policy.
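
The generator and discriminator updates can be illustrated in a stripped-down setting with no states and three actions. This is a toy sketch of the adversarial idea and not the GAIL implementation: it uses exact expectations instead of sampled trajectories, tabular logits instead of neural networks, and a plain policy-gradient step. The expert distribution and the step size of 0.5 are hypothetical.

```python
import math

ACTIONS = 3
EXPERT = [0.0, 0.0, 1.0]  # hypothetical expert: always picks action 2


def softmax(logits):
    top = max(logits)
    exps = [math.exp(x - top) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]


def sigmoid(x):
    return 1.0 / (1.0 + math.exp(-x))


theta = [0.0] * ACTIONS  # generator (policy) logits
d = [0.0] * ACTIONS      # discriminator logits; sigmoid(d[a]) = P(expert | a)
for _ in range(300):
    pi = softmax(theta)
    prob_expert = [sigmoid(x) for x in d]
    # Discriminator step: ascend E_expert[log D] + E_policy[log(1 - D)].
    d = [d[a] + 0.5 * (EXPERT[a] * (1 - prob_expert[a]) - pi[a] * prob_expert[a])
         for a in range(ACTIONS)]
    # Generator step: policy gradient with reward log D(a), so the policy is
    # rewarded for actions the discriminator mistakes for the expert's.
    reward = [math.log(sigmoid(x)) for x in d]
    baseline = sum(p * r for p, r in zip(pi, reward))
    theta = [theta[a] + 0.5 * pi[a] * (reward[a] - baseline) for a in range(ACTIONS)]

policy = softmax(theta)
print([round(p, 3) for p in policy])
```

As the policy concentrates on the expert's action, the discriminator's output for that action drifts toward one half, which is the sense in which the generated samples become indistinguishable from the expert samples.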

A related system was created by a team of University of California at Berkeley researchers (Fu et al, 2018) that learns rewards rather than policies using a form of inverse reinforcement learning. Their adversarial inverse reinforcement learning algorithm uses a GAN-like architecture to simultaneously learn the reward function and a value function. The result is a system that generalizes better to real-world environments where the dynamics differ from the training environment. The Google DeepMind team (Wang et al, 2017) also developed an alternative adversarial approach to get around the GAIL limitations.

13.4 Use of simulators

It’s relatively straightforward for a computer to use reinforcement learning to learn to play simulated games like chess, Go, and Atari games. The game environment necessarily has a built-in dynamics model. The agent that needs to learn the game doesn’t need any sensors (e.g. vision) because the state information is available from the game environment.

In contrast, in robotic environments, trial-and-error learning can be very expensive because robotic parts wear out. They are also expensive, which often means that researchers have to share robots. As a result, a fair amount of attention has been paid to learning via simulators and then transferring the learned policies to real-world environments for both grasping and locomotion and other robotic tasks.

13.4.1 Simulation environments

Researchers have developed many simulation engines that are in wide use. These include both physics engines and simulated environments and benchmarks. Physics engines simulate friction, torque, gravity, and other forces operating on shapes, bodies, and joints. Widely used engines include Nvidia’s IsaacGym (Makoviychuk et al, 2021), Unity 3D, DeepMind’s MuJoCo (Todorov et al, 2012), Google’s Brax (Freeman et al, 2021), and RaiSim. Simulated environments include:

- OpenAI Gym (Brockman et al, 2016) is a widely-used collection of simulated environments. Many of the environments are built on top of the MuJoCo physics engine and four examples are shown below:

- Habitat 2.0 (Szot et al, 2022) contains a 3D dataset of apartments with articulated objects such as cabinets and drawers that open and close. It also includes its own 3D physics engine.

- iGibson (Shen et al, 2021) contains 15 fully-interactive home-size scenes with 108 rooms populated with both rigid and articulated objects.

- Sapien (Xiang et al, 2020) is an environment that contains 46 common indoor objects with 14,000 parts. It includes its own physics engine.

- PyBullet is an open source simulator with a wide variety of environments.

- IsaacGym contains a wide variety of environments for simulated robot training.

- ThreeDWorld (Gan et al, 2021) is a multi-modal simulator that includes multi-agent interaction, navigation, and perception and includes audio in addition to visual rendering in its environments.

- AirSim (Shah et al, 2017) is a simulator for autonomous vehicles.

- Gazebo (Koenig et al, 2004) is a 3D open source environment that supports multiple robots.

- TextWorld (Côté et al, 2019) is a sandbox learning environment for the training and evaluation of reinforcement learning systems on text-based games.

- ALFWorld (Shridhar et al, 2021) is a simulator that enables agents to learn abstract, text-based policies in TextWorld and then execute goals from the ALFRED benchmark (see next section).

There are also many benchmarks and datasets that can be run in these simulators that enable researchers to measure progress. Some benchmarks that are referenced in this chapter include:

- ManiSkill (Gu et al, 2023) is a manipulation benchmark that includes its own simulator. It includes 20 manipulation task families, 2000+ objects, and 4M+ visual demonstration frames covering robot arms with both stationary and mobile bases and single and dual arms. It includes both rigid and soft body manipulation tasks.

- Ravens (Zeng et al, 2022) is a benchmark for vision-based manipulation tasks. It includes 10 tasks for manipulating objects such as blocks, boxes, rope, and piles of small objects.

- D4RL (Fu et al, 2021) is a suite of tasks and datasets for benchmarking progress in offline reinforcement learning.

- Google Scanned Objects (Downs et al, 2022) is a high-quality dataset of 3D scanned household items.

- Language-Table (Lynch et al, 2022) is a dataset of 87,000 natural language strings specifying real-world long-horizon tasks like “make a smiley face out of blocks” and “put the blocks into a smiley face with green eyes”. It contains 181,000 simulator-based videos of a robot performing the tasks and 413,000 videos of real-world demos and contains the sequence of actions the robot took during the demonstration.

- RLBench (James et al, 2019) contains 100 simulated tasks ranging in difficulty from simple target reaching and door opening, to longer multi-stage tasks, such as opening an oven and placing a tray in it.

- DeformableRavens (Seita et al, 2021) provides 12 tasks that involve using a robot arm to manipulate deformable objects such as cables, fabric, and bags. It runs in its own simulated environment.

- ALFRED (Shridhar et al, 2020) is a benchmark for learning a mapping from natural language instructions and egocentric vision to sequences of actions for household tasks. It contains expert visual demonstrations for 25k NL instructions such as “Walk to the coffee maker on the right”, “Pick up the dirty mug from the coffee maker”, and “Turn and walk to the sink”. It includes 120 interactive household scenes and 8055 expert demonstrations averaging 50 steps each for a total of 428,322 image-action pairs in an AI2-THOR 2.0 simulator.

13.4.2 Examples of training in simulators

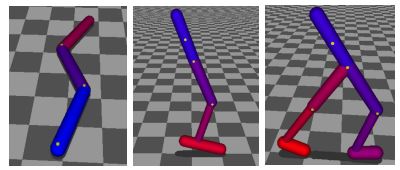

Progress in training robots in simulated environments has been impressive. Berkeley researchers (Levine and Koltun, 2014) showed that a guided policy search algorithm could be used to train a 2D robot to walk on uneven terrain. A follow-on project (Schulman et al, 2015) showed that trust region policy optimization could be used to train 2D robot models to swim, hop, and walk. Examples of the 2D robot models are shown below:

OpenAI researchers (Schulman et al, 2017) were able to train 3D robot models on locomotion tasks using proximal policy optimization. These efforts used simulated robots that have little in common with real-world robots. Xie et al (2018) designed a simulated robot in MuJoCo that was a realistic model of the Cassie robot:

They were able to successfully train the simulated version of Cassie to walk at various speeds using reinforcement learning. However, they did not tackle the challenge of moving the learned policy to the real-world Cassie.

A group of Berkeley researchers (Peng et al, 2018) developed a form of imitation learning that can teach a simulated humanoid to perform acrobatics from watching videos. They started with a state-of-the-art system for deriving the pose (i.e. the 3D joint angles and body shape) of humans from individual frames of the video (Kanazawa et al, 2018) developed by their Berkeley colleagues. When the pose estimates were run through the imitation learning algorithm, the humanoid figures were able to do somersaults and other acrobatic skills. However, this technique was only demonstrated on simulated humanoids, not on real-world robots.

Simulators are also used for work on robotic manipulation. For example, researchers (Rajeswaran et al, 2018) have made progress studying the use of reinforcement learning for gripping and other manipulation tasks.

13.4.4 The reality gap

While great progress has been made using reinforcement learning to train robots in simulated environments, researchers are still trying to figure out how to transfer what is learned in a simulator to the real world. The main difficulty is that the dynamics of the simulated environment are different than the real-world dynamics. While there are simulators for many aspects of physics (e.g. force, gravity, friction, collisions, and torque), it is difficult to simulate these physics phenomena accurately.

And there are aspects of physics that are difficult to simulate at all. Rigid objects such as a metal cube are much easier to simulate than deformable objects such as a human hand or a piece of cloth. Where the pose of a rigid object is fully described by its position and orientation, the arrangement of a hand or cloth has many more degrees of freedom and is much harder to model. Similarly, it’s difficult to simulate fluids and complex real-world objects like zippers and books. As a result, the simulated and real-world robots will typically have significant differences.

The physics in the simulator will also differ significantly from the real-world physics, especially for deformable objects. Video games appear to have physics elements; however, the physics in these systems are for imaginary environments and don’t exactly match real-world physics. The simulated robot’s visual, tactile, and auditory sensory inputs also differ from those encountered by a real-world robot. For example, for locomotion, the terrains will typically differ from the simulator to the real world. Worse, it is difficult and sometimes impossible to collect real-world data for use in simulators. Attempting to collect walking data from an untrained robot can cause it to fall repeatedly, which can cause expensive damage. These issues are collectively known as the reality gap.

The next section will explore the methods researchers are using to bridge the reality gap and the progress to date.

13.5 Bridging the reality gap

Researchers have explored many different methods of bridging the reality gap to enable policies trained in a simulator to transfer to real-world robots. This is also known as sim2real transfer.

13.5.1 Domain adaptation

Domain adaptation focuses on adapting a model learned in the simulation environment so that it functions well in the real-world environment.

Pixel-level domain adaptation takes images from the simulated environment and makes them look more like real-world images by learning a generator function.

Feature-level domain adaptation learns features that are common to both the simulation and real-world environments.

Google Brain researchers (Bousmalis et al, 2017) studied both domain adaptation techniques: a form of pixel-level domain adaptation they termed GraspGAN and a form of feature-level domain adaptation known as domain-adversarial training (Ganin et al, 2016). Using these techniques, they were able to reduce the number of real-world samples needed to achieve a given level of performance by up to 50 times.

Other researchers did the reverse (Zhang et al, 2019). Rather than try to improve the fidelity of simulated images, they generated versions of the real-world images in the style of simulated images and termed this approach VR-Goggles. They found that for each new real-world environment, they only needed to collect around 2000 real-world images and train a VR-Goggles model on these images. As a result, they didn’t need to retrain the visual control policy for new environments.

Another approach, termed neural-augmented simulation (Golemo et al, 2018), is to use data collected from a robot operating in a real-world environment to train a model to predict the differences in the simulated and real-world trajectories. This trained model is then used as an input to the reinforcement learning algorithm in a simulated environment. The result is better transfer from the simulation environment to the real-world environment.
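The idea of learning the difference between simulated and real trajectories can be illustrated with a minimal sketch. It makes strong simplifying assumptions: the state is a single number, the correction is a straight line fitted by least squares rather than the neural network used by Golemo et al, and the toy dynamics and all function names are hypothetical.

```python
def toy_sim_step(state, action):
    """Hypothetical simulator: an idealized model of the robot."""
    return state + action


def toy_real_step(state, action):
    """Hypothetical real robot: moves a little less than the simulator predicts."""
    return 0.9 * (state + action) + 0.05


def fit_residual(sim_next, real_next):
    """Closed-form least-squares fit of (real - sim) as a * sim + b."""
    n = len(sim_next)
    residuals = [r - s for s, r in zip(sim_next, real_next)]
    mean_x = sum(sim_next) / n
    mean_y = sum(residuals) / n
    var_x = sum((x - mean_x) ** 2 for x in sim_next)
    cov_xy = sum((x - mean_x) * (y - mean_y) for x, y in zip(sim_next, residuals))
    a = cov_xy / var_x
    b = mean_y - a * mean_x
    return a, b


def augmented_step(state, action, a, b):
    """Simulator prediction plus the learned correction."""
    predicted = toy_sim_step(state, action)
    return predicted + a * predicted + b


# A few (state, action) pairs "recorded" on the real robot.
recorded = [(0.0, 1.0), (1.0, 0.5), (2.0, -1.0), (0.5, 2.0)]
sim_next = [toy_sim_step(s, u) for s, u in recorded]
real_next = [toy_real_step(s, u) for s, u in recorded]
a, b = fit_residual(sim_next, real_next)
```

A policy would then be trained against `augmented_step` instead of the raw simulator, so that the trajectories it sees during training are closer to those of the real robot.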

Bi-directional domain adaptation combines VR-Goggles and neural-augmented simulation. Truong et al (2021) showed that bi-directional domain adaptation in a navigation task required only 5000 real-world samples to match the performance of a policy trained in simulation and fine-tuned with 600,000 real-world samples.

Another group of researchers (Kadian et al, 2020) developed a measure that predicted how well a simulated model will transfer to a real-world robot. They then tuned the parameters of the simulation model to increase the measure and this resulted in better sim2real transfer for a navigation task.

Chebotar et al (2019) alternated simulation with real-world training on a robot to develop policies. After each round of real-world training, they updated the simulation so that the policy behavior in simulation matched the real-world behavior. By doing so, they were able to successfully transfer policies from the simulator to the real-world robots for opening a cabinet drawer and a swing-peg-in-hole task.

Google researchers (Abeyruwan et al, 2022) used a similar approach to learn human behaviors rather than just collecting real-world physical data. By doing so, they were able to train a real-world robot to play ping pong against a human player.

Arndt et al (2019) used meta learning to train a robot to adapt to real-world changes in friction.

Another interesting approach is to avoid the sim2real issue altogether and train robots in the real world in a way that prevents damage.

Another group of Google researchers (Yang et al, 2022) trained a quadruped robot in the real world using two policies. One policy was a “learner policy” designed to learn locomotion skills. The other policy was a “safe recovery policy” that prevents the robot from entering unsafe states. Other Google researchers (Smith et al, 2021) trained a quadruped robot to right itself after falling so that it could continue learning locomotion skills.

Source: Google Research
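The two-policy arrangement described for Yang et al can be sketched as a simple switch between a learner policy and a safe recovery policy. This is only a toy illustration: the one-dimensional "tilt" state, the threshold, the perfect one-step prediction, and both stand-in policies are assumptions made for the example, not details from the paper.

```python
# Hypothetical toy setup: the state is the robot's body tilt in radians.
TILT_LIMIT = 0.5  # assumed safety threshold


def is_unsafe(tilt):
    return abs(tilt) > TILT_LIMIT


def predict_next_tilt(tilt, command):
    """Assumed (perfect) one-step model of how a command changes the tilt."""
    return tilt + command


def learner_policy(tilt):
    """Stand-in for the policy being trained; here a fixed exploratory command."""
    return 0.3


def safe_recovery_policy(tilt):
    """Stand-in for a conservative controller that returns the tilt to zero."""
    return -tilt


def select_action(tilt):
    """Use the learner unless its proposed command would lead to an unsafe state."""
    proposed = learner_policy(tilt)
    if is_unsafe(predict_next_tilt(tilt, proposed)):
        return "recovery", safe_recovery_policy(tilt)
    return "learner", proposed


def run_episode(initial_tilt, steps):
    tilt, log, max_abs_tilt = initial_tilt, [], abs(initial_tilt)
    for _ in range(steps):
        source, command = select_action(tilt)
        log.append(source)
        tilt = predict_next_tilt(tilt, command)
        max_abs_tilt = max(max_abs_tilt, abs(tilt))
    return log, max_abs_tilt


log, max_abs_tilt = run_episode(0.0, 4)
```

In this toy run the learner acts until its next command would push the tilt past the limit, at which point the recovery policy takes over, so the robot keeps collecting experience without ever entering the unsafe region.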

13.5.2 System identification

Another approach to closing the sim2real gap is to move simulated robot environments beyond physics engines by incorporating more realistic models of the real-world robot actuator dynamics and sensor inputs (e.g. Kaspar et al, 2020). The goal of system identification is to reduce or eliminate the reality gap by improving the fidelity of the simulation, i.e. making the simulation environment more similar to the real-world environment.

System identification is obviously a key component of using optimal control theory in robotics. And it is an important component of creating simulators. Deep learning is an important addition to the system identification toolbox due to its ability to estimate complex functions.

13.5.3 Domain randomization

Research on supervised and unsupervised deep learning has shown that increasing the amount and breadth of training data leads to better performance and generalization. Unfortunately, it is difficult to collect data from expensive real-world robots.

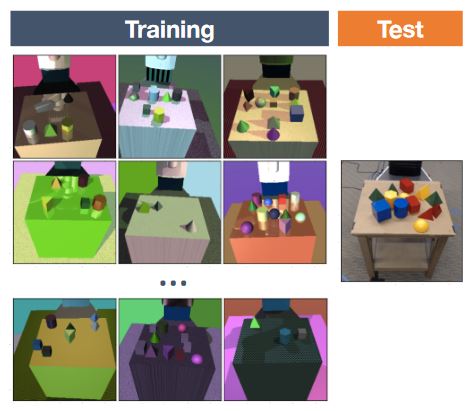

One approach to increasing the amount and diversity of data in simulations is domain randomization. The basic approach is to create additional data by randomly perturbing visual and/or dynamics parameters. By perturbing visual parameters such as lighting, texture, and color, the goal is to make the learned policy more robust to visual differences between the simulated and real-world visual input. By perturbing dynamics parameters such as friction and forces, the goal is to make the learned policy robust to dynamics differences between the simulated and real-world input. With enough variability in the simulator, the real world may appear to the model as just another variation. This variability also reduces overfitting.
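As a minimal sketch of the basic approach, the snippet below draws a fresh set of visual and dynamics parameters for every training episode. The parameter names and ranges are hypothetical, and a real setup would pass the sampled values to the simulator before each rollout.

```python
import random

# Hypothetical parameter ranges; real ranges depend on the robot and simulator.
VISUAL_RANGES = {
    "light_intensity": (0.3, 1.5),
    "texture_id": (0, 99),        # integer index into a set of random textures
    "hue_shift": (-0.5, 0.5),
}
DYNAMICS_RANGES = {
    "friction": (0.5, 1.25),
    "push_force_newtons": (0.0, 20.0),
}


def sample_episode_params(rng):
    """Draw one random configuration of the simulated world."""
    params = {}
    for name, (low, high) in {**VISUAL_RANGES, **DYNAMICS_RANGES}.items():
        if isinstance(low, int):
            params[name] = rng.randint(low, high)   # discrete choice
        else:
            params[name] = rng.uniform(low, high)   # continuous value
    return params


rng = random.Random(0)
episodes = [sample_episode_params(rng) for _ in range(5)]
for params in episodes:
    print(params)
```

Because every episode sees a different combination of lighting, texture, friction, and disturbance, the policy cannot rely on any one setting being exactly right.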

Tobin et al (2017) demonstrated the feasibility of this approach using an object localization task based on visual images. They were able to train a real-world object detector that was accurate to 1.5 cm and robust to distractors and partial occlusions using only data from a simulator with non-realistic random textures as illustrated below:

Peng et al (2018) were able to demonstrate that domain randomization can work by randomly changing various visual parameters (e.g. textures, lighting, and camera position) in the simulator during the learning process. Then, when the learned model is run in the real world, it is more robust and better able to adapt to real-world physics.

University of Washington researchers (Mordatch et al, 2018) created an ensemble of models by perturbing the dynamics parameters of the initial model that was trained in the simulator. They were able to successfully deploy the resulting trajectories created by this ensemble on real-world robots which successfully walked forwards and sideways, turned, and got up from the floor. It should be noted that this work used an optimization method known as contact-invariant optimization (Mordatch et al, 2012) and did not use reinforcement learning.

Google researchers (Tan et al, 2018) applied both domain randomization and a higher fidelity model. They created a realistic simulated model of a real-world quadruped robot that had an accurate actuator dynamics model to improve fidelity and also made random changes to the environment to improve robustness. When the model was transferred to the real-world quadruped robot, it successfully performed both trotting and galloping.

Oregon State researchers (Siekmann et al, 2021) successfully trained a headless (no vision) Cassie robot in simulation to climb stairs using only proprioceptive feedback. The key to success was randomization of the terrains during training.

A group of Berkeley researchers (Li et al, 2021) developed a 3D simulation for the bipedal Cassie robot in a MuJoCo simulator. They combined a library of gaits coded in FROST with reinforcement learning to train the simulated Cassie robot to walk while following commands for frontal and lateral walking speeds, walking height, and turning yaw rate. By training a policy to imitate a collection of diverse gaits and using domain randomization, they were able to effect a sim2real transfer to a real-world Cassie robot that was robust to modelling error, perturbations, and environmental changes.

Other researchers have successfully used perturbations of many other simulation parameters including randomization of physics properties such as inertia and friction, delayed actuations, and efficiency coefficients of motors. For a comprehensive review of domain randomization techniques see Muratore et al (2021).

13.6 Generalization

While the sim2real techniques discussed in the last section are gaining traction, their primary limitation is that, by themselves, they only work for a specific robot performing a specific task in a specific environment. There is no generalization to other robots, other tasks, or other environments. Robots of the future will need to do many tasks that involve locomotion and object manipulation. A general purpose robot should be able to follow instructions for tasks it has not previously performed, handle environments that differ from the training environment(s) (e.g. navigating around pets and climbing stairs), and manipulate unfamiliar objects in unfamiliar poses (i.e. orientations).

13.6.1 Adaptation to different robot morphologies

Robots have different morphologies that enable them to be effective in particular environments. For example, manipulation-oriented robots have arms with varying degrees of freedom and different end effectors (i.e. hands), and must be capable of manipulating both rigid and deformable objects. Additionally, they might have a stationary base vs. a mobile base and they might have a single arm vs. a dual arm configuration.

Locomotion-oriented robots have different numbers of legs with different types of feet. A policy trained for a robot with four legs can be difficult to transfer to a robot with two legs. One way to get around this issue is to separate the high-level aspects of the locomotion task from the low-level aspects.

Facebook researchers (Truong et al, 2021) used a hierarchical network to separate learning of the navigation policy from the low-level dynamics of legged robots, such as maximum speed, slipping, and contacts. The policy learned to navigate cluttered indoor environments on quadruped robots in simulation and was able to adapt quickly to a real-world hexapod robot.

The MetaMorph approach (Gupta et al, 2022) used multi-modal self-supervised learning in which the different robot morphologies were just treated as another modality. These researchers also used this approach in the UNIMAL (Gupta et al, 2021) design environment, which is a design space for constructing robots with dramatically different morphologies (i.e. numbers and types of arms and legs) from a set of modular components. These modular components enable specification of over 10^18 different robots. They created 100 robots that were trained in a MuJoCo simulation environment on flat terrain, varied terrain, and varied terrain with manipulation tasks. All 100 robots were jointly trained using proximal policy optimization. Training inputs included both proprioceptive information, such as the position, orientation, and velocity of each module and the position and velocity of each joint, and sensory information from camera and/or depth sensors. In addition, the training inputs included information about the morphology of the robot such as the height, radius, material density, the orientation of each child module with respect to the parent module, and joint type and property information. After training the 100 robots, zero-shot generalization was demonstrated on a set of new robots.

13.6.2 Using old data to learn new tasks

Training robots with reinforcement learning by having them explore their environments can be both expensive and dangerous. For example, the QT-Opt system (Kalashnikov et al, 2018) required over 580,000 trials with multiple robots to learn to perform a specific vision-based grasping task.

For locomotion tasks, high numbers of trials can incur additional expense due to damage to the robots and wear and tear, not to mention danger from moving robots endangering humans.

Building a general-purpose robot by having it learn many different tasks one at a time would be enormously expensive and could be dangerous. Google researchers (Kalashnikov et al, 2021) showed that by training real-world robots on multiple similar tasks simultaneously, it is possible to reduce the number of trials required for each individual task. Their MT-Opt system was able to learn a wide range of skills, including semantic picking (i.e., picking an object from a particular category), placing into various fixtures (e.g., placing a food item onto a plate), covering, aligning, and rearranging using data collected from seven real-world robots. These robots were able to learn with fewer trials than required for individual tasks and were able to acquire distinct new tasks more quickly by leveraging past experience. They pointed out that it is not surprising that learning a task like lifting a food item would be beneficial to the more difficult task of placing three food items on a plate. They also noted that sharing data could negatively impact dissimilar tasks.

Another way to reduce the number of training trials required is to reuse data generated while previously training robots to do other tasks. The basic idea is to take trial data for previous tasks and relabel them for new tasks.

Unsurprisingly, the best results come from methods that relabel data from similar prior tasks (Yu et al, 2022; Yu et al, 2021; Eysenbach et al, 2020; Cabi et al, 2020).
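The relabeling idea can be sketched in a few lines. This is a hypothetical illustration rather than the procedure of any of the cited papers: the `Transition` record, the task names, and the reward rule are all made up for the example. The key point is that the recorded observations, actions, and outcomes are kept, and only the task label and reward are recomputed for the new task.

```python
from dataclasses import dataclass, replace


@dataclass(frozen=True)
class Transition:
    observation: str
    action: str
    outcome: dict      # what actually happened in the trial
    task: str
    reward: float


FOOD_ITEMS = {"carrot", "apple"}  # hypothetical category for the new task


def lift_food_reward(outcome):
    """Reward for the new task: 1 only if the lifted object is a food item."""
    return 1.0 if outcome.get("lifted") in FOOD_ITEMS else 0.0


def relabel(transitions, new_task, reward_fn):
    """Reuse old trials for a new task by recomputing the task label and reward."""
    return [replace(t, task=new_task, reward=reward_fn(t.outcome)) for t in transitions]


# Trials originally collected for a generic "lift any object" task.
old_trials = [
    Transition("image_001", "grasp", {"lifted": "carrot"}, "lift any object", 1.0),
    Transition("image_002", "grasp", {"lifted": None}, "lift any object", 0.0),
    Transition("image_003", "grasp", {"lifted": "bottle"}, "lift any object", 1.0),
]

new_trials = relabel(old_trials, "lift a food item", lift_food_reward)
new_rewards = [t.reward for t in new_trials]
```

The third trial shows why task similarity matters: it was a success for the old task but is a failure for the new one, so it still provides useful signal without any new robot time.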

13.6.3 Generalization to new environments

Lee et al (2020) tackled the problem of teaching a quadruped to navigate a wide variety of terrains in simulation. First, a teacher model was trained using a reinforcement learning algorithm in simulation. The teacher model had access to “privileged” information, i.e. information that would not be available to the real-world robot. This information included contact states, contact forces, the terrain profile, and friction coefficients, all of which were provided by a physics model in the simulator.

The teacher model was then used to supervise the training of a student model that had access to proprioceptive robot data but not the privileged information. Further, the training was done using a curriculum that ensured the student model learned easier terrains first. The student model was then transferred to a real-world quadrupedal ANYmal robot (Hutter et al, 2016) and, with no additional training, the robot was able to traverse a wide variety of terrains including deformable terrain such as mud and snow, dynamic footholds such as rubble, and overground impediments such as thick vegetation and gushing water.
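The teacher-student arrangement can be illustrated with a deliberately tiny sketch. Everything here is an assumption made for the example: the teacher is a fixed hand-written rule rather than a policy trained with reinforcement learning, the student is a linear model rather than a neural network, there is no curriculum, and the "slip" signal stands in for proprioceptive data that indirectly reveals the privileged friction value.

```python
import random


def teacher_action(joint_angle, friction):
    """Hypothetical teacher: uses the privileged friction coefficient directly."""
    return -1.5 * joint_angle + 0.8 * friction


def observe_slip(friction):
    """Assumed proprioceptive signal that carries information about friction."""
    return 1.0 - friction


def collect_demonstrations(rng, count):
    """Run the teacher in 'simulation' and record what the student is allowed to see."""
    data = []
    for _ in range(count):
        joint_angle = rng.uniform(-1.0, 1.0)
        friction = rng.uniform(0.5, 1.0)          # privileged, hidden from the student
        target = teacher_action(joint_angle, friction)
        data.append(((joint_angle, observe_slip(friction)), target))
    return data


def train_student(data, learning_rate=0.05, epochs=300):
    """Supervised learning: regress the teacher's action from student-visible inputs."""
    w_angle, w_slip, bias = 0.0, 0.0, 0.0
    for _ in range(epochs):
        for (joint_angle, slip), target in data:
            error = w_angle * joint_angle + w_slip * slip + bias - target
            w_angle -= learning_rate * error * joint_angle
            w_slip -= learning_rate * error * slip
            bias -= learning_rate * error
    return w_angle, w_slip, bias


def student_action(weights, joint_angle, slip):
    w_angle, w_slip, bias = weights
    return w_angle * joint_angle + w_slip * slip + bias


rng = random.Random(0)
weights = train_student(collect_demonstrations(rng, 200))
```

After training, the student reproduces the teacher's behavior without ever being given the friction value, which is the property that lets it run on a real robot where that value is unavailable.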

Miki et al (2022) improved on this scheme by combining exteroceptive input (i.e. visual input) with the proprioceptive input. They used a similar teacher model to train a student. However, the student model is trained end-to-end using both exteroceptive and proprioceptive input. The resulting model was able to do an hour-long hike in the Alps in the time recommended for a human hiker.

Chen et al (2019) used a similar approach for an autonomous driving application. However, they used imitation learning to acquire the privileged information for the teacher model.

Kumar et al (2021) designed a method to train a locomotion policy for quadruped robots that generalizes to terrain that was not seen or anticipated during training. The training is done solely in simulation and there is no fine-tuning after transferring the policy to the real-world robot. The method involves training an encoder that projects the environmental conditions for each state into a latent space. This encoder is trained in simulation along with the policy that selects the appropriate actions for a given latent state. An adaptation model is then trained to predict the latent state from the recent history of the robot’s states and actions. After transfer to the real-world robot, the adaptation model predicts the latent state of the environment, which is one of the inputs to the trained policy.

13.7 Learning from video demonstration

When I have to replace a laptop battery, I might watch a YouTube video to learn how to do that task. Similarly, researchers are starting to train robots to learn from videos.

There are two major challenges to learning from video demonstration. First, there are no action labels and no reward functions. Second, there is the correspondence problem, i.e. there are differences between human and robot morphology and there are differences in the action space, viewpoint, and environment.

One way to attack these challenges (Young et al, 2020; Song et al, 2020) is to minimize the differences in human and robot morphology. Young et al had humans demonstrate object manipulation using a reacher-grabber tool that was very similar to a robot arm end-effector. The robot arm end effector looked and performed just like a reacher-grabber tool.

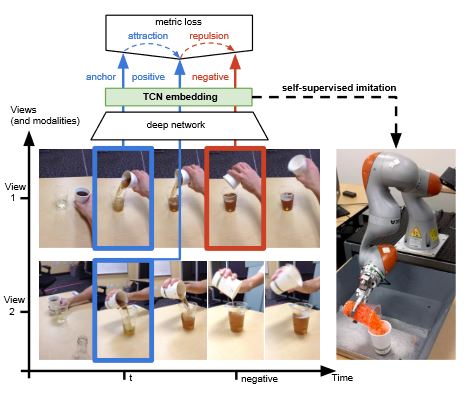

Beyond learning to perform a specific task, videos offer the opportunity for an agent to learn general principles of physics and object interactions using self-supervised learning. One of the first to do this was a group of Berkeley researchers (Finn et al, 2017) who used a meta-learning approach that enables a robot to learn to place a new object in a new container with just one visual demonstration. Sermanet et al (2018) started with a network that had been pre-trained on ImageNet so that it had a representation of object components. They further trained the network by presenting simultaneous videos of tasks from two different perspectives as illustrated below.

The objective was to learn what was similar about the frames from the same point in time (i.e. the blue frames above) and to learn what was different about frames from different points in time (i.e. the blue vs. red frames above). Following this self-supervised training, a real-world robot was able to learn pouring tasks from a single video demonstration (as illustrated above).
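The objective described above, pulling together frames from the same moment and pushing apart frames from different moments, is commonly written as a triplet loss. The sketch below shows that loss on hand-written two-dimensional embeddings; the margin value and the example vectors are assumptions, and in practice the embeddings would come from the network being trained.

```python
def squared_distance(u, v):
    return sum((a - b) ** 2 for a, b in zip(u, v))


def time_contrastive_loss(anchor, positive, negative, margin=0.2):
    """Triplet loss on frame embeddings.

    anchor:   embedding of a frame from camera 1 at time t
    positive: embedding of the frame from camera 2 at the same time t
    negative: embedding of a frame from camera 1 at a different time
    The loss is zero once the positive is closer than the negative by the margin.
    """
    return max(
        0.0,
        squared_distance(anchor, positive) - squared_distance(anchor, negative) + margin,
    )


# Embeddings that already respect time: same-time frames are close together.
good_loss = time_contrastive_loss([0.0, 0.0], [0.1, 0.0], [1.0, 0.0])

# Embeddings that group frames by viewpoint instead of by time.
bad_loss = time_contrastive_loss([0.0, 0.0], [1.0, 0.0], [0.1, 0.0])
```

Minimizing this loss over many triplets encourages an embedding that ignores viewpoint and keeps what changes as the task progresses.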

A group of Berkeley researchers (Yu et al, 2019) used videos of both humans and robots performing similar tasks. At each step, the training algorithm randomly chooses from a variety of tasks. For the selected task, the algorithm is presented with a video of a human and a video of a robot performing similar versions of the task. It first updates a policy that predicts the correct action from the human video. Then it updates the policy based on the robot video. This causes the model to learn the visual cues needed to produce the correct action. Then, with a single video demonstration from a human for a task not in the training set, a real-world robot was able to learn to place, push, and pick-and-place new objects.

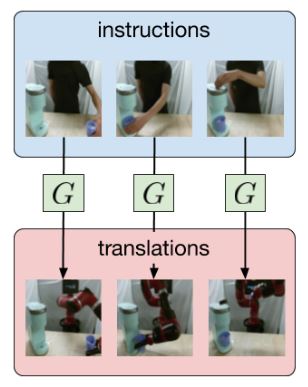

Another group of Berkeley researchers (Smith et al, 2020) created a system that worked on real-world robots. They first translated images of human demonstrators into images of robots performing the same task as illustrated below:

Rather than defining manual correspondences between the human and robot, the system learned the translation using CycleGAN (Zhu et al, 2017), which is an image-to-image translation framework. They used only a few images per task, where each image represented the goal state for one stage of the task. They then used these goal state images to define a reward function for a reinforcement learning algorithm. They named the resulting system AVID (Automated Visual Instruction-following with Demonstrations). AVID was able to learn to make coffee with only 30 human demonstrations and 180 interactions in the real-world environment.

Carnegie Mellon and Meta researchers (Bharadhwaj et al, 2023) trained a model to predict hand positions from internet videos. Then by showing a real-world robot an object image (e.g. a closed cabinet) and a goal image (e.g. an open cabinet), the robot was able to perform coarse manipulation tasks like opening and closing drawers, pushing, and tool use.

Berkeley researchers (Xiao et al, 2022) trained a vision transformer using masked modeling on a general (i.e. not task-specific) set of images. They then froze the encoder of the vision transformer and encoded robot camera images for a specific task. They paired the encoder output with proprioceptive input from the robot and used the combined data to learn a policy for a specific task using reinforcement learning. In this fashion, they were successful in using the generic encoder output to train a robot on multiple tasks.

MimicPlay (Wang et al, 2023) divides long-horizon task learning into two components. The first component uses self-supervised learning to predict hand trajectories by watching human play videos. For example, a human might be asked to interact with interesting objects in a kitchen environment. The videos might show the human operator opening the oven and pulling out the tray or picking up a pan and placing it on the stove. Multiple cameras record the video from different locations so that the system learns an orientation-independent representation of the human play activity (similar to Sermanet et al, 2018).

The use of human self-play videos was first introduced by Google researchers (Lynch et al, 2019). The idea is that, even though humans and robots have different morphologies, “human play data contain rich and salient information about physical interactions that can readily facilitate robot policy learning”. More importantly, human play data is much easier and much less expensive to collect than robot data collected from teleoperation of robots. The second MimicPlay component learns low-level controller actions for individual sub-tasks using teleoperated imitation learning via a smartphone that controlled a robot arm. After training, the MimicPlay system is shown a goal image and the component that watched the videos generates a sequence of actions to reach the goal state. The low-level controller then executes those actions. The system was evaluated on 14 long-horizon tasks and dramatically outperformed earlier self-play imitation learning algorithms (e.g. Cui et al, 2022).

13.8 Following natural language instructions

Most of the robotics research is focused on improving methodologies for developing better and better single-purpose robots. We can train these robots to pick up a blue ball and put it in a box on the table. But if we now want to put it in a box on the floor or to pick up a red ball, retraining is required. In contrast, we can instruct a person to perform many tasks simply by providing natural language instructions.

Using natural language to instruct robots has several challenges:

First, real-world tasks tend to be more complex than the simple tasks that are readily learned using reinforcement and imitation learning. While a robot can be trained on a simple task such as picking up a block, real-world tasks are often long-horizon tasks that require completion of multiple sub-tasks.

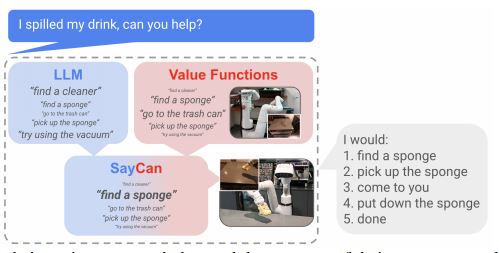

Second, robots are embodied agents, i.e. they have vision, touch, and other sensors that enable them to interact with the environment. If you say to a chatbot “I spilled my drink, can you help?” and it responds “Do you want me to find a cleaner?”, that would be an acceptable answer. However, if you say that to a kitchen robot, you expect it to clean up the mess (Ahn et al, 2022).

Third, a robot’s environment itself limits what the robot can accomplish. Every environment has certain affordances (Gibson, 1977) that a robot could use to perform certain tasks. For example, the kitchen environment has cleaning supplies and cooking equipment that a robot could use to perform tasks like cleaning and cooking. But a kitchen environment doesn’t have the space or tools for a robot to fix a broken tractor.

Fourth, many instructions require a robot to plan and perform multiple sub-tasks to satisfy the instruction.

To begin to address these issues, Google researchers (Ahn et al, 2022) explored pairing the semantic capabilities of the 540 billion parameter PaLM language model (Chowdhery et al, 2022) with a robot’s hands and vision capabilities. They provided this real-world grounding by pre-training the robot for a set of skills and then using these affordances (i.e. this skill set) to constrain the language model responses to skills the robot was capable of performing. More specifically, they used reinforcement learning to learn a value function that determined which of the learned skills could be applied at each state of the environment. By doing so, the resulting language model, named SayCan, was able to respond to the question “I spilled my drink, can you help?” with the following:

One limitation of this approach is that the embodied agent is disconnected from the language model. For example, the language model cannot use visual input from the environment to influence its responses.

Google researchers (Huang et al, 2022) enhanced the SayCan approach with the ability to ask clarification questions. For example, if the robot is instructed to “bring me the drink from the table”, it might ask the human “do you want the coke or the water?”
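The skill-selection step in SayCan described earlier, in which the language model's preferences are constrained by what the robot can actually do, can be sketched as follows. The skill names and all of the numbers are made up for illustration; in the real system the first score would come from the language model and the second from the learned value function.

```python
def choose_skill(llm_scores, affordance_values):
    """Pick the skill that is both useful for the instruction and feasible right now.

    llm_scores:        how relevant the language model rates each skill (hypothetical)
    affordance_values: how likely each skill is to succeed in the current state
    """
    combined = {
        skill: llm_scores[skill] * affordance_values.get(skill, 0.0)
        for skill in llm_scores
    }
    best = max(combined, key=combined.get)
    return best, combined


# Instruction: "I spilled my drink, can you help?"
llm_scores = {"find a vacuum": 0.5, "find a sponge": 0.4, "go to the table": 0.1}

# There is no vacuum in this kitchen, so that skill is unlikely to succeed.
affordance_values = {"find a vacuum": 0.1, "find a sponge": 0.8, "go to the table": 0.9}

best_skill, combined = choose_skill(llm_scores, affordance_values)
print(best_skill)
```

Multiplying the two scores means a skill is only chosen if the language model finds it relevant and the robot is likely to be able to carry it out in its current surroundings.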

In the video above, the Google Grounded Decoding model was instructed to “Stack only the blocks of cool colors”. It was able to decompose this natural language instruction into two sub-tasks: (1) “Pick up blue block and place it on green block”. (2) “Pick up cyan block and place it on blue block”. Then it executes both sub-tasks as shown in the video.

Another group of Google researchers (Brohan et al, 2023) developed RT-1 (Robotics Transformer 1) which further enhanced the PaLM-SayCan framework to produce more efficient inference at runtime to make real-time control feasible. To create this efficiency, high-dimensional inputs and outputs in the training data including camera images, instructions and motor commands were converted into compact token representations for use by the Transformer. RT-1 was trained on a dataset that captured data from 13 robots, ~130,000 episodes, and 700+ tasks.
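One common way to turn a continuous motor command into a token is to divide its range into a fixed number of bins and use the bin index as the token. The sketch below illustrates that idea; the bin count, the value range, and the function names are assumptions made for the example rather than details taken from the text above.

```python
NUM_BINS = 256  # assumed vocabulary size per action dimension


def tokenize(value, low, high, num_bins=NUM_BINS):
    """Map a continuous value in [low, high] to an integer token in [0, num_bins - 1]."""
    clipped = min(max(value, low), high)
    fraction = (clipped - low) / (high - low)
    return min(int(fraction * num_bins), num_bins - 1)


def detokenize(token, low, high, num_bins=NUM_BINS):
    """Map a token back to the center of its bin."""
    return low + (token + 0.5) * (high - low) / num_bins


def tokenize_action(action, low, high):
    """Tokenize every dimension of a motor command independently."""
    return [tokenize(v, low, high) for v in action]


# A hypothetical 3-dimensional gripper displacement, each dimension in [-1, 1].
action = [-1.0, 0.0, 0.3]
tokens = tokenize_action(action, -1.0, 1.0)
recovered = [detokenize(t, -1.0, 1.0) for t in tokens]
```

With this scheme a command becomes a short list of small integers, which a Transformer can predict in the same way it predicts word tokens, at the cost of a rounding error of at most half a bin width.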

RT-1 was able to accomplish 101 real-world kitchen tasks and was able to execute on 700 training instructions with a 97% success rate. It was also able to generalize to new tasks, distractors, and backgrounds 25%, 36% and 18% better, respectively, than the next best baseline system tested. The biggest improvement was for long-horizon planning tasks (8 or more steps) such as “I left out a soda, an apple and water. Can you throw them away and then bring me a sponge to wipe the table?”

One limitation of the SayCan approach is that the vision component of the embodied agent is disconnected from the language model. The vision component is used to help execute commands. However, the language model cannot use visual input from the environment to influence its responses. To get around this limitation, researchers at Berkeley and Google created InstructRL (Liu et al, 2022) which uses a multi-modal transformer that is jointly trained on sequences of images from multiple cameras, text instructions, proprioception data, and robot actions where each action consists of the position, rotation, and state of the robot gripper:

This sequential data is fed into a transformer that predicts actions, i.e. the position, rotation, and state of the gripper. InstructRL was evaluated on 74 tasks in 9 categories from the RLBench (James et al, 2019) benchmark. The system achieved state of the art performance on all 74 tasks.

Google researchers (Driess et al, 2023) developed a similar multi-modal model based on the PaLM language model (Chowdhery et al, 2022) but enhanced with embodied data and named the model PaLM-E. The model was jointly trained with text, visual embeddings from a Vision Transformer (Dosovitskiy et al, 2021), and state information regarding the objects in each image (e.g. pose, size, and color). The resulting model performed well on three real-world desktop manipulation tasks.

A team of MIT, Google, and Berkeley researchers (Du et al, 2023) created a video diffusion model that was trained autoregressively to take a video frame and a text instruction and predict the video frame that is the next step towards accomplishing the text instruction. The resulting system, UniPi, was able to generate the sequence of video frames that would accomplish the text instruction. They trained a separate model to generate actions from images (video frames). When this model was applied to the sequence of video frames, the result was the sequence of actions required to complete the trajectory. Though this approach was not tested on real-world robots, it provides a general framework for creating action plans from instructional videos.

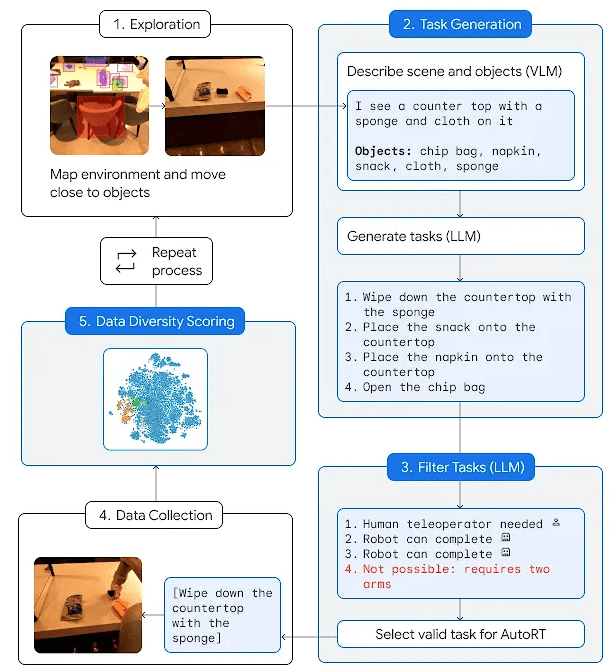

Google DeepMind researchers (Ahn et al, 2023) added a large language model (LLM) and a visual-language model (VLM) to their RT-1 (see above) and RT-2 (Brohan et al, 2023) systems and named the resulting system AutoRT. As illustrated below, AutoRT can use the VLM to identify objects within its camera range. Then, the LLM creates a list of tasks that a robot might perform using the “seen” objects.

It then determines which tasks the robot is capable of performing on its own.

Meta’s OK-Robot framework discussed above is another promising methodology for following natural language commands.

© aiperspectives.com, 2020. Unauthorized use and/or duplication of this material without express and written permission from this site’s owner is strictly prohibited. Excerpts and links may be used, provided that full and clear credit is given with appropriate and specific direction to the original content.