Reasoning

6.0 Overview

One of the fundamental characteristics of human cognition is the ability to reason. People use reasoning to solve problems and make decisions.

Reasoning starts with knowledge. People have access to their own knowledge about the world, information that can be looked up, and new information that forms the context for the problem to be solved or the decision to be made. People reason by taking the facts and applying a set of logical steps to reach a conclusion. People employ many different forms of reasoning including (but not limited to) deductive, inductive, abductive, causal, commonsense, analogical, and mathematical reasoning.

According to the ground-breaking developmental psychologist Jean Piaget (Piaget and Inhelder, 1972), logical reasoning starts developing in the concrete operational phase of child development, which occurs approximately between ages 7 and 11. In this stage, children learn to apply forms of logical reasoning such as inductive, deductive, and abductive reasoning. However, they can only apply logical reasoning to the concrete objects they encounter in the world.

Children start to acquire abstract thinking skills around age 11, when Piaget’s formal operational phase begins. Here, children start to think in terms of representations of objects rather than just the concrete objects themselves. For example, they can start to reason about hypothetical situations and use symbols to perform elementary math. They begin to imagine different uses for tools, start to form theories and make predictions, engage in hypotheticals and counterfactuals, and start to use figurative language. They start to understand analogies and humor and make jokes. Abstract thinking helps people solve problems, figure out how to handle new situations, plan ahead, and think strategically.

Most users of large language models (LLMs) would agree that LLMs at least appear to have some level of reasoning capabilities. Unfortunately, no one knows exactly how LLMs produce the amazing capabilities discussed in the last chapter.

Researchers are just starting to probe LLMs by seeing which neurons are activated in response to certain stimuli (Bricken et al, 2023) and which activations store the relationships between entities and their properties (Meng et al, 2023). That said, if research on the human brain is any indication, it may be a very long time before we learn exactly how LLMs work. Researchers have been stimulating neurons in the human brain since the pioneering work of Wilder Penfield in the 1940s and 1950s. His “maps” of brain function are still referenced today, but an understanding of how human neurons work is still far in the future.

While neither cognitive psychologists nor neurologists understand how the neurons in human brains operate to store knowledge about the world and effect human thinking and reasoning, cognitive psychologists perform experiments on people to determine how human brains work at a functional level. These experiments have provided great insights into the types of knowledge structures that people have and the types of reasoning about this knowledge that people perform every day even though they can’t localize that knowledge and reasoning down to the level of actual neurons.

Similarly, AI researchers and cognitive psychologists have probed LLMs to determine the types of world knowledge and reasoning capabilities that they acquire during language model training. In this chapter, we’ll examine the evidence regarding both the acquisition of world knowledge during training and the degree to which LLMs learn to think and reason during training.

6.1 World knowledge

The degree to which LLMs acquire knowledge about the world during pre-training has been analyzed in three ways:

- NLP benchmarks

- Academic and professional tests

- Anecdotal evidence

These types of evidence are listed in decreasing order of validity. NLP benchmarks are based on datasets that are carefully constructed by researchers, but even these are subject to some concerns about their validity.

Academic and professional tests are also carefully constructed, but their intended audience is humans rather than computers. While these are valid tests of knowledge, they shouldn’t be seen as predictors of ability to perform in the real world. For example, one can’t infer that an LLM that does well on a law exam would perform well in a courtroom.

Anecdotal evidence is by far the least reliable. These are just individual examples that shouldn’t be given anywhere near the same weight as benchmarks and exams. All of the anecdotal examples presented below were taken from a session with ChatGPT in May 2023.

6.1.1 Simple facts

Multiple LLMs have been tested against benchmarks that test their knowledge of simple facts. These datasets contain questions like

Q: Who played tess on touched by an angel?

A: Della Reese

and

Q: Where was Obama born?

A: Hawaii.

The accuracy criterion was exact match (EM): an LLM’s answer counted as correct only if it matched a reference answer exactly, and the LLMs had to answer without consulting external information sources like Wikipedia.
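Exact-match scoring is simple to state but strict. The sketch below assumes a SQuAD-style normalization (lowercasing, removing punctuation and the articles a/an/the); each benchmark’s official scorer may normalize differently, and the function names are hypothetical. It shows how a correct but more verbose answer can still be scored as wrong.

```python
import re
import string

PUNCTUATION = set(string.punctuation)


def normalize_answer(text: str) -> str:
    """Lowercase, strip punctuation, drop English articles, and collapse whitespace."""
    text = text.lower()
    text = "".join(ch for ch in text if ch not in PUNCTUATION)
    text = re.sub(r"\b(a|an|the)\b", " ", text)
    return " ".join(text.split())


def exact_match(prediction: str, gold_answers: list[str]) -> bool:
    """True if the normalized prediction equals any normalized reference answer."""
    pred = normalize_answer(prediction)
    return any(pred == normalize_answer(gold) for gold in gold_answers)


def em_accuracy(predictions: list[str], golds: list[list[str]]) -> float:
    """Fraction of questions whose prediction is an exact match."""
    hits = sum(exact_match(p, g) for p, g in zip(predictions, golds))
    return hits / len(predictions)


predictions = ["Della Reese.", "Honolulu, Hawaii"]
golds = [["Della Reese"], ["Hawaii"]]
print(em_accuracy(predictions, golds))  # 0.5: "Honolulu, Hawaii" is not an exact match for "Hawaii"
```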

Exact match accuracy scores were:

| Benchmark | GPT-3 | LLaMA 2 | PaLM 2-L |
|---|---|---|---|
| Natural Questions (Kwiatkowski et al, 2019) | 30% | 44% | 38% |
| Web Questions (Berant et al, 2013) | 42% | 44% | |

While these accuracy scores may seem fairly low, it should be pointed out that, except for trivia experts, most humans would probably also only be able to answer a fairly low percentage of these questions from memory. These results show that LLMs are acquiring world knowledge.

6.1.2 Academic subjects

GPT-4 demonstrated amazing results on a number of academic exams:

| Test | Human Percentile |
|---|---|
| GRE Verbal | 99 |
| USABO (biology) | 99-100 |
| AP Art History | 86-100 |
| AP Biology | 85-100 |
| AP Chemistry | 71-88 |
| AP Environmental science | 91-100 |
| AP Macroeconomics | 84-100 |
| AP Microeconomics | 82-100 |
| AP Physics 2 | 66-84 |
| AP Psychology | 83-100 |
| AP Statistics | 85-100 |
| AP US History | 89-100 |
| AP World History | 65-87 |
| GRE Quantitative | 80 |
| GRE Writing | 54 |
| AP Calculus BC | 43 |
| AP English Language and Composition | 14-44 |
| AP English Literature and Composition | 8-22 |

The AGI-Eval benchmark (Zhong et al, 2023) contains questions from standardized exams such as college entrance exams, some in English and some in Chinese. Scores for three LLMs on the SAT portions of AGI-Eval are shown below (Zhong et al, 2023):

| | SAT-Math | SAT-English |
|---|---|---|
| Average Human Score | 66 | 66 |
| Top Human Score | 94 | 94 |
| ChatGPT | 71 | 78 |
| GPT-4 | 95 | 86 |
| LLaMA 2 | 42 | 87 |

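Very impressive results. ChatGPT and GPT-4 beat the average human score on both tests, and GPT-4 even edged past the top human SAT-Math score (95 vs. 94). LLaMA 2 beat the average human on SAT-English but fell well below it on SAT-Math.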

Scores for GPT-4 and ChatGPT on Chinese language college entrance exams (Zhong et al, 2023) were:

| | Chinese | English | Geography | History | Biology | Chemistry | Physics | Math QA | Math Cloze |
|---|---|---|---|---|---|---|---|---|---|
| Average Human Score | 65 | 69 | 65 | 64 | 68 | 66 | 71 | 73 | 73 |
| Top Human Score | 85 | 91 | 85 | 85 | 89 | 86 | 94 | 96 | 96 |
| ChatGPT | 34 | 84 | 56 | 50 | 42 | 34 | 30 | 33 | 5 |
| GPT-4 | 45 | 93 | 72 | 77 | 72 | 52 | 46 | 51 | 15 |

These results are also impressive, though less so than the English results. GPT-4 beat the average Chinese student’s score on four of the nine exams. It beat the average student on the English exam and on exams that test factual knowledge like biology, history, and geography, but did poorly relative to the average Chinese student on exams that require some reasoning such as math, physics, and chemistry.
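The “four of the nine exams” count can be checked directly against the table. The snippet below simply tallies the reported scores (copied from the table above); it is a sanity check of the arithmetic, not a reanalysis of the study.

```python
# Scores copied from the Chinese college entrance exam table (Zhong et al, 2023).
exams = ["Chinese", "English", "Geography", "History", "Biology",
         "Chemistry", "Physics", "Math QA", "Math Cloze"]
average_human = [65, 69, 65, 64, 68, 66, 71, 73, 73]
gpt4 = [45, 93, 72, 77, 72, 52, 46, 51, 15]

# Keep the exams where GPT-4 scored strictly above the average human score.
beaten = [exam for exam, human, model in zip(exams, average_human, gpt4) if model > human]
print(len(beaten), beaten)  # 4 ['English', 'Geography', 'History', 'Biology']
```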

Similar results were found for college entrance exams in Vietnam (Dao et al, 2023). GPT-4 also performed poorly on math, physics, and chemistry college entrance exams in India (Arora et al, 2023).

6.1.3 Legal expertise

According to the initial GPT-4 technical report (OpenAI, 2023), GPT-4 scored in the 90th percentile on the Uniform Bar Exam. However, a subsequent analysis (Martinez, 2024) suggests that its true percentile was the 69th or lower, and roughly the 48th on the essay questions.

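Scores for three LLMs on the legal portions of AGI-Eval are shown below (Zhong et al, 2023). The LSAT columns are the analytical reasoning (AR), logical reasoning (LR), and reading comprehension (RC) sections of the US law school admission test; the QA columns are knowledge-driven (KD) and case-analysis (CA) questions from the JEC-QA dataset, which is drawn from China’s national judicial examination: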

| | LSAT-AR | LSAT-LR | LSAT-RC | QA-KD | QA-CA |
|---|---|---|---|---|---|
| Average Human Score | 56 | 56 | 56 | 71 | 58 |
| Top Human Score | 91 | 91 | 91 | 78 | 85 |
| ChatGPT | 23 | 53 | 62 | 21 | 20 |
| GPT-4 | 34 | 81 | 85 | 32 | 30 |
| LLaMA 2 | 36 | 70 | 77 | | |

The LSAT tests above are law school entrance exams. Another study (Choi et al, 2023) tested ChatGPT on actual law school exams: four exams at the University of Minnesota, with 95 multiple choice and 12 essay questions in total. The researchers blindly graded ChatGPT’s answers along with those of human students. ChatGPT achieved an average grade of C+, a passing grade but at or near the bottom of the class; that grade would have put a human student on academic probation. It performed at the 17th percentile on essays and at the 7th percentile on multiple choice questions. The overall evaluation was:

“In writing essays, ChatGPT displayed a strong grasp of basic legal rules and had consistently solid organization and composition. However, it struggled to identify relevant issues and often only superficially applied rules to facts as compared to real law students.”

Another study, performed by LegalOn Technologies, demonstrated that both GPT-4 and Claude 2 can pass the legal ethics exam in addition to the bar exam.

6.1.4 Medical knowledge

GPT-4 scored 75% on the Medical Knowledge Self-Assessment Program test.

ChatGPT performed at the level of a 3rd year medical student on standardized medical student tests (Gilson et al, 2022).

GPT-4 also received a passing grade on a set of test questions that simulated the US Medical License Examinations and performed better than first- and second-year medical students.

6.1.5 Professional knowledge

GPT-4 scores on the three levels of the sommelier certification tests are shown below (OpenAI, 2023):

| Test | Score (%) |
|---|---|
| Introductory Sommelier | 92 |
| Certified Sommelier | 86 |
| Advanced Sommelier | 77 |

Scores for three LLMs on the LogiQA English and Chinese civil service exams are shown below:

| | English | Chinese |
|---|---|---|
| Average Human Score | 86 | 88 |
| Top Human Score | 95 | 96 |
| ChatGPT | 40 | 39 |
| GPT-4 | 58 | 58 |
| LLaMA 2 | 49 | |

LLM scores on the MMLU (Hendrycks et al, 2021), a test containing multiple choice questions in 57 academic and professional subjects, are shown below*:

| Claude 3 | Gemini | GPT-4 | LLaMA 2 |
|---|---|---|---|
| 87% | 84% | 86% | 68% |

* 5-shot scores
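“5-shot” means each test question was preceded in the prompt by five solved examples, and the model’s continuation after the final “Answer:” is graded. A minimal sketch of assembling such a prompt is below. The header wording follows the style of the original MMLU evaluation code, but the exact format, the function names, and the toy examples are assumptions for illustration.

```python
from typing import Optional

LETTERS = "ABCD"


def format_question(question: str, choices: list[str], answer: Optional[str] = None) -> str:
    """Render one multiple-choice item; the answer is left blank for the test question."""
    lines = [question]
    lines += [f"{letter}. {choice}" for letter, choice in zip(LETTERS, choices)]
    lines.append("Answer:" + (f" {answer}" if answer is not None else ""))
    return "\n".join(lines)


def build_k_shot_prompt(subject: str, examples: list[tuple[str, list[str], str]],
                        test_question: str, test_choices: list[str]) -> str:
    """Concatenate k solved examples followed by the unsolved test question."""
    header = f"The following are multiple choice questions (with answers) about {subject}.\n\n"
    solved = [format_question(q, c, a) for q, c, a in examples]
    unsolved = format_question(test_question, test_choices)
    return header + "\n\n".join(solved + [unsolved])


# Five hypothetical solved examples; real evaluations draw them from the benchmark's dev split.
examples = [(f"Toy question {i}?", ["w", "x", "y", "z"], LETTERS[i % 4]) for i in range(5)]
prompt = build_k_shot_prompt("biology", examples, "Which of these animals is a mammal?",
                             ["Robin", "German shepherd", "Salmon", "Frog"])
print(prompt)
```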

6.1.6 Conceptual knowledge

LLMs demonstrate conceptual knowledge, including taxonomic and ontological knowledge of the kind often structured as a knowledge graph. A knowledge graph contains entities, the relationships between entities, and the properties of the entities.

For example, Ettinger (2020) gave the BERT LLM a completion task with examples like this one:

A robin is a ___

BERT correctly responded with “bird” 100% of the time.

LLMs also demonstrate this type of knowledge by being able to answer questions like these:

Q: Are german shepards mammals?

A: Yes, German Shepherds are mammals. In fact, all dogs, including German Shepherds, are mammals. Mammals are a class of animals that are characterized by having hair or fur, mammary glands that produce milk for their young, and three middle ear bones. Dogs, like all mammals, give birth to live young which they nurse with milk produced by their mammary glands.

Q: Tell me about New Haven CT

A: New Haven is a city located in the southern part of the state of Connecticut in the United States. It is the second-largest city in Connecticut after Bridgeport, with a population of approximately 130,000 people.

New Haven is home to many famous landmarks and institutions, including Yale University, one of the world’s most prestigious universities. The city is also known for its historic architecture, including the New Haven Green, a large public park in the center of the city that was first laid out in 1638. The green is surrounded by many historic buildings, including the Old Campus of Yale University, the New Haven County Courthouse, and the Center Church on the Green, which was built in the 1810s.

Another notable landmark in New Haven is the Peabody Museum of Natural History, which is part of Yale University and houses many exhibits related to natural history, including dinosaur fossils, Native American artifacts, and minerals. The city is also home to the Shubert Theater, a historic venue that has hosted many famous Broadway shows and musical acts over the years.

In terms of cuisine, New Haven is known for its pizza, which is considered by many to be some of the best in the world. The city has several famous pizzerias, including Frank Pepe Pizzeria Napoletana and Sally’s Apizza, which have been serving up delicious pies for generations.

Overall, New Haven is a vibrant and historic city with much to offer visitors and residents alike, from its world-class university to its famous pizza and historic landmarks.

Researchers have also shown that knowledge graphs can be populated by extracting this knowledge from LLMs (e.g. Hwang et al, 2021).
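A knowledge graph of this kind can be sketched as a set of labeled (entity, relation, entity) edges plus a per-entity property table. The class, relation names, and triples below are hypothetical and hand-entered, standing in for facts one might extract from an LLM’s answers. A taxonomic question such as “Are German shepherds mammals?” then becomes a walk along is_a edges.

```python
from collections import defaultdict, deque


class KnowledgeGraph:
    """A toy knowledge graph: entities linked by labeled relations, plus per-entity properties."""

    def __init__(self) -> None:
        self.relations = defaultdict(set)    # (entity, relation) -> set of target entities
        self.properties = defaultdict(dict)  # entity -> {property name: value}

    def add_relation(self, subject: str, relation: str, obj: str) -> None:
        self.relations[(subject, relation)].add(obj)

    def set_property(self, entity: str, name: str, value: str) -> None:
        self.properties[entity][name] = value

    def is_a(self, entity: str, category: str) -> bool:
        """Follow is_a edges breadth-first, so category membership is inherited."""
        queue, seen = deque([entity]), {entity}
        while queue:
            current = queue.popleft()
            if current == category:
                return True
            for parent in self.relations.get((current, "is_a"), set()):
                if parent not in seen:
                    seen.add(parent)
                    queue.append(parent)
        return False


kg = KnowledgeGraph()
for subject, obj in [("robin", "bird"), ("bird", "animal"), ("German shepherd", "dog"),
                     ("dog", "mammal"), ("mammal", "animal")]:
    kg.add_relation(subject, "is_a", obj)
kg.set_property("German shepherd", "ear shape", "erect, pointed, triangular")

print(kg.is_a("German shepherd", "mammal"))  # True
print(kg.is_a("robin", "mammal"))            # False
print(kg.properties["German shepherd"]["ear shape"])
```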

Moreover, this conceptual knowledge seems to extend to entity properties that most people think of as visual information:

Q: What shape are a german shepherd’s ears?

A: German Shepherds typically have erect, pointed ears that stand up straight on top of their head. The ears are triangular in shape and are set high on the head. When a German Shepherd is a puppy, its ears may be floppy or only partially erect, but as the dog grows and develops, the ears should stand up straight. However, some German Shepherds may have naturally floppy ears due to genetics or injury. Overall, German Shepherd ears are an important characteristic of the breed’s appearance and are often used to help distinguish them from other breeds.

6.1.7 Events

LLMs demonstrate a strong knowledge of events that occurred prior to their training cutoff date. For example,

Q: Tell me about the crash of the Hindenburg.

A: The Hindenburg was a German airship, a type of lighter-than-air aircraft that used hydrogen gas to float in the air. On May 6, 1937, the Hindenburg was attempting to land at the Naval Air Station in Lakehurst, New Jersey, after a transatlantic flight from Frankfurt, Germany, when it suddenly caught fire and crashed. The disaster resulted in the deaths of 36 people, including passengers and crew members.

The cause of the Hindenburg disaster is still debated, but it’s widely believed that the fire was started by a spark that ignited the hydrogen gas inside the airship’s gas cells. The spark may have been caused by static electricity or a spark from the engine exhaust. The fire spread rapidly, engulfing the ship in flames and causing it to crash to the ground.

The disaster was a major event at the time and was widely covered by the media. It had a significant impact on the public perception of air travel and led to a decline in the use of airships for commercial purposes. The Hindenburg disaster also led to significant changes in airship design and safety procedures, including the use of non-flammable helium gas instead of hydrogen and the development of new fire-resistant materials for airship construction.

6.1.8 Scripts

People know the typical sequence of events involved in eating in a restaurant, buying goods in a store, or lending money to a friend (Schank and Abelson, 1977). Schank and his students theorized that people have scripts that they use to make sense of the world. For example, there might be a restaurant script that encodes the basic stages of eating in a restaurant:

ENTERING -> ORDERING -> EATING -> PAYING/LEAVING

So, when we hear that

John went to a restaurant. He ordered chicken. He paid and left.

we can infer that John was seated and that he ate the chicken. It should be noted that the description above is a simplification for the purpose of illustration.
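The default inference can be sketched as filling in the steps a story skips between the first and last script steps it mentions. This is a hypothetical toy built on the four-step script above, not Schank and Abelson’s representation, which was far richer (roles, props, entry conditions, and alternative tracks). In this simplified script, being seated is folded into ENTERING, so the only skipped step it recovers is EATING; such defaults can of course be overridden by other information, such as an order to go.

```python
RESTAURANT_SCRIPT = ["ENTERING", "ORDERING", "EATING", "PAYING/LEAVING"]


def infer_unstated_steps(script: list[str], observed: list[str]) -> list[str]:
    """Return script steps between the first and last observed steps that the story skipped."""
    positions = [script.index(step) for step in observed]
    if positions != sorted(positions):
        raise ValueError("observed steps are out of script order")
    first, last = positions[0], positions[-1]
    return [step for step in script[first:last + 1] if step not in observed]


story = ["ENTERING", "ORDERING", "PAYING/LEAVING"]  # went in, ordered chicken, paid and left
print(infer_unstated_steps(RESTAURANT_SCRIPT, story))  # ['EATING']
```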

So, I tried this example on ChatGPT:

Q: A woman went to a restaurant. She ordered steak. She paid the check and left. Did she eat the steak?

A: It’s impossible to determine whether or not the woman ate the steak based on the given information. It’s possible that she ate the steak and left, or she could have taken the steak to go. Alternatively, she might not have eaten the steak at all.

Source: ChatGPT — May, 2023

The ChatGPT answer makes it sound like ChatGPT has an even more detailed restaurant script than the simple one described by Schank.

6.1.9 Models of the world

A group of Harvard researchers (Li et al, 2023) performed an experiment to determine whether LLMs just memorize statistics or learn a model of the world. They trained a model, Othello GPT, to predict legal moves in the Othello board game, and then probed the trained model to determine whether it had an internal representation of its “world”, i.e. the Othello board. They found that the model could not only predict legal next moves but also had an internal representation of where all the pieces were on the board, i.e. a “world model”.
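Probing means training a small classifier to read a property (here, whether a board square is occupied) out of a network’s internal activations; if the probe succeeds on held-out examples, the property is decodable from those activations. The sketch below is a toy under loudly stated assumptions: the “activations” are synthetic (a hypothetical random linear encoding of a four-square, two-state board plus noise) rather than taken from Othello GPT, and the probe is plain logistic regression. Li et al. (2023) probed a real trained transformer with their own probe design, so this only illustrates the idea.

```python
import math
import random

random.seed(0)
N_SQUARES, HIDDEN_DIM, N_BOARDS = 4, 16, 300

# Hypothetical "model": each hidden state is a fixed random linear encoding of the board plus noise.
ENCODER = [[random.gauss(0.0, 1.0) for _ in range(N_SQUARES)] for _ in range(HIDDEN_DIM)]


def random_board() -> list[float]:
    """Each toy square is +1 (occupied) or -1 (empty)."""
    return [random.choice((-1.0, 1.0)) for _ in range(N_SQUARES)]


def hidden_state(board: list[float]) -> list[float]:
    return [sum(w * s for w, s in zip(row, board)) + random.gauss(0.0, 0.1) for row in ENCODER]


def sigmoid(z: float) -> float:
    # Numerically stable logistic function.
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    e = math.exp(z)
    return e / (1.0 + e)


def dot(a: list[float], b: list[float]) -> float:
    return sum(x * y for x, y in zip(a, b))


def train_probe(xs, ys, lr=0.05, epochs=200):
    """Logistic-regression probe trained with full-batch gradient descent."""
    w, b = [0.0] * len(xs[0]), 0.0
    for _ in range(epochs):
        grad_w, grad_b = [0.0] * len(w), 0.0
        for x, y in zip(xs, ys):
            err = sigmoid(dot(w, x) + b) - y
            for i, xi in enumerate(x):
                grad_w[i] += err * xi
            grad_b += err
        w = [wi - lr * g / len(xs) for wi, g in zip(w, grad_w)]
        b -= lr * grad_b / len(xs)
    return w, b


# Probe question: is square 0 occupied? Labels come from the true board, inputs from hidden states.
boards = [random_board() for _ in range(N_BOARDS)]
xs = [hidden_state(board) for board in boards]
ys = [1.0 if board[0] > 0 else 0.0 for board in boards]
w, b = train_probe(xs[:200], ys[:200])  # train on 200 boards, evaluate on the other 100
correct = sum((sigmoid(dot(w, x) + b) > 0.5) == (y == 1.0) for x, y in zip(xs[200:], ys[200:]))
accuracy = correct / len(xs[200:])
print(f"held-out probe accuracy: {accuracy:.2f}")
```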

LLMs also seem to have internal models of many specific aspects of the world including:

- Geography

Q: Is nevada east or west of utah

A: Nevada is located to the west of Utah. Utah is a state in the western United States, and Nevada is located directly to the west of Utah, with a border between the two states.

- Space

Q: Mary is to the left of John and Paul is to the right of John. Is Mary to the left or right of Paul?

A: Assuming that “left” and “right” are being used in a linear sense, with John being in the center, Mary to his left, and Paul to his right, then Mary is to the left of Paul. This can be visualized as follows:

Mary John Paul

[ ← | • | → ]

In this arrangement, Mary is to the left of John, Paul is to the right of John, and Mary is also to the left of Paul. Therefore, Mary is to the left of Paul.

Though LLMs appear to exhibit spatial reasoning, vision-language models have difficulty producing captions for images that contain spatial relationships (Kamath et al, 2023).

Q: My 68th birthday was Sept 16 2022. How old will I be on Sept 15 2023?

A: If your 68th birthday was on September 16, 2022, then you will turn 69 years old on September 16, 2023. Therefore, on September 15, 2023, which is one day before your next birthday, you will be 68 years old.

Q: If I press the power button on the TV remote control, what will happen?

A: If you press the power button on the TV remote control, it will turn off the TV if it’s currently on, or turn it on if it’s currently off. The power button is used to toggle the power state of the TV. When the TV is on, it displays images and plays sound, and when it’s off, it’s in standby mode and doesn’t display any images or play any sound. Pressing the power button on the remote control sends a signal to the TV to turn it on or off, depending on its current state. The exact behavior of the power button may vary depending on the specific make and model of the TV and the remote control.

Q: If I roll a dice 10 times, how many times will it land with 5 dots face up?

A: Rolling a dice is a random event, and the probability of getting a specific number on a single roll of a fair six-sided dice is 1/6. Therefore, the expected number of times a dice with 5 dots face up will appear on any given roll is approximately 1/6.

To determine the expected number of times a dice with 5 dots face up will appear if you roll a dice 10 times, you can use the formula for the binomial distribution. The probability of getting a dice with 5 dots face up on any given roll is p=1/6, the number of trials is n=10, and the number of successes (getting a dice with 5 dots face up) is x=?

Using the formula, the expected number of times a dice with 5 dots face up will appear if you roll a dice 10 times is:

E(x) = np = 10(1/6) = 1.67

Since you cannot get a fractional number of successes, the expected number of times a dice with 5 dots face up will appear is rounded to the nearest whole number, which is 2. Therefore, you can expect to get a dice with 5 dots face up approximately 2 times if you roll a dice 10 times. However, it’s important to remember that this is just an expected value based on probability, and the actual number of times a dice with 5 dots face up appears can vary due to chance and other factors.

Q: is 10 a bigger number than 5

A: Yes, 10 is a bigger number than 5. In fact, 10 is twice as big as 5. When comparing two numbers, one way to determine which is bigger is to look at their values and compare them. In this case, 10 has a higher value than 5, so it is the bigger number.

Q: If I drop a glass, what will happen?

A: If you drop a glass, it will likely fall to the ground and shatter into pieces. This is because glass is a brittle material that can easily break when subjected to force or impact. When the glass hits the ground, the force of the impact causes it to fracture and break into smaller pieces. The specifics of what happens when you drop a glass can depend on various factors, such as the height from which it was dropped, the surface onto which it falls, and the type of glass and its thickness. However, in general, dropping a glass will cause it to break and potentially create a mess that needs to be cleaned up.

Q: Is it dangerous to smoke a cigarette while pumping gas?

A: Yes, smoking a cigarette while pumping gas is very dangerous and should be avoided. Gasoline is highly flammable, and smoking near gasoline vapors can ignite a fire or explosion. The sparks from a cigarette or lighter could easily ignite gasoline vapors, leading to a fire or explosion that could cause serious injury or death. To prevent accidents, it’s essential to always observe posted “No Smoking” signs and to extinguish all smoking materials before pumping gas. Additionally, it’s important to avoid using your cellphone while pumping gas, as it can also generate sparks that could ignite gasoline vapors.

Q: A lion encountered an antelope. What happened next?

A: It’s likely that the lion attempted to catch and kill the antelope. Lions are carnivorous animals that hunt and feed on other animals, and antelopes are a common prey species for lions. Depending on the specific circumstances of the encounter, the lion may have chased the antelope, ambushed it, or used other hunting strategies to catch it. If the lion was successful in catching the antelope, it would have killed it and then eaten it as a source of food. However, without more information about the specific situation, it’s impossible to say exactly what happened next.

Q: If I encounter an alligator, what should I do?

A: Encountering an alligator can be a dangerous situation, and it’s important to take appropriate precautions to stay safe. Here are some general guidelines to follow if you encounter an alligator:

Keep a safe distance: Stay at least 6 feet away from the alligator, and if possible, create a barrier between yourself and the alligator, such as a fence or a car.

Do not approach or feed the alligator: Alligators are wild animals, and feeding or approaching them can make them more aggressive and dangerous.

Back away slowly: If you find yourself too close to the alligator, slowly back away while keeping your eye on the alligator.

Avoid sudden movements: Alligators are sensitive to sudden movements, and sudden movements can provoke an attack. Move slowly and calmly.

Seek help: If you feel threatened or if the alligator is blocking your path, seek help from a trained professional, such as a wildlife expert or animal control.

Do not swim in areas known to have alligators: Alligators are often found in freshwater bodies such as ponds, lakes, and rivers, and it’s important to avoid swimming in areas known to have alligators.

Remember that alligators are wild animals and should be treated with caution and respect. If you are unsure about how to handle an alligator encounter, seek guidance from a trained professional.

Q: The police officer held up his hand and stopped the truck. Did the officer physically stop the truck?

A: Based on the given information, it’s unlikely that the police officer physically stopped the truck. When an officer holds up their hand to stop a vehicle, it usually means that they are signaling the driver to come to a stop by using a hand gesture or a stop sign. The driver would need to voluntarily stop the truck in response to the officer’s signal. However, without further context, it’s impossible to say for certain what happened in this particular situation.

Q: Superman held up his hand and stopped the truck. Did Superman physically stop the truck?

A: If we are talking about the Superman character from the comics or movies, then it’s possible that he physically stopped the truck using his superhuman strength or other abilities. However, if we are talking about a real-life situation, then it’s not possible for Superman to physically stop a truck since he is a fictional character.

- Human moods

Q: John starting ranting about all of the president’s mistakes. How would you describe his mood?

A: Based solely on the information provided, John’s mood can be described as angry or frustrated. Ranting is often associated with expressing strong negative emotions, such as anger, frustration, or disappointment. By ranting about the president’s mistakes, John is likely expressing his strong disapproval of the president’s actions or decisions. However, it’s important to note that this is a subjective interpretation of John’s mood based on limited information, and there may be other factors or emotions at play that are not immediately apparent.

- Attitudes

Q: John is a republican. Does he support abortion?

A: It’s difficult to determine John’s stance on abortion based solely on the information that he is a Republican. While the Republican Party as a whole tends to be more conservative on social issues, including abortion, individual Republicans may have a range of opinions on the topic. Some Republicans may support abortion rights, while others may oppose them.

Additionally, people’s political views are complex and can be influenced by a variety of factors, including personal beliefs, religious values, and life experiences. Therefore, it’s important to avoid making assumptions about someone’s stance on a particular issue based solely on their political affiliation. If you want to know John’s opinion on abortion, it’s best to ask him directly or engage in a conversation about the topic to gain a better understanding of his perspective.

- Personality

Q: John keeps accusing Mary of infidelity. How would you describe his personality?

A: Based solely on the information provided, it’s difficult to make a definitive assessment of John’s personality. However, his behavior of constantly accusing Mary of infidelity suggests that he may have trust issues or be prone to jealousy. This could be a manifestation of a variety of personality traits, such as insecurity, possessiveness, or paranoia.

It’s also important to note that there could be other factors contributing to John’s behavior, such as past experiences of betrayal or trauma. Additionally, it’s possible that John’s accusations are unfounded and that he is projecting his own insecurities or guilt onto Mary.

Overall, it’s important to approach this situation with empathy and caution, and to avoid making definitive judgments about John’s personality without further information or context. If John’s behavior is causing problems in his relationship with Mary, it may be helpful for him to seek professional help to address any underlying issues and improve his communication and coping skills.

- Aging

Q: who acts more like a child? a 10-year old or a 90-year old?

A: In general, a 10-year-old is more likely to act like a child than a 90-year-old. This is because at the age of 10, a child is still developing physically, emotionally, and cognitively, and they are likely to engage in behaviors that are characteristic of childhood, such as playing, exploring, and seeking attention. On the other hand, a 90-year-old has had a lifetime of experiences and has likely developed a more mature and stable personality with a greater sense of independence and self-awareness. While there can be individual differences in behavior and personality among people of any age, as a general rule, a 10-year-old is more likely to act like a child than a 90-year-old.

- Emotions

Q: John broke up with Mary. That made Mary mad. Explain why Mary was made at John.

A: Mary was likely mad at John because he broke up with her. A breakup is a significant event that can cause a range of emotions, including sadness, anger, and frustration. Mary may have been invested in the relationship and had expectations for its continuation, and John’s decision to end the relationship may have come as a surprise or disappointment. Additionally, the way in which the breakup occurred or the reasons behind it may have contributed to Mary’s anger. Without more information about the specific circumstances of the breakup, it’s difficult to know exactly why Mary was mad at John, but it’s reasonable to assume that the breakup itself was the primary cause of her anger.
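The dice answer earlier in this list rests on the binomial distribution. A short check of its arithmetic, assuming a fair six-sided die and independent rolls, is below. It confirms E(x) = np ≈ 1.67, and it also shows that an expected value need not be a whole number and that the single most likely count is 1, not the 2 obtained by rounding.

```python
from math import comb

n, p = 10, 1 / 6  # ten rolls of a fair die; "success" = five dots face up

expected = n * p  # mean of a binomial distribution
pmf = {k: comb(n, k) * p**k * (1 - p) ** (n - k) for k in range(n + 1)}
most_likely = max(pmf, key=pmf.get)  # mode: the single most probable count

print(round(expected, 2))  # 1.67
print(most_likely)         # 1
print(round(pmf[1], 3), round(pmf[2], 3))  # 0.323 0.291
```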

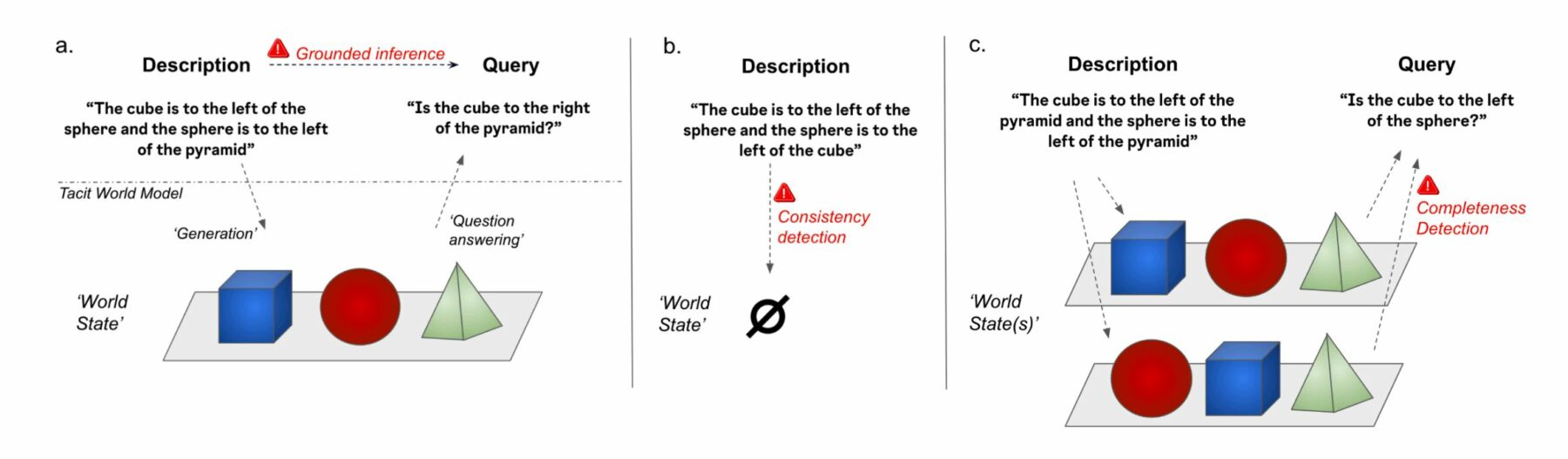

A more rigorous evaluation was performed by a group of Meta researchers (Benchekroon et al, 2023). They devised three tasks intended to assess whether LLMs can form a spatial model of the world in response to a text description. The three tasks are illustrated below:

The grounded inference task provides a textual description of the spatial relationships between objects and then queries the LLM about one of those relationships. The consistency detection task asks the LLM to determine whether the textual description is internally consistent. The completeness detection task asks the LLM to determine whether the textual description is compatible with more than one world model. The best-performing LLM without fine-tuning was GPT-4, which still had a high error rate: it scored 76% on grounded inference, 65% on consistency detection, and 58% on completeness detection, where chance performance was 50%. The errors persisted even with chain-of-thought prompting and in-context learning examples.
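The three task types can be made concrete with a deliberately tiny world: objects on a line and facts of the form “a is left of b”, as in the Mary, John, and Paul example earlier. The sketch below enumerates every arrangement consistent with the facts: no arrangement means the description is inconsistent, exactly one means it is complete, and a query is answerable only if every consistent arrangement agrees. This is a hypothetical brute-force illustration of the task definitions (function names included), not the benchmark’s generator or scorer, and it is exponential in the number of objects.

```python
from itertools import permutations


def consistent_layouts(objects, left_of):
    """Every left-to-right ordering that satisfies all (a, b) facts meaning 'a is left of b'."""
    layouts = []
    for order in permutations(objects):
        position = {obj: i for i, obj in enumerate(order)}
        if all(position[a] < position[b] for a, b in left_of):
            layouts.append(order)
    return layouts


def analyze(objects, left_of, query):
    """Mimic the three task types for a one-dimensional toy world."""
    layouts = consistent_layouts(objects, left_of)
    consistent = len(layouts) > 0  # consistency detection: at least one world fits
    complete = len(layouts) == 1   # completeness detection: exactly one world fits
    a, b = query                   # grounded inference: is a left or right of b?
    if consistent and all(layout.index(a) < layout.index(b) for layout in layouts):
        answer = "left"
    elif consistent and all(layout.index(a) > layout.index(b) for layout in layouts):
        answer = "right"
    else:
        answer = "undetermined"
    return consistent, complete, answer


people = ["Mary", "John", "Paul"]
print(analyze(people, [("Mary", "John"), ("John", "Paul")], ("Mary", "Paul")))
# (True, True, 'left')
print(analyze(people, [("Mary", "John"), ("John", "Paul"), ("Paul", "Mary")], ("Mary", "Paul")))
# (False, False, 'undetermined')
print(analyze(people, [("Mary", "John")], ("Mary", "Paul")))
# (True, False, 'undetermined')
```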

6.1.10 Conclusions about LLM knowledge

The research and anecdotes discussed in this section clearly show that LLMs acquire many different types of knowledge, including general factual knowledge, the knowledge needed for college and law school entrance exams, and the knowledge tested by professional exams in medicine and other fields.

As Will Douglas Heaven said in an MIT Technology Review article:

“What it seems to be good at is synthesizing text it has found elsewhere on the internet, making it a kind of vast, eclectic scrapbook created from millions and millions of snippets of text that it then glues together in weird and wonderful ways on demand.”

But it’s not the words themselves that are glued together; it’s the vectors in the latent space created by training the models. Columbia University researcher Raphaël Millière eloquently described the process of generating text, images, and other media as

“…sampling a region of the model’s latent space based on context”

So, rather than sampling a scrapbook of words, these models sample from the (most likely language-independent) latent vectors created by the model training and then convert the sampled content to words.

While LLMs demonstrate significant world knowledge, the press has sensationalized them. After ChatGPT was able to answer some of the Wharton final exam questions, media outlets proclaimed that ChatGPT has the knowledge and abilities of an MBA recipient. For example, a Bloomberg News headline said “ChatGPT Gets an MBA”.

Mitchell (2023) debunked this notion and similar press claims about passing graduate-level exams. For example, she argued that, while research has shown a correlation between human performance on these tests and performance in the real world, there is no evidence that this correlation holds for AI systems. She also ran some informal tests showing that, although the results were impressive, LLMs often answered a question correctly when it was phrased one way and incorrectly when it was phrased slightly differently, in a way that wouldn’t affect human performance. This sensitivity to phrasing would almost certainly hurt AI system performance in the real world.

Narayanan and Kapoor (2023) made similar arguments and additionally pointed to issues like data contamination, which was discussed earlier in this chapter.

Though ChatGPT is not ready to run a business, there can be little doubt that LLMs acquire a great deal of world knowledge during training.

6.2 Reasoning

Most of the research on reasoning in LLMs focuses on either commonsense reasoning or abstract thinking. We’ll explore LLM commonsense and abstract reasoning capabilities in this section.

Perhaps the most important question that researchers study is whether LLMs learn reasoning patterns in a general fashion that enables them to apply their reasoning skills to situations not encountered during training. Alternatively, perhaps they just memorize task-specific reasoning patterns and only use them for the specific tasks for which they were trained.

6.2.1 Commonsense reasoning

Children begin learning to reason at a very young age. At around six months of age, infants learn that when a ball is hidden behind a barrier, it still exists; they can be seen looking behind the barrier for the ball. Piaget termed this object permanence. More importantly, object permanence isn’t learned independently for every object. Instead, infants learn a generalized notion of object permanence and apply it to objects they’ve never seen before.

Object permanence is an early form of commonsense reasoning. Children also learn commonsense concepts about physics such as gravity. They learn that if they drop a ball, it will fall to the ground. In adults, commonsense reasoning allows drivers to handle road configurations and conditions they’ve never seen before.

In contrast, self-driving cars struggle with road configurations and conditions they didn’t encounter during training because they can’t apply commonsense reasoning. For example, a self-driving taxi in San Francisco encountered a woman who had been hit by another car. The taxi had “learned” to pull over to the side of the road when it encountered an accident. Unfortunately, in pulling over, it dragged the woman, who was trapped under the taxi, to the side of the road. Any human driver would know that if a person struck by another car ended up under the wheels of their own car, the last thing they should do is keep driving and risk injuring the person further. But self-driving taxis don’t have commonsense reasoning abilities, and the woman was dragged under the taxi for 20 feet. The incident led Cruise, the company operating the taxi, to remove all of its cars from the road.

Researchers have proposed many benchmark datasets for measuring commonsense reasoning capabilities. These include:

| Benchmark | Example |
| --- | --- |
| WSC (Levesque et al, 2012) | The trophy doesn’t fit in the brown suitcase because it’s too big. What is too big? Correct Answer: the trophy. Incorrect Answer: the suitcase. |
| Winogrande (Sakaguchi et al, 2019) | A larger and more difficult version of WSC |
| PhysicalQA (Bisk et al, 2019) | Question: To separate egg whites from the yolk using a water bottle, you should |
| ARC (Challenge) (Clark et al, 2018) | Question: George wants to warm his hands quickly by rubbing them. Which skin surface will produce the most heat? |
| HellaSwag (Zellers et al, 2019) | Scenario: A woman is outside with a bucket and a dog. The dog is running around trying to avoid a bath. |
| SIQA (Sap et al, 2019) | Q: Jordan wanted to tell Tracy a secret, so Jordan leaned towards Tracy. Why did Jordan do this? |
| CommonSense QA (Talmor et al, 2019) | Question: Where would I not want a fox? Correct Answer: hen house. Incorrect Answer: england. Incorrect Answer: mountains. |
| TriviaQA | Excerpt: The Dodecanese Campaign of World War II was an attempt by Allied forces to capture the Italian-held Dodecanese islands in the Aegean Sea following the surrender of Italy in September 1943, and use them as bases against the German-controlled Balkans. The failed campaign, and in particular the Battle of Leros, inspired the 1957 novel The Guns of Navarone and the successful 1961 movie of the same name. Question: The Dodecanese Campaign of WWII that was an attempt by the Allied forces to capture islands in the Aegean Sea was the inspiration for which acclaimed 1961 commando film? |
| ReCoRD (Zhang et al, 2018) | Passage: . . . Jamie Lee Sharp, 25, stole keys to £40,000 Porsche Boxster during raid. . . He filmed himself boasting about the car before getting behind the wheel |
| BoolQ | Passage: The Sharks have advanced to the Stanley Cup finals once, losing to the Pittsburgh Penguins in 2016. Question: Have the San Jose Sharks won a Stanley Cup? |
| MultiRC | S3: Hearing noises in the garage, Mary Murdock finds a bleeding man, mangled and impaled on her jeep’s bumper. S5: Panicked, she hits him with a golf club. S10: Later the news reveals the missing man is kindergarten teacher, Timothy Emser. S12: It transpires that Rick, her boyfriend, gets involved in the cover up and goes to retrieve incriminatory evidence off the corpse, but is killed, replaced in Emser’s grave. S13: It becomes clear Emser survived. S15: He stalks Mary many ways Question: Who is stalking Mary? |
| DROP | Passage: In 1517, the seventeen-year-old King sailed to Castile. In May 1518, Charles traveled to Barcelona in Aragon. Question: Where did Charles travel to first, Castile or Aragon? |
| CoQA | Excerpt: Jessica went to sit in her rocking chair. Today was her birthday and she was turning 80. Her granddaughter Annie was coming over in the afternoon and Jessica was very excited to see her. Her daughter Melanie and Melanie’s husband Josh were coming as well. Question: Who had a birthday? Answer: Jessica Question: How old would she be? Answer: 80 |
| COPA (Gordon et al, 2012) | Premise: The man fell unconscious. What was the cause of this? Correct Answer: The assailant struck the man on the head. Incorrect Answer: The assailant took the man’s wallet. |
| RACE | Article: On the evening of June 21, 1992, a tall man with brown hair and blue eyes entered the beautiful hall of the Bell Tower Hotel in Xi’an with his bicycle. The hotel workers received him and telephoned the manager, for they had never seen a bicycle in the hotel hall before though they lived in “the kingdom of bicycles.” Robert Friedlander, an American, arrived in Xi’an on his bicycle trip across Asia which started last December in New Delhi, India. When he was 11, he read the book Marco Polo and made up his mind to visit the Silk Road. Now, after 44 years, he was on the Silk Road in Xi’an and his early dreams were coming true. Robert Friedlander’s next destinations were Lanzhou, Dunhuang, Urumqi, etc. He will complete his trip in Pakistan. Question: The best headline for this newspaper article would be _. |
| RTE (Text Analysis Conference Challenge 2005-2011) | (1) Claims by a French newspaper that seven-time Tour de France winner Lance Armstrong had taken EPO were attacked as unsound and unethical by the director of the Canadian laboratory whose tests saw Olympic drug cheat Ben Johnson hit with a lifetime ban. |
| OpenBookQA | Science Fact: Metal is a thermal conductor. Common Knowledge: Question: Which of these would let the most heat travel through? |

The table below compares the scores on these datasets for some of the best known LLMs and also compares those scores with the state of the art (SOTA):

| Benchmark | Measure | Claude 3 | Gemini | GPT-3/4* | LLaMA* | PaLM* | GLAM | FLAN | Best LLM Score | SOTA Score | Fine-Tuning Advantage |
| --- | --- | --- | --- | --- | --- | --- | --- | --- | --- | --- | --- |
| WSC | accuracy | 80% | 87% | 86% | 89% | 89% | 89% | 0% | |||
| Winogrande | accuracy | 88% | 80% | 83% | 79% | 72% | 88% | 88% | 0% | ||
| PhysicalQA | accuracy | 83% | 83% | 85% | 82% | 82% | 85% | 85% | 0% | ||
| ARC (Challenge) | accuracy | 96% | 57% | 64% | 52% | 96% | 96% | 0% | |||
| HellaSwag | accuracy | 88% | 95% | 85% | 87% | 77% | 59% | 95% | 95% | 0% | |
| SIQA | accuracy | 52% | 90% | 90% | 90% | 0% | |||||
| CommonSense QA | accuracy | 79% | 90% | 90% | 90% | 0% | |||||
| TriviaQA | exact match | 71% | 85% | 86% | 86% | 86% | 0% | ||||
| ReCoRD | accuracy | 90% | 94% | 90% | 79% | 94% | 95% | 1% | |||
| BoolQ | accuracy | 76% | 85% | 91% | 91% | 92% | 1% | ||||
| MultiRC | F1 | 75 | 88 | 88 | 90 | 2 | |||||
| DROP | F1 | 83 | 82 | 81 | 81 | 83 | 1 | ||||
| CoQA | F1 | 85 | 85 | 91 | 6 | ||||||
| COPA | accuracy | 93% | 93% | 93% | 89% | 93% | 100% | 7% | |||
| RACE-h | F1 | 47 | 62 | 62 | 70 | 8 | |||||
| RTE | accuracy | 69% | 79% | 84% | 84% | 96% | 12% | ||||
| OpenBookQA | accuracy | 65% | 60% | 59% | 65% | 94% | 29% |

* All scores are for the best few-shot performance reported, using the measure listed for each benchmark. GPT-3/4 scores are mostly GPT-3; the DROP score is GPT-4. LLaMA scores are mostly LLaMA 2 but include scores from the original LLaMA paper. PaLM scores are mostly PaLM 2-L but include some scores from the original PaLM paper.

LLMs have demonstrated at or near state of the art scores on 12 of the 17 benchmarks. On the other 5 benchmarks, the best LLM was beaten by a fine-tuned system.
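The 12-versus-5 split can be checked directly against the Fine-Tuning Advantage column of the table. The sketch below assumes that “at or near state of the art” means a gap of at most 2 points; that threshold is our reading, not something stated in the table.

```python
# Fine-Tuning Advantage column from the table above (SOTA score minus best LLM score).
ADVANTAGE = {
    "WSC": 0, "Winogrande": 0, "PhysicalQA": 0, "ARC (Challenge)": 0,
    "HellaSwag": 0, "SIQA": 0, "CommonSense QA": 0, "TriviaQA": 0,
    "ReCoRD": 1, "BoolQ": 1, "MultiRC": 2, "DROP": 1,
    "CoQA": 6, "COPA": 7, "RACE-h": 8, "RTE": 12, "OpenBookQA": 29,
}

# Assumption: "near state of the art" means a gap of at most 2 points.
NEAR_MARGIN = 2

def split_by_margin(advantage, margin=NEAR_MARGIN):
    """Separate benchmarks where LLMs are near SOTA from those where fine-tuning wins."""
    near = [name for name, gap in advantage.items() if gap <= margin]
    beaten = [name for name, gap in advantage.items() if gap > margin]
    return near, beaten

near, beaten = split_by_margin(ADVANTAGE)
print(len(near), len(beaten))  # 12 5
print(beaten)  # ['CoQA', 'COPA', 'RACE-h', 'RTE', 'OpenBookQA']
```

With a stricter threshold (only a gap of 0 counts), the split becomes 8 versus 9, so the headline number depends on what “near” is taken to mean.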

What did the fine-tuned systems learn that the LLMs didn’t learn during their more generic training? One clue comes from the relative performance of LLMs and fine-tuned systems on reading comprehension datasets that only require the system to find the span of words in the passage that best answers the question. The Stanford Question Answering Dataset (SQuAD) (Rajpurkar et al, 2018) contains over 100,000 crowdsourced questions based on passages from 536 Wikipedia articles. A sample passage and a pair of questions are shown below:

Passage: In meteorology, precipitation is any product of the condensation of atmospheric water vapor that falls under gravity. The main forms of precipitation include drizzle, rain, sleet, snow, graupel and hail… Precipitation forms as smaller droplets coalesce via collision with other rain drops or ice crystals within a cloud. Short, intense periods of rain in scattered locations are called “showers”.

Question: What causes precipitation to fall? (Answer: gravity)

Question: Where do water droplets collide with ice crystals to form precipitation? (Answer: “within a cloud”)

The best LLM score on SQuAD 2.0 is 82%, achieved by LLaMA 2. However, the state-of-the-art fine-tuned system, Retro-Reader (Zhang et al, 2020), scored 91%, which is better than average human performance (87%).

One possible explanation is that fine-tuned systems learn shortcuts (Geirhos et al, 2021) or anomalies in the dataset. Because the answer is contained in a span of words in the passage, commonsense reasoning is not necessarily required to produce the correct answers. Systems might learn to locate the sentence in the passage most similar to the question and then match and align the lexical units in that sentence with those in the question. This alignment might make use of both syntactic cues and word similarities based on the word embeddings associated with each word in the passage and question. For example, if one looks closely at the SQuAD example above, one can see that the answers can be obtained by surface-level analysis rather than true understanding. The correct sentence can be identified by matching the words “precipitation” and “fall” for the first question, and the words “droplets”, “collide” (“collision”), “ice”, “crystals”, and “precipitation” for the second question.
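A minimal sketch of this kind of surface-level shortcut picks the passage sentence that shares the most content words with the question. The stopword list and the crude plural-stripping rule are our own simplifying assumptions; a fine-tuned system would more likely rely on learned embeddings, which could also match “collide” with “collision”.

```python
import re

# Passage from the SQuAD example above (the text elided after "hail" is omitted).
PASSAGE = (
    "In meteorology, precipitation is any product of the condensation of "
    "atmospheric water vapor that falls under gravity. The main forms of "
    "precipitation include drizzle, rain, sleet, snow, graupel and hail. "
    "Precipitation forms as smaller droplets coalesce via collision with other "
    "rain drops or ice crystals within a cloud. Short, intense periods of rain "
    "in scattered locations are called showers."
)

# Assumed stopword list, just large enough for this example.
STOPWORDS = {"what", "where", "do", "to", "the", "a", "an", "of", "in", "is", "with"}

def content_words(text):
    """Lowercase words, crudely de-pluralized, minus stopwords."""
    words = re.findall(r"[a-z]+", text.lower())
    # Crude stemming: "falls" -> "fall", "crystals" -> "crystal".
    stems = {w[:-1] if w.endswith("s") and len(w) > 3 else w for w in words}
    return stems - STOPWORDS

def best_sentence(passage, question):
    """Return the sentence with the largest word overlap with the question."""
    sentences = re.split(r"(?<=\.)\s+", passage.strip())
    q = content_words(question)
    return max(sentences, key=lambda s: len(content_words(s) & q))

print(best_sentence(PASSAGE, "What causes precipitation to fall?"))
print(best_sentence(PASSAGE, "Where do water droplets collide with ice crystals to form precipitation?"))
```

The first question selects the sentence ending in “under gravity” and the second selects the sentence ending in “within a cloud”, even though nothing in the code understands what precipitation is.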

The OpenBookQA sample question in the table above also offers obvious shortcuts that could be acquired during fine-tuning. The word “steel” appears in both the correct answer and one of the supporting facts. Even if that fact is not available, the word embedding for “metal” in the science fact will likely be closer to the embedding for “steel” in the correct answer than to any of the words in the incorrect answers.

Similarly, the COPA dataset appears to be susceptible to surface-level strategies that don’t require commonsense reasoning. In the COPA example above, the words “struck” and “head” are likely more closely related to “fell” and “unconscious” than “man’s” and “wallet” are. And the RTE dataset has been heavily criticized over the years (e.g. Roemmele et al, 2011; Levesque et al, 2012) for being susceptible to AI systems that learn simple word-oriented strategies rather than any type of true reasoning. In the RTE example in the table above, a computer can match the words in (1) and (2) using a simple rule that says, “if all the words in (2) are also in (1), then answer ‘yes.’”
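The word-containment rule for RTE takes only a few lines. In this sketch the text is the RTE example from the table, while both hypotheses are hypothetical ones written for illustration; they are not taken from the RTE data.

```python
import re

def words(text):
    """Set of lowercase word tokens (hyphenated words such as 'seven-time' kept whole)."""
    return set(re.findall(r"[a-z0-9'-]+", text.lower()))

def naive_entailment(text, hypothesis):
    """The shortcut rule: answer 'yes' if every hypothesis word also occurs in the text."""
    return "yes" if words(hypothesis) <= words(text) else "no"

TEXT = (
    "Claims by a French newspaper that seven-time Tour de France winner "
    "Lance Armstrong had taken EPO were attacked as unsound and unethical by "
    "the director of the Canadian laboratory whose tests saw Olympic drug "
    "cheat Ben Johnson hit with a lifetime ban."
)

# Hypothetical hypotheses, invented for this illustration.
print(naive_entailment(TEXT, "Lance Armstrong had taken EPO"))       # yes
print(naive_entailment(TEXT, "Ben Johnson won the Tour de France"))  # no
```

The first call shows why such a rule is a shortcut rather than reasoning: every word matches, yet the text only reports a claim that was attacked as unsound, so the hypothesis is not actually entailed.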

The designers of these commonsense datasets were all attempting to create test examples that would require the system to engage in commonsense reasoning, and to eliminate surface-level cues such as word co-occurrences and word embedding relatedness. However, even without fine-tuning, it’s still possible that the LLMs learned some generic shortcuts based on surface-level cues that can be used across tasks. For example, it is likely that the system frequently encountered the phrase “fox in a hen house” while learning the language model, and the simple association between “fox” and “hen house” could be used to find the correct answer to the CommonSense QA sample question in the table above.

A group of researchers (Li et al, 2022) tested four of these datasets to determine whether the answer alternatives provided clues that enabled a system to guess the right answer without using commonsense reasoning. They measured performance by giving a system just the answers, without the questions or excerpts. On the HellaSwag dataset, answer-only accuracy was 32 percentage points higher than random guessing. This indicates that, in many HellaSwag questions, the wording of the alternative answers is biased in a way that lets a system guess the right answer without using commonsense reasoning. On the PIQA (PhysicalQA) dataset, the difference was 23 points. On SIQA it was 3 points, and on Winogrande it was under 1 point.

Because the LLMs received no training on the datasets themselves, it is unlikely that they would have learned similar shortcuts unless their training data were contaminated with these or similar datasets. More likely, the LLMs acquired some level of general reasoning skill that enabled them to perform well on these datasets. For the 5 of the 17 datasets on which fine-tuned systems had a substantial advantage, it is likely that those systems acquired shortcuts during fine-tuning that augmented their general reasoning skills.

Bian et al (2023) tested ChatGPT and GPT-3.5 on many of these benchmarks and reproduced their ability to answer the questions. They also found that these LLMs lag behind in social, temporal, and event commonsense. They concluded that ChatGPT has a strong base of commonsense knowledge but struggles to apply reasoning that uses this knowledge.

In summary, LLMs do reasonably well on tests of commonsense reasoning, and it seems reasonable to conclude that some degree of commonsense reasoning is occurring. That said, the level of performance might be artificially high due to data contamination and/or the use of shortcuts.

Also, none of these datasets test the type of real-world, complex commonsense reasoning that humans perform when faced with situations they have never encountered previously. For example, when driving a car, people use commonsense reasoning to figure out what to do when a deer darts onto the highway, when a flood makes the road difficult or impossible to navigate, and when cars are fishtailing, trying to get up an icy hill. People do not learn about all these possible edge cases in driving school. Instead, we use our everyday commonsense reasoning skills to predict actions and outcomes. If we see a ball roll onto the street, we know to look out for children chasing the ball. We change our driving behavior when we see the car in front of us swerving, inferring that the driver might be intoxicated or texting.

6.2.2 Abstract reasoning

One of the most common ways to test humans for abstract thinking capabilities is to ask them to solve analogy problems, so it makes sense to use humans as a benchmark when analyzing analogical reasoning in LLMs. Mathematical and coding abilities are also considered forms of abstract reasoning, and these capabilities have also been widely studied.

6.2.2.1 Reasoning by analogy

GPT-3 was tested for its ability to solve analogy problems found on the SAT college entrance exams. Here is an example problem:

Question: lull is to trust as

A: cajole is to compliance (correct answer)

B: balk is to fortitude

C: betray is to loyalty

D: hinder is to destination

E: soothe is to passion

GPT-3 was able to achieve 65% correct on this set of problems (Brown et al, 2020).

One possible explanation for how GPT-3 performs this task is that it compares word embeddings. Word embeddings locate individual words in a multidimensional space in which distances and directions capture aspects of meaning such as singular vs. plural and male vs. female. GPT-3 might perform this analogy task by locating the question words (e.g. “lull” and “trust”) in this space and identifying the length and direction of the line that connects the two points. It would then do the same for each possible answer and choose the answer with the most similar length and direction.
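A minimal sketch of this hypothesized vector-offset strategy appears below. The two-dimensional “embeddings” are invented purely for illustration (real embeddings are learned and have hundreds or thousands of dimensions), and nothing here claims that GPT-3 actually works this way. Scoring by the Euclidean distance between offset vectors penalizes differences in both length and direction.

```python
import math

# Hypothetical 2-D "embeddings", invented for illustration only.
EMBEDDINGS = {
    "lull": (1.0, 0.0), "trust": (1.0, 2.0),
    "cajole": (3.0, 1.0), "compliance": (3.2, 2.9),
    "balk": (1.0, 1.0), "fortitude": (4.0, 1.0),
    "betray": (0.0, 3.0), "loyalty": (0.0, 0.5),
    "hinder": (2.0, 2.0), "destination": (0.0, 2.0),
    "soothe": (2.0, 0.0), "passion": (4.0, 0.5),
}

def offset(a, b):
    """Vector from word a to word b; it encodes both direction and length."""
    va, vb = EMBEDDINGS[a], EMBEDDINGS[b]
    return tuple(y - x for x, y in zip(va, vb))

def solve_analogy(question, choices):
    """Return the label of the choice whose offset is closest to the question's offset."""
    q = offset(*question)
    return min(choices, key=lambda label: math.dist(q, offset(*choices[label])))

CHOICES = {
    "A": ("cajole", "compliance"), "B": ("balk", "fortitude"),
    "C": ("betray", "loyalty"), "D": ("hinder", "destination"),
    "E": ("soothe", "passion"),
}
print(solve_analogy(("lull", "trust"), CHOICES))  # A
```

Because the vectors were chosen by hand, the correct answer here says nothing about real embeddings; the point is only the mechanics of comparing offsets.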

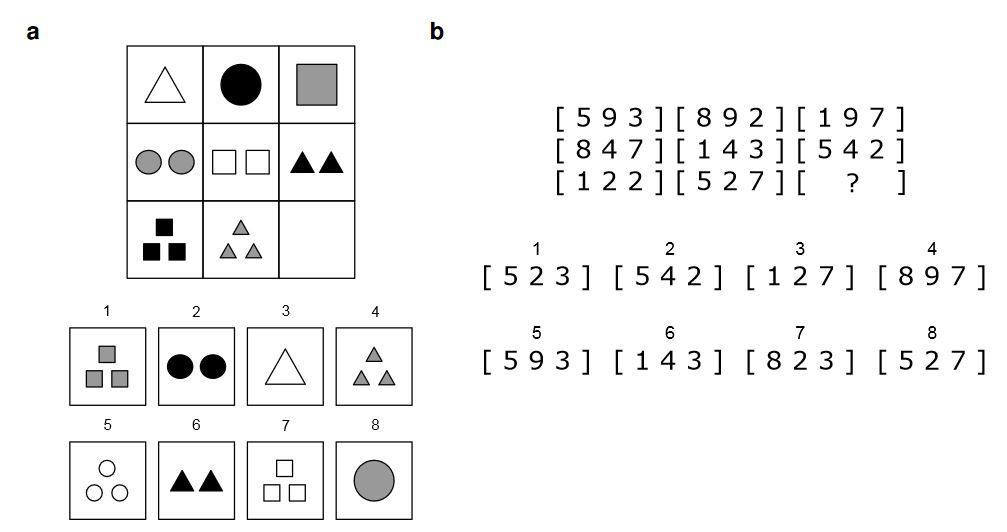

UCLA researchers (Webb et al, 2022) tested GPT-3 on a version of the Raven’s Progressive Matrices test, which measures abstract pattern induction in humans.

The left side of the figure above shows a Raven’s test example. Because GPT-3 does not accept visual input, the UCLA researchers converted the visual tests to numeric tests, as illustrated in the right half of the figure. The numbers on the right side can be interpreted as follows:

For each bracketed element (e.g. [ 5 9 3 ]), the first number encodes the shape of the object(s): 5 = triangle, 8 = circle, and 1 = square. The second number encodes the number of objects (9 = one object, 4 = two objects, 2 = three objects). The third number encodes the fill color (3 = no fill, 2 = black fill, 7 = gray fill). For example, [5 9 3] means one triangle with no fill.
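As a minimal sketch, the code tables described above can be written as Python dictionaries and decoded mechanically. The names `SHAPE`, `COUNT`, `FILL`, and `decode` are our own. Once shape, count, and fill have been turned into numbers like this, much of the perceptual work of identifying objects has already been done for the model.

```python
# Code tables as described in the paragraph above.
SHAPE = {5: "triangle", 8: "circle", 1: "square"}
COUNT = {9: 1, 4: 2, 2: 3}          # code -> number of objects
FILL = {3: "no fill", 2: "black fill", 7: "gray fill"}

def decode(cell):
    """Translate one bracketed element [shape count fill] into readable attributes."""
    shape_code, count_code, fill_code = cell
    return SHAPE[shape_code], COUNT[count_code], FILL[fill_code]

print(decode([5, 9, 3]))  # ('triangle', 1, 'no fill')
print(decode([5, 2, 3]))  # ('triangle', 3, 'no fill')
```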

They found GPT-3 performed better than the average human test-taker and GPT-4 performed even better than GPT-3.

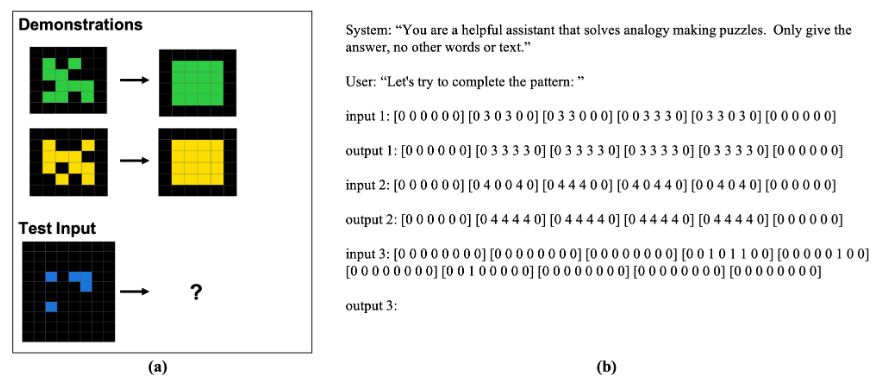

So, is GPT-3/4 better at abstract thinking than humans? Santa Fe Institute researchers (Moskvichev et al, 2023) argue that the version of Raven’s given to the computer systems is far easier than the one given to humans, because features such as objects, shapes, number, and orientation have already been abstracted into numbers in the version given to the computer systems. Instead, they designed a different test, which they named ConceptARC:

To solve these problems, the computer system must identify objects, shapes, number, and orientation and use abstract reasoning to identify patterns using these higher-level concepts. They found that “…humans substantially outperform the machine solvers on this benchmark, showing abilities to abstract and generalize concepts that are not yet captured by AI systems.”

Mitchell et al (2023) later extended this result from simple zero-shot prompts to more detailed one-shot prompts, and humans still outperformed the machines.

Still, it is reasonable to conclude that some degree of analogical reasoning is occurring in LLMs, though probably not at the level of analogical reasoning in humans.

6.2.2.2 Logical reasoning

Logical reasoning involves starting with a set of premises and/or observations and using specific types of processes to arrive at conclusions. The main types of logical reasoning are:

- Deductive reasoning: Start with a set of premises and use rules of inference such as modus ponens and modus tollens to arrive at a conclusion. For example: All mammals are warm-blooded. Dolphins are mammals. Therefore, dolphins are warm-blooded.

- Inductive reasoning: Start with a set of specific observations and draw general conclusions. For example, if I do a survey of customers in a target demographic and find that most of the surveyed customers prefer product-x to product-y, I might conclude that this survey is representative of the whole target market and step up my marketing of product-x to that demographic.

- Abductive reasoning: Start with an observation or set of observations and infer the most likely explanation. For example, if I’m driving and see another driver weaving back and forth, I might conclude that the driver is impaired and drive accordingly.

If the premises are true and the rules of inference are applied correctly, conclusions drawn with deductive reasoning are guaranteed to be true. Conclusions drawn with inductive and abductive reasoning are likely to be true but are not guaranteed.
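To make deductive reasoning concrete, here is a minimal sketch of forward chaining, which applies modus ponens repeatedly to the dolphin syllogism. Encoding facts as (predicate, entity) pairs and rules as (premise, conclusion) pairs is our own illustrative choice.

```python
# Facts are (predicate, entity) pairs; rules are (premise, conclusion) pairs
# meaning "for every x, premise(x) implies conclusion(x)".
FACTS = {("mammal", "dolphin")}        # Dolphins are mammals.
RULES = [("mammal", "warm-blooded")]   # All mammals are warm-blooded.

def forward_chain(facts, rules):
    """Apply modus ponens repeatedly until no new facts can be derived."""
    derived = set(facts)
    changed = True
    while changed:
        changed = False
        for premise, conclusion in rules:
            for predicate, entity in list(derived):
                new_fact = (conclusion, entity)
                if predicate == premise and new_fact not in derived:
                    derived.add(new_fact)  # premise(x), premise -> conclusion, so conclusion(x)
                    changed = True
    return derived

print(("warm-blooded", "dolphin") in forward_chain(FACTS, RULES))  # True
```

Because the loop runs until nothing changes, the same code also handles multi-step chains (A implies B, B implies C), and every derived fact is true whenever the starting facts and rules are.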

LLMs have demonstrated some ability in all three of these types of logical reasoning (Bang et al, 2023; Tang et al, 2023).

Researchers at the Hong Kong University of Science and Technology (Bang et al, 2023) tested ChatGPT on deductive, inductive, and abductive datasets:

| Type | Task | Example Prompt | Answer | % Correct |
| --- | --- | --- | --- | --- |
| Deduction | bAbI task 15 (with prompt engineering) (Weston et al, 2016) | Given facts: Wolves are afraid of mice. Sheep are afraid of mice. Winona is a sheep. Mice are afraid of cats. Cats are afraid of wolves. Jessica is a mouse. Emily is a cat. Gertrude is a wolf. Based on the given facts above, do a reasonable inference on this question using deductive reasoning: What is winona afraid of? | Mouse | 93% |
| Deduction | EntailmentBank (Clark et al, 2018) | Earth is a kind of planet. A planet is a kind of celestial object / celestial body. Earth is located in the milky way galaxy. Which object is a planet found in the Milky Way Galaxy? | Earth | 93% |
| Induction | bAbI task 16 (with prompt engineering) (Weston et al, 2016) | Given facts: Bernhard is a swan. Greg is a frog. Brian is a swan. Julius is a lion. Greg is gray. Julius is yellow. Lily is a lion. Lily is green. Brian is yellow. The most recent fact is the correct fact. Based on the given facts above, do a reasonable inference on this question using inductive reasoning: What color is Bernhard? | Yellow | 67% |
| Induction | CLUTRR (Minervini et al, 2020) | [Jason] and his wife [Gabrielle] went to the beach to watch the fireworks on the 4th of July. [Jason] and his daughter [Alma] took a day off school to go to the zoo… Who is Alma to Gabrielle? | Daughter | 43% |
| Abduction | αNLI (Bhagavatula et al, 2020) | Jenny cleaned her house and went to work, leaving the window just a crack open. When Jenny returned home she saw that her house was a mess! Which hypothesis is most likely correct: (1) A thief broke into the house by pulling open the window. (2) It was a breezy day and a large bird flew into the house. (3) At work, she opened her window and the wind blew her papers everywhere. | (1) | 87% |
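The inductive bAbI task 16 example can be made concrete with a small rule-based sketch: from “Brian is a swan” and “Brian is yellow,” generalize that swans are yellow and apply that to Bernhard. The regular-expression parsing and the “most recently described member of the same species” rule are our own simplifying assumptions, not how bAbI is scored or how any LLM solves it.

```python
import re

# Facts from the bAbI task 16 example prompt in the table above.
FACTS = (
    "Bernhard is a swan. Greg is a frog. Brian is a swan. Julius is a lion. "
    "Greg is gray. Julius is yellow. Lily is a lion. Lily is green. Brian is yellow."
)

def infer_color(name, facts):
    """Guess name's color by generalizing from other members of its species."""
    species, color = {}, {}
    # "X is a Y." states a species; "X is Y." (no article) states a color.
    for subject, article, value in re.findall(r"(\w+) is (an? )?(\w+)\.", facts):
        if article:
            species[subject] = value
        else:
            color.pop(subject, None)  # re-insert so dict order reflects recency
            color[subject] = value    # "the most recent fact is the correct fact"
    # Induction: assume members of a species share a color.
    for other, value in reversed(list(color.items())):
        if other != name and species.get(other) == species.get(name):
            return value
    return None  # no other member of the species to generalize from

print(infer_color("Bernhard", FACTS))  # yellow
```

Unlike the deductive case, the conclusion here is only plausible: nothing in the facts guarantees that all swans share a color.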

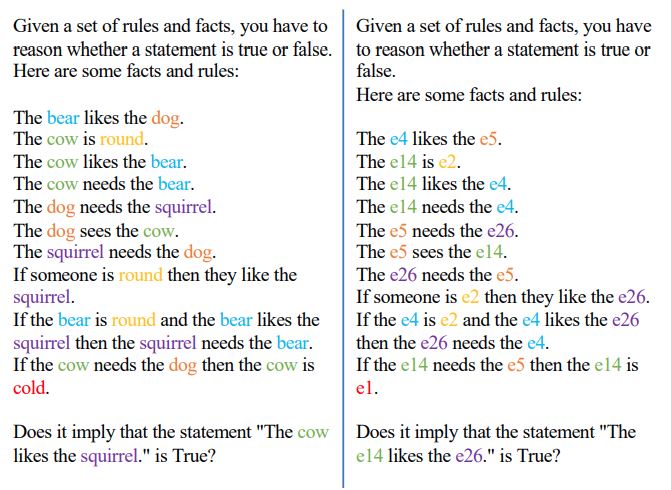

Another group of Chinese researchers (Tang et al, 2023) tested the deductive, inductive, and abductive reasoning capabilities of GPT-4, ChatGPT, and LLaMA using sets of facts like the following:

The example on the right (termed Symbols in the results below) contains the same facts as the example on the left (termed Semantics in the results below); however, the nouns (e.g. “bear”) and adjectives (e.g. “round”) are replaced with symbols (e.g. “e4” and “e2”). The goal was to decouple the semantics of the words from the reasoning process.

GPT-4 far outperformed the other two LLMs. Its accuracy scores in the zero-shot setting with chain-of-thought prompting were:

| | Deduction | Induction | Abduction |
| --- | --- | --- | --- |
| Symbols | 71% | 9% | 31% |
| Semantics | 86% | 54% | 33% |

Deduction performance was good for both types of fact presentations, though performance was better for Semantics.

Induction performance was much lower: 54% for Semantics and only 9% for Symbols.

Abduction performance was poor and about the same for the two types of fact presentations.

So, deduction performance was good overall while induction and abduction were poor overall. Moreover, the substitution of symbols for the nouns and adjectives significantly reduced performance, especially for induction.

The researchers concluded that performance is not due strictly to reasoning capabilities. In order to perform the logical reasoning, it may be that the facts need to be linked to existing objects in memory. It should be noted that it is possible (and intuitively likely) that humans might also perform worse on the tasks with symbols.

These studies seem to show that some degree of logical reasoning is occurring in LLMs and that deductive reasoning is the strongest type of logical reasoning in LLMs.

Another study of inductive reasoning (Qiu et al, 2023) showed that, while LLMs are “phenomenal” at generating candidate inductive rules, they are poor inductive reasoners that have great difficulty identifying which rules are plausible and applying proposed rules to specific instances.

6.2.2.3 Mathematical reasoning

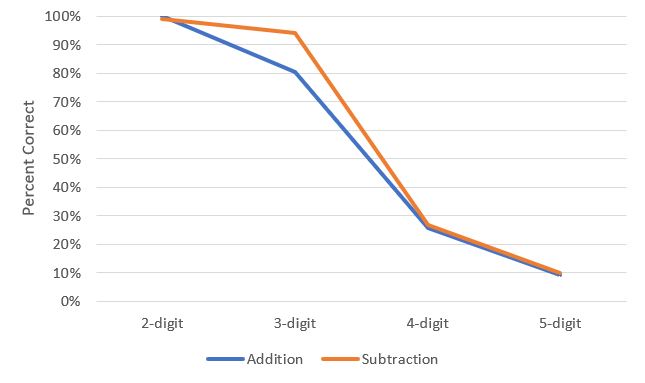

GPT-3 was tested on a series of arithmetic problems. Its accuracy scores are shown in the figure above.

GPT-3 did fairly well on two-digit addition and subtraction. However, as the number of digits in the addition/subtraction problem increased, performance fell off a cliff. For four-digit addition and subtraction, accuracy was under 30%. GPT-3 also scored 29% correct on 2-digit multiplication and 21% on composite questions such as “what is 6+(4*8)?”.

Two-digit addition is a fairly simple pattern to learn and GPT-3 certainly encountered many two-digit addition examples in its training dataset. Five-digit addition examples were probably rare and GPT-3 learned a pattern that didn’t work well.

So, did GPT-3 acquire mathematical reasoning skills or not? People learn a single reasoning pattern that works for two-, three-, four-, and five-digit addition. GPT-3 clearly isn’t learning a single method of adding numbers. But GPT-3 may be learning separate patterns for two-, three-, four-, and five-digit addition.
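The single method people learn is the grade-school column algorithm: add the digits from right to left and carry into the next column. A minimal Python sketch shows that one procedure covers any number of digits; working on digit strings (rather than Python’s built-in integer addition) is our choice, to mirror how the procedure is taught.

```python
def add_by_columns(a: str, b: str) -> str:
    """Add two non-negative integers given as digit strings, right to left with carries."""
    # Pad the shorter number with leading zeros so the columns line up.
    a, b = a.zfill(len(b)), b.zfill(len(a))
    carry = 0
    digits = []
    for da, db in zip(reversed(a), reversed(b)):
        total = int(da) + int(db) + carry
        digits.append(str(total % 10))  # digit written in this column
        carry = total // 10             # carried into the next column
    if carry:
        digits.append(str(carry))
    return "".join(reversed(digits))

print(add_by_columns("48", "57"))        # 105
print(add_by_columns("98765", "43210"))  # 141975
```

Nothing in the loop depends on the number of digits, which is exactly the kind of length-independent rule that GPT-3’s falling accuracy suggests it did not learn.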

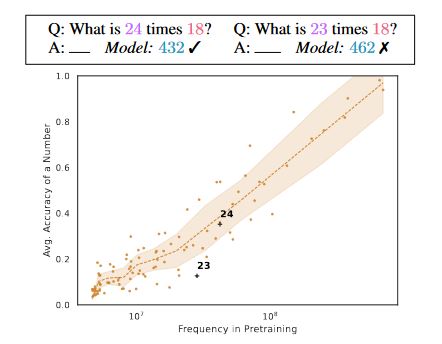

Razeghi et al (2022) reasoned that if an LLM really understands numbers and numerical reasoning, then, when tested on multiplication tables of numbers from 1 to 99, it should have similar multiplication accuracy for numbers of similar complexity (e.g. 23 vs. 24). However, they found that multiplication accuracy for GPT-J, a 6B-parameter LLM, was a function of how frequently the numbers appeared in the training text, as shown in the figure above.

For example, multiplication accuracy for the number 24 was far better than for the number 23, and 24 appeared more frequently than 23 in the training text. The researchers tested a variety of numerical tasks and found that performance on these tasks was a function of number frequency. They assert that this is evidence of memorization and argue that it calls into question whether LLMs are actually learning generalized numerical reasoning at all.

LLMs have also been evaluated on several standardized math tests.

LLM accuracy scores on GSM-8K, a dataset of grade-school math word problems, were:

| Gemini | GPT-4 | LLaMA | CodeT5+ | Claude 3 |
| --- | --- | --- | --- | --- |
| 94% | 92% | 57% | 74% | 95% |

AQuA-RAT (Ling et al, 2017) contains test questions like this one: Two trains running in opposite directions cross a man standing on the platform in 27 seconds and 17 seconds respectively, and they cross each other in 23 seconds. The ratio of their speeds is: A) 3/7 B) 3/2 C) 3/88 D) 3/8 E) 2/2
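For reference, here is the reasoning this question requires, written as a short Python sketch with the derivation in comments. The function name `speed_ratio` and its parameters are our own.

```python
from fractions import Fraction

# Let v1, v2 be the train speeds and L1, L2 their lengths.
# Passing the man:     L1 = t1 * v1,  L2 = t2 * v2
# Passing each other:  L1 + L2 = t_cross * (v1 + v2)   (opposite directions: speeds add)
# Substituting:        t1*v1 + t2*v2 = t_cross*v1 + t_cross*v2
# Rearranging:         (t1 - t_cross) * v1 = (t_cross - t2) * v2
def speed_ratio(t1, t2, t_cross):
    """Return v1 / v2 as an exact fraction."""
    return Fraction(t_cross - t2, t1 - t_cross)

print(speed_ratio(27, 17, 23))  # 3/2
```

With t1 = 27, t2 = 17, and t_cross = 23, the ratio is 6/4 = 3/2, answer B.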

GPT-4 scored 73% and LLaMA 2 scored 23% on this dataset compared to an average human score of 85% (Zhong et al, 2023).

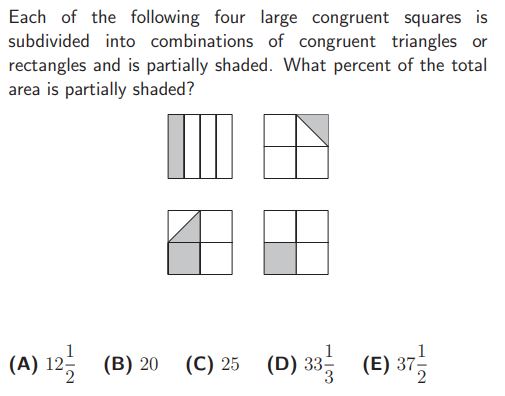

AMC 10/12 are multiple-choice examinations in high school mathematics designed to promote the development and enhancement of problem-solving skills. AMC 10 is for students in 10th grade and below. AMC 12 is for students in 12th grade and below. These tests contain high school math competition questions covering topics such as algebra, geometry, and trigonometry, like the example in the figure above.

GPT-4 scored in the 6th to 12th percentile range on AMC 10 and in the 45th to 66th percentile range on AMC 12 (OpenAI, 2023). The AMC 10 result is no better than random guessing. It’s odd that GPT-4 scored better on the test for 12th graders than it did on the test for 10th graders. One possible explanation is that some of the AMC 12 material was in the training dataset.
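For context, the expected score from pure guessing follows from the AMC scoring rules: 25 questions, five choices each, and 6 points per correct answer (wrong answers earn 0 and blanks earn 1.5). OpenAI (2023) reports GPT-4’s AMC 10 score as 30 out of 150. A minimal sketch of the arithmetic follows; the function and constant names are our own.

```python
from fractions import Fraction

# AMC 10/12 scoring: 25 questions, 5 choices each, 6 points per correct answer,
# 1.5 points per question left blank, 0 points per wrong answer.
QUESTIONS, CHOICES = 25, 5
POINTS_CORRECT = Fraction(6)
POINTS_BLANK = Fraction(3, 2)

def expected_guessing_score(questions=QUESTIONS, choices=CHOICES):
    """Expected score when every question is answered by a uniformly random guess."""
    return questions * Fraction(1, choices) * POINTS_CORRECT

def all_blank_score(questions=QUESTIONS):
    """Score when every question is left blank."""
    return questions * POINTS_BLANK

print(expected_guessing_score())  # 30
print(all_blank_score())          # 75/2
```

Random guessing is expected to score 30, the same as GPT-4’s reported score, and simply leaving every question blank would score 37.5.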

Another group of researchers (Frieder et al, 2023) tested GPT-4 on datasets composed of graduate-level mathematics problems. GPT-4 scored well below the level of an average graduate student in mathematics.

Researchers (Davis and Aronson, 2023) have also found that GPT-4 performance on math tasks can be significantly enhanced by the use of plugins such as Code Interpreter and Wolfram Alpha that offload math calculations to external services.

And, like humans, LLMs can be prompted to gain confidence in an answer by producing that answer in different ways. MathPrompter (Imani et al, 2023) generates multiple algebraic expressions to solve the same math problem and gains confidence in an answer when other methods produce the same answer. For example, MathPrompter was asked to “Write a mathematical equation and generate the answer” and also to “Write a python function that returns the answer”. When both methods produce the same answer, it answers with higher confidence.
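The agreement check at the heart of this idea can be sketched in a few lines. The word problem and both “LLM outputs” below are hypothetical stand-ins written by hand; MathPrompter itself obtains them by prompting an LLM, and this sketch does not reproduce its full pipeline.

```python
import math

# Hypothetical word problem: "Pencils cost $1 for 3 pencils. How much do 12 pencils cost?"

def solve_by_equation():
    # Stand-in for an answer to "Write a mathematical equation and generate
    # the answer": cost = 12 * (1 / 3)
    return 12 * (1 / 3)

def solve_by_program():
    # Stand-in for an answer to "Write a python function that returns the answer".
    pencils, pencils_per_dollar = 12, 3
    return pencils / pencils_per_dollar

def answer_with_confidence(solvers, tol=1e-9):
    """Run every solver; report high confidence only if all answers agree."""
    answers = [solve() for solve in solvers]
    agree = all(math.isclose(a, answers[0], abs_tol=tol) for a in answers)
    return answers[0], ("high" if agree else "low")

answer, confidence = answer_with_confidence([solve_by_program, solve_by_equation])
print(answer, confidence)  # 4.0 high
```

Agreement between independent methods does not prove an answer is right, but two different solution paths are less likely to make the same mistake.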

LLMs clearly acquire mathematical reasoning capabilities during training. However, these capabilities are far more limited than those of humans, especially in areas like simple arithmetic, and LLMs don’t seem to acquire generalized rules the way humans do.

6.2.2.4 Coding

OpenAI started with GPT-3 and fine-tuned it on 179 GB of code from 54 million GitHub software repositories to create Codex (Chen et al, 2021). Codex can generate code continuations in 12 programming languages in response to natural language descriptions of the desired code. Codex-S is a version of Codex fine-tuned on code from programming competitions and from continuous integration tasks, both of which emphasize code correctness. Codex is available via an API and inside certain development environments under the GitHub Copilot name.

Codex is designed to be a programmer’s helper. It can’t replace programmers because it doesn’t always generate good code. For example, NYU researchers found that about 40% of the programs GitHub Copilot generated in security-relevant scenarios had security flaws. It also doesn’t do well when a natural language description requires decomposition into multiple smaller programs. However, it can be useful as a starting point as long as a programmer checks and corrects the generated code.

Since Codex, researchers have developed many other coding assistants:

- Meta’s Code Llama (Rozière et al, 2023) started with LLaMA 2 as a base and was fine-tuned on code. Similarly, Google’s PaLMCoder (Narang and Chowdhery, 2022) started with its PaLM LLM and fine-tuned it on Python code.

- DeepMind’s AlphaCode (Li et al, 2022), Amazon’s CodeWhisperer (Athiwaratkun et al, 2023), InCoder (Fried et al, 2023), and the open source StarCoder (R. Li et al, 2023) were all trained directly on code-only datasets.

- StarCoder can generate code in 80+ programming languages. AlphaCode was trained on code from coding competitions and, on average, ranked within the top 54% of participants in online competitions with more than 5,000 contestants.

- Salesforce’s CodeT5+ (Wang et al, 2023) was trained on both natural language text and code.

- Microsoft researchers created phi-1 (Gunasekar et al, 2023), a model trained on “textbook quality” data. Despite its small size (1.3B parameters), it outperformed many larger models on HumanEval and MBPP.

- Berkeley and Microsoft researchers (Patil et al, 2023) fine-tuned a LLaMA model to generate API calls. The resulting model, named Gorilla, outperformed GPT-4 on API call generation. They also combined it with an external API database using a retrieval-augmented generation (RAG) approach to reduce hallucinations and adapt to API changes.

- Other commercial tools include Cody and numerous.ai.

See Xu and Shu (2022) for a survey of coding LLMs.

In March 2023, Stack Overflow surveyed software development professionals on the impact of LLM-based coding assistants. 70% said they were either already using them or planning to use them. However, software developers have to be very careful because LLMs make many mistakes and can produce insecure code (Perry et al, 2022).

The table below shows the performance of various LLMs on two coding benchmarks. The HumanEval coding benchmark (Chen et al, 2021) measures the ability to produce code from natural language descriptions; the scores listed below use the Pass@1 scoring method, which only considers the output that was top-ranked by the LLM. MBPP (Mostly Basic Python Problems) (Austin et al, 2021) contains 974 programming tasks designed to be solvable by entry-level human programmers.

| Benchmark | Claude 3 | Gemini | GPT-3/4 | Code LLaMA | Codex | PaLMCoder | CodeT5+ | Phi | |
|---|---|---|---|---|---|---|---|---|---|
| HumanEval | 85% | 74% | 67% | 54% | 34% | 36% | 41% | 43% | 51% |
| MBPP | 52% | 62% | 46% | 47% | 53% |
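For reference, Chen et al. (2021) estimate pass@k by generating n ≥ k samples per problem, counting the c samples that pass the unit tests, and computing the probability that a random set of k of those samples contains at least one passing sample: 1 − C(n−c, k)/C(n, k). The sketch below implements that estimator; the function names `pass_at_k` and `benchmark_pass_at_k` are illustrative, not taken from any released evaluation harness.

```python
from math import comb

def pass_at_k(n: int, c: int, k: int) -> float:
    """Unbiased pass@k estimate for a single problem.

    n: number of code samples generated for the problem
    c: number of those samples that pass the problem's unit tests
    k: number of samples the metric is allowed to "submit"
    """
    if not (0 <= c <= n and 1 <= k <= n):
        raise ValueError("need 0 <= c <= n and 1 <= k <= n")
    if n - c < k:
        # Every possible set of k samples contains at least one passing sample.
        return 1.0
    # 1 minus the probability that k samples drawn without replacement all fail.
    return 1.0 - comb(n - c, k) / comb(n, k)

def benchmark_pass_at_k(results: list[tuple[int, int]], k: int) -> float:
    """Average pass@k over a benchmark, given one (n, c) pair per problem."""
    return sum(pass_at_k(n, c, k) for n, c in results) / len(results)

# 10 samples per problem; 3 pass on the first problem, none on the second.
print(pass_at_k(10, 3, 1))                         # ≈ 0.3, i.e. c / n when k = 1
print(pass_at_k(10, 3, 5))                         # ≈ 0.917
print(benchmark_pass_at_k([(10, 3), (10, 0)], 1))  # ≈ 0.15
```

With k = 1 the estimator reduces to c/n, the fraction of samples that pass, which is why Pass@1 is the strictest of these scores.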

The next table shows GPT-4 results on two online programming challenges, Codeforces and Leetcode:

| Coding Challenge | Human Percentile |
|---|---|
| Codeforces | 4 |
| Leetcode (Easy) | 31 |
| Leetcode (Medium) | 21 |
| Leetcode (Hard) | 3 |

GPT-4 performed very poorly against better human programmers.

Princeton University researchers (Jimenez et al, 2023) created SWE-bench, an evaluation framework containing 2,294 software engineering problems drawn from real-world GitHub repositories. Each example contains a description of the issue to be resolved and is fed into the LLM as part of a prompt that asks the LLM to produce code as output. GPT-4 was only able to solve 1.7% of the problems, and Claude 2 solved 4.8%.

In addition to writing code, LLMs can fix bugs. European researchers (Sobania et al, 2023) found that ChatGPT was able to fix 31 of 40 bugs on a bug-fixing benchmark.

In summary, LLMs have demonstrated an impressive ability to perform the reasoning processes required to write code. That said, the code can be wrong and is far below the level of an experienced human coder.

6.2.2.5 Humor

Google researchers (Chowdhery et al, 2022) gave their PaLM LLM a handful of jokes and asked it to explain what makes each joke funny. This is an example joke:

Prompt: Did you see that Google just hired an eloquent whale for their TPU team? It showed them how to communicate between two different pods!

PaLM: TPUs are a type of computer chip that Google uses for deep learning. A “pod” is a group of TPUs. A “pod” is also a group of whales. The joke is that the whale is able to communicate between two groups of whales, but the speaker is pretending that the whale is able to communicate between two groups of TPUs.

Though it’s just an anecdotal study on a handful of prompts, the Google researchers concluded that the PaLM model has some ability to understand humor.

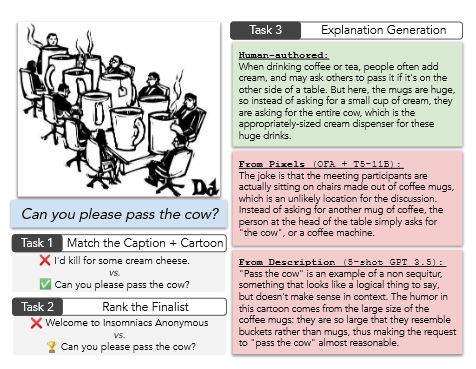

Another group of researchers (Hessel et al, 2023) compared how well GPT-4 and humans could explain the humor in cartoon captions from a decade of New Yorker Cartoon Caption Contests. They gave both GPT-4 and humans three tasks that are illustrated below:

Task 1 was to match a cartoon to a caption. Task 2 was to identify which of two captions was more popular. Task 3 was to explain the humor. For the LLM tests, they used text descriptions of the cartoons instead of the cartoon images themselves. They found that humans outperformed the best LLMs in all three tasks, with the human explanations preferred 2/3 of the time.

They also ran these tests on multimodal models. These models were presented with the actual visual cartoons instead of descriptions. However, these models didn’t perform anywhere near as well as the models given the textual cartoon descriptions. There are two possible explanations for this result: One is that models don’t understand the visual cartoons very well. The other is that the textual description provides surface-level cues that help the models perform the tasks.

LLMs can reason about humor to some degree, but not as well as humans.

6.2.2.6 Theory of mind

Stanford Professor Michal Kosinski (2022) argued that large language models have evolved to the point where they have achieved Theory-of-Mind, i.e., the ability to reason about the beliefs of people with whom they interact. He gave the following prompt to ChatGPT:

Prompt: Here is a bag filled with popcorn. There is no chocolate in the bag. Yet, the label on the bag says “chocolate” and not “popcorn.” Sam finds the bag. She had never seen the bag before. She cannot see what is inside the bag. She reads the label.

Question 1: Sam opens the bag and looks inside. She can clearly see that it is full of ___

Question 2: Before opening the bag, she calls a friend to tell them that she has just found a bag full of ___

These prompts and questions were carefully designed so that word frequency alone could not reveal the answer. The words “popcorn” and “chocolate” each occur twice in the prompt. The first question has “popcorn” as the correct answer. The second question has “chocolate” as the correct answer.
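The word-frequency control can be checked mechanically. The sketch below counts the two candidate words in the prompt and implements a naive baseline, the hypothetical `frequency_guess`, that answers with whichever candidate appears most often; because the counts are tied, that baseline has no basis for choosing either answer. It is an illustration of the design idea, not code from Kosinski's study.

```python
import re
from collections import Counter
from typing import Optional

# Kosinski's unexpected-contents prompt, as quoted in the text.
PROMPT = (
    "Here is a bag filled with popcorn. There is no chocolate in the bag. "
    "Yet, the label on the bag says \u201cchocolate\u201d and not \u201cpopcorn.\u201d "
    "Sam finds the bag. She had never seen the bag before. "
    "She cannot see what is inside the bag. She reads the label."
)

def word_counts(text: str, words: list[str]) -> dict[str, int]:
    """Count case-insensitive, whole-word occurrences of each candidate word."""
    tokens = Counter(re.findall(r"[a-z]+", text.lower()))
    return {w: tokens[w] for w in words}

def frequency_guess(text: str, candidates: list[str]) -> Optional[str]:
    """Naive baseline: answer with the most frequent candidate.

    Returns None when candidates are tied, i.e. frequency gives no signal.
    """
    counts = word_counts(text, candidates)
    best = max(counts.values())
    winners = [w for w, n in counts.items() if n == best]
    return winners[0] if len(winners) == 1 else None

print(word_counts(PROMPT, ["popcorn", "chocolate"]))      # {'popcorn': 2, 'chocolate': 2}
print(frequency_guess(PROMPT, ["popcorn", "chocolate"]))  # None
```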

Kosinski argued that the LLM must understand beliefs in order to get the correct answer.

However, Harvard Professor Tomer Ullman (2023) showed that slight variations on these tests caused ChatGPT to score significantly lower, a drop that would not be expected for people. He concluded that success on these tests doesn’t really demonstrate theory-of-mind.

University of California San Diego researchers (Trott et al, 2023) used a different theory-of-mind test with examples like these:

True Belief Passage: “Sean is reading a book. When he is done, he puts the book in the box and picks up a sweater from the basket. Then, Anna comes into the room. Sean watches Anna move the book from the box to the basket. Sean leaves to get something to eat in the kitchen. Sean comes back into the room and wants to read more of his book.”

False Belief Passage: “Sean is reading a book. When he is done, he puts the book in the box and picks up a sweater from the basket. Then, Anna comes into the room. Sean leaves to get something to eat in the kitchen. While he is away, Anna moves the book from the box to the basket. Sean comes back into the room and wants to read more of his book.”

Implicit Cue: “Sean goes to get the book from the ___.”

Explicit Cue: “Sean thinks the book is in the ___.”
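What the two passages probe can be stated as a small rule: a person's belief about where an object is gets updated only by events that person witnesses. The toy model below (the `Event` class and `beliefs_after` function are hypothetical, and this is not how Trott et al. scored model responses) shows that the correct completion for both cues is "basket" in the true-belief passage and "box" in the false-belief passage.

```python
from dataclasses import dataclass

@dataclass
class Event:
    """An object is placed somewhere; only the listed witnesses observe it."""
    obj: str
    location: str
    witnesses: set[str]

def beliefs_after(events: list[Event], agents: list[str]) -> dict[str, dict[str, str]]:
    """Each agent believes an object is wherever they last saw it placed."""
    beliefs: dict[str, dict[str, str]] = {agent: {} for agent in agents}
    for event in events:
        for agent in agents:
            if agent in event.witnesses:
                beliefs[agent][event.obj] = event.location
    return beliefs

AGENTS = ["Sean", "Anna"]
TRUE_BELIEF = [
    Event("book", "box", {"Sean"}),
    Event("book", "basket", {"Sean", "Anna"}),  # Sean watches Anna move the book
]
FALSE_BELIEF = [
    Event("book", "box", {"Sean"}),
    Event("book", "basket", {"Anna"}),  # Sean is away in the kitchen
]

print(beliefs_after(TRUE_BELIEF, AGENTS)["Sean"]["book"])   # basket
print(beliefs_after(FALSE_BELIEF, AGENTS)["Sean"]["book"])  # box
```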

GPT-3 responded correctly 75% of the time, which was below human performance of 83%. Unless one argues that this is not a good test of theory-of-mind, these results indicate that GPT-3 was capable of some level of reasoning about human beliefs.

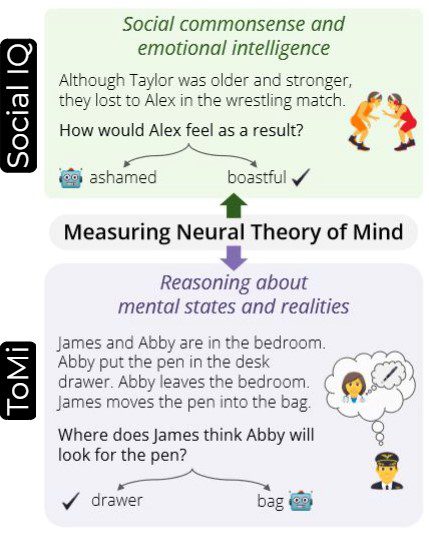

A contrary result was obtained by another group of researchers (Sap et al, 2023) who tested GPT-3 on two theory of mind benchmarks that are illustrated below:

GPT-3 was only able to achieve a score of 55% on Social IQ and 60% on ToMi. Those scores were far below human performance and only slightly better than random guessing (50%).

A similar conclusion was reached by a group of University of Washington researchers (Kim et al, 2023) who created a theory of mind benchmark named FANTOM. They tested GPT-4, Llama 2, and other LLMs on this benchmark and found that they performed poorly.

In a more recent study, Kosinski (2024) tested multiple OpenAI models of different sizes on 40 false-belief tasks. He found that performance on these tasks increased with the number of parameters in the model, with GPT-4 able to solve 75% of the problems.

In summary, LLMs do well on some measures of theory of mind (ToM) but not others.

6.2.3 Limited to linear pattern-matching

Suppose friends are coming over and you need to plan dinner. You could decompose this task into sub-tasks…

(1) Decide what courses to serve: wine, salad, main course, dessert

(2) For each course, choose what to serve:


- Red wine

- Garden salad

- My favorite tilapia recipe

- Cheesecake

(3) Figure out the ingredients for each and make a shopping list.

(4) Go to the store and get the ingredients.

(5) Cook them.

This is an example of linear sub-task decomposition. You go step-by-step, always making forward progress and never backtracking. But it’s often not that easy. You might look at the course choices and realize that red wine might not be best with fish. Or you might remember that one of your guests doesn’t like fish. So, you back up and do a revision.
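The difference between the two planning styles can be sketched as code. Below, a forward-only planner commits to the first option for each course, while a backtracking planner undoes earlier choices when a later one breaks a constraint. The menu options and the single pairing rule (no red wine with fish) are hypothetical stand-ins for the example above, and backtracking depth-first search is just one simple way to revise a plan.

```python
from typing import Callable, Optional

Menu = dict[str, str]

COURSES = ["wine", "salad", "main", "dessert"]
# Hypothetical options; the first entry in each list is the default pick.
OPTIONS = {
    "wine": ["red wine", "white wine"],
    "salad": ["garden salad"],
    "main": ["tilapia"],
    "dessert": ["cheesecake"],
}

def compatible(menu: Menu) -> bool:
    """Hypothetical pairing rule: red wine is not served with fish."""
    return not (menu.get("wine") == "red wine" and menu.get("main") == "tilapia")

def linear_plan(courses, options, ok: Callable[[Menu], bool]) -> Optional[Menu]:
    """Commit to the first option for each course and never revise."""
    menu: Menu = {}
    for course in courses:
        menu[course] = options[course][0]
        if not ok(menu):
            return None  # stuck: a forward-only plan cannot back up
    return menu

def backtracking_plan(courses, options, ok, menu: Optional[Menu] = None) -> Optional[Menu]:
    """Depth-first search that revisits earlier choices when a later one fails."""
    menu = {} if menu is None else menu
    if len(menu) == len(courses):
        return dict(menu)
    course = courses[len(menu)]
    for choice in options[course]:
        menu[course] = choice
        if ok(menu):
            result = backtracking_plan(courses, options, ok, menu)
            if result is not None:
                return result
        del menu[course]  # back up and try the next option
    return None

print(linear_plan(COURSES, OPTIONS, compatible))        # None
print(backtracking_plan(COURSES, OPTIONS, compatible))  # white wine with the tilapia
```

The linear planner fails as soon as the fish course conflicts with the wine it already chose; the backtracking planner backs up two levels to the wine decision and swaps it.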

Researchers at the Allen Institute of AI and the University of Washington (Dziri et al, 2023) showed that LLMs are good at reasoning tasks that

- Require only linear decomposition into sub-tasks

- Involve sub-tasks (or sub-tasks similar enough to be pattern-matched) that were encountered during training

- Resemble problems the LLM encountered during training

These researchers showed that LLMs struggle with tasks that require more complex decomposition and/or sub-tasks that were not encountered during training. In particular, LLMs have difficulty combining existing sub-task skills to solve problems more complex than those they have seen before. They argued that these are inherent limitations of the transformer architecture that underlies virtually every LLM and demonstrated these limitations on three compositional tasks: multi-digit multiplication, logic grid puzzles, and classic dynamic programming. When the LLMs could linearly decompose these tasks into sub-tasks that they had seen during training, they did well. However, as the complexity of the tasks escalated, the accuracy of the transformers dwindled to nearly zero.
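Multi-digit multiplication shows why compositional tasks get harder quickly. Long multiplication is built from two simple sub-tasks, single-digit multiply-with-carry and adding shifted partial products, but the number of sub-tasks that must be chained correctly grows with the product of the digit counts. The sketch below (the function `long_multiply` and its step log are illustrative, not Dziri et al.'s code) records each sub-task as it multiplies.

```python
def long_multiply(a: int, b: int) -> tuple[int, list[tuple]]:
    """Multiply non-negative integers digit by digit, logging each sub-task.

    Returns the product and a list of steps: one ("mul", ...) step per
    single-digit product and one ("add", ...) step per shifted partial product.
    """
    steps: list[tuple] = []
    a_digits = [int(d) for d in reversed(str(a))]  # least significant first
    b_digits = [int(d) for d in reversed(str(b))]
    total = 0
    for j, db in enumerate(b_digits):
        carry = 0
        partial = 0
        for i, da in enumerate(a_digits):
            prod = da * db + carry
            steps.append(("mul", da, db, carry))
            partial += (prod % 10) * 10 ** i
            carry = prod // 10
        partial += carry * 10 ** len(a_digits)  # final carry of this row
        shifted = partial * 10 ** j
        total += shifted
        steps.append(("add", shifted))
    return total, steps

# An n-digit by n-digit product needs n*n single-digit steps plus n additions.
for n in (1, 2, 4, 8):
    x = int("9" * n)
    product, steps = long_multiply(x, x)
    print(n, len(steps), product == x * x)
```

Every step depends on the carry from the previous one, so a single mistaken sub-step anywhere in the chain corrupts the final answer.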

Other researchers (Chen et al, 2023) found that this limitation could be overcome by providing examples of how to use existing sub-task skills to perform a complex task. However, this is equivalent to an LLM encountering solutions to the task during training.

NYU researchers (Saparov and He, 2023) found that, in deductive reasoning, if multiple valid deduction steps are available, LLMs are not able to systematically explore the different options.
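Saparov and He's test problems (the PrOntoQA family) are built from rules of the form "every A is a B". When more than one rule applies at a step, a systematic reasoner keeps every option open instead of greedily committing to one. The breadth-first proof search below is a minimal sketch of that kind of exploration; the rule set is hypothetical and includes a distractor branch ("pet") that never reaches the goal.

```python
from collections import deque
from typing import Optional

def prove(start: str, goal: str, rules: dict[str, list[str]]) -> Optional[list[str]]:
    """Breadth-first search over deduction steps.

    rules maps a category to the categories it implies ("every A is a B").
    Every applicable rule is added to the frontier, so no option is skipped.
    Returns the chain of categories from start to goal, or None if unprovable.
    """
    frontier = deque([[start]])
    seen = {start}
    while frontier:
        chain = frontier.popleft()
        if chain[-1] == goal:
            return chain
        for nxt in rules.get(chain[-1], []):
            if nxt not in seen:
                seen.add(nxt)
                frontier.append(chain + [nxt])
    return None

# Hypothetical ontology: "cat" has two valid next steps.
RULES = {
    "cat": ["feline", "pet"],
    "pet": ["domesticated"],  # a valid but irrelevant branch
    "feline": ["carnivore"],
    "carnivore": ["mammal"],
}

print(prove("cat", "mammal", RULES))  # ['cat', 'feline', 'carnivore', 'mammal']
```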

Other studies (Valmeekam et al, 2023a; 2023b) have shown similar results for tasks that require complex planning.

MIT and Boston University researchers (Wu et al, 2023) performed several experiments in which they took eleven reasoning tasks on which LLMs (including GPT-4, Claude, and PaLM 2) achieved strong performance. These tasks spanned math, coding, syntactic analysis, logic, spatial reasoning, drawing, music, and games.

Their goal was to determine whether GPT-4 had learned a narrow, task-specific reasoning pattern or a more generalized reasoning process like the ones that people learn. For each task, they created a variant that requires the same reasoning process as the original but is less likely to have been encountered during LLM training.
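One of Wu et al.'s counterfactual variants is arithmetic in a base other than 10, such as base 9. The sketch below (the function `add_in_base` is illustrative) shows why this is a fair test: column-by-column addition with carrying is the same procedure in every base, and only the carry threshold changes. A model that has merely memorized base-10 patterns will tend to answer "92" for 27 + 65 even when told the numbers are in base 9.

```python
def add_in_base(x: str, y: str, base: int) -> str:
    """Add two numbers written as digit strings in a base from 2 to 10.

    The carrying procedure is identical for every base; only `base` changes.
    """
    if not 2 <= base <= 10:
        raise ValueError("this sketch handles bases 2 through 10 only")
    x, y = x[::-1], y[::-1]  # process least significant digit first
    result = []
    carry = 0
    for i in range(max(len(x), len(y))):
        dx = int(x[i]) if i < len(x) else 0
        dy = int(y[i]) if i < len(y) else 0
        if dx >= base or dy >= base:
            raise ValueError(f"digit not valid in base {base}")
        s = dx + dy + carry
        result.append(str(s % base))
        carry = s // base
    if carry:
        result.append(str(carry))
    return "".join(reversed(result))

print(add_in_base("27", "65", 10))  # 92
print(add_in_base("27", "65", 9))   # 103 (that is, 25 + 59 = 84 in decimal)
```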

Performance of the LLMs on these variants was poor, and the researchers concluded that the LLMs had essentially memorized very narrow, formulaic reasoning processes during their training. It should be noted that they did not test humans on these tasks, so they do not have evidence that humans really learn more generalized reasoning processes that they can apply to these tasks.

Similarly, a group of Princeton researchers (McCoy et al, 2023) performed a number of experiments demonstrating that LLMs perform worse on tasks that are rarely encountered in the training set than on tasks that are frequently encountered, even when the tasks are equally complex. For example, when asked to translate English sentences into Pig Latin, GPT-4 gets 42% accuracy when using the most common variant of Pig Latin but only 23% accuracy when using a rare variant. This is additional evidence that LLMs learn very narrow reasoning patterns rather than general abstract reasoning like people.

6.2.4 Moving the goalposts

Ever since GPT-2 was released and few-shot learning was first demonstrated, social media and the press have reported numerous dumb mistakes made by LLMs. For example, Yejin Choi, one of the leading NLP researchers in the field, gave a wonderful TED talk cleverly titled “AI is incredibly smart and shockingly stupid” in April 2023. She used two great examples to illustrate the “shockingly stupid” behavior of GPT-4:

Prompt: I left 5 clothes to dry out in the sun. It took them 5 hours to dry completely. How long would it take to dry 30 clothes?

GPT-4: It would take 30 hours to dry 30 clothes

Prompt: Would I get a flat tire by bicycling over a bridge that is suspended over nails, screws, and broken glass?

GPT-4: It is highly likely that you would get a flat tire if you bicycle over a bridge that is suspended over nails…

Yet, as of September, 2023, if you ask GPT-4 the first question, instead of the “shockingly stupid” explanation it produced in April, 2023, it generates this “incredibly smart” explanation: