4.0 Overview

Someone who has learned to program in one language (e.g. Python) will often find learning to program in a second language (e.g. Java) easier than it was to learn the first programming language. People report a similar phenomenon in learning natural languages (i.e. the languages people speak). People say that knowing how to ice skate makes learning to ski a little easier. However, knowing how to ski doesn’t help one learn a completely unrelated skill such as carpentry. The point here is that what people learn from one task often transfers over to the learning of another task.

The degree of transfer is a function of the similarity or relatedness of the two tasks. Learning one programming language will make it much easier to learn another programming language but will provide little or no benefit to learning to speak a different natural language. Transfer learning in humans was first studied well over 100 years ago by psychologists Edward Lee Thorndike and Robert Sessions Woodworth (Thorndike and Woodworth, 1901), who showed that people trained to estimate the sizes of rectangles were able to more quickly learn to estimate the sizes of other objects such as circles.

All the machine learning systems discussed to this point in this book have several important limitations:

- Limited to one task: These systems can perform only the specific task for which they were trained. In contrast, people seem to learn general capabilities (e.g. language skills and world knowledge) that can be applied to many tasks.

- Limited to one domain: These systems perform a specific task in a specific domain. For example, consider a system trained to identify positive vs. negative sentiment in Amazon reviews of kitchen appliances. If this system is then used to identify sentiment in DVD reviews, it will not do as well without retraining on a large set of labeled DVD review examples. As Glorot et al (2011a) noted, the reviews for kitchen appliances would contain adjectives such as “malfunctioning”, “reliable” or “sturdy”, and the reviews for DVDs would contain adjectives like “thrilling”, “horrific” and “hilarious”.

A more formal way of saying the same thing is that the distributions of words for kitchen appliances are different than for DVDs.

For image processing tasks, the same objects photographed with a low-resolution mobile phone camera and a high-resolution DSLR camera will have a different distribution and a classifier trained on one will often fail on the other. Even for the same type of camera, differences in illumination, photographer settings, resolution, pose, occlusion, and blur can cause a classifier to fail.

This phenomenon has been termed domain shift because what has happened is that the domain has changed unexpectedly. There are three issues that can cause domain shift:

(1) Different input features: For example, this would happen if the inputs for the upstream and downstream task domains are in different languages.

(2) Different input feature distributions: The input features for the upstream and downstream task domains are the same (e.g. each sample is composed of a set of words from the same vocabulary) but the relative frequencies of the words are different in the upstream and downstream task samples.

(3) Different probability distributions: The input features are the same for the upstream and downstream task domains but the input features have a different probabilistic relationship to the outputs in the two domains. Reviews of kitchen appliances vs. DVDs fall into this category (and in some cases into the different input features category, where words are used in one domain that are not used in the other).

- Exhibit catastrophic forgetting: ML systems can do one task very well. However, when the ML system is then trained to do another task, it completely forgets how to do the first task, a phenomenon that is termed catastrophic forgetting (McCloskey and Cohen, 1989).

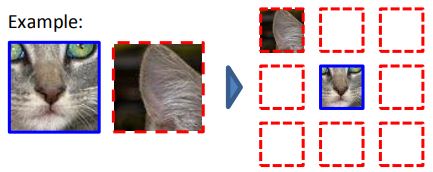

- Learn more slowly than people: To learn to recognize cats, an ML system needs thousands of examples of both cats and non-cats. In contrast, the first time a child sees a cat and an adult says “that is a cat”, the child forms a concept of a cat as an animate object that has 4 legs, 2 eyes, and a tail. Or maybe it takes two or three examples. But it doesn’t require thousands of examples. Children thus exhibit one-shot learning (i.e. learning based on just one example) or few-shot learning (i.e. learning based on a small number of examples). Then, when a child first sees a dog, they might mistake it for a cat. When they are corrected, they learn a concept for dog that includes the fact that dogs are bigger than cats. Again, this is one-shot or few-shot learning. Supervised learning systems require thousands of examples and cannot learn incrementally. Two goals of transfer learning are to create ML systems that exhibit one- or few-shot learning and can learn incrementally.

For natural language tasks, one could add a fifth limitation related to language. A system trained on a set of English to French examples cannot translate English to Chinese.

Additionally, from an engineering perspective, the lack of large labeled datasets is a barrier to the implementation of many supervised learning tasks. Manual labeling of datasets can be prohibitively expensive. Moreover, when very deep architectures are used for tasks with insufficient labeled data, overfitting will often occur. Wouldn’t it be better if it were possible to transfer some of what is learned by doing one task to a similar task in order to reduce or eliminate the need for labeled data?

This chapter examines the state of the art in learning techniques that have been developed to transfer some of what is learned from one task to other tasks. This general area of research is termed transfer learning.

In order for transfer learning to occur, something must be transferred from a system that has learned to perform a source task (an upstream task) to a system learning to do a target task (a downstream task). Whatever is transferred should increase performance on the target task.

Many existing transfer learning approaches assume only a small difference between the upstream and target tasks and/or domains (Jin, 2017) and there is a good reason for this: Let’s assume that we have developed a supervised learning prediction algorithm for one task (e.g. classification of medical images as indications of a particular type of brain cancer vs. absence of that type of brain cancer) – call it T1. This means we have learned the distribution of the input and output data for T1.

Now, suppose we want to learn a different task (e.g. classification of images for a different type of brain cancer) – call it T2. Now we need to learn a different distribution. Perhaps some of what we’ve learned about the distribution for T1 might be applicable to learning T2. However, this assumes that there are similarities in the T1 and T2 distributions. The more similar the two tasks and the two domains, the more similar the distributions, and the more likely it will be to find aspects of T1 that can help in learning the generalizations required for T2. At the same time, the more different the tasks, the less likely we are to find aspects of T1 that can help learn T2.

If everything learned in deep networks is task-specific and/or domain-specific, it would be hard to transfer the knowledge in these networks to new tasks and domains. As it turns out, deep networks tend to have layers that learn more general information.

In 2014, a group of researchers demonstrated that the initial layers of convolutional networks learn low-level features such as edges, lines, and curves. As one progresses through the layers of the network, the features tend to be more specific to the task (Yosinski et al, 2014).

Similarly, in the NLP area, Allen AI Institute researchers (Peters et al, 2018) created a two-layer language model and showed that the first layer holds syntactic information and the second layer holds semantic information. Various transfer learning approaches have been developed to transfer this more general knowledge to new tasks.

This chapter discusses the range of transfer learning methodologies. See Pfeiffer et al (2023) for a survey of transfer learning architectures.

4.1 Feature extraction

An early approach to transfer learning was to train a model on one task and extract portions of the model to be used as features to train on new tasks.

University of Toronto researchers (Kiros et al, 2015) trained a network on a corpus of books to take each sentence and predict the sentences immediately before and after it using an unsupervised encoder-decoder model. They used the output vector from the encoder as a set of features and applied logistic regression to the vector to learn new tasks. They were able to achieve robust, though not state of the art, results on eight different tasks including four sentiment detection tasks.

A group of OpenAI researchers (Radford et al, 2017) speculated that this system under-performed because the language model training occurred on a set of texts with very different distributional characteristics than those of the sentiment analysis task. Instead, they built a language model on a large database of product reviews. Then, when they applied a logistic regression model to the output of the encoder, they were able to achieve a state of the art sentiment detection score.
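The feature extraction recipe described above, a frozen encoder followed by a simple classifier, can be sketched as follows. This is a minimal illustration under stated assumptions, not the setup used by Kiros et al or Radford et al: `frozen_encoder` is a hypothetical stand-in that merely counts a few hand-picked words (a real system would use the output vector of a pre-trained encoder), and the reviews and labels are invented.

```python
import math

def frozen_encoder(text):
    """Hypothetical stand-in for a pre-trained encoder.

    Maps a text to a fixed feature vector and is never updated.
    """
    words = text.lower().split()
    positive = {"great", "reliable", "sturdy"}
    negative = {"broken", "malfunctioning", "awful"}
    return [sum(w in positive for w in words), sum(w in negative for w in words)]

def train_logistic_regression(features, labels, lr=0.5, epochs=200):
    """Fit a logistic regression classifier with plain stochastic gradient descent."""
    weights, bias = [0.0] * len(features[0]), 0.0
    for _ in range(epochs):
        for x, y in zip(features, labels):
            z = sum(w * v for w, v in zip(weights, x)) + bias
            p = 1.0 / (1.0 + math.exp(-z))
            error = p - y  # gradient of the log loss with respect to z
            weights = [w - lr * error * v for w, v in zip(weights, x)]
            bias -= lr * error
    return weights, bias

def predict(weights, bias, x):
    """Return 1 (positive) or 0 (negative) for a feature vector."""
    return 1 if sum(w * v for w, v in zip(weights, x)) + bias > 0 else 0

# Invented training data for the downstream sentiment task.
texts = [
    "great sturdy mixer",
    "reliable and great",
    "broken on arrival",
    "awful malfunctioning toaster",
]
labels = [1, 1, 0, 0]

features = [frozen_encoder(t) for t in texts]  # only the classifier is trained
weights, bias = train_logistic_regression(features, labels)
print([predict(weights, bias, f) for f in features])
```

Only the classifier's handful of weights are learned on the downstream task, which is why this approach needs relatively little labeled data.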

4.2 Word embeddings

Another approach is to use word embeddings to initialize networks, which improves performance on many natural language processing tasks (e.g. Socher et al., 2013; Sutskever et al., 2014; Modi and Titov, 2014; Chen and Manning, 2014; Ma and Hovy, 2016; Nguyen et al., 2017; Qi et al, 2018). This is a form of transfer learning because the semantic knowledge encapsulated in the word embeddings enables faster and more accurate supervised training.

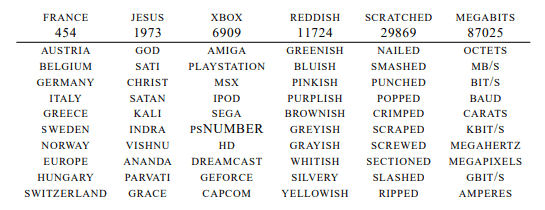

The semantic knowledge encapsulated in word embeddings was first shown by a group of NEC researchers (Collobert et al, 2011) who created a set of 50-dimension word embeddings by training a network to distinguish correct from incorrect word sequences. Correct word sequences were extracted from English texts. They found that these word embeddings capture some of the semantics (meaning) of the words. When they examined a distance measure (the Euclidean distance) between the 50-dimension word embedding vectors, they found that related words were close together.

Source: Collobert et al (2011)

The figure above shows the ten closest word embeddings for each of six words. The number below the word is a ranking of its frequency in the dataset (high numbers are rarer). It isn’t surprising that related words end up close together because words that occur together in natural language utterances and text are often semantically related.
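The nearest-neighbor lookup behind a figure of this kind can be sketched in a few lines. The three-dimensional vectors below are invented for illustration (the embeddings discussed above had 50 dimensions and were learned from text), and the function names are hypothetical.

```python
import math

# Toy embeddings, invented for illustration only.
EMBEDDINGS = {
    "france": [0.90, 0.10, 0.00],
    "spain": [0.80, 0.20, 0.10],
    "italy": [0.85, 0.15, 0.05],
    "xbox": [0.00, 0.90, 0.80],
    "playstation": [0.10, 0.80, 0.90],
}

def euclidean(u, v):
    """Euclidean distance between two vectors of equal length."""
    return math.sqrt(sum((a - b) ** 2 for a, b in zip(u, v)))

def nearest_neighbors(word, k=2):
    """Return the k words whose embeddings are closest to the given word."""
    query = EMBEDDINGS[word]
    distances = [
        (euclidean(query, vec), other)
        for other, vec in EMBEDDINGS.items()
        if other != word
    ]
    return [other for _, other in sorted(distances)[:k]]

print(nearest_neighbors("france"))     # the two country words
print(nearest_neighbors("xbox", k=1))  # the other console word
```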

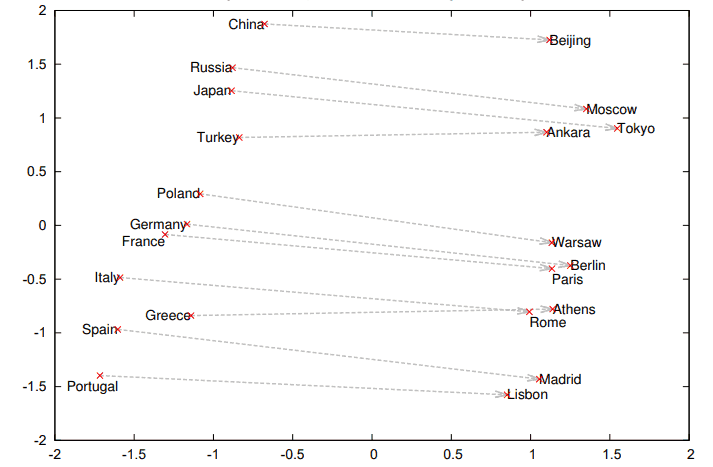

Similarly, Microsoft researchers (Mikolov et al, 2013a) studied word2vec word embeddings and found some very interesting properties. For example, they discovered that the male/female relationship is encoded. You can see this by doing math on the vectors corresponding to the words “king”, “queen”, “man” and “woman”. If you do the vector math “king – man + woman”, the resulting vector is very close to the vector for “queen”. Similarly, the concept of plurals is encoded as can be seen from the fact that the vector resulting from “apple – apples” is almost identical to the vector resulting from “car – cars”.

Source: Mikolov et al, 2013

The figure above shows how a similar algorithm captures the relationship between countries and their capital cities.
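The “king – man + woman” arithmetic described above can be reproduced on toy data. The two-dimensional vectors here are hand-made so that one axis loosely stands for royalty and the other for gender. Real word2vec vectors are learned and have hundreds of dimensions. Using cosine similarity to pick the closest word is an assumption of this sketch.

```python
import math

# Hand-made vectors (axis 0 ~ "royalty", axis 1 ~ "gender"); illustration only.
VECTORS = {
    "king": [1.0, 1.0],
    "queen": [1.0, -1.0],
    "man": [0.0, 1.0],
    "woman": [0.0, -1.0],
    "apple": [-1.0, 0.0],
}

def cosine(u, v):
    """Cosine similarity between two non-zero vectors."""
    dot = sum(a * b for a, b in zip(u, v))
    norms = math.sqrt(sum(a * a for a in u)) * math.sqrt(sum(b * b for b in v))
    return dot / norms

def analogy(a, b, c):
    """Return the word closest to vec(a) - vec(b) + vec(c), excluding a, b and c."""
    target = [x - y + z for x, y, z in zip(VECTORS[a], VECTORS[b], VECTORS[c])]
    candidates = [w for w in VECTORS if w not in (a, b, c)]
    return max(candidates, key=lambda w: cosine(VECTORS[w], target))

print(analogy("king", "man", "woman"))
```

With these toy vectors the result is “queen”, because subtracting “man” and adding “woman” flips only the gender axis.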

Other researchers (Tshitoyan et al, 2019) showed that word embeddings derived from a database of materials science articles capture complex materials science concepts such as the underlying structure of the periodic table and structure–property relationships in materials.

These findings have a correlate in cognitive psychology known as the Distributional Hypothesis (e.g. McDonald and Ramscar, 2001). The distributional hypothesis states that when words tend to appear in the same contexts, they have similar meanings. McDonald and Ramscar cite several psychological studies that directly indicate that both children and adults make use of distributional statistics (i.e. co-occurrence frequencies of words) in learning languages. The distributional hypothesis follows a well-known linguistic theory put forth in 1957 by J.R. Firth (Firth, 1957):

“You shall know a word by the company it keeps”

Another frequently used form of word embedding is GloVe which was developed by Stanford researchers (Pennington et al, 2013). They use a different unsupervised learning technique for creating vector representations that can then be used as the input layer to supervised learning algorithms.

Two limitations of word2vec and GloVe word embeddings are that they cannot handle words not encountered in the training corpus and that multiple vectors are required for morphological variants. Facebook researchers (Bojanowski et al, 2017) used a different approach to creating word embeddings that overcomes these issues. Their system is named fastText and an open source version is available with word vectors in 157 languages.

Instead of learning a vector for each whole word, their system learned a vector for each character N-gram of a word. The N-grams used ranged from n=3 to n=6 characters (or up to the size of the word plus a beginning of word symbol and an end of word symbol, if that is shorter) plus the word itself (including beginning and ending symbols). The vector for a word is then the sum of the learned vectors for its N-grams. The advantage of N-grams over full words is that the system will work better on rare, technical, and other out-of-vocabulary words.
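A minimal sketch of the N-gram decomposition just described is shown below. The boundary symbols `<` and `>` and the function names are assumptions of this sketch, and the tiny vector table is invented. This is not the fastText implementation.

```python
def char_ngrams(word, n_min=3, n_max=6):
    """Split a word into character N-grams after adding boundary symbols."""
    wrapped = "<" + word + ">"
    grams = []
    for n in range(n_min, n_max + 1):
        for i in range(len(wrapped) - n + 1):
            grams.append(wrapped[i:i + n])
    if wrapped not in grams:
        grams.append(wrapped)  # the whole word, including boundary symbols
    return grams

def word_vector(word, ngram_vectors, dim):
    """Sum the vectors of all known N-grams of a word."""
    total = [0.0] * dim
    for gram in char_ngrams(word):
        vec = ngram_vectors.get(gram)
        if vec is not None:
            total = [t + v for t, v in zip(total, vec)]
    return total

print(char_ngrams("where", n_min=3, n_max=3))

# Invented N-gram vectors; a trained model would learn these.
toy_table = {"<wh": [1.0, 0.0], "re>": [0.0, 2.0]}
print(word_vector("where", toy_table, dim=2))
print(word_vector("nowhere", toy_table, dim=2))  # unseen word, shares "re>"
```

Because “nowhere” shares N-grams with “where”, it receives a non-zero vector even if it never appeared as a whole word during training.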

Many researchers use existing word embeddings such as word2vec, GloVe, or fastText in their research due to the effort required to train their own word embeddings.

The biggest limitation of word embeddings is that many words have multiple word senses. Therefore, multiple senses for a word need to be embedded in a single word vector.

To get around this limitation, researchers have developed word embeddings that also store the context of the training sentences containing the word. A team of Israeli researchers (Melamud et al, 2016) created a system named context2vec. This system learns not only the word embeddings but learns embeddings for the context around the word. Melamud et al noted that earlier researchers represented these contexts by using word vectors placed in the same order as the original words in the sentence as input to an RNN network. They found it to be a useful technique in several natural language processing tasks.

To take this one step further, they trained a bidirectional LSTM RNN network. One half of the network processed the sentence words left to right and the other processed them right to left. For example, consider the context for the word “make” in the sentence

Please make the bed

The first network learns a representation of the words to the left of “make” (i.e. “Please”) and the second network learns a representation of the words to the right (“the bed”). The outputs of the left-to-right and right-to-left networks are then input to another network whose job is to combine the two. The result is a sentence context embedding for the word “make”. A standard (e.g. word2vec) word embedding for the word “make” is then paired with its sentence context embedding, and both are used in the initial layer of a supervised network designed to learn a specific natural language processing task. They achieved state of the art results on several different tasks including sentence completion, lexical substitution, and word sense disambiguation.

Contextualized word embeddings derived from language models (see below) have been shown to improve performance for many NLP tasks relative to vanilla word embeddings like word2vec. Allen AI Institute researchers (Peters et al, 2018) created the cleverly-named ELMo (Embeddings from Language Models) representations by training a bidirectional LSTM model using a language model objective. They showed that by using the internal states of the model as a starting point for supervised models, they were able to significantly improve performance on six NLP tasks. Researchers (Tenney et al, 2019; Manning et al, 2020) have shown that virtually all of this advantage is due to the ability of language models to encode syntactic information.

Microsoft researchers (Gong et al, 2020) created another type of word embedding, FRequency-AGnostic word Embedding (FRAGE). They discovered that prior word embedding methodologies created word embedding spaces in which the overall frequency of words was an important dimension. They argue that their FRAGE embeddings are an improvement over prior work because overall word frequency is ignored.

Subword embeddings have also proven useful for several tasks such as out-of-vocabulary word handling.

Unfortunately, word embeddings only capture tiny subsets of the full meanings of words (Lucy and Gauthier, 2017). When people learn the concept of a car, they also learn many features of the car concept. For example, a car has wheels, doors, an engine, and a steering wheel. We also learn that cars are a form of transportation, they carry passengers, they move faster than people, accidents involving cars can cause serious injury, and much more.

Language models…

4.3 Fine-tuning language models

A language model captures the likelihood of a sequence of words occurring in a particular language. It can be built by counting N-grams in a large corpus of text or by training a network with a form of unsupervised deep learning termed self-supervised learning, for example by training the network to predict each next word in a text (autoregressive modeling) or to predict words that have been left out of the input (masked language modeling). The subsections below first describe language models and then show how pre-trained language models are fine-tuned for downstream tasks.

4.3.1 Language models

A language model captures the likelihood of a sequence of words occurring for a particular language. It learns this by analyzing a large corpus of text (e.g. billions of words of Google News stories) and seeing which words typically follow other words and word sequences. A language model for English could be created by analyzing news text and counting the number of times each pair of words (known as bigrams) occur together. One could also do this with trigrams (3-word sequences) or more generally with n-grams (n-word sequences).
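The bigram counting approach can be sketched as follows. The three-sentence corpus is invented and far too small to be useful. The sketch only shows how pair counts turn into next-word probabilities, and the function names are hypothetical.

```python
from collections import Counter, defaultdict

def train_bigram_model(sentences):
    """Count how often each word follows each other word."""
    counts = defaultdict(Counter)
    for sentence in sentences:
        words = sentence.lower().split()
        for prev, nxt in zip(words, words[1:]):
            counts[prev][nxt] += 1
    return counts

def next_word_probability(counts, prev, nxt):
    """Estimate P(nxt | prev) as a relative frequency (0.0 if prev is unseen)."""
    total = sum(counts[prev].values())
    return counts[prev][nxt] / total if total else 0.0

corpus = ["the cat sat on the mat", "the cat ate", "the dog sat"]
model = train_bigram_model(corpus)

# "the" is followed by cat, mat, cat, dog in this corpus.
print(next_word_probability(model, "the", "cat"))
print(next_word_probability(model, "the", "dog"))
```

The same counting scheme extends to trigrams and longer N-grams by using the preceding n-1 words as the key.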

Language models can also be trained using self-supervised learning. Unlike supervised learning, self-supervised learning doesn’t require massive amounts of manually labeled data. It can take advantage of the massive amounts of unlabeled data available in Wikipedia, social media, and the whole internet. Self-supervised learning resembles semi-supervised learning in that both address the shortage of labeled observations for supervised training. However, semi-supervised learning requires an initial set of labeled observations, whereas in self-supervised learning all the labels are created by the system from the data itself.

A common self-supervised learning paradigm for creating language models is to train models in a supervised fashion to predict each next word in the text. Rather than needing a manually generated label for each training observation, each training observation is composed of the words preceding a given word, which serve as the input, plus that word itself, which serves as the label. The model is thus trained to predict each word in a body of text from the preceding words.

For example, suppose I want to train a language model on Wikipedia. One sentence from Wikipedia is

The United States of America is a country consisting of fifty states, a federal district, five major self-governing territories, and various possessions.

The table below shows how a series of supervised examples can be generated from this sentence:

| Input | Output |
| --- | --- |
| The | United |
| The United | States |
| The United States | of |
| The United States of | America |
| The United States of America | is |
| The United States of America is | a |
| The United States of America is a | country |
| The United States of America is a country | consisting |
| The United States of America is a country consisting | of |
| The United States of America is a country consisting of | fifty |

One can train a model on a large body of text (e.g. all of Wikipedia) in this fashion without needing any manually created labels. Models trained this way on very large bodies of text are termed large language models. Then, when the model is provided with an input string, it will predict the next word. By repeatedly doing this, a language model can generate social media snippets, stories, answers to questions, and even code.
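Generating these training examples from raw text requires no manual labeling, as the following sketch illustrates. Splitting on whitespace is a simplifying assumption (real systems use subword tokenizers), and the function name is hypothetical.

```python
def next_word_examples(text, max_examples=None):
    """Turn a text into (preceding words, next word) training pairs."""
    words = text.split()
    examples = [(" ".join(words[:i]), words[i]) for i in range(1, len(words))]
    return examples if max_examples is None else examples[:max_examples]

sentence = "The United States of America is a country consisting of fifty states"
for context, label in next_word_examples(sentence, max_examples=3):
    print(repr(context), "->", repr(label))
```

A sentence of n words yields n-1 labeled examples, so a corpus the size of Wikipedia yields billions of them.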

When the model is used to repeatedly generate next words, the self-supervised technique is known as autoregressive modeling. In masked language modeling, a model is trained to predict portions of the input that are left out (or masked). Masked language modeling has also been used effectively to generate language models.

The context length of a language model determines how many tokens it considers when predicting the next token. For the large language models discussed in the next chapter, the context length can be 2,000, 4,000, or 100,000 tokens or more.

The next chapter will discuss large language models that have billions of parameters and are trained on massive datasets. These large language models are capable of performing many tasks without further training (zero-shot learning) or by including just a few examples (few-shot learning) along with the input question.

4.3.2 Fine-tuning

The process of pre-training a network on one task, changing the output layer, and re-training part or all of the network on a new dataset is known as fine-tuning. Fine-tuning is more widely used than feature extraction though there is some evidence that the two methodologies may be equally effective (Peters et al, 2019).
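The “changing the output layer” step can be sketched with plain Python lists standing in for weight matrices. Everything here is illustrative: the dictionary layout, the layer sizes, and the function names are assumptions of this sketch, and the subsequent re-training step is not shown.

```python
import random

def new_output_layer(num_inputs, num_classes, seed=0):
    """Randomly initialize a fresh output layer (one weight row per class)."""
    rng = random.Random(seed)
    return [
        [rng.uniform(-0.1, 0.1) for _ in range(num_inputs)]
        for _ in range(num_classes)
    ]

def prepare_for_fine_tuning(pretrained, num_new_classes):
    """Keep the pre-trained hidden weights and swap in a new output layer."""
    hidden_size = len(pretrained["output"][0])
    return {
        "hidden": [row[:] for row in pretrained["hidden"]],  # copied, not re-initialized
        "output": new_output_layer(hidden_size, num_new_classes),
    }

# Invented "pre-trained" weights: 3 hidden units over 2 inputs, 4 upstream classes.
pretrained = {
    "hidden": [[0.2, -0.1], [0.4, 0.3], [-0.5, 0.1]],
    "output": [[0.1, 0.2, 0.3] for _ in range(4)],
}

model = prepare_for_fine_tuning(pretrained, num_new_classes=2)
print(len(model["output"]), "classes,", len(model["output"][0]), "inputs each")
```

Training then continues on the new dataset, updating either the new output layer alone or some or all of the copied layers as well.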

Two NYU researchers (Zeiler and Fergus, 2014) took all seven layers from a convolutional network trained on ImageNet and used the trained layers as a starting point for training on two much smaller image classification datasets. They changed only the last classification layer, i.e. instead of the ImageNet categories, the classification layer contained the categories of the new dataset. After training on the new dataset, the network produced state of the art classification performance at the time on both of the smaller datasets. In contrast, the same network trained on these two smaller datasets, but with the layers initialized randomly, produced poor performance presumably because the datasets didn’t have enough training examples.

Similar results have been found for NLP. Google researchers Dai and Le (2015) used two different unsupervised techniques to pre-train LSTM network layers that could then be used for text classification tasks. The first approach was to train a conventional language model to predict what comes next in a sequence (autoregressive training). The second was to use an autoencoder to recreate a sequence. Initializing an LSTM network for a series of text classification tasks with the hidden layers from these unsupervised tasks significantly improved performance with a reduced amount of training on the downstream supervised text classification tasks. Howard and Ruder (2018) showed that this methodology could be applied to reduce the amount of training data needed for the downstream tasks by 100x.

The most impressive NLP performance has involved pre-trained language models that are fine-tuned for downstream tasks.

GPT (Generative Pre-Training) was created by a group of OpenAI researchers (Radford et al, 2018). These researchers were the first to apply the fine-tuning approach to language models using a Transformer architecture which enabled better capture of long-range dependencies than the earlier LSTM networks. The model had 117 million parameters and was trained on the BooksCorpus dataset (Zhu et al, 2015) which is a large collection of 11,038 free books containing approximately one billion words and a total of 4.5GB of text.

The hope was that this would cause the model to learn a “universal representation” that transfers to a wide variety of tasks. More specifically, for each task, the language model was fine-tuned for the specific task. Fine-tuning means starting with the pre-trained language model parameters, adding a task-specific output layer to the network, and providing additional training. For GPT-1, the tasks included natural language inference, question answering, semantic similarity, and text classification. The task-specific training used supervised learning.

By starting with GPT and then further training the model using the dataset for a specific NLP benchmark, the OpenAI researchers were able to achieve results that were state of the art at the time on 9 of 12 NLP benchmarks. In each of those cases, GPT beat a system specifically architected for that task. See the chapter on Large Language Models for a discussion of these and other NLP benchmarks.

One limitation of GPT is that it uses an objective based on a forward-looking language model (i.e. trying to predict the next word). GPT uses attention to look at all context words simultaneously but the language model objective itself is forward-looking only. This is a significant restriction because the language model training at any time step can only consider the current and prior words in the sentence. However, the entire sentence context is important for natural language processing tasks such as question answering.

GPT is an example of a decoder-only model because it takes as input the preceding tokens and generates the most likely next token.

BERT (Devlin et al, 2018) is like GPT because it uses a Transformer architecture. However, it improves on GPT by using a pre-training task that is more likely to capture bidirectional context. Instead of doing unidirectional language model training, the BERT pre-training task uses masked language modeling which is a form of the denoising autoencoders discussed in the chapter on unsupervised learning. It randomly masks some of the input tokens and the objective is to predict the masked tokens.

This forces it to consider both left-to-right and right-to-left information. Each input training sequence contained two sentences from an unlabeled corpus consisting of 2.5 billion words of Wikipedia data plus 800 million words from books. Half the time, the second sentence was the next sentence in the corpus and half the time the second sentence was randomly selected. In addition to predicting the masked words, the system had to predict whether the second sentence was the next one in the corpus. The larger of the two original BERT models had 340 million parameters.
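The creation of masked language modeling examples can be sketched as follows. This is a simplified illustration, not the BERT preprocessing code: the 15% masking rate, the `[MASK]` symbol, and whitespace tokenization are assumptions of this sketch, and the additional replacement rules used in practice are omitted.

```python
import random

MASK = "[MASK]"

def mask_tokens(tokens, mask_prob=0.15, seed=0):
    """Randomly hide tokens and record the originals as prediction targets.

    Returns (masked_tokens, labels), where labels[i] is the original token
    at a masked position and None everywhere else.
    """
    rng = random.Random(seed)
    masked, labels = [], []
    for token in tokens:
        if rng.random() < mask_prob:
            masked.append(MASK)
            labels.append(token)
        else:
            masked.append(token)
            labels.append(None)
    return masked, labels

tokens = "the chef cooked the meal and served it to the guests".split()
masked, labels = mask_tokens(tokens, mask_prob=0.3, seed=1)
print(masked)
print(labels)
```

Because a masked token can sit anywhere in the sequence, the model must use the words on both sides of it to recover the original.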

BERT is an encoder-only model because it produces embeddings rather than generating outputs.

The researchers who developed BERT evaluated it on 11 natural language processing tasks:

- A set of 8 of the 9 tasks that comprise the GLUE (Wang et al, 2018) multi-task NLP benchmark:

  - CoLA (Warstadt et al, 2018) – Determine if a sentence is grammatically correct

  - SST-2 (Socher et al, 2013) – Determine if a movie review has a positive or negative sentiment

  - MRPC (Dolan and Brockett, 2005) – Determine if two news article sentences are paraphrases of one another

  - QQP (Iyer et al, 2017) – Determine if two Quora questions are paraphrases of one another

  - STS-B (Cer et al, 2017) – Determine degree of semantic similarity between two sentences

  - MNLI (Williams et al, 2018), QNLI (Wang et al, 2018), RTE – Determine if a first sentence entails or contradicts a second sentence

- SQuAD v1 (Rajpurkar et al, 2016) – Given a question and a Wikipedia paragraph, identify the span of words in the paragraph that best answers the question

- CoNLL 2003 (Sang et al, 2003) – Determine if a word is a person, organization, location, miscellaneous, or other

- SWAG (Zellers et al, 2018) – Given a sentence, determine which of four continuations is the most plausible

Using a BERT-based language model followed by task-specific fine-tuning on each task produced state of the art results on all 11 NLP tasks at the time of its 2018 publication. More importantly, the state of the art performance was achieved by adding just one additional layer to the network for each task.

Over the next couple of years, many improvements were made to the BERT approach:

- CMU’s XLNet (Yang et al, 2020) learned bidirectional contexts and outperformed BERT on 20 tasks.

- Meta’s RoBERTa (Liu et al, 2019) and BART (Lewis et al, 2020) dramatically improved performance by modifying how BERT was trained.

- ByteDance’s AMBERT (Zhang and Li, 2020) improved performance by using a different method of tokenization.

- Baidu’s ERNIE (Sun et al, 2019) added masking of phrasal entities (i.e. all the words in an entity reference) to the BERT training process and achieved state of the art results on several Chinese NLP benchmarks.

- ERNIE 3.0 (Sun et al, 2021) used a fusion of auto-regressive and auto-encoding networks with 10 billion parameters to achieve state of the art performance on 54 Chinese language NLP benchmarks.

- FLAN (Wei et al, 2022) is a Google language model that was fine-tuned using instructions from 60+ NLP datasets, a process they term instruction tuning. Performance was then tested on 25 NLP tasks that were not present during training and its performance surpassed GPT-3 on 20 of the 25 tasks.

This approach is particularly useful for training on tasks for which there is only a relatively small amount of labeled training data. One can train on other tasks that have large amounts of data and then fine-tune on tasks with only small amounts of data. For example, this has proven useful for improving machine translation for low-resource languages. Initializing the encoder and decoder with a pre-trained language model results in improved performance for low-resource languages that don’t have a large body of training data (Johnson et al, 2017).

Fine-tuning can be done either on a model trained using supervised learning or on a model trained using self-supervised learning, such as a language model. When the model is pre-trained with supervised learning on a classification task, the output layer must first be changed to fit the target. When the model is pre-trained using self-supervised learning, the model output layer must be converted to fit a classification task.

4.3.3 Parameter-efficient fine-tuning

One way of taking advantage of pre-trained language models without the expense of fine-tuning an entire language model is adapter tuning. The idea is to freeze the weights of the language model but to add a relatively small number of weights to the model that are fine-tuned for each task.

Google researchers (Houlsby et al, 2019) added adapter modules composed of weights in between the layers of a BERT language model. When fine-tuning the model for a specific task, only these new weights are updated. The number of added task-specific weights is only 3.6% of the original BERT weights. The resulting model attained near state of the art performance on a wide range of text classification tasks.
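One common way to build such an adapter module is as a small bottleneck with a skip connection. The sketch below assumes that design. The ReLU nonlinearity, the tiny dimensions, and the names `w_down` and `w_up` are choices made for illustration, and the surrounding frozen layers are not shown.

```python
def matvec(matrix, vector):
    """Multiply a matrix (list of rows) by a vector."""
    return [sum(m * v for m, v in zip(row, vector)) for row in matrix]

def adapter(hidden, w_down, w_up):
    """Bottleneck adapter: project down, apply ReLU, project up, add the input back.

    Only w_down and w_up would be trained; the rest of the model stays frozen.
    """
    bottleneck = [max(0.0, z) for z in matvec(w_down, hidden)]
    update = matvec(w_up, bottleneck)
    return [h + u for h, u in zip(hidden, update)]

hidden = [1.0, -2.0, 3.0]                     # output of a frozen layer (size 3)
w_down = [[1.0, 0.0, 0.0], [0.0, 1.0, 0.0]]   # 3 -> 2 projection
w_up = [[0.5, 0.0], [0.0, 0.0], [1.0, 1.0]]   # 2 -> 3 projection

print(adapter(hidden, w_down, w_up))

# With an all-zero up-projection the adapter passes its input through unchanged,
# so the pre-trained model's behavior is preserved at the start of training.
zero_up = [[0.0, 0.0] for _ in range(3)]
print(adapter(hidden, w_down, zero_up))
```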

A similar approach was shown to work for vision-based adaptation of Transformer vision models (Rebuffi et al, 2018). And a more recent study (Mahabadi et al, 2021) showed how to trim the number of adapter parameters to 0.047% of the pre-trained model’s parameters.

Another parameter-efficient fine-tuning paradigm is to add parameters to the front of the model and to fine-tune only these parameters. This is known as prefix tuning. Several researchers (Li and Liang, 2021; Lester et al, 2021; Liu et al, 2021) added as few as 0.1% of the model parameters as prefix layers. They obtained performance comparable to full fine-tuning and outperformed full fine-tuning in a low-resource setting.
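A minimal sketch of the simplest form of this idea follows, in which a small matrix of trainable vectors is prepended to the frozen token embeddings at the input only. The sizes and names (`soft_prompt`, `build_model_input`) are hypothetical, and the published methods differ in detail; for example, some add prefix parameters at every layer rather than only at the input.

```python
import numpy as np

rng = np.random.default_rng(0)
vocab_size, d_model, prompt_len = 1000, 64, 5  # hypothetical sizes

frozen_embeddings = rng.normal(size=(vocab_size, d_model))        # frozen model weights
soft_prompt = rng.normal(0.0, 0.02, size=(prompt_len, d_model))   # the only trainable weights


def build_model_input(token_ids, soft_prompt, embeddings):
    """Prepend the trainable prompt vectors to the frozen token embeddings."""
    token_vectors = embeddings[token_ids]
    return np.concatenate([soft_prompt, token_vectors], axis=0)


token_ids = np.array([12, 7, 431])
model_input = build_model_input(token_ids, soft_prompt, frozen_embeddings)

# Fraction of weights that would be updated during tuning in this toy setup
trainable_fraction = soft_prompt.size / (soft_prompt.size + frozen_embeddings.size)
```

The rest of the model would process `model_input` as usual, with gradients flowing back only into `soft_prompt`.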

Other researchers (Mao et al, 2021; He et al, 2022) have explored combining adapter tuning and prefix tuning with even better results.

Microsoft researchers (Hu et al, 2021) developed Low-Rank Adaptation (LoRA), which injects trainable low-rank matrices into each layer while the original weights stay frozen. Compared with full fine-tuning of GPT-3, LoRA reduces the number of trainable parameters by 10,000 times and memory requirements by 3 times.
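The following sketch shows the low-rank idea on a single weight matrix. It is an illustration under assumptions: the class name, the matrix sizes, the rank, and the scaling factor are hypothetical choices and do not reproduce the settings used for GPT-3.

```python
import numpy as np


class LoRALinear:
    """Frozen weight matrix plus a trainable low-rank update."""

    def __init__(self, w_frozen, rank, alpha=1.0, seed=0):
        rng = np.random.default_rng(seed)
        d_in, d_out = w_frozen.shape
        self.w_frozen = w_frozen                               # never updated
        self.down = rng.normal(0.0, 0.01, size=(d_in, rank))   # trainable
        self.up = np.zeros((rank, d_out))                      # trainable, starts at zero
        self.scale = alpha / rank

    def __call__(self, x):
        # Output of the frozen layer plus the scaled low-rank correction
        return x @ self.w_frozen + self.scale * (x @ self.down @ self.up)

    def trainable_params(self):
        return self.down.size + self.up.size


rng = np.random.default_rng(1)
w = rng.normal(size=(512, 512))
layer = LoRALinear(w, rank=4)
x = rng.normal(size=(2, 512))
y = layer(x)
```

With these toy sizes the update has 4,096 trainable values against 262,144 frozen ones, and because the up-projection starts at zero the layer initially behaves exactly like the frozen layer.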

QLoRA (Dettmers et al, 2023) is a technique that applies LoRA to a 4-bit quantized language model. Quantization is a technique that speeds up inference on frozen language models by storing parameter values as integers instead of floating point numbers. Quantization isn’t used for training models due to the loss of fidelity but works fine for inference. However, quantization can be used for the frozen parameters of the language model while the non-quantized LoRA parameters are updated during fine-tuning. This speeds up the fine-tuning process.
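To illustrate what storing parameters as low-bit integers involves, here is a sketch of simple absmax quantization. It is an assumption made for illustration and is not the specific 4-bit data type used by QLoRA; the function names are hypothetical.

```python
import numpy as np


def quantize_absmax(weights, bits=4):
    """Map floats to signed integers in [-qmax, qmax] using a single scale factor."""
    qmax = 2 ** (bits - 1) - 1  # 7 for 4 bits
    scale = np.max(np.abs(weights)) / qmax
    q = np.clip(np.round(weights / scale), -qmax, qmax).astype(np.int8)
    return q, scale


def dequantize(q, scale):
    """Recover approximate float values from the stored integers."""
    return q.astype(np.float64) * scale


weights = np.random.default_rng(0).normal(size=1000)
q, scale = quantize_absmax(weights, bits=4)
restored = dequantize(q, scale)
max_error = np.max(np.abs(weights - restored))
```

The rounding error per weight is at most half of `scale`, which is the loss of fidelity mentioned above. In a QLoRA-style setup the frozen weights would be stored in quantized form while the small set of added LoRA weights stays in floating point.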

Collectively, these fine-tuning methods are known as parameter-efficient fine-tuning (PEFT).

4.4 Cross-lingual transfer

The monolingual language models discussed above increase performance and reduce required training on monolingual natural language processing tasks. In a similar fashion, researchers have developed multilingual language models that enable cross-lingual transfer. This is particularly important for developing NLP capabilities in low-resource languages.

A model is pre-trained on corpora from multiple languages to create a multilingual representation. It is then fine-tuned on a specific NLP task in one language and tested on other languages to determine the degree to which the multilingual representation enables transfer of what was learned about the NLP task in the trained language to the untrained language(s).

Meta and NYU researchers (Conneau et al, 2018) developed the XNLI dataset for measuring and comparing the transferability of multilingual models. XNLI contains 7,500 natural language inference (NLI) test questions that have been translated into 15 languages, including languages with non-Roman character sets like Chinese and low-resource languages like Swahili and Urdu. The dataset measures the ability to fine-tune a multilingual language model in English and then test the zero-shot NLI performance in other languages.

XLM is a multilingual language model created by Meta researchers (Conneau and Lample, 2019). They created a shared vocabulary among their 15 languages by breaking down the training corpora into a sequence of symbols using byte pair encoding. Initially, the symbols are the characters plus a special character to mark the end of words. Then the most common two-symbol sequences are found and replaced with a new symbol. This is done over and over to create a shared vocabulary.
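The merge procedure described above can be sketched in a few lines. This is a toy version run on four English words with made-up counts, not the XLM preprocessing pipeline; the function name and the `</w>` end-of-word marker are assumptions.

```python
from collections import Counter


def learn_bpe(word_counts, num_merges):
    """Learn byte pair encoding merges from a dictionary of word frequencies."""
    # Start with characters plus a special end-of-word symbol
    vocab = {tuple(word) + ("</w>",): count for word, count in word_counts.items()}
    merges = []
    for _ in range(num_merges):
        # Count every adjacent symbol pair, weighted by word frequency
        pair_counts = Counter()
        for symbols, count in vocab.items():
            for pair in zip(symbols, symbols[1:]):
                pair_counts[pair] += count
        if not pair_counts:
            break
        best = max(pair_counts, key=pair_counts.get)
        merges.append(best)
        # Replace each occurrence of the most common pair with one new symbol
        new_vocab = {}
        for symbols, count in vocab.items():
            merged, i = [], 0
            while i < len(symbols):
                if i + 1 < len(symbols) and (symbols[i], symbols[i + 1]) == best:
                    merged.append(symbols[i] + symbols[i + 1])
                    i += 2
                else:
                    merged.append(symbols[i])
                    i += 1
            key = tuple(merged)
            new_vocab[key] = new_vocab.get(key, 0) + count
        vocab = new_vocab
    return merges, vocab


word_counts = {"low": 5, "lower": 2, "newest": 6, "widest": 3}  # toy corpus
merges, vocab = learn_bpe(word_counts, num_merges=3)
print(merges)
print(vocab)
```

On this toy corpus the three merges build up the frequent suffix `est</w>`, which then becomes a single vocabulary symbol shared by "newest" and "widest". Running the same procedure over corpora from many languages is what yields a shared vocabulary.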

They created a cross-lingual language Transformer model and fine-tuned it on XNLI by adding a linear classifier on top of the first hidden state of the final Transformer hidden layer. They fine-tuned on English XNLI and tested on the other languages. The system outperformed a multilingual BERT language model and two other multilingual models (Artetxe and Schwenk, 2019; Conneau et al, 2018). Most importantly, the model transferred fairly well to the low-resource languages (Swahili and Urdu).

The multilingual BERT model, which also produced good results, was simultaneously trained on 104 languages using Wikipedia data (compared to 15 for XLM). The multilingual BERT model has also been shown to provide cross-language transfer on four other natural language processing tasks (document classification, named entity recognition, POS tagging, and dependency parsing) (Wu and Dredze, 2019).

In 2020, the same research group (Conneau et al, 2020) trained a language model with examples from over 100 languages. This model, named XLM-R, exhibited comparable performance to state of the art monolingual models on the GLUE and XNLI NLP benchmarks.

As discussed in Chapter 10, this group (Babu et al, 2021) also developed XLS-R for speech recognition. XLS-R can translate between English speech and 21 other languages, including some low-resource languages. This research showed that a large enough model can perform as well with cross-lingual pre-training as with English-only pre-training when translating English speech into other languages.

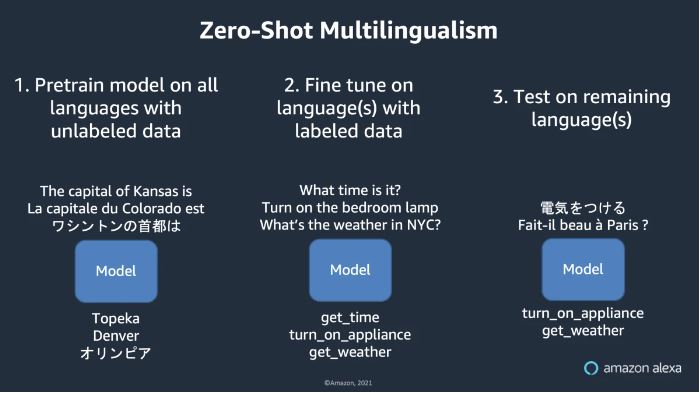

Amazon has scaled its Alexa voice assistant to 1000 languages. The Alexa process is illustrated below:

Image source: Amazon Alexa

As illustrated above, Amazon first creates a multilingual model pre-trained with unlabeled data in all the languages it supports. Next, it uses whatever labeled data it has to fine-tune the model. Finally, it tests the model’s capabilities on languages with little or no labeled data.

A group of Meta researchers (Lin et al, 2021) used a relatively small language model with 7.5 billion parameters that they trained on 30 languages. On machine translation, it outperformed GPT-3 in 171 of 182 translation directions with only 32 training examples. Another group of researchers (Xu et al, 2020) showed that transfer to low-resource languages can be effected by adding bilingual dictionaries to the training. No text in the low-resource languages, aside from the dictionaries, was required.

For a more in-depth survey of cross-lingual transfer, see Doddapaneni et al (2021). For a more in-depth survey of multilingual language models, see Ruder (2022).

See also the chapter in this online book on large language models. Large language models are exposed to vast amounts of multilingual text in their self-supervised training and are surprisingly good at taking the knowledge extracted from text in one language (e.g. English) and either translating it to another language or using it to answer questions or hold a dialog in another language (e.g. Swahili).

4.5 Multi-task learning

In multi-task learning, a network is trained on multiple tasks simultaneously. When the tasks are similar, performance on all tasks can be enhanced. If the tasks are dissimilar, negative transfer can occur where performance on individual tasks actually gets worse as tasks are added.

There has long been evidence that models trained on multiple similar tasks learn those tasks better than having separate models for each task. However, the tasks have to be very similar.

Perhaps the first multi-task research was done in 1997. For his Ph.D. thesis, Carnegie Mellon researcher Richard Caruana (1997) looked at performance on ten very similar image processing tasks, i.e.

- Deciding whether the image holds a single or double door

- Identification of the horizontal location of the doorway center

- Determining the width of doorway

- Identification of the horizontal location of the left doorjamb

- Identification of the horizontal location of the right doorjamb

- Determining the width of the left doorjamb

- Determining the width of the right doorjamb

- Identification of the horizontal location of the left edge of the door

- Identification of the horizontal location of the right edge of the door

He trained ten separate neural networks on these tasks and then tried combining them. The inputs for all ten tasks were the same so no change was needed there. The only change was in the output layer which had the output neurons from all ten tasks. He found that the performance on the test set in the multi-task setting was 20-30% better than performance on the individual tasks.
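The architecture described above, in which all tasks share the same inputs and hidden layer and differ only in their output neurons, can be sketched as follows. The layer sizes, the tanh activation, and the squared-error loss are assumptions made for illustration and are not taken from the thesis.

```python
import numpy as np

rng = np.random.default_rng(0)
n_inputs, n_hidden, n_tasks = 32, 16, 10  # hypothetical sizes

w_shared = rng.normal(0.0, 0.1, size=(n_inputs, n_hidden))  # shared by all tasks
w_heads = rng.normal(0.0, 0.1, size=(n_hidden, n_tasks))    # one output neuron per task


def forward(x):
    """Shared hidden layer followed by one output per task."""
    hidden = np.tanh(x @ w_shared)
    return hidden @ w_heads  # column k holds the prediction for task k


def multitask_loss(x, targets):
    """Sum of per-task mean squared errors; every task's error reaches w_shared."""
    errors = forward(x) - targets
    per_task = np.mean(errors ** 2, axis=0)
    return per_task.sum(), per_task


x = rng.normal(size=(8, n_inputs))
targets = rng.normal(size=(8, n_tasks))
total, per_task = multitask_loss(x, targets)
```

Because the total loss sums over all task outputs, training the shared layer on it pushes the hidden features to be useful for every task at once.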

There are several possible reasons for improved performance:

More Training Observations: Learning ten tasks at once provides more data than learning a single task. Supervised learning models generally perform better with more training data.

Focus on Important Features: Having multiple tasks might enable a model to focus on the features that are common to the tasks.

Less Overfitting: Nearly every training dataset has irrelevant patterns that shouldn’t be learned because they won’t generalize to test data. With multiple tasks, the model is less likely to learn these noise patterns because they are unlikely to occur in the training sets for more than one task.

The Tesla machine learning architecture (Karpathy, 2019) is one of the most impressive commercial examples of multi-task learning. Teslas have ML models for recognizing pedestrians, stop signs, traffic lights, lane markers, and other objects. These ML models share a common set of early layers and then branch off into task-specific models. The benefit of sharing layers across related tasks has been replicated for many types of tasks over the years.

See also Ruder (2017) and Crawshaw (2020) for comprehensive overviews of multi-task learning.

4.5.1 Multiple NLP tasks

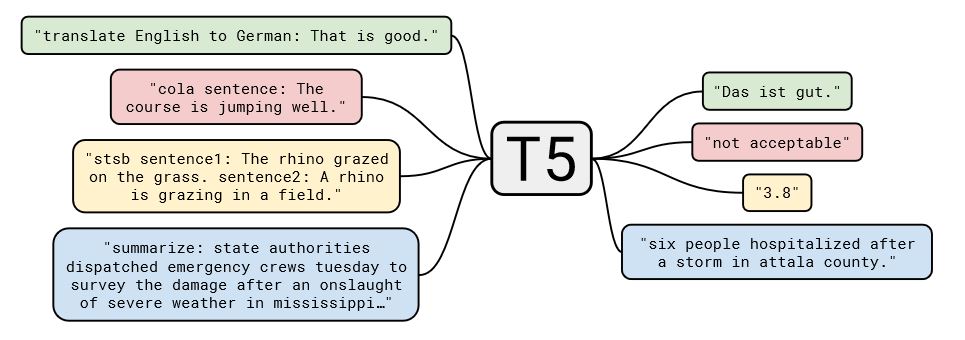

Google researchers (Raffel et al, 2020) showed that virtually every natural language processing task can be re-formulated as a prompt task that has the same training objective as a language model generation task. Some examples are illustrated in this diagram:

They pre-trained an 11 billion parameter Transformer on hundreds of gigabytes of internet text using a language modeling objective and named the model T5.

For example, to observe an LLM’s question answering capabilities, one can pose a question in the prompt and see if the generated continuation is the answer. In testing GPT-2, an “A:” was added to the input for reading comprehension questions. For the GPT-2 summarization task, a “TL;DR:” was added to the input string.

For natural language tasks, the predominant approach has been to transform different tasks into the same text-to-text task. Under Google’s text-to-text framework, multiple NLP tasks can be converted into a single text-to-text task by prepending the task instructions onto the input of each training example. For example, a training example from a machine translation task would look like this:

Input: “translate English to German: that is good.” Output: “Das ist gut.”

A training example from a summarization task would look like this:

Input: “summarize: state authorities dispatched emergency crews tuesday to survey the damage after an onslaught of severe weather in mississippi…” Output: “six people hospitalized after a storm in attala county.”

By transforming the training examples from multiple NLP tasks in this fashion, the training examples from those tasks can all be merged and fed into a single network that has to learn to read the labels and process the different tasks correctly.
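A small sketch of this conversion follows. The two prefixes come from the examples above; the function names, the task keys, and the shuffling step are hypothetical choices for illustration.

```python
import random

# Task instructions that get prepended to each input
TASK_PREFIXES = {
    "translation_en_de": "translate English to German: ",
    "summarization": "summarize: ",
}


def to_text_to_text(task, source, target):
    """Turn one labeled example into an (input text, output text) pair."""
    return {"input": TASK_PREFIXES[task] + source, "output": target}


def build_mixture(datasets, seed=0):
    """Merge examples from several tasks into one shuffled training set."""
    examples = [
        to_text_to_text(task, source, target)
        for task, pairs in datasets.items()
        for source, target in pairs
    ]
    random.Random(seed).shuffle(examples)
    return examples


datasets = {
    "translation_en_de": [("that is good.", "Das ist gut.")],
    "summarization": [
        (
            "state authorities dispatched emergency crews tuesday to survey the "
            "damage after an onslaught of severe weather in mississippi…",
            "six people hospitalized after a storm in attala county.",
        )
    ],
}
mixture = build_mixture(datasets)
```

Every element of `mixture` has the same shape regardless of its task, so a single model can be trained on all of them with one objective.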

A group of Hugging Face and Brown University researchers (Sanh et al, 2021) converted a set of NLP datasets for different tasks into text-to-text examples and used the resulting multi-task dataset to train a language model named T0. The resulting model showed strong zero-shot performance on many different NLP tasks.

Google researchers (Wei et al, 2022) took a similar approach but used a larger (137 billion parameter) language model, FLAN, and trained it on 60 NLP datasets that had been converted to a text-to-text format that emphasized the instructions for doing the task.

Another set of Google researchers (Aribandi et al, 2022) trained a language model on 107 NLP tasks and showed that the resulting model, ExT5, outperformed other multi-task pre-trained models, including T5, on the SuperGLUE multi-task benchmark.

AllenAI researchers (Gupta et al, 2021) have taken this approach one step further by creating a unified multi-task framework, GPV, for vision and language. In this framework, each input example is composed of an image and a set of natural language instructions. The system can perform a variety of vision-language tasks including question answering, captioning, localization, and classification.

FLAN and T0 even outperformed GPT-3, a large language model that exhibits surprisingly good zero- and few-shot performance on many NLP tasks. T0 did this with 16x fewer parameters than GPT-3. FLAN outperformed GPT-3 on 20 of 25 datasets in zero-shot mode.

That said, most of the research focus on developing zero-shot and few-shot learning has shifted from multi-task datasets to large language models, which are discussed in the next chapter. One likely reason is that systems trained on multi-task datasets will only be able to generalize to tasks that are similar to one or more of the tasks in the training dataset. In contrast, large language models likely acquire a much broader set of knowledge and reasoning capabilities than systems trained on multi-task datasets.

4.5.2 Multiple speech tasks

Researchers are also building models that can support multiple speech tasks (e.g. Pascual et al, 2019; Ravanelli et al, 2020).

A group of Chinese researchers (Ao et al, 2022) created SpeechT5, which combines six different speech tasks: speech recognition (ASR), speech translation (ST), speaker identification (SID), text-to-speech (TTS), voice conversion (VC), and speech enhancement (SE), as illustrated below:

For each task, a pre-processing network is used to convert the speech or text into a common layer that is the input to a Transformer encoder-decoder network that does the conversion. The encoder-decoder network converts speech to text for ASR, speech in one language to speech in another language for ST, and so on. Then a post-processing network is used to convert the output of the Transformer network into the appropriate modality.

The SpeechT5 network outperformed wav2vec 2.0 and HuBERT on the ASR task, produced state-of-the-art results on the SID task, and produced state-of-the-art results on the VC task for Transformer networks.

SUPERB (Yang et al, 2021) is a multi-task benchmark for speech tasks that is analogous to GLUE/SuperGLUE for text-based NLP. Tasks include:

- Speech recognition: creation of text transcripts

- Phoneme recognition: identification of phoneme sequences

- Keyword spotting: detecting keywords in speech

- Intent classification: tagging actions, objects, and locations

- Slot filling: identifying values of slots within intent frames

- Emotion detection: classifying utterances as neutral, happy, sad, angry

- Spoken term detection: determining if a term is present in an utterance

- Speaker identification: identifying the speaker

- Speaker verification: determining whether the speaker is the same in two utterances

- Speaker diarization: determining who is speaking at each timestamp

WavLM (Chen et al, 2022) used two types of self-supervised learning. It used masked speech prediction to learn the ASR information and speech denoising modeling to acquire the knowledge for non-ASR tasks. For instance, the process of pseudo-label prediction on overlapped speech implicitly improves the model’s capability on diarization and separation tasks. The speaker identity information and speech enhancement capability are modeled by the pseudo-label prediction on simulated noisy speech. WavLM achieved state-of-the-art performance on the SUPERB benchmark.

4.5.3 Multiple reinforcement learning and robotics tasks

Multi-task learning is particularly important for robotics in order to move from single-task robots to more general-purpose robots.

DeepMind researchers (Espeholt et al, 2018) trained a network on 30 tasks that take place in the same game environment. They found that learning multiple reinforcement learning tasks in a single network can produce better performance with less data than single-task networks. One reason for the improved performance is that the tasks share a common visual environment, so it is not surprising that what is learned about visual elements from one task transfers over to other tasks in the same environment.

Similarly, Carnegie Mellon researchers (Pinto and Gupta, 2016) trained one robot model just to grasp an object, using 5000 grasp interactions. They trained another model with 2500 interactions of grasping an object and 2500 interactions of pushing an object. Interestingly, the model trained on both tasks performed better on grasping than the model trained only to grasp. They hypothesized that performing alternate tasks exposed object properties and modalities that were inaccessible but relevant to the original task. The researchers noted that both grasping and pushing are dependent on common object properties like geometry.

Another group of DeepMind researchers (Hafner et al, 2020) have also developed techniques for robotics multi-task learning. They created a single algorithm, scheduled auxiliary control, that learns one policy for multiple robotics tasks.

Huang et al (2020) developed an algorithm that can learn a single policy for a specific robotic actuator that can do multiple tasks.

Google researchers (Kalashnikov et al, 2021) developed MT-Opt, which uses offline reinforcement learning to learn 12 real-world tasks. One problem with using shared data from different tasks is that it can exacerbate the distributional shift between the learned policy and the dataset, resulting in poor performance. Using the same environment, another set of researchers (Yu et al, 2021) developed a data sharing technique that alleviates this issue.

4.5.4 Multiple computer vision tasks

Computer vision researchers have also demonstrated that multiple vision tasks can be learned concurrently. Stanford researchers (Standley et al, 2020) have created a framework for determining which tasks will benefit from being learned in the same multi-task model and which will experience negative transfer and should be learned in separate models or in different multi-task models.

4.5.5 Multi-modal multi-task learning

The NLP multi-task models discussed above are really single-task models, most of which have been trained on a text-to-text language modeling objective. The same is true for speech multi-task models. However, researchers are working on models that support multiple tasks in different modalities.

For example, DeepMind’s Perceiver IO (Jaegle et al, 2021) is a multi-task model that includes language, vision, and game-playing tasks. A follow-on model named Gato (Reed et al, 2022) uses a single network with the same weights to play Atari, caption images, chat, and stack blocks using a robot arm. Gato is also multi-task and multi-modal.

Google Research (Dean, 2021) has announced a new architecture, Pathways, whose goal is to produce true multi-modal, multi-task learning that goes beyond text-to-text.

4.6 Meta-learning

In meta-learning (Schmidhuber, 1987; Thrun, 1998), also known as learning to learn, the idea is to sequentially learn multiple tasks but to learn something from each task that increases speed of learning and/or performance on future tasks.

One approach is to learn at two levels simultaneously: Rapid learning occurs for the task at hand in one network and more gradual learning of abstract skills occurs across all tasks in a second network. The abstract skills can then be applied to new tasks, which results in faster learning of the new tasks. Hochreiter et al (2001) termed the former network the subordinate network and the latter network the supervisor network. The idea is that the subordinate network learns a series of tasks and learns each one relatively quickly. The supervisor network learns more slowly but learns more generalized knowledge that it uses to speed up the learning process of the subordinate network for each new task.

For example, in a 2017 paper cleverly titled “Learning To Reinforcement Learn”, a Google DeepMind London team (Wang et al, 2017) demonstrated a meta-learning algorithm for several different two-arm bandit (i.e. slot machine) tasks. One instance of the bandit problem had one arm always give a reward and the other arm never give a reward. Other instances of the bandit problem assigned different probabilities to each arm. As the supervisor network matured, the subordinate networks were faster to learn the probabilities. They also showed that it worked for variants of two other tasks.

Another approach is to have task learners that learn specific tasks rapidly and meta-learners that gradually learn information useful for multiple tasks. For example, multiple researchers (e.g. Vinyals et al, 2016; Santoro et al, 2016; Ravi and Larochelle, 2017; Kaiser et al, 2017; Ren et al, 2018) have used meta-learning for tasks involving Mini-ImageNet classes and Omniglot letter categories. Mini-ImageNet is a subset of the ImageNet dataset that is divided into classes specifically designed for few-shot learning experiments.

The Omniglot dataset was created by MIT researchers (Lake et al, 2015) for few-shot learning research. It has 1623 characters from 50 alphabets of the world. Each character has 20 handwritten examples. The idea is to pre-train the networks on one set of classes (e.g. 100 ImageNet classes) and then use meta-learning to train the meta-learner network to classify images from classes that were not present in the training data by training on just one image or a few images (e.g. 5) for each class not seen in the training data. One-shot and few-shot performance were better using the meta-learning training than with prior methods of one-shot and few-shot learning.
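Few-shot experiments of this kind are commonly organized into small episodes, each with a handful of labeled examples per class to learn from and some held-out examples to test on. The sketch below shows one way to sample such an episode; the function name, the toy dataset of strings, and the episode sizes are hypothetical.

```python
import random


def sample_episode(examples_by_class, n_way, k_shot, n_query, rng):
    """Return (support, query) lists of (example, episode_label) pairs."""
    classes = rng.sample(sorted(examples_by_class), n_way)
    support, query = [], []
    for label, cls in enumerate(classes):
        # Draw distinct examples so support and query never overlap
        items = rng.sample(examples_by_class[cls], k_shot + n_query)
        support += [(item, label) for item in items[:k_shot]]
        query += [(item, label) for item in items[k_shot:]]
    return support, query


# Toy stand-in for a character dataset: 30 classes with 20 examples each
dataset = {f"char{c}": [f"char{c}_img{i}" for i in range(20)] for c in range(30)}
support, query = sample_episode(dataset, n_way=5, k_shot=1, n_query=3, rng=random.Random(0))
```

Here a one-shot learner would see the five `support` examples, one per class, and be scored on the fifteen `query` examples.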

University of California at Berkeley researchers (Finn et al, 2017) developed the Model-Agnostic Meta-Learning (MAML) algorithm for automatically figuring out a set of initial weights that will lead to few-shot learning for multiple tasks. MAML starts with a set of tasks and a set of randomly initialized weights. Then it randomly selects n of the tasks. For each of the n tasks, MAML runs a batch of examples, computes the weight adjustments using gradient descent, and stores the weight adjustments for use by the meta-learner, with all tasks starting from the same initial weights. After processing the n tasks, it updates the initial weights based on an objective function that incorporates the stored weight adjustments and then iterates the whole process with the new initial weights. They showed that these learned initial weights produced better one-shot and five-shot learning on the Omniglot and Mini-ImageNet datasets than a number of other few-shot learning classification techniques.
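The loop described above can be sketched on a deliberately tiny problem: a one-weight model `y = w * x` and a family of regression tasks that differ only in their slope. Everything here (the task family, learning rates, batch size, and function names) is an assumption for illustration. Because the model has a single weight, the derivative of the adapted weight with respect to the initial weight can be written out by hand.

```python
import numpy as np


def loss_and_grad(w, x, y):
    """Mean squared error of the model y = w * x and its gradient with respect to w."""
    err = w * x - y
    return np.mean(err ** 2), 2.0 * np.mean(err * x)


def maml_step(w, task_slopes, inner_lr, outer_lr, rng, batch=10):
    """One meta-update of the shared initial weight w over a set of sampled tasks."""
    meta_grad = 0.0
    for slope in task_slopes:
        x_s = rng.uniform(-1.0, 1.0, batch)  # batch used to adapt to the task
        x_q = rng.uniform(-1.0, 1.0, batch)  # batch used to evaluate the adaptation
        _, g_s = loss_and_grad(w, x_s, slope * x_s)
        w_adapted = w - inner_lr * g_s       # task-specific weight adjustment
        _, g_q = loss_and_grad(w_adapted, x_q, slope * x_q)
        # Chain rule through the inner step: d(w_adapted)/d(w)
        d_adapted_dw = 1.0 - inner_lr * 2.0 * np.mean(x_s ** 2)
        meta_grad += g_q * d_adapted_dw
    return w - outer_lr * meta_grad / len(task_slopes)


rng = np.random.default_rng(0)
w = 0.0  # initial weight shared by all tasks
for _ in range(500):
    task_slopes = rng.uniform(2.0, 4.0, size=5)  # randomly select n = 5 tasks
    w = maml_step(w, task_slopes, inner_lr=0.1, outer_lr=0.1, rng=rng)
print(w)
```

In this toy setting the learned initial weight settles near the middle of the slope range, a starting point from which any individual task can be reached in a few gradient steps.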

They were also able to demonstrate MAML on supervised regression and classification tasks and on reinforcement learning. The reinforcement learning version works just like the classification meta-learner described above except that where the supervised learning tasks use examples with inputs and outputs, the reinforcement learning meta-learner uses tasks that have sample trajectories where each trajectory is a sequence of states and actions chosen for that state.

Other researchers have developed various improvements on and extensions to the MAML approach (e.g. Z. Li et al, 2017; Gupta et al, 2018; Mishra et al, 2018; Groshev et al, 2018; Yu et al, 2018).

Meta-learning approaches have also seen success in neural architecture search, in which meta-learning algorithms find the optimal network architecture (e.g. optimal hyperparameters) for a particular task (e.g. Zoph and Le, 2017; Franceschi et al, 2018; Vanschoren, 2018; Rawal et al, 2020; Such et al, 2020).

The primary limitation of these few-shot meta-learning approaches is the assumption that the training and test tasks are drawn from the same distribution. Another way of saying this is that the approach will only be successful if the training tasks are very similar to one another and are similar to the test tasks. See Hospedales et al (2020) and Clune (2020) for in-depth surveys of meta-learning approaches and systems.

To get around this limitation, focus has shifted away from meta-learning and onto the large language models discussed in the next chapter.

4.7 Continual learning

Continual learning is closely related to meta-learning in the sense that both are types of lifelong learning. Continual learning is primarily concerned with avoiding the effects of catastrophic forgetting, in which deep learning systems forget how to do one task when they learn another task.

For example, Google researchers (Kaiser et al, 2017) developed a key-value long-term memory module that can be added to supervised networks for many different tasks. It “remembers” the most important activations in the primary network from the past for each training example and this prevents some forgetting. Other researchers use rehearsal learning techniques that mix old training examples with new training examples to reduce forgetting (e.g. Hou et al, 2019; Douillard et al, 2020). Yet another technique is memory aware synapses, which keeps track of which parameters in a network are most important and uses a regularizer to penalize overwriting of important weights from older tasks when learning newer tasks (Aljundi et al, 2018).
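The regularization idea can be illustrated with a quadratic penalty that charges more for moving weights that mattered for earlier tasks. In this sketch the importance values are made up, and how they would be estimated is not shown; the function names and the `strength` parameter are hypothetical.

```python
import numpy as np


def importance_penalty(params, old_params, importance, strength=1.0):
    """Penalize changes to each weight in proportion to its importance for older tasks."""
    return strength * np.sum(importance * (params - old_params) ** 2)


def regularized_loss(new_task_loss, params, old_params, importance, strength=1.0):
    """Loss on the new task plus the penalty for overwriting important weights."""
    return new_task_loss + importance_penalty(params, old_params, importance, strength)


old_params = np.array([1.0, 1.0])   # weights after learning the older task
importance = np.array([10.0, 0.1])  # made-up importance scores

move_important = importance_penalty(np.array([1.5, 1.0]), old_params, importance)
move_unimportant = importance_penalty(np.array([1.0, 1.5]), old_params, importance)
```

The same change of 0.5 costs 2.5 when applied to the important weight and 0.025 when applied to the unimportant one, so gradient descent on the regularized loss prefers to learn the new task by changing weights the older task did not depend on.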

Google researchers (Wang et al, 2022) demonstrated continual learning by using a pre-trained language model and combining it with prompts for different tasks. Instead of fine-tuning the model parameters, they leave the parameters frozen and instead create a pool of prompts for different tasks. Over time, these prompts are updated and the appropriate prompt for each input is automatically selected. Other researchers have demonstrated a technique for fine-tuning language models on multiple successive tasks without inducing forgetting.

See Sodhani et al (2022) for an in-depth overview of continual learning. Also see this site for information on continual learning benchmarks, conferences, and research programs, and a catalog of papers on continual learning. And see Tengtrakool (2023) for a discussion of other methods for overcoming catastrophic forgetting.

© aiperspectives.com, 2023. Unauthorized use and/or duplication of this material without express and written permission from this site’s owner is strictly prohibited. Excerpts and links may be used, provided that full and clear credit is given with appropriate and specific direction to the original content.