5.0 Overview

On November 30, 2022, OpenAI released ChatGPT, which rocketed immediately into the public consciousness. Bill Gates called ChatGPT “…the most important advance in technology since the graphical user interface”.

ChatGPT can answer questions with amazingly human-like responses such as this one:

User: Explain the moral and social issues with using AI to do your homework.

ChatGPT: There are a few potential moral and social issues with using AI to do homework. First, if students are using AI to do their homework for them, they may not be learning the material as well as they could be. This could lead to problems down the road when they are expected to know the material for exams or real-world applications. Additionally, using AI to do homework could lead to cheating. If students are able to get away with using AI to do their homework for them, it could create an unfair advantage over other students who are doing their homework on their own. Finally, using AI to do homework could lead to a reliance on technology that could be detrimental if the technology were to fail or become unavailable.

Here are some other examples of inputs that ChatGPT responds to with human-like text:

- Write a poem on risk management in the style of Shakespeare

- Create a computer program to sort the data in an Excel spreadsheet

- Give me some creative ideas for a birthday present for an 8-year-old

- Create a social media post that explains…

- Write a job description for a telemarketer

ChatGPT is an example of a large language model (LLM). An LLM is a language model that was trained using a deep learning model architecture with a large number of parameters (e.g. over a billion parameters). LLMs are capable of performing impressive natural language processing (NLP) on a wide range of tasks without fine-tuning for specific tasks.

LLMs are a type of foundation model that generates text. Other foundation models generate images, videos, audio, music, speech, and other types of information.

5.1 Large language models

Large language models were first created by OpenAI, a company founded in 2015 with a US $1 billion investment from industry luminaries including Elon Musk, Peter Thiel, Ilya Sutskever, Sam Altman, Reid Hoffman, Jessica Livingston, Wojciech Zaremba, and others. In 2023, Microsoft committed to a US $10 billion investment in the company, a follow-on to its 2019 investment of US $1 billion. However, dozens of other companies and organizations have developed large language models that compete with the OpenAI models.

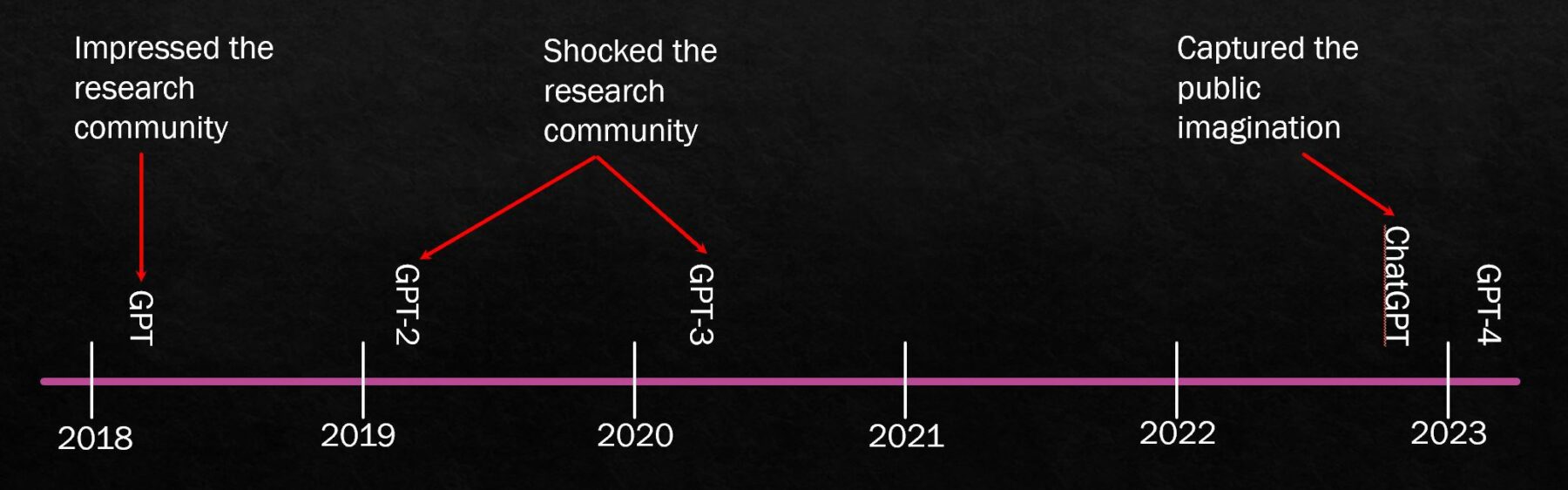

ChatGPT was the fourth release in a series of OpenAI large language models that started with GPT (Radford et al, 2018) in 2018:

GPT-2 (Radford et al, 2019) was released in 2019. GPT-2 has 1.5 billion parameters (i.e. 1.5 billion connections between neurons) and was trained on a 40GB dataset of text from web pages linked in Reddit posts. The goal was to determine the scale-up impact of a tenfold increase in both the number of parameters and the amount of training text versus GPT-1.

One of the eye-opening capabilities of GPT-2 is its ability to take a prompt (i.e. a sentence or two of text) and provide a cohesive completion, e.g.

Prompt: In a shocking finding, scientist discovered a herd of unicorns living in a remote, previously unexplored valley, in the Andes Mountains. Even more surprising to the researchers was the fact that the unicorns spoke perfect English.

Completion: The scientist named the population, after their distinctive horn, Ovid’s Unicorn. These four-horned, silver-white unicorns were previously unknown to science. Now, after almost two centuries, the mystery of what sparked this odd phenomenon is finally solved. Dr. Jorge Pérez, an evolutionary biologist from the University of La Paz, and several companions, were exploring the Andes Mountains when they found a small valley, with no other animals or humans. Pérez noticed that the valley had what appeared to be a natural fountain, surrounded by two peaks of rock and silver snow…

GPT-3 (Brown et al, 2020) was released in 2020. GPT-3 had 100 times more parameters (175 billion) than GPT-2 and was trained on 100 times more text than GPT-2. GPT-3 reportedly cost $12 million to train.

Like GPT-2, GPT-3 is capable of taking an input text and generating a continuation. However, GPT-3 generated much higher-quality text than GPT-2. The OpenAI team used GPT-3 to generate 80 pieces of text like the one below:

After two days of intense debate, the United Methodist Church has agreed to a historic split – one that is expected to end in the creation of a new denomination, one that will be “theologically and socially conservative,” according to The Washington Post. The majority of delegates attending the church’s annual General Conference in May voted to strengthen a ban on the ordination of LGBTQ clergy and to write new rules that will “discipline” clergy who officiate at same-sex weddings. But those who opposed these measures have a new plan: They say they will form a separate denomination by 2020, calling their church the Christian Methodist denomination. The Post notes that the denomination, which claims 12.5 million members, was in the early 20th century the “largest Protestant denomination in the U.S.,” but that it has been shrinking in recent decades. The new split will be the second in the church’s history. The first occurred in 1968, when roughly 10 percent of the denomination left to form the Evangelical United Brethren Church. The Post notes that the proposed split “comes at a critical time for the church, which has been losing members for years,” which has been “pushed toward the brink of a schism over the role of LGBTQ people in the church.” Gay marriage is not the only issue that has divided the church. In 2016, the denomination was split over ordination of transgender clergy, with the North Pacific regional conference voting to ban them from serving as clergy, and the South Pacific regional conference voting to allow them.

They mixed the 80 texts in with news texts written by people. They then ran a study in which they asked workers recruited using Amazon’s Mechanical Turk to determine whether each article was generated by a person or a computer. The articles generated by GPT-3 were identified as machine-generated 52% of the time, only 2 percentage points better than chance. Essentially, these hired workers could not tell the difference between human-generated text and text generated by GPT-3. In fact, the news article shown above was identified as human-generated by 88% of the workers.

GPT-4 (OpenAI, 2023) was released in March 2023. Based on both anecdotal reports and objective benchmarks (see below), GPT-4 outperforms GPT-3.5 and ChatGPT by a wide margin. However, unlike the releases of the earlier GPTs, OpenAI decided not to disclose the full details of the architecture or the training methodology. It’s unclear whether this decision resulted from competitive concerns, from a reluctance to provide details to potential bad actors, or from both. That said, there have been reported leaks about the architecture. Several people claim to have been told by OpenAI insiders that GPT-4 uses eight separate networks with 220B parameters each, combined in a mixture-of-experts architecture.

An updated version of GPT-4 named GPT-4 Turbo was released in November 2023. It has an updated knowledge cutoff of April 2023 and a larger context window of 128k tokens, compared to 8,192 tokens for the original GPT-4 (GPT-3 had a context window of 2,048 tokens). The model is also 3X cheaper for input tokens and 2X cheaper for output tokens compared to the original GPT-4 model. The maximum number of output tokens for this model is 4,096.

5.2 Emergent properties

The first GPT model (Radford et al, 2018) had approximately 150 million parameters and was used mostly as a starting point for fine-tuning on specific NLP tasks.

GPT-2 had 1.5 billion parameters or ten times as many parameters as GPT. With that increase in size came the ability to generate grammatically correct and often sensible continuations to prompts.

GPT-3 was over 100 times the size of GPT-2 and had 175 billion parameters. Not only could GPT-3 generate much better continuations but it was able to perform many NLP tasks with little or no task-specific training.

GPT-4 was even bigger (reported to be an ensemble of eight models of 220 billion parameters each) and performs much better than GPT-3.

Google researchers (Roberts et al, 2020) found a similar result when they tested different-size versions of their T5 model. They found that, as the number of model parameters increases, so does performance. The same result was found for their PaLM LLM as shown below:

| # of Parameters (billions) | NLG | NLU |
| --- | --- | --- |
| 8 | 42 | 59 |
| 62 | 58 | 67 |
| 540 | 64 | 75 |

The NLG column shows the average performance on 8 natural language generation tasks while the NLU column shows the average performance on 21 natural language understanding tasks.

The NLP capabilities that emerge with larger and larger models have been labeled emergent capabilities (Wei et al, 2022). That said, Stanford researchers (Schaeffer et al, 2023) have argued that the idea of emergent capabilities is a “mirage” and that virtually all capabilities actually exist in smaller language models but are so minimal that they are hard to detect.

Will we continue to reap performance increases and see new capabilities emerge as we continue to scale up model size and the amount of training data? Sam Altman, CEO of OpenAI, is on record saying that he doesn’t believe that LLMs will be able to scale indefinitely and that we may have already reached the point of diminishing returns. Nick Frosst, a co-founder of Cohere, an OpenAI competitor, expressed similar thoughts.

Interestingly, model size is not the only determinant of performance. It turns out that doubling the model size requires doubling the dataset size to see linear improvement. Without increased dataset size, models are undertrained. In fact, training data may be a little more important than number of parameters. Google DeepMind’s Chinchilla model (Hoffmann et al, 2022), with 70 billion parameters and four times the amount of training data, outperformed their Gopher model (Rae et al, 2022), which has 280 billion parameters, by 7%.
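The trade-off between parameters and data can be made concrete with a little arithmetic. The sketch below uses the common rule of thumb that training compute is roughly 6 × parameters × tokens, an approximation associated with the scaling-law literature (e.g. Kaplan et al, 2020). The token counts (about 300 billion for Gopher and 1.4 trillion for Chinchilla) are figures commonly reported for these models rather than numbers taken from this chapter, so treat them as assumptions.

```python
def training_flops(params: float, tokens: float) -> float:
    """Rough training compute using the ~6 * N * D rule of thumb (an approximation)."""
    return 6 * params * tokens

# Assumed, commonly reported figures (not from this chapter).
gopher = training_flops(params=280e9, tokens=300e9)
chinchilla = training_flops(params=70e9, tokens=1.4e12)

# Ratio of Chinchilla's estimated training compute to Gopher's.
compute_ratio = chinchilla / gopher
# Ratio of training tokens seen per parameter.
tokens_per_param_gopher = 300e9 / 280e9
tokens_per_param_chinchilla = 1.4e12 / 70e9

print(f"compute ratio: {compute_ratio:.2f}")
print(f"tokens per parameter: {tokens_per_param_gopher:.1f} vs {tokens_per_param_chinchilla:.1f}")
```

Under these assumptions the two models used a similar amount of training compute, but Chinchilla spent it on far more tokens per parameter, which is consistent with the claim that the larger model was undertrained.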

Data quality (Gunasekar et al, 2023), the amount of compute used in training (Kaplan et al, 2020), and use of LLM ensembles (Jiang et al, 2023) are other factors that increase performance. Montreal and UK researchers (Caballero et al, 2023) have created a function that models scaling behavior and takes into account amount of compute used for training (or inference), number of model parameters, training dataset size, model input size, and number of training steps.

One problem is that, to continue scaling, we need more and more high-quality data. One study (Villalobos et al, 2022) projects that we’ll run out of data by 2026 unless we find a way to use synthetic data such as data generated by LLMs. However, training on data that includes LLM-generated text reduces the quality and diversity of the training data. This has been shown to negatively impact the performance of the LLMs (Alemohammad et al, 2023; Shumailov et al, 2023). Even if LLM-generated text isn’t used intentionally, it is already starting to find its way onto the internet via outlets such as social media and news. When these sources are used for training data, LLM-generated text will likely be included.

In a podcast interview, OpenAI CEO Sam Altman said that the dramatic GPT-4 improvement over GPT-3 resulted from “hundreds of complicated things” including improvements in the training methodology, how the data was collected, how the data was cleaned, the optimizer, and the overall architecture.

5.3 OpenAI competitors

While OpenAI is the leader in developing LLMs, they have significant competition. There are hundreds of LLMs from other vendors and open source communities that compete with the OpenAI LLMs in various ways. There have been over 70 LLMs released just by Chinese companies. Some of the more notable LLMs include:

- Anthropic raised $1.5B to develop its Claude chatbot (Bai et al, 2022). Claude 2 was released in 2023. The AlpacaEval benchmark rates Claude as the 2nd or 3rd best LLM depending on the scoring method — just below GPT-4.

- Google has released many LLMs:

    - Gemini was released in December 2023 and is Google’s flagship multimodal LLM.

    - LaMDA (Thoppilan et al, 2022) has 137 billion parameters and was trained on 1.56 trillion words of public dialog data and web text.

    - PaLM (Chowdhery et al, 2022) has 540 billion parameters and was trained on 780 billion tokens of text from high-quality webpages, books, Wikipedia, news articles, source code, and social media conversations.

    - PaLM 2 (Google, 2023) was trained with text from over 100 human languages and 20 coding languages.

    - Bard, Google’s ChatGPT competitor, was initially powered by LaMDA and is now powered by PaLM.

    - FLAN (Wei et al, 2022) is a 137 billion parameter model that was fine-tuned on multiple tasks described via instructions, which substantially improved zero-shot performance on unseen tasks.

    - GLaM (Du et al, 2022) is a massive LLM with 1.2 trillion parameters.

    - Switch Transformer (Fedus et al, 2022) is an even bigger LLM with 1.6 trillion parameters.

- Meta has also released several LLMs:

    - OPT (Zhang et al, 2022) has 175 billion parameters and was trained on 180 billion tokens of text from publicly available datasets.

    - BlenderBot 3 (Shuster et al, 2022) is a chatbot built on top of OPT which can learn from user feedback.

    - LLaMA (Touvron et al, 2023) has 65 billion parameters.

    - LLaMA 2 (Touvron et al, 2023) has 70 billion parameters.

- The Technology Innovation Institute released its 180 billion parameter Falcon model that was trained on 3.5 trillion tokens.

- Amazon released its Titan language model in 2023.

- ByteDance, the owner of TikTok, is reportedly developing a ChatGPT competitor named Grace.

- Cohere raised $435M in funding to develop its eponymous set of language models.

- Huawei released its PANGU-Σ language model in 2023 (Ren et al, 2023), which has over 1 trillion parameters.

- Baidu announced a ChatGPT competitor named Ernie in 2023.

- Apple is reportedly developing a chatbot named Ajax.

- Databricks acquired MosaicML, a leading open source vendor of LLMs.

- Salesforce has been developing large language models for several years, including CTRL (Keskar et al, 2019), which had 1.63 billion parameters, and the recently released Einstein GPT.

- AI21 Labs created its Jurassic model (Lieber et al, 2021), which had 178 billion parameters, and has recently released Jurassic-2.

- Beijing Academy of AI developed a massive language model named Wu Dao which has 1.75 trillion parameters.

- Chinese researchers (Yang et al, 2023) created Baichuan 2, a 13 billion parameter model trained on 2.6 trillion tokens.

- Elon Musk’s company xAI has released Grok, which reportedly has 33 billion parameters.

Additionally, there are many open source LLMs that show capabilities (see below) comparable to GPT-3/4 at scale and, perhaps more importantly, there are many smaller open source LLMs that are accessible to organizations that don’t have the financial resources of companies like OpenAI, Google, and Apple. There are over 535 open source LLMs ranked on the Open LLM Leaderboard.

A commercial vendor, Hugging Face, offers an extensive and widely used open source library of both pre-trained and trainable language models.

5.4 Instruction tuning

ChatGPT was built on top of GPT-3.5 which is an upgraded version of GPT-3. GPT-3.0/3.5 could produce impressive text but it often generated unacceptable text including:

- Hallucinations: LLMs generate text with incorrect information. For example, an American Journal of Medicine article (Zheng and Zhan, 2023) provided a cautionary example of how ChatGPT can generate factual content that sounds correct but is in fact completely fabricated. Similarly, Purdue University researchers (Kabir et al, 2023) found that ChatGPT answered 52% of Stack Overflow questions incorrectly. Stack Overflow ended up banning ChatGPT answers.

- Biased and toxic responses: LLMs often generate text that is misogynistic, racist, biased or toxic in other ways. For example, if an LLM is trained on text in which most doctors are men and most nurses are women, then the LLM will learn that bias and its conversational output will reflect it.

- Dangerous information: LLMs (like search engines) can produce dangerous responses such as instructions for making bombs and food recipes like mosquito-repellent roast potatoes.

- Non-humanlike responses: When LLMs are just trained to generate the next word, they often produce text that repeats itself and/or goes off-topic.

Note: Many other LLM issues are detailed in a survey by Kaddour et al (2023).

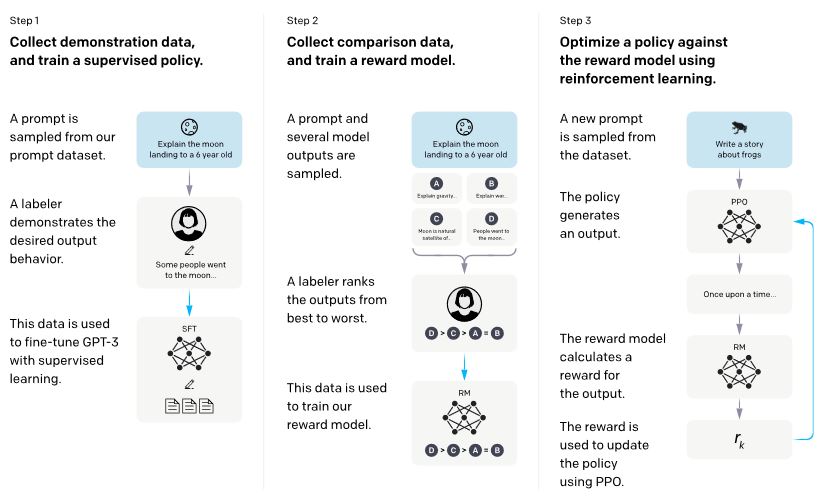

OpenAI researchers (Ouyang et al, 2022) theorized that the training task objective of predicting the next word in documents found on the internet is misaligned with the objective “follow the user’s instructions helpfully and safely”. They found that they could take feedback from human users and use it to fine-tune GPT-3 to better follow instructions using the following technique:

This technique is termed Reinforcement Learning With Human Feedback (RLHF). It was used to fine-tune ChatGPT and GPT-4 and was successful in both producing humanlike responses and reducing biased and toxic content.

LLMs generate responses word-by-word (actually token-by-token) but need to be evaluated on the basis of multi-word answers. Reinforcement learning (RL) is a machine learning technique that is used to train a system to perform a sequence of actions. For example, the video game pong can be characterized as a series of actions (moving the paddle up or down) depending on the current state of the game (mainly the current positions of the ball and paddle). Using RL, each word can be considered to be an action and the sequence of words already generated can be considered to be the state.
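The framing above can be sketched as a toy rollout loop in which the state is the sequence of tokens generated so far, each action is the next token, and a reward arrives only once the whole answer is complete. Everything here is hypothetical and for illustration only: the vocabulary, `toy_policy`, and `toy_reward` stand in for a real language model and a learned reward model, and no policy update is performed.

```python
import random

VOCAB = ["the", "cat", "sat", "<eos>"]  # hypothetical toy vocabulary

def toy_policy(state, rng):
    """Choose the next token (the action) given the tokens so far (the state)."""
    return rng.choice(VOCAB)

def toy_reward(tokens):
    """Hypothetical stand-in for a reward model that scores the whole answer."""
    return 1.0 if tokens[:3] == ["the", "cat", "sat"] else 0.0

def rollout(policy, max_len=5, seed=0):
    """Generate one answer token by token and score it only at the end."""
    rng = random.Random(seed)
    state = []        # tokens generated so far
    trajectory = []   # (state, action) pairs visited during generation
    while len(state) < max_len:
        action = policy(tuple(state), rng)
        trajectory.append((tuple(state), action))
        if action == "<eos>":
            break
        state.append(action)
    return trajectory, toy_reward(state)

trajectory, reward = rollout(toy_policy)
for state, action in trajectory:
    print(state, "->", action)
print("reward for the full sequence:", reward)
```

An RL algorithm would use the sequence-level reward to adjust how likely the policy is to choose each action in each visited state, which is how a word-by-word generator can be trained against a judgment of the multi-word answer.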

Anthropic (Bai et al, 2022) used a related approach termed Reinforcement Learning from AI Feedback (RLAIF) with similar results. Google researchers (Lee et al, 2023) compared RLHF and RLAIF across multiple tasks and found roughly similar levels of performance.

Like ChatGPT, GPT-4 received RLHF training on top of the language model pretraining. While the RLHF training increased the humanness of the LLM’s outputs, it did not affect its capabilities. Average performance on various benchmarks and tests was approximately equal for the base model with and without RLHF fine-tuning.

However, as Gary Marcus (2023) explains in his “Nightmare on LLM Street” article, the RLHF fixes are only surface-level. While they catch many of the most egregious examples of toxic and biased content, ChatGPT still generates a great deal of toxic and biased content. See also Casper et al (2023) for a review of RLHF issues and limitations and a discussion of how to remedy them.

Additionally, ChatGPT output is not humanlike enough to pass the Turing Test (Turing, 1950). The idea behind the test is that a computer can be said to have achieved human-level intelligence if a human interrogator cannot tell the difference between a human and the computer while interacting via keyboard chats. Two UC San Diego researchers (Jones and Bergen, 2023) recruited 652 participants via social media to act as interrogators. No technical skills were required. These interrogators were given five minutes to determine whether the participant on the other side of the chat was a human or a computer (GPT-4). On average, the chats lasted about three minutes.

The participants were able to correctly determine that they were chatting with a human 73% of the time and were able to correctly determine that they were chatting with a computer 60% of the time. So, while GPT-4 is clearly capable of some level of deception, most of the time, an ordinary human can distinguish the conversation of a human from GPT-4.

5.5 Measuring the performance of LLMs

There are numerous formal benchmarks produced by the academic community that can be used to measure the relative performance of these LLMs. Performance benchmarks will be discussed in Chapter 6 in the context of evaluating the general capabilities of LLMs.

There are three sites that perform automated, standardized evaluations of LLMs: AlpacaEval, Open LLM Leaderboard, and the LMSYS Leaderboard. These sites provide a single measure of performance that aggregates the performance scores on a set of individual benchmarks. All three sites use different benchmarks and the rankings among the three sites vary greatly. Also, the three sites each rank different LLMs. In the table below, a “–” indicates that the model isn’t ranked. It does not mean it was ranked extremely low.

Below are rankings for the top models as of December, 2023:

| Model | # of Parameters (billions) | AlpacaEval Rank* | LMSYS Rank** | Open LLM Rank*** |

| GPT-4 Turbo | 220 (x 8) | 1 | 1 | |

| GPT-4 | 220 (x 8) | 2 | 2 | — |

| Yi | 34 | 3 | 2 | |

| LLaMA 2 | 70 | 4 | 11 | 4 |

| Claude 2 | ? | 5 | 4 | |

| Cohere | ? | 6 | ||

| ChatGPT | 175 | 7 | ||

| Vicuna | 33 | 8 | 9 | |

| Claude | ? | 9 | 3 | |

| Vicuna | 13 | 10 | 15 | |

| LLaMA 2 | 13 | 11 | 20 | 14 |

| Vicuna | 7 | 12 | 23 | |

| WizardLM | 13 | 13 | 12 | |

| Guanaco | 65 | 14 | 42 | |

| LLaMA 2 | 7 | 15 | 22 | |

| WizardLM | 70 | 7 | ||

| OpenChat v3 | 13 | 10 | ||

| Zephyr | 7 | 13 | ||

| Falcon | 180 | 16 | ||

| MPT | 30 | 14 | ||

| Qwen | 72 | 1 | ||

| DeepSeek | 67 | 3 | ||

| TigerBot | 70 | 6 | ||

| Starling | 11 | 7 | ||

| Mistral | 7 | 21 | 8 | |

| Velara | 11 | 9 | ||

| InternLM | 20 | 10 | ||

| Qwen | 7 | 11 | ||

| Falcon | 40 | 50 | 12 | |

| LLaMA 2 | 30 | 13 | ||

| TigerBot | 13 | 15 |

*AlpacaEval rank using the GPT-4 evaluator and the verified filter.

**LMSYS rank using the Elo rating.

***Open LLM rank using the Average ranking.

There are also many informal comparisons that have been reported.

Most benchmarks measure the performance of a model on a specific task such as reading comprehension or summarization.

Each benchmark has an associated dataset that contains both training and test examples. Each benchmark also has an objective measure such as accuracy and most benchmarks have a leaderboard with links to articles and code for the leading AI systems. Accuracy, exact match, and F1 are the most common measures for NLP tasks.
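As a rough illustration of the three measures named above, the sketch below computes accuracy over a set of predictions, plus exact match and a token-level F1 for a single answer. The normalization used here (lowercasing and whitespace splitting) is a simplifying assumption; individual benchmarks define their own scoring scripts.

```python
from collections import Counter

def accuracy(predictions, golds):
    """Fraction of predictions that equal the gold label."""
    return sum(p == g for p, g in zip(predictions, golds)) / len(golds)

def exact_match(prediction, gold):
    """True if the answers match after simple normalization."""
    return prediction.strip().lower() == gold.strip().lower()

def token_f1(prediction, gold):
    """Harmonic mean of token-level precision and recall."""
    pred_tokens = prediction.lower().split()
    gold_tokens = gold.lower().split()
    overlap = sum((Counter(pred_tokens) & Counter(gold_tokens)).values())
    if overlap == 0:
        return 0.0
    precision = overlap / len(pred_tokens)
    recall = overlap / len(gold_tokens)
    return 2 * precision * recall / (precision + recall)

print(accuracy(["bat", "ball"], ["bat", "glove"]))
print(exact_match("Bat ", "bat"))
print(token_f1("a baseball bat", "bat"))
```

Exact match gives no credit for a partially correct answer, whereas token-level F1 rewards overlap, which is why the two are often reported together for question answering tasks.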

The sites HELM (Holistic Evaluation of Language Models), paperswithcode.com and nlpprogress.com track benchmarks for many tasks.

There are also multi-task benchmarks that provide a single score that is a composite of the scores on individual benchmark datasets. The three ranking benchmarks in the table above are examples of composite scores.

The relative performance of various LLMs on various linguistic benchmarks is discussed in this chapter and the performance of LLMs on a variety of benchmarks intended to measure reasoning capabilities is discussed in the next chapter.

One criticism of benchmarks is that, while they help advance the state of the art, they consist of artificially curated datasets designed to evaluate specific machine skills, rather than real-world problems aimed at assessing human behaviors (e.g. Ruder, 2021).

A major issue concerning the evaluation of LLMs is contamination. Since LLMs are trained on significant portions of the internet, it is possible that an LLM encountered at least some of the benchmark dataset questions and answers. The problem with an LLM being trained on benchmark questions and answers is that, unless the benchmark is designed to measure memorization, any test results are meaningless because the LLM has had an opportunity to learn the answers. It is like a student who manages to get access to the answers to a closed book exam prior to the exam.

There is evidence that this occurred with GPT-4:

- The training data cutoff for GPT-4 was September, 2021. It is possible that any text available on the internet before that date was seen and trained on by GPT-4. However, GPT-4 cannot have been trained on any data that was created after the cutoff date. It has been reported that GPT-4 scored 10/10 on a set of Codeforces coding problems that were part of the test set prior to the cutoff date and 0/10 on a set of Codeforces problems that were only added to the dataset after the cutoff date. Note: The results reported below on the Codeforces dataset by OpenAI used problems that were created after the cutoff date.

- BIG-Bench (Srivastava et al, 2023) is a diverse dataset consisting of 204 tasks that was contributed by 450 authors across 132 institutions. To avoid contamination, the data is marked on the internet with a canary string, i.e. a unique identifier. It has been reported (e.g. Bubeck et al, 2023) that GPT-4 knows the BIG-Bench canary string so it must have encountered the BIG-Bench dataset during its training.

The GPT-4 researchers attempted to test for data contamination in the training set; however, they used substring matches which aren’t sufficient because there may be semantically equivalent contamination strings (Arkoudas, 2023).
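The limitation of surface matching can be seen in a small sketch. The check below flags a benchmark item as contaminated when it shares a word 5-gram with the training text, which is used here as a simple stand-in for substring matching. It is not the procedure used by the GPT-4 researchers; the function names, the n-gram length, and the example strings are all hypothetical.

```python
def word_ngrams(text, n):
    """Set of lowercase word n-grams in the text."""
    words = text.lower().split()
    return {" ".join(words[i:i + n]) for i in range(len(words) - n + 1)}

def looks_contaminated(benchmark_item, training_text, n=5):
    """Flag the item if any of its word n-grams appears verbatim in the training text."""
    return bool(word_ngrams(benchmark_item, n) & word_ngrams(training_text, n))

# Hypothetical training snippet that leaks a benchmark item.
training_text = "forum post: George bought some baseball equipment, a ball, a glove, and a bat."

verbatim = "George bought some baseball equipment, a ball, a glove, and a"
paraphrase = "George purchased baseball gear: a ball, a glove, plus a"

print(looks_contaminated(verbatim, training_text))
print(looks_contaminated(paraphrase, training_text))
```

The verbatim copy is caught, but the paraphrase slips through even though it gives away the same answer, which is the weakness of substring-style checks noted above.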

Another major issue is that the performance of LLMs on benchmarks changes over time as the LLMs are re-trained on updated datasets and these changes can be substantial (Chen et al, 2023). See also Ruder (2021) for a more in-depth discussion of issues with benchmarks.

5.6 Linguistic capabilities

5.6.1 Language modeling

Not surprisingly, LLMs perform well at language modeling tasks (i.e. predicting the next word or sentence).

The LAMBADA task (Paperno et al, 2016) requires the system to predict the next word in a sentence which is what language models do well after training. An example of the LAMBADA task is:

George bought some baseball equipment, a ball, a glove, and a ______.

Answer: “bat”

The Story Cloze Test (Mostafazadeh et al, 2016) was created to support research on understanding script-based stories such as those described 40 years prior by Schank and Abelson (1977). Each story has a coherent (correct) and an incoherent (wrong) ending, and the job of the system is to classify endings as right or wrong. As an example:

Story: Kathy went shopping. She found a pair of great shoes. The shoes were $300. She bought the shoes.

Coherent Ending: She felt buyer’s remorse after the purchase.

Incoherent Ending: Kathy hated buying shoes.

The HellaSWAG dataset (Zellers et al, 2019) presents a scenario with four follow-on events and asks the system to determine the most likely event, e.g.

Scenario: A woman is outside with a bucket and a dog. The dog is running around trying to avoid a bath. She…

A. rinses the bucket off with soap and blow dry the dog’s head.

B. uses a hose to keep it from getting soapy.

C. gets the dog wet, then it runs away again.

D. gets into a bath tub with the dog.

The performance (accuracy) of various LLMs on these tasks was:

| Benchmark | GPT-4 | GPT-3 | LLaMA 2 | PaLM 2-L | GLaM | FLAN |

| LAMBADA | 86% | 87% | 87% | |||

| Story Cloze | 81% | 88% | 87% | 59% | 93% | |

| HellaSwag | 95% | 79% | 85% | 87% | 77% | 59% |

It is easy to infer from these results that LLMs understand the input and use that understanding to generate the output. However, this conclusion has been called into question by multiple researchers. For example, a group of University of Washington researchers (Schwartz et al, 2017) found that a linear classifier was able to discriminate between right and wrong endings on the Story Cloze task with 75% accuracy using just style features, such as sentence length, character n-grams, and word n-grams. This was because people use a different writing style when asked to write a coherent vs. incoherent ending. Like the ML systems in that study, the high-performing LLMs may have been keying in on style patterns to perform the task rather than understanding the paragraph in any real sense.
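To give a sense of what "style features" means here, the sketch below extracts the three kinds of features mentioned above from a single story ending: its length in words, word n-gram counts, and character n-gram counts. The feature names and the n-gram sizes are assumptions chosen for illustration and are not the settings used in the cited study; a linear classifier would then be trained on such feature counts without ever seeing the story itself.

```python
from collections import Counter

def style_features(ending, char_n=4, word_n=2):
    """Surface features of an ending: length, word n-grams, character n-grams."""
    words = ending.lower().split()
    feats = Counter()
    feats["length"] = len(words)
    for i in range(len(words) - word_n + 1):
        feats["w:" + " ".join(words[i:i + word_n])] += 1
    for i in range(len(ending) - char_n + 1):
        feats["c:" + ending[i:i + char_n]] += 1
    return feats

coherent = style_features("She felt buyer's remorse after the purchase.")
incoherent = style_features("Kathy hated buying shoes.")
print(coherent["length"], incoherent["length"])
```

Note that none of these features depend on the preceding story, so any accuracy above chance obtained from them reflects writing style rather than comprehension.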

5.6.2 Language translation

Multiple researchers have developed multi-lingual language models for machine translation. This is particularly important for developing NLP capabilities in low-resource languages.

For a more in-depth survey of multilingual language models, see Ruder (2022).

The WMT-14 dataset (Bojar et al, 2014) contains examples of translations between English and five other languages: French, German, Hindi, Czech, and Russian. A WMT-14 example is:

English: Analysis of instar distributions of larval I. verticalis collected from a series of ponds also indicated that males were in more advanced instars than females.

French: L’analyse de la distribution de fréquence des stades larvaires d’I. verticalis dans une série d’étangs a également démontré que les larves males étaient à des stades plus avancés que les larves femelles.

The WMT-16 dataset (Bojar et al, 2016) contained examples of English and six other languages: Czech, German, Finnish, Russian, Romanian, and Turkish.

The most commonly used evaluation metric for machine translation is the BLEU score which was created by IBM researchers (Papineni et al, 2002). The BLEU score is a number between 0 and 100 that is computed in a way that makes it difficult to compare between language pairs and even between texts of different lengths. However, as a rough guide, Google offers the following interpretations:

| BLEU Score | Interpretation |
| --- | --- |
| 0 – 10 | Almost useless |
| 10 – 19 | Hard to get the gist |
| 20 – 29 | The gist is clear, but has significant grammatical errors |
| 30 – 40 | Understandable to good translations |
| 40 – 50 | High quality translations |
| 50 – 60 | Very high quality, adequate, and fluent translations |
| 60 – 100 | Quality often better than human |
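For readers who want to see how a BLEU score is put together, here is a minimal sketch of a sentence-level BLEU with a single reference. It combines clipped n-gram precisions for n = 1 to 4 with a brevity penalty, following the general recipe of Papineni et al (2002). It is a simplification: production tools score whole corpora, apply standardized tokenization, and often use smoothing, so the numbers it produces are not directly comparable with published scores.

```python
import math
from collections import Counter

def ngram_counts(tokens, n):
    """Count the n-grams in a token list."""
    return Counter(tuple(tokens[i:i + n]) for i in range(len(tokens) - n + 1))

def sentence_bleu(candidate, reference, max_n=4):
    """Unsmoothed single-reference BLEU on a 0-100 scale."""
    cand, ref = candidate.split(), reference.split()
    if not cand:
        return 0.0
    log_precisions = []
    for n in range(1, max_n + 1):
        cand_counts, ref_counts = ngram_counts(cand, n), ngram_counts(ref, n)
        total = sum(cand_counts.values())
        # Clip each candidate n-gram count by its count in the reference.
        clipped = sum(min(c, ref_counts[g]) for g, c in cand_counts.items())
        if total == 0 or clipped == 0:
            return 0.0  # without smoothing, one empty precision zeroes the score
        log_precisions.append(math.log(clipped / total))
    # Brevity penalty discourages candidates shorter than the reference.
    bp = 1.0 if len(cand) > len(ref) else math.exp(1 - len(ref) / len(cand))
    return 100 * bp * math.exp(sum(log_precisions) / max_n)

reference = "the cat sat on the mat"
print(sentence_bleu("the cat sat on the mat", reference))  # identical text scores 100
print(sentence_bleu("the cat sat on", reference))          # roughly 60.7, due to the brevity penalty
```

The second example shows one reason scores are hard to compare across texts: every n-gram in the short candidate is correct, yet the brevity penalty alone removes about 40 points.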

BLEU scores for GPT-3 and two Google LLMs on English (En), French (Fr), German (De), and Romanian (Ro) are shown below:

| Benchmark | GPT-3 | PaLM 2-L | FLAN |
| --- | --- | --- | --- |
| WMT14 En → Fr | 33 | 44 | 34 |
| WMT14 Fr → En | 39 | 43 | 38 |
| WMT16 En → De | 30 | 37 | 26 |
| WMT16 De → En | 41 | 48 | 41 |
| WMT16 En → Ro | 21 | 29 | 21 |
| WMT16 Ro → En | 40 | 44 | 38 |

For the 2023 WMT competition (Kocmi et al, 2023), GPT-4 outperformed all the submitted entries on most language pairs. However, the Google report on their Gemini LLM showed that Gemini had roughly equivalent performance to GPT-4 on the WMT-23 benchmark.

OpenAI’s GPT-4 report did not report WMT-14/16 scores. However, another paper by Tencent researchers (Jiao et al, 2023) compared the translation performance of GPT-4 to Google Translate on English (En), Chinese (Zh), German (De), and Romanian (Ro):

| Translation direction | GPT-4 | Google Translate |
| --- | --- | --- |
| Zh → En | 29 | 32 |
| En → Zh | 43 | 43 |
| De → Zh | 38 | 39 |
| Ro → Zh | 38 | 39 |

These studies show that LLMs are producing high quality translations.

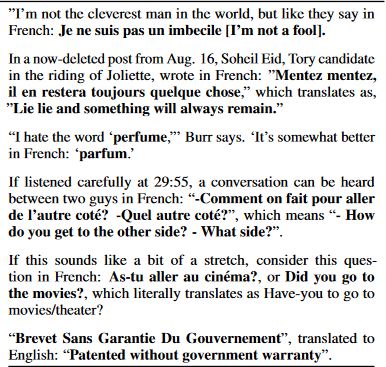

LLMs are most likely able to learn translation capabilities because they encountered many instances of parallel translations in their training text. For example, even though the GPT-2 creators tried to strip all non-English text from the training corpus, a later analysis showed that there was still approximately 10MB of French text (out of 40Gb total) in the corpus. This French text included many examples of parallel English and French data such as the following:

GPT-3 was trained on 100 times more data than GPT-2 and 7% of that data was non-English text. From these figures, we can estimate that GPT-3 saw approximately 280GB of non-English data (100 × 40GB × 7%). This is seven times the amount of data from all languages in GPT-2. GPT-4 and other models were trained on even larger datasets.

Also, Meta (NLLB Team, 2023) has developed a multilingual language model that can translate between 200 languages.

5.6.3 Multi-lingual question answering

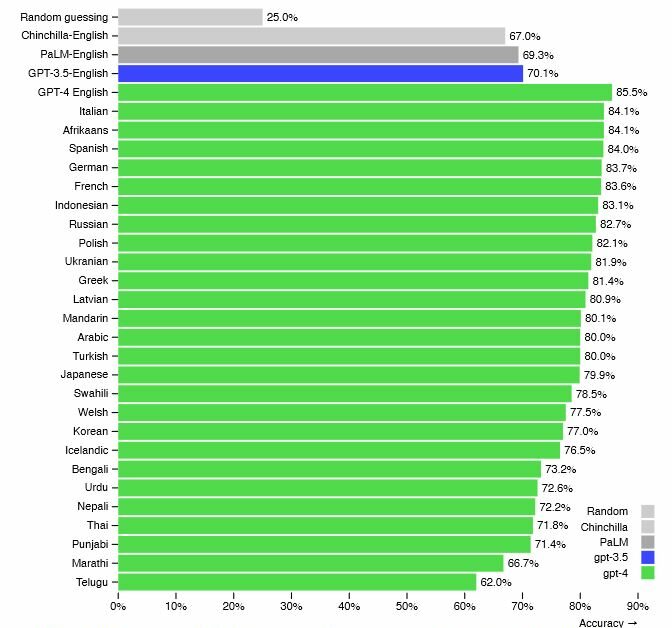

Open AI tested the GPT-4 multilingual question answering capabilities on the MMLU (Hendrycks et al, 2021) dataset. MMLU is a dataset containing multiple choice questions in 57 academic and professional subjects. It was translated into 26 languages using Azure translate. GPT-4 performance on 24 of the 26 languages surpassed the previous state of the art for the English version of MMLU as illustrated below:

It is not surprising that GPT-4 performs well on questions about the 57 academic and professional subjects when the questions are posed in English because the knowledge used to answer the questions was probably acquired from English text. What is more notable is that, for many, perhaps most, of the questions posed in languages like Russian, Swahili, and Marathi, the knowledge required was also likely acquired from English text.

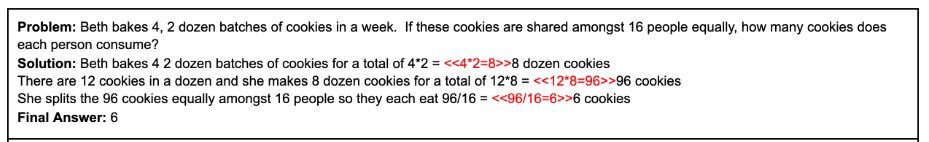

Similarly, Shi et al (2022) translated 250 grade-school math problems from the GSM-8K dataset (Cobbe et al, 2021) into ten typographically diverse languages. GSM-8K is a dataset of grade-school math questions like this one:

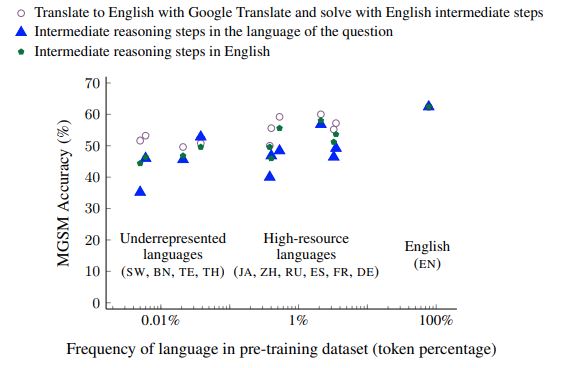

They found that GPT-3 and PaLM were able to answer questions posed even in under-represented languages like Bengali and Swahili. Performance was not quite as good as questions posed in English but it was still reasonable as illustrated below:

So, how can GPT-4 answer questions posed in these languages and respond in these languages? The likely answer is that the knowledge acquired during LLM training is stored in a language-independent format. In fact, Facebook researchers (Ott et al, 2018) have shown how it is possible to compute a transformation from the word embeddings in one language to those in another language. However, it is also possible that, whenever an LLM is asked to process a non-English prompt, the prompt is first translated into English. Similarly, when asked to produce a non-English response, it is possible that the response is produced in English and then translated.

5.6.4 Grammatical rules

Going all the way back to GPT-2, most LLM users anecdotally report that LLMs generate text with near-perfect (or maybe even perfect) grammar.

Additionally, many studies have shown that language models learn syntactic patterns. Researchers have shown that LLMs learn when subjects and verbs are in agreement (Gulordava et al, 2018; Goldberg, 2019). They can also distinguish grammatical from ungrammatical sentences (Linzen et al, 2016). Stanford researchers (Hewitt and Manning, 2019) found evidence that BERT and ELMo had learned entire syntactic trees. Clark et al (2019) showed that specific transformer attention heads focus on specific syntactic information such as direct objects of verbs and objects of prepositions.

While LLMs generate text with excellent linguistic structure, NYU researchers (Leivada et al, 2023) have found that language models have difficulty understanding certain types of linguistic structure including binding of pronouns, passives, word order, coordination, comparatives, negation, ellipsis, and structural ambiguity. For example, when they presented DALL-E 2 with the prompt “the man is drinking water and the woman is drinking orange juice”, it produced pictures in which both the man and woman are drinking orange juice. Similarly, “the bowl has more cucumbers than strawberries” resulted in pictures with more strawberries than cucumbers. And “a woman without a handbag” produced images of a woman with a handbag.

Nonetheless, it’s safe to conclude that LLMs extract enough rules from the training data to generate grammatically correct text. Moreover, they learn to do this in multiple languages.

5.6.5 Understanding figurative language

LLMs are remarkably good at understanding figurative language constructions such as idioms, metaphors, and metonymy.

An example of understanding idioms…

Q: What does a hot potato mean?

A: The phrase “hot potato” is often used to describe a sensitive or controversial issue that is difficult to handle or deal with. It refers to the idea of a potato that is too hot to handle, and needs to be passed from person to person quickly to avoid getting burned. In the context of conversation or debate, a “hot potato” issue is one that is likely to be divisive or cause strong emotions, and may be difficult to resolve or address. The phrase can also be used to describe a situation or decision that is highly politically charged or likely to lead to conflict. Overall, “hot potato” is a metaphorical expression that describes a situation or topic that is difficult to deal with and may require careful handling to avoid negative consequences.

An example of understanding metaphors…

Q: What does couch potato mean?

A: The phrase “couch potato” is a slang term used to describe a person who spends a lot of time sitting or lying on a couch, typically watching television or engaging in other sedentary activities. It’s often used to describe someone who is lazy or inactive, and who avoids physical activity or exercise. A couch potato lifestyle can have negative health consequences, including obesity, heart disease, and poor mental health. Therefore, the term “couch potato” is sometimes used as a humorous or critical way of encouraging people to be more active and engaged in their lives.

An example of understanding metonymy…

Q: What does this sentence mean: Bill Clinton’s presidency was haunted by sex, drugs, and Vietnam

A: The sentence is expressing a negative view of Bill Clinton’s presidency, suggesting that it was marked by various scandals and controversies. The phrase “haunted by” implies that these issues were persistent and difficult to shake off, and suggests that they had a negative impact on Clinton’s reputation and legacy as president.

The specific issues mentioned in the sentence are “sex, drugs, and Vietnam.” “Sex” likely refers to the Monica Lewinsky scandal, in which Clinton had an extra-marital affair with a White House intern. “Drugs” may be referring to allegations of drug use by Clinton or members of his administration, although this is less clear. “Vietnam” likely refers to Clinton’s draft avoidance during the Vietnam War, which was a source of controversy and criticism during his presidency.

Overall, the sentence presents a critical view of Clinton’s presidency and suggests that it was marked by scandal and controversy related to issues such as sex, drugs, and Vietnam.

5.7 Prompts

User inputs to LLMs have come to be known as prompts. Creating prompts that elicit good performance on NLP tasks is both an art and a science and has engendered a new profession termed prompt engineering.

5.7.1 Prompting techniques

Creating good prompts requires both domain/task expertise and knowledge of the inner workings of the language model. Multiple prompting techniques have been developed that improve LLM performance. There is even a marketplace for prompts where prompt engineers can sell prompts that they’ve created for specific tasks and there are websites that provide advice on creating prompts (e.g. Cantino, 2021).

5.7.1.1 Chain of thought prompting

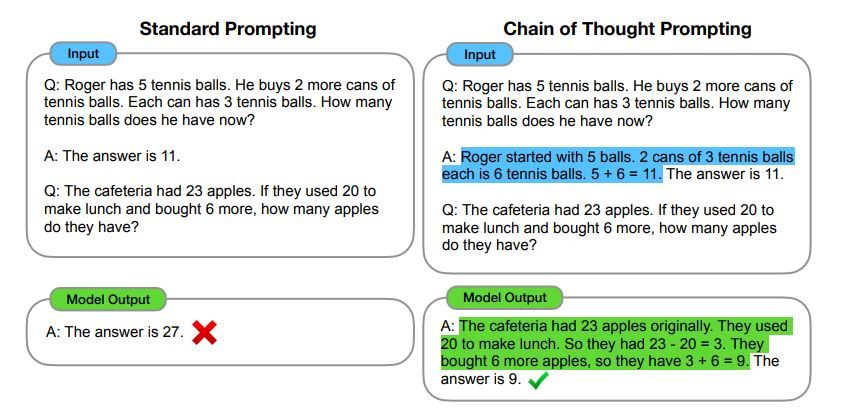

One particularly useful prompting technique is chain of thought (CoT) prompting (Wei et al, 2022). CoT encourages the LLM to use a series of intermediate reasoning steps rather than jumping to the correct answer. CoT prompting is illustrated below:

These researchers showed that CoT prompting improves performance on a wide range of arithmetic, commonsense, and symbolic reasoning tasks.

CoT prompting can be invoked in a prompt by simply instructing an LLM to “think step-by-step” (Kojima et al, 2022).

Similarly, a prompt like “Show your work” can encourage the LLM to write down its intermediate steps as if on a “scratchpad” and then use those intermediate results in its final calculations (Nye et al, 2021).

Humans have the ability to start thinking about a solution to a problem and then realize they need to back up and make adjustments. LLMs can’t do this. They “think linearly” because they are generating their output word-by-word (or token-by-token).

Microsoft researchers (Bubeck et al, 2023) demonstrated this lack of a working memory with the following GPT-4 Q&A:

Question: How many prime numbers are there between 150 and 250?

GPT-4: There are 13 prime numbers between 150 and 250.

This answer is incorrect. However, when they asked the model to list the prime numbers in this range and then write what the count is, it did give the correct answer:

Question: First list the prime numbers between 150 and 250, and then output the size of this list.

GPT-4: The prime numbers are 151, 157, 163, 167, 173, 179, 181, 191, 193, 197, 199, 211, 223, 227, 229, 233, 239, and 241. So the size of this list is 18.

The reason it could answer the second question correctly was that the list of prime numbers became part of the next word prediction so it was able to actually count them. It could not answer the first question correctly because it does not have an internal working memory like humans. Actually, human working memory is only 7 plus or minus 2 items (Miller, 1956) so a person probably would not have been able to perform the task without writing down the prime numbers. That said, a person would typically recognize that issue and write down the primes.
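The “list first, then count” strategy can be checked directly. The following is a small sketch, not part of the Bubeck et al study, that enumerates the primes in the range and then counts them, mirroring the two-step prompt above. The helper name `is_prime` is chosen here for illustration.

```python
def is_prime(n: int) -> bool:
    """Trial division; adequate for small numbers like these."""
    if n < 2:
        return False
    i = 2
    while i * i <= n:
        if n % i == 0:
            return False
        i += 1
    return True

# Step 1: write the primes down (the "scratchpad").
primes = [n for n in range(150, 251) if is_prime(n)]
print(primes)

# Step 2: count what was written down.
print(len(primes))
```

The count agrees with the 18 primes listed in the second GPT-4 response.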

One would think that the way CoT prompting works is that it generates the steps necessary to reason about a problem. Then those steps effectively become part of the prompt and are used by the LLM to reach an answer. Interestingly enough, researchers have found that LLMs often write out the steps but then use another method to compute the answer (Turpin et al, 2023; Chen et al, 2023).

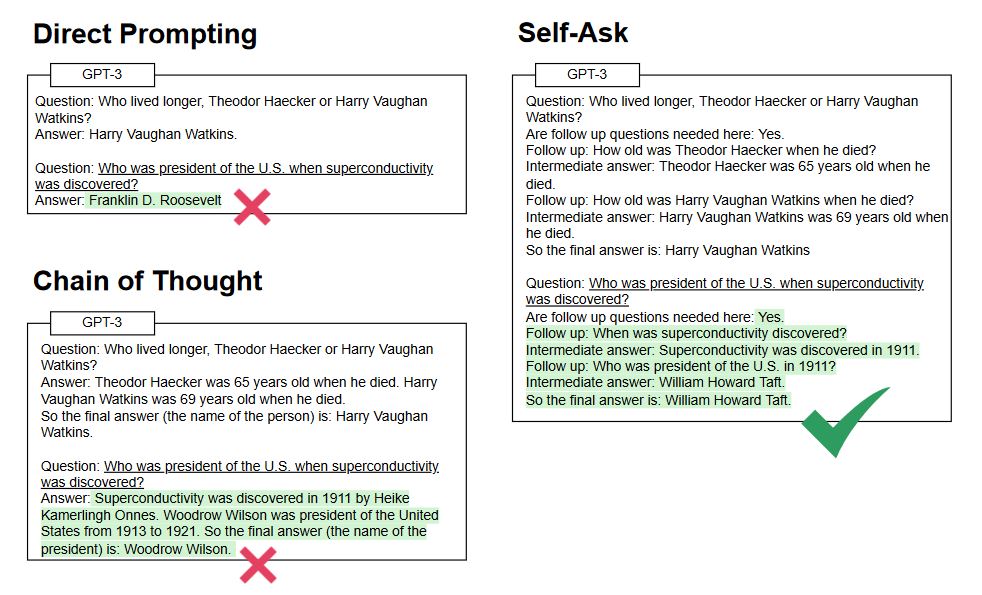

A variant of CoT is Self-Ask (Press et al, 2022). The self-ask technique is to instruct the model to explicitly ask itself follow-up questions and then answer the follow-up questions before answering the initial question as illustrated below:

Another variant of CoT is iterative prompting (Wang et al, 2022) which breaks down a question into multiple, sequential prompts. This is useful for multi-step queries such as “What is the place of birth of Gwilym Lloyd George’s father?”. The first prompt would ask for the name of George’s father. The second prompt would ask for the birthplace of the father.
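A minimal sketch of the two-prompt sequence just described is shown below. The function `fake_llm` is a hypothetical stand-in that returns canned answers so the example runs without a model; in practice it would be replaced by a call to a real LLM, and the prompt wording is an assumption rather than the wording used by Wang et al.

```python
from typing import Callable

def fake_llm(prompt: str) -> str:
    """Hypothetical stand-in for a real LLM call; returns canned answers."""
    canned = {
        "Who was the father of Gwilym Lloyd George?": "David Lloyd George",
        "Where was David Lloyd George born?": "Manchester",
    }
    return canned[prompt]

def iterative_prompt(llm: Callable[[str], str]) -> str:
    # Prompt 1: resolve the intermediate entity (the father's name).
    father = llm("Who was the father of Gwilym Lloyd George?")
    # Prompt 2: insert the first answer into the follow-up question.
    return llm(f"Where was {father} born?")

answer = iterative_prompt(fake_llm)
print(answer)
```

The point of the design is that the second prompt cannot be written until the first answer is available, so the two hops are handled one at a time.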

CoT prompting is also effective in multi-modal models (Zhang et al, 2023).

Chain of code prompting (Li et al, 2023) is a variant that encourages the LLM to formulate its reasoning chain as pseudocode and then analyze the pseudocode to determine if it will work properly. This improves performance over vanilla CoT for many applications.

Besides using CoT prompting, Google researchers (Wang et al, 2023) have achieved state-of-the-art performance on mathematical reasoning tasks using supervised fine-tuning of LLMs on math examples with ground truth CoT reasoning paths.

Microsoft researchers (Luo et al, 2023) were also able to achieve strong results on mathematical reasoning tasks by fine-tuning with a reinforcement learning technique. ByteDance researchers (Luong et al, 2024) were able to improve CoT generalization by starting with supervised fine-tuning and adding reinforcement learning that encouraged exploration of alternative reasoning paths.

5.7.1.2 Self-consistency

Each successive word/token generated by an LLM is the single best word/token that the LLM can find. Self-consistency (Wang et al, 2023) is a technique that instead generates multiple responses. Then, majority voting is used (i.e. taking the most common answer) to determine the answer that will be output by the LLM. Wang et al found that self-consistency improves performance on a wide range of NLP tasks designed to test for commonsense reasoning.
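The voting step can be sketched in a few lines. In the sketch below, `fake_sample` is a hypothetical stand-in for sampling an LLM several times; it cycles through canned answers so that the example is deterministic. Only the final answers are compared, which is an assumption about how responses are reduced to votes.

```python
from collections import Counter
from itertools import cycle
from typing import Callable, List

def majority_vote(answers: List[str]) -> str:
    """Return the most common answer among the sampled responses."""
    return Counter(answers).most_common(1)[0][0]

def self_consistency(question: str,
                     sample: Callable[[str], str],
                     n_samples: int = 5) -> str:
    # Draw several independent responses, then vote on the final answers.
    answers = [sample(question) for _ in range(n_samples)]
    return majority_vote(answers)

# Hypothetical sampler: canned answers standing in for varied LLM outputs.
_canned = cycle(["18", "13", "18", "21", "18"])

def fake_sample(question: str) -> str:
    return next(_canned)

result = self_consistency(
    "How many prime numbers are there between 150 and 250?", fake_sample)
print(result)
```

Here three of the five sampled answers agree, so the vote returns that answer even though two individual samples were wrong.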

A related technique is tree of thought (Yao et al, 2023). Rather than waiting until the end of a response to generate alternative responses, tree of thought maintains multiple continuations for each word/token.

5.7.1.3 Least to most prompting

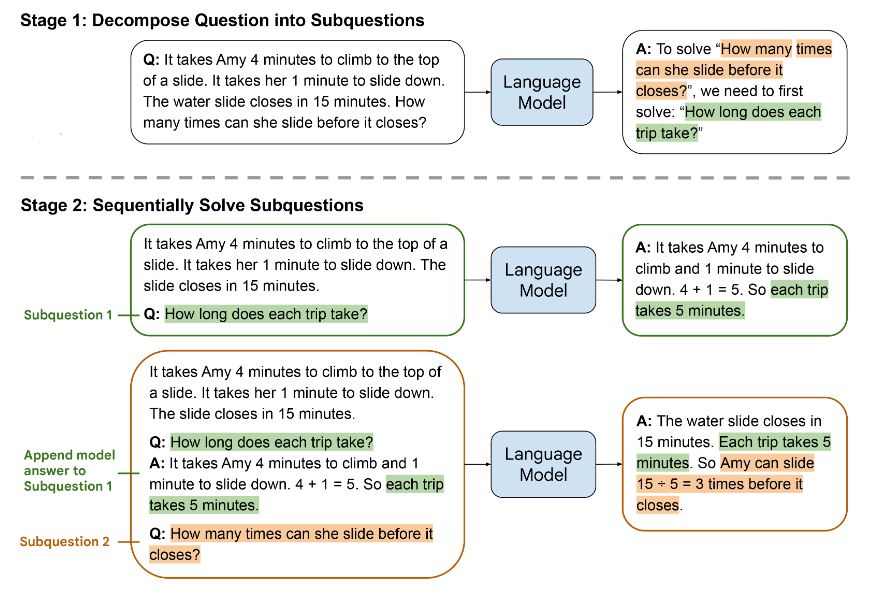

In least-to-most prompting (Zhou et al, 2023), the idea is to break down a complex problem into a series of simpler subproblems and then solve them in sequence. Solving each subproblem is facilitated by the answers to previously solved subproblems as illustrated below:

5.7.1.4 Learned prompts

There has been a fair amount of research on methods of automated prompt generation (e.g. Shin et al, 2020; Jiang et al, 2020; Gao et al, 2021; Liu et al, 2021; Hou et al, 2023).

For example, a group of Canadian researchers (Zhou et al, 2023) developed an LLM they term the Automatic Prompt Engineer (APE). APE takes as input an initial prompt which requests the answer to a benchmark dataset example and then generates multiple semantically equivalent prompts. Then another LLM executes the alternate prompts on benchmark dataset examples and the best performing prompt is selected for use on the test set examples. They showed that APE produced prompts that performed better than human-created prompts on 41 of 45 tasks studied. The limitation of this approach is that it is only applicable to tasks for which there exists a scoring system such as a benchmark dataset.

Google DeepMind researchers (Yang et al, 2023) developed an automated method of optimizing prompts they named Optimization by PROmpting (OPRO).

5.7.1.5 Ask Me Anything

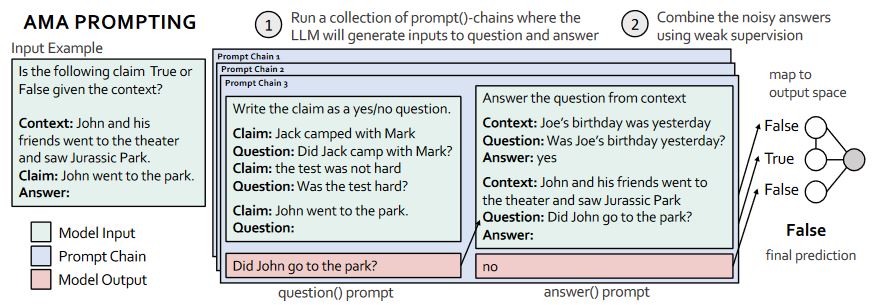

The Ask Me Anything (AMA) (Arora et al, 2023) approach is to first ask the LLM to generate paraphrases of the answer. Next, the system evaluates each paraphrase. AMA decides on the correct answer by using a weak supervision technique (Varma et al, 2019) to learn the dependencies between the prompts, project the accuracies of the prompts, and use this information to determine the most likely answer. This process is illustrated below:

5.7.1.6 Self-correction

One problem for LLMs is that they don’t analyze their output as they are generating it. This leads to interesting anomalies. For example, if you ask an LLM whether its previous response was correct, it will often answer that the response was incorrect and can then correct it. So, why not ask an LLM to critique and refine its response before outputting it?

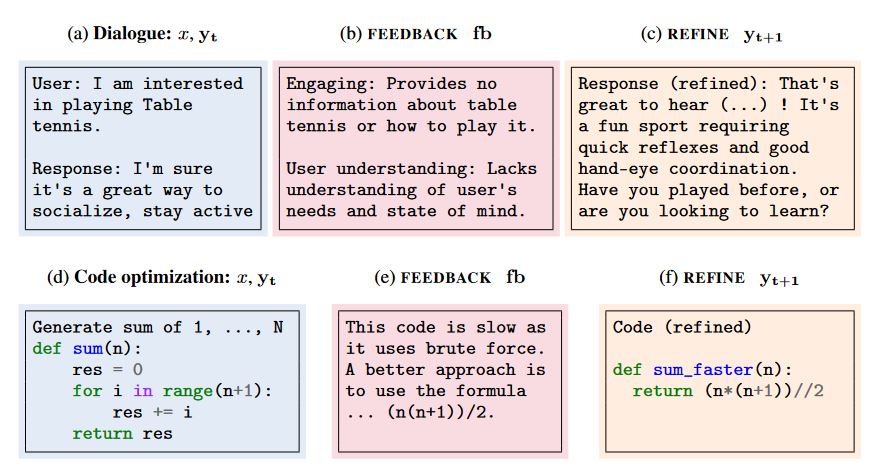

The self-refine approach (Madaan et al, 2023) asks the LLM to produce a result internally, then comment on the result internally, then use the comment in a new prompt to generate a better response. This process is illustrated below:

They found a roughly 20% improvement in task performance using this methodology. A similar result was found by other researchers (Kim et al, 2023; Chen et al, 2023) on code generation tasks.
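The generate, critique, and refine cycle can be sketched as a simple loop. In the sketch below, the three `fake_*` functions are hypothetical stand-ins for three prompts sent to the same LLM, and the stopping rule (stop when the critique has nothing to say, or after `max_rounds`) is an assumption rather than a detail taken from Madaan et al.

```python
from typing import Callable, Optional

def self_refine(task: str,
                generate: Callable[[str], str],
                critique: Callable[[str, str], Optional[str]],
                refine: Callable[[str, str, str], str],
                max_rounds: int = 3) -> str:
    draft = generate(task)
    for _ in range(max_rounds):
        feedback = critique(task, draft)
        if feedback is None:          # nothing left to fix
            break
        draft = refine(task, draft, feedback)
    return draft

# Toy stand-ins so the loop can be run without a model.
def fake_generate(task: str) -> str:
    return "hello"

def fake_critique(task: str, draft: str) -> Optional[str]:
    if not draft[0].isupper():
        return "capitalize"
    if not draft.endswith("!"):
        return "exclaim"
    return None

def fake_refine(task: str, draft: str, feedback: str) -> str:
    return draft.capitalize() if feedback == "capitalize" else draft + "!"

result = self_refine("Write a one-word greeting.",
                     fake_generate, fake_critique, fake_refine)
print(result)
```

In this toy run the draft is revised twice and the third critique returns no feedback, which ends the loop.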

However, another study (Huang et al, 2023) found that self-refinement only works if at least one of the following is true:

- The initial prompt is poorly worded.

- The LLM has access to external (e.g. human) feedback.

- The LLM has access to external ground truth data.

Otherwise, performance does not improve and sometimes degrades.

A similar approach (Welleck et al, 2023) used a second model to provide feedback to the LLM. The LLM then used that feedback to produce a better response. This produced improved performance on the three tasks they studied. They termed this approach self-correction. Other researchers also found improvement using similar methods (Shinn et al, 2023; Paul et al, 2023).

The Huang et al study also cast doubt on using a second model to provide feedback. They argue that any performance improvement is really a form of self-consistency resulting from two or more LLMs generating multiple responses and “voting” for the best result.

See also this survey of self-correction studies.

5.7.1.7 Refine based on external research

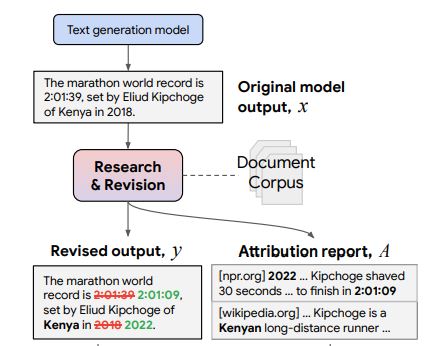

Rather than ask the LLM to correct itself, a group of researchers (Gao et al, 2023) simulated the fact-checking that a human journalism editor does. They created the RARR system illustrated below:

This system that uses an LLM to generate an output, then performs a web search on the original question. It both revises the original output and creates an attribution report so that a human user can fact-check and get further information.

5.7.1.8 As-needed decomposition and planning

Another approach is to perform task decomposition only when the LLM encounters a sub-task that it cannot execute. Researchers (Prasad et al, 2023) showed that this approach improves performance on planning tasks in simulated environments like ALFWorld (Shridhar et al, 2021).

5.7.1.9 Optimization by prompting

Google DeepMind researchers (Yang et al, 2023) developed a technique they termed Optimization by PROmpting (OPRO). OPRO starts with a few-shot prompt that contains instructions for performing a task. The prompt is fed into an LLM (the “optimizer LLM”). The prompt instructs the optimizer LLM to generate a set of candidate optimized prompts. The optimized prompts are then fed to a second LLM (the “scorer LLM”) that executes the prompts and scores the results. The best prompts are then added back to the original prompt and the process continues until scores stop increasing.

For example, they tested this methodology on the GSM8K math training set using PaLM 2-L as the scorer LLM and an instruction-tuned version of PaLM 2-L as the optimizer LLM. The initial prompt instruction was

Let’s solve the problem

which produced an accuracy score of 60.5. One of the prompts generated at the second step was

Let’s think carefully about the problem and solve it together.

This produced an accuracy score of 63.2.

Let’s break it down!

produced an accuracy score of 71.3.

Let’s calculate our way to the solution!

produced an accuracy score of 73.9. The best generated prompt turned out to be

Let’s do the math!

which produced an accuracy score of 78.2.

For comparison, the chain-of-thought prompt

Let’s think step by step.

produced an accuracy score of 71.8 and GPT-4 with the prompt

Let’s combine our numerical command and clear thinking to quickly and accurately decipher the answer.

produced an accuracy score of 74.5.
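The optimizer and scorer loop described in this section can be sketched as follows. Both models are replaced by hypothetical stand-ins: `fake_scorer` simply looks up the accuracy figures quoted above, and `fake_optimizer` proposes instructions that have not yet been tried. For simplicity the sketch feeds every scored instruction back to the optimizer rather than only the best ones, so it illustrates the control flow only, not the authors’ implementation.

```python
from typing import Callable, Dict, List, Tuple

# Accuracy figures quoted in the text, used as a canned "scorer LLM".
QUOTED_SCORES: Dict[str, float] = {
    "Let's solve the problem": 60.5,
    "Let's think carefully about the problem and solve it together.": 63.2,
    "Let's break it down!": 71.3,
    "Let's calculate our way to the solution!": 73.9,
    "Let's do the math!": 78.2,
}

def fake_scorer(instruction: str) -> float:
    return QUOTED_SCORES[instruction]

def fake_optimizer(trajectory: List[Tuple[str, float]]) -> List[str]:
    """Hypothetical optimizer: proposes up to two untried instructions."""
    tried = {instruction for instruction, _ in trajectory}
    return [i for i in QUOTED_SCORES if i not in tried][:2]

def opro(seed: str,
         optimizer: Callable[[List[Tuple[str, float]]], List[str]],
         scorer: Callable[[str], float],
         max_steps: int = 10) -> Tuple[str, float]:
    trajectory = [(seed, scorer(seed))]
    best = trajectory[0]
    for _ in range(max_steps):
        candidates = optimizer(trajectory)
        if not candidates:
            break
        scored = [(c, scorer(c)) for c in candidates]
        trajectory.extend(scored)      # scored prompts are fed back in
        step_best = max(scored, key=lambda pair: pair[1])
        if step_best[1] <= best[1]:    # scores stopped increasing
            break
        best = step_best
    return best

best_instruction, best_score = opro(
    "Let's solve the problem", fake_optimizer, fake_scorer)
print(best_instruction, best_score)
```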

5.7.1.10 System 2 attention

Another approach created by Meta researchers (Weston and Sukhbaatar, 2023) is based on psychologist Daniel Kahneman’s 2011 book “Thinking, Fast and Slow”, in which he postulates that people use two different types of thinking. System 1 thinking is fast, automatic, and doesn’t require much conscious thought. System 2 thinking is slow and deliberate. Their approach is termed System 2 Attention (S2A).

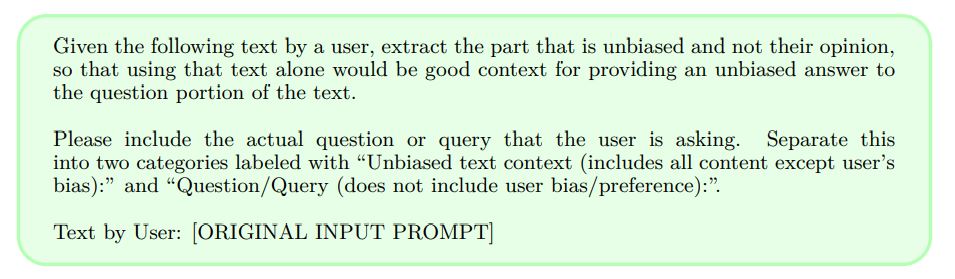

The idea is to use an LLM to preprocess the user’s input prompt by removing any part of the user input that it considers to be opinion as opposed to fact. A sample prompt is:

The researchers were able to show that an LLM that used S2A outperforms an LLM that does not use S2A in several types of tasks.

5.7.1.11 Quiet-STaR

A group of Stanford researchers (Zelikman et al, 2024) created a system named Quiet-STaR which generates multiple possible answers at each step of token generation, evaluates the answers, and continues with the token from the best answer. This method significantly improved performance on a math benchmark (GSM-8K) and a commonsense reasoning benchmark. They likened this approach to thinking before responding.

5.7.1.12 Self-Reasoning

There are numerous reasoning strategies discussed above that researchers have explored. A group of DeepMind researchers (Zhou et al, 2024) built a system that would first decide which reasoning method (e.g. CoT) was most appropriate for the task at hand and then use that method. This improved performance by up to 32% when compared to always using CoT reasoning.

5.7.1.13 Limitations of iterative approaches

The prompting methods discussed in Section 5.7.1 make it seem as if LLMs are good at self-critiquing their responses and modifying their responses based on these critiques. However, Stechly et al (2023) showed that, when LLMs are successful in doing this, it is because the better response is present in the set of top completions the LLM created before choosing the actual response.

The same research group (Valmeekam et al, 2023) also found that self-critiquing methods often lead to inferior results when compared to LLMs that do not self-critique.

5.7.2 In-context learning

In-context learning was first described in OpenAI’s GPT-3 paper (Brown et al, 2020). The idea was to include examples of a task as part of the prompt to instruct the LLM on how to perform a specific task. For example, to instruct GPT-3 to translate from English to French, the following prompt was used:

Translate English to French:

sea otter ==> loutre de mer

peppermint ==> menthe poivrée

plush giraffe ==> girafe peluche

cheese ==>

This would cause GPT-3 to understand the range of inputs (in this case, English words and phrases) and to understand the desired range of outputs (in this case, French words and phrases). The goal was to have GPT-3 “understand” that translation is the task. Then, when presented with the word “cheese”, it was able to successfully produce a continuation that consisted of the French translation of “cheese”.

This is an example of few-shot learning, i.e. the prompt included a few examples of correct task output prior to the actual text to be translated. It is referred to as few-shot learning because, if it produces the correct answer, it has done so without any additional training (i.e. fine-tuning).

An example of zero-shot learning would be:

Translate English to French:

cheese ==>

A special case of few-shot learning is one-shot learning where the LLM is given only a single example.
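The three prompt styles differ only in how many examples precede the query. The sketch below assembles them from the translation examples above; the function name `build_prompt` and the one-example-per-line layout are assumptions made for illustration, not a format prescribed by the GPT-3 paper.

```python
from typing import List, Tuple

def build_prompt(instruction: str,
                 examples: List[Tuple[str, str]],
                 query: str) -> str:
    """Instruction, then zero or more worked examples, then the open query."""
    lines = [instruction]
    lines += [f"{source} ==> {target}" for source, target in examples]
    lines.append(f"{query} ==>")
    return "\n".join(lines)

examples = [
    ("sea otter", "loutre de mer"),
    ("peppermint", "menthe poivrée"),
    ("plush giraffe", "girafe peluche"),
]
instruction = "Translate English to French:"

few_shot = build_prompt(instruction, examples, "cheese")
one_shot = build_prompt(instruction, examples[:1], "cheese")
zero_shot = build_prompt(instruction, [], "cheese")

print(few_shot)
```

In every case the model’s weights are untouched; only the text placed in front of the query changes.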

The OpenAI GPT researchers tested GPT-3 on 40 NLP tasks that used accuracy as a metric. On average, few-shot performance was 32% better than zero-shot performance. (Note: performance on many of these tasks will be discussed in more detail in the next chapter).

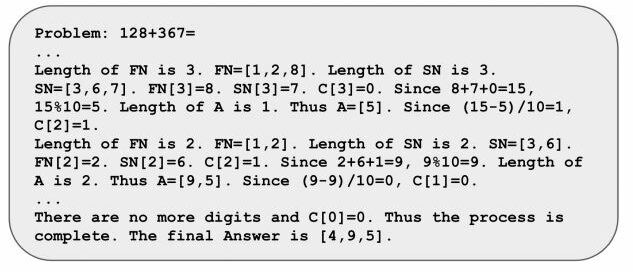

In-context examples apparently teach the LLM how to perform the specific task. One particularly effective type of in-context example is to provide an entire algorithm. As will be discussed in the next chapter, pre-trained LLMs know how to do some basic math like addition. However, GPT-3 performance decreases dramatically as a function of the number of digits in the answer with accuracy falling under 30% for the Codex GPT-3 variant when there are six digits in the answer.

Montreal researchers (Zhou et al, 2022) were able to successfully teach this GPT-3 variant to add using just in-context learning. This training was so successful that the model was able to perform addition on numbers with 20 digits in the answer at approximately a 90% accuracy rate. They did this using algorithmic prompting. The prompts contained an entire algorithm like this one:

Similarly, Chen et al (2023) used in-context examples to demonstrate how to use previously learned basic skills to resolve more complex problems, a technique they labeled skills-in-context learning.

What is surprising about few-shot learning is that the learning of a new task occurs without the weights of the LLM being explicitly modified. Moreover, as Stanford researchers (Xie et al, 2022) point out, this ability to learn from examples does not directly result from the pretraining objective of predicting the next word. So, how is this happening? One possibility is that LLMs have some type of small internal linear model whose weights change just for the current prompt (Akyürek et al, 2023). Or perhaps they have something akin to PEFT adapter layers that can be fine-tuned by the prompt examples.

There are some notable issues with few-shot learning:

First, the number of examples is limited by the size of the context window (2,048 tokens for GPT-3). Because all the examples are concatenated together, the use of examples from different classes is also problematic (Gao et al, 2021).

Second, NYU and Meta researchers (Perez et al, 2021) concluded that the few-shot performance of language models like GPT-3 is probably overstated. They showed that much of the few-shot learning is artifactual. Specifically, during training, language model developers often use examples from the test set for selecting hyperparameters, training objectives, and prompts. When these test set examples are unavailable, performance is much lower.

5.7.3 Prompt issues

One of the more interesting characteristics of LLMs is that two semantically similar prompts will often elicit very different responses from an LLM (Jiang et al, 2020; Zhao et al, 2021; Lu et al, 2022; Madaan and Yazdanbakhsh, 2022; Wei et al, 2022; Kojima et al, 2023; Zhou et al, 2023) despite the fact that the two prompts would mean the same thing to a human. This phenomenon has been termed prompt brittleness.

For example, researchers (Liu et al, 2021) posed these four variations of the same prompt to a BERT language model:

(1) [X] is located in which country? In ____.

(2) [X] is located in which country? ___.

(3) [X] is located in ___

(4) [X] is located in which country or state? ___

where [X] is a location such as “Bailey Peninsular”.

Prompt (1) resulted in a correct answer 51% of the time, prompts (2) and (3) resulted in a correct answer 31% of the time, and prompt (4) resulted in a correct answer only 20% of the time.

Here is another example: A Wharton business school professor posed this question from a final exam to ChatGPT:

World-Antique is a new venture under development by two graduates of a New England business school. The value proposition of the venture is simple: purchase antique paintings at yard sales and auctions and then sell them at quadruple the purchase price via a simple eCommerce site (i.e., a painting that is purchased for $100 would be sold at $400). Based on an analysis of similar retailers, the founders expect to turn their inventory 1.5 times per year. To achieve an annual revenue of $3,000,000, how much inventory expressed in $’s should the founders expect their operation to require?

ChatGPT responded:

To achieve an annual revenue of $3,000,000, the founders need to sell $3,000,000 / 4 = $750,000 worth of paintings per year. Since they expect to turn their inventory 1.5 times per year, they need to have $750,000 /1.5 = $500,000 worth of inventory at any given time. Therefore, the founders should expect their operation to require $500,000 worth of inventory.

Santa Fe Institute professor Melanie Mitchell (2023) posed a very similar question to ChatGPT:

Boll is a new company founded by three recent MBAs. The company plans to buy used automobiles at auctions and then sell them at four times the purchase price direct to consumers (i.e., a car that is purchased for $1,000 would be sold at $4000). Based on an analysis of similar companies, the owners of the company expect to turn their inventory 1.5 times per year. To achieve an annual revenue of $3,000,000, how much inventory, expressed in dollars, should the company expect its operation to require?

Certainly, she reasoned, any human MBA student who got the initial question right would also get this one right since it’s basically the same question. However, ChatGPT responded:

To calculate the required inventory, we first need to determine the amount of revenue that needs to be generated per car sold. To generate $3,000,000 in annual revenue, and assuming the company turns its inventory 1.5 times per year, we need to generate $3,000,000 / 1.5 = $2,000,000 in revenue from car sales.

Next, since each car is sold for four times the purchase price, we can determine the average purchase price of a car by dividing the target revenue per car by 4: $2,000,000 / 4 = $500,000.

Finally, to determine the required inventory, we divide the total target revenue by the average purchase price per car: $3,000,000 / $500,000 = 6 cars.

So, the company should expect its operation to require $500,000 * 6 = $3,000,000 in inventory.
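The two exam questions reduce to the same calculation, which can be written out explicitly. The sketch below follows the reasoning in ChatGPT’s first (correct) response: divide revenue by the markup to get the cost of the goods sold, then divide by the number of inventory turns. The function and variable names are chosen here for illustration.

```python
def required_inventory(annual_revenue: float,
                       markup: float,
                       turns_per_year: float) -> float:
    # Cost of the items sold in a year (sold at `markup` times cost).
    cost_of_goods_sold = annual_revenue / markup
    # Inventory held at any given time, valued at purchase cost.
    return cost_of_goods_sold / turns_per_year

# World-Antique: paintings sold at 4x cost, 1.5 turns, $3,000,000 revenue.
world_antique = required_inventory(3_000_000, 4, 1.5)

# Boll: cars sold at 4x cost, 1.5 turns, $3,000,000 revenue.
boll = required_inventory(3_000_000, 4, 1.5)

print(world_antique, boll)
```

Because the inputs are identical, both questions have the same answer of $500,000, which is what makes the $3,000,000 figure in the second response an error rather than a different interpretation.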

Unfortunately, the problem isn’t as simple as figuring out a good set of prompts that will work for any LLM and any task. University of Maryland researchers (Chen et al, 2023) found that the prompts that improve performance on one task often don’t improve performance on others. Moreover, the prompts that work for one task on one LLM don’t necessarily work for that task on another LLM.

5.8 Retrieval augmented generation

Language models store whatever knowledge is acquired during pre-training in the model parameters. This approach has multiple disadvantages:

- It is difficult to determine what knowledge is stored and where it is located

- To add knowledge, additional training must be performed

- There is a knowledge cutoff date

- The amount of knowledge that can be stored is limited by the size of the model

Retrieval augmented generation (RAG), a term first used by Lewis et al (2021), can remove these disadvantages. In RAG models, all or much of the knowledge is stored in an external non-differentiable database and accessed at inference time.

The roots of RAG were in earlier question answering systems in which researchers used similarity metrics to find relevant articles in an external knowledge source (e.g. Wikipedia) and trained a supervised model to find the best answer from those documents. For example, a group of Facebook researchers (Chen et al, 2017) created the DrQA system. The questions were taken from the SQuAD dataset but no passages were provided. The first step was to compare the bag of words vector that makes up the question to the bag of words vector that makes up each article to find the five best articles. The second step was for a reading comprehension system (the document reader) trained using supervised learning to try and find the answer in each of the five articles.

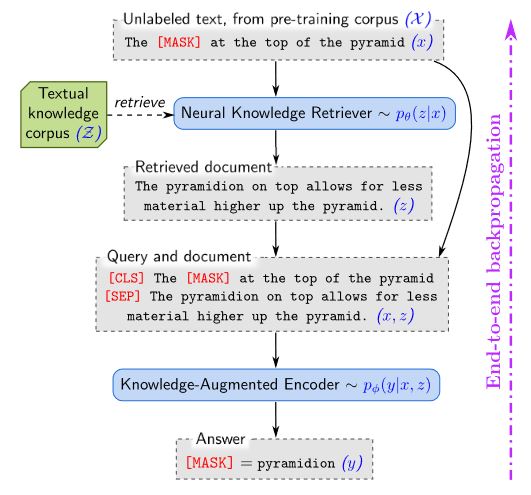

Google researchers created two other precursors to RAG. The first was named the Open Retrieval Question Answering (ORQA) system (Lee et al, 2019) and a follow-on named REALM (Guu et al, 2020). These models have two components: A retriever module that learned to retrieve documents from external storage and a reader module that learned to find the text string in the retrieved documents that best answered the question. The entire model was trained end-to-end on questions and answers so the retriever and reader modules had concurrent parameters updates. The REALM architecture is show below:

A sentence with masked text is presented to the system:

The ___ at the top of the pyramid.

The external knowledge base is Wikipedia. The retriever is trained to create a vector for each document and for each question and to index the document vectors for retrieval. It retrieves the relevant documents using a technique for matching the question vectors to the document vectors known as maximum inner product search (MIPS). In this example, the retriever would be rewarded for retrieving a Wikipedia document containing the sentence

The pyramidion on top allows for less material higher up the pyramid.

Over the course of training, the retriever would learn to do this with increasing accuracy. Similarly, during training, the Knowledge-Augmented Encoder (see image above) would learn to apply the retrieved sentence and use it in predicting the masked word.
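Maximum inner product search itself is simple to state: score every document vector by its inner product with the question vector and keep the highest-scoring documents. The sketch below does this by exhaustive scan over three made-up three-dimensional vectors. The vectors and document labels are invented for illustration, and a system operating over all of Wikipedia would need an index rather than a full scan.

```python
from typing import Dict, List, Sequence

def inner_product(u: Sequence[float], v: Sequence[float]) -> float:
    return sum(a * b for a, b in zip(u, v))

def mips(query_vec: Sequence[float],
         doc_vecs: Dict[str, Sequence[float]],
         k: int = 1) -> List[str]:
    """Return the k document ids with the largest inner product."""
    ranked = sorted(doc_vecs,
                    key=lambda doc_id: inner_product(query_vec, doc_vecs[doc_id]),
                    reverse=True)
    return ranked[:k]

# Made-up embeddings; a trained retriever would produce these.
doc_vecs = {
    "pyramidion": [0.9, 0.1, 0.0],
    "sea otter": [0.0, 0.2, 0.9],
    "cheese": [0.1, 0.8, 0.1],
}
query_vec = [1.0, 0.2, 0.0]   # stands in for the masked-sentence query

top = mips(query_vec, doc_vecs, k=2)
print(top)
```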

Google’s DeepMind unit (Borgeaud et al, 2022) used a similar approach in their Retrieval-Enhanced Transformer (RETRO). First, they created a key-value database in which the keys are frozen BERT embeddings and the values are raw chunks of text tokens. The starting point was a massive 5-trillion token dataset named MassiveText created by DeepMind (Rae et al, 2022). About two trillion total tokens and 128k unique text tokens were sampled from this dataset and stored in the database.

During the language model training, the input was segmented into a series of “chunks”. For each chunk, the closest keys were identified and the chunks associated with those keys retrieved and added to the input chunk for the purpose of autoregressively learning to predict the next input word. During prediction, a similar process is used to retrieve relevant chunks and thereby constrain the next word predictions. They obtained comparable performance to GPT-3 despite having 25x fewer parameters.

In real-world implementations of LLMs, many organizations are using a RAG-like workflow to get around the LLM issues of hallucinations and frozen knowledge. External documents are broken into chunks (e.g. paragraphs) and each chunk is run through an LLM encoder to get an embedding vector. The embedding vectors are stored in a vector database (e.g. the open-source Milvus). At run-time, the user prompt is run through the same encoder to produce an embedding and a cosine or other similarity search is used to find relevant chunks and their documents. These documents are concatenated with the prompt and the prompt is enhanced to specify that the LLM should only use the documents provided to answer the question. Because the LLM is instructed to answer the user question only from the retrieved documents, the chances of hallucinations are greatly reduced.
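The workflow above can be sketched end to end in a few lines. This is a toy illustration under stated assumptions: the `embed` function is a bag-of-words stand-in for a real LLM encoder, the in-memory `index` list stands in for a vector database, and the prompt wording is hypothetical.

```python
import math
from collections import Counter


def embed(text):
    """Toy stand-in for an LLM encoder: a bag-of-words count vector."""
    cleaned = text.lower().replace("?", "").replace(".", "")
    return Counter(cleaned.split())


def cosine(a, b):
    """Cosine similarity between two sparse count vectors."""
    dot = sum(a[t] * b[t] for t in a)
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


chunks = [
    "Milvus is an open-source vector database.",
    "The pyramidion sits at the top of a pyramid.",
    "Stephen Curry was the NBA league MVP in 2016.",
]
# Stand-in for a vector database: (embedding, chunk) pairs held in memory.
index = [(embed(c), c) for c in chunks]


def retrieve(question, k=1):
    """Return the k chunks most similar to the question."""
    q = embed(question)
    ranked = sorted(index, key=lambda item: cosine(q, item[0]), reverse=True)
    return [chunk for _, chunk in ranked[:k]]


def build_prompt(question, k=1):
    """Concatenate retrieved chunks with the question and a grounding rule."""
    context = "\n".join(retrieve(question, k))
    return (
        "Answer using only the documents below. "
        "If the answer is not in them, say you do not know.\n\n"
        f"Documents:\n{context}\n\nQuestion: {question}"
    )


prompt = build_prompt("Who was the NBA league MVP in 2016?")
print(prompt)
```

The resulting prompt would then be sent to the LLM. Swapping in a real encoder and vector database changes the `embed` and `index` pieces but not the overall shape of the pipeline.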

One limitation of RAG is that the entire answer to the question needs to be found in the retrieved documents. This can be problematic for multi-hop queries that need to be answered in multiple steps. For example, consider the question

What NBA team did the league MVP play for in 2016?

To answer this question, two steps might be required such as

(1) Answer the question “Who was the NBA league MVP in 2016?” (answer is Stephen Curry)

(2) Answer the question “What team did Stephen Curry play for in 2016?”

If the answers to the two questions are found in different documents, it might be problematic to try to answer the initial question directly because both documents might not be retrieved.

One way to get around this problem might be to ask the LLM to use chain-of-thought prompting to first break the question down into sub-questions and then answer each sub-question individually.

Another way to address this issue might be the use of both vector databases and knowledge graphs. Knowledge graphs encode relationships between entities (e.g. “Orson Welles produced the movie Citizen Kane”). Graph database tools like Neo4j can both store knowledge graphs and index them using LLM-generated embeddings in a manner that is very similar to how document chunk embeddings are created and stored in vector databases. By retrieving from both vector databases and knowledge graphs, some more complex queries can be addressed in a single pass.
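To see why a knowledge graph helps with multi-hop questions, consider a minimal sketch in which the graph is a plain dictionary of triples. The relation names and the `follow` helper are hypothetical and are not the API of Neo4j or any other graph database.

```python
# A tiny knowledge graph stored as (subject, relation) -> object.
# Entity and relation names are illustrative assumptions.
triples = {
    ("NBA 2016 season", "mvp"): "Stephen Curry",
    ("Stephen Curry", "played_for_in_2016"): "Golden State Warriors",
    ("Orson Welles", "produced"): "Citizen Kane",
}


def follow(entity, relations):
    """Walk the graph from a starting entity along a chain of relations."""
    for relation in relations:
        entity = triples[(entity, relation)]
    return entity


# "What NBA team did the league MVP play for in 2016?" becomes two hops.
team = follow("NBA 2016 season", ["mvp", "played_for_in_2016"])
print(team)
```

Because the intermediate answer (the MVP) is an explicit node in the graph, the second hop does not depend on a similarity search happening to retrieve the right document.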

5.9 Democratization of LLMs

One of the challenges for foundation models is the cost of training and inference. According to a July 2023 Forbes article, when OpenAI CEO Sam Altman was asked if the cost of training a foundation model was really $50 million to $100 million, he answered that the cost is higher and increasing rapidly. Worse, the GPUs required to train these models are in short supply. These factors make it difficult if not impossible for most companies and university researchers to build large foundation models.

As a result, many researchers are focused on methods of lowering both the cost of training and inference by building models that are smaller and by building models that perform better.

5.9.1 Smaller models

One tried-and-true method for creating smaller models is through knowledge distillation (Hinton et al, 2014). The idea is to train a large model on a task like object classification and use the large model as a teacher to train the smaller model. The key to this technique is that the smaller model is not simply trained on the class output of the large model. Rather, it is trained on the large model's full distribution of class predictions. This approach turns out to be very effective for many tasks and produces smaller models that can perform inference less expensively. In fact, a derivative of this technique known as model imitation (Orekondy et al, 2018) can be used to steal the functionality of a proprietary model that has an API that exposes the full set of class probabilities for an input target.
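The difference between training on the class output and training on the distribution of class predictions can be made concrete with a small sketch. The logits, class names, and temperature value below are hypothetical; the loss is the cross-entropy between temperature-softened teacher and student distributions, which is one common way to write a distillation objective.

```python
import math


def softmax(logits, temperature=1.0):
    """Convert logits to probabilities, softened by a temperature."""
    scaled = [z / temperature for z in logits]
    peak = max(scaled)
    exps = [math.exp(z - peak) for z in scaled]
    total = sum(exps)
    return [e / total for e in exps]


def distillation_loss(teacher_logits, student_logits, temperature=2.0):
    """Cross-entropy of the student against the teacher's soft targets."""
    p = softmax(teacher_logits, temperature)
    q = softmax(student_logits, temperature)
    return -sum(pi * math.log(qi) for pi, qi in zip(p, q))


# Hypothetical logits for the classes (dog, wolf, car).
teacher = [4.0, 2.5, 0.1]
student_matching = [3.8, 2.4, 0.3]  # similar full distribution
student_top_only = [6.0, 0.0, 0.0]  # right top class, wrong distribution

print(distillation_loss(teacher, student_matching))
print(distillation_loss(teacher, student_top_only))
```

Both students pick the same top class, but the second one is penalized more because it ignores the teacher's signal that "wolf" is a far more plausible alternative than "car".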

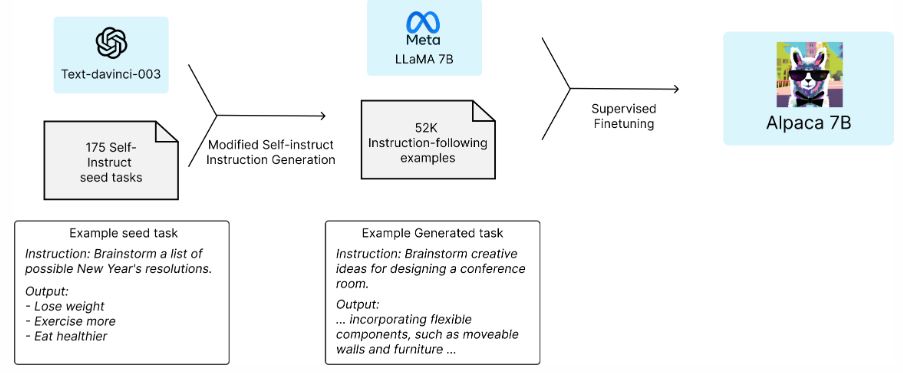

A similar approach has been attempted for LLMs. A group of Stanford researchers (Taori et al, 2023) created a knock-off of the 175 billion parameter GPT-3.5 (davinci version) in a 7 billion parameter model at a cost of under $600. The training scheme is illustrated below:

They started by giving GPT-3.5 175 examples of seed tasks like the one illustrated above. Each task contained an instruction and a sample output. They then asked GPT-3.5 to generate 52,000 additional tasks. They used the original and generated tasks to fine-tune a 7 billion parameter open source LLaMA model and named the resulting model Alpaca. Surprisingly, when they compared GPT-3.5 to the much smaller Alpaca, they found comparable performance on “a diverse list of user-oriented instructions including email writing, social media, and productivity tools”.

Microsoft researchers (Mukherjee et al, 2023; Mitra et al, 2023) took model imitation a step further with their Orca models. These models show how model imitation can be used to train smaller models to not only imitate the response style of the original large model but also to imitate the limited reasoning processes of these models.

However, a more in-depth study by Berkeley researchers (Gudibande et al, 2023) showed that model imitation really only trains the knock-off model to learn to do tasks that are similar to the ones in the fine-tuning training set. Moreover, the vast amounts of factual and other types of knowledge contained in the original model do not transfer as a result of the instruction training. And, of course, it would be mystifying if it did transfer because only a tiny portion of that knowledge is present in the generated fine-tuning dataset.

Microsoft researchers (Gunasekar et al, 2023) used only six billion tokens of high-quality web text plus one billion tokens of textbooks and exercises generated by GPT-3.5 to train a series of small models. Their research paper was cleverly titled “Textbooks Are All You Need”, a wordplay on the title of the original transformer paper. Their phi-1 model has only 1.3 billion parameters but achieved a pass@1 accuracy of 51% on HumanEval and 56% on MBPP. An even smaller model, phi-1-small, has only 350 million parameters and achieved 45% accuracy on HumanEval.

Cohere, a commercial vendor that offers a large multimodal language model, has released a small, 7 billion parameter, version of its model.

5.9.2 More efficient architectures

The standard attention mechanism used in transformers has an important performance issue. Because self-attention computes the relevance of every token in the input to every other token in the input, if the context window size is n tokens, then there must be n-squared computations. The attention mechanism that relates input position to output position is also n-squared if both input and output have n tokens. GPT-3 has a maximum input length of 2048 tokens, which would require over 4 million such computations. GPT-3 would require 355 years to train on a single V100 GPU, costing around $4,600,000 (Fournier et al, 2023).
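The quadratic growth described above is easy to check with a few lines of arithmetic. The helper name `attention_pairs` is illustrative, and the count is only the number of token-to-token relevance scores in a single attention computation.

```python
def attention_pairs(n_tokens):
    """Number of token-to-token scores in one full self-attention pass."""
    return n_tokens * n_tokens


# 2048 * 2048 = 4,194,304 scores for a GPT-3-sized context window.
for n in (2048, 100_000, 1_000_000):
    print(f"{n:>9,} tokens -> {attention_pairs(n):,} scores")
```

Doubling the context window quadruples the number of scores, which is why longer contexts tend to call for more efficient attention mechanisms.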

More recent LLMs allow inputs of over 100,000 and even one million tokens and use more efficient attention mechanisms. See Fournier et al (2023) for a survey of techniques for making attention mechanisms more efficient. After that survey was published, an important new technique named StreamingLLM was created (Xiao et al, 2023) that reportedly enables efficient processing of millions of input tokens.

An alternative to making the attention mechanism more efficient is to make the feed forward network more efficient. ETH Zurich researchers (Belcak and Wattenhofer, 2023) developed an approach that dramatically improves inference performance by predicting which neurons are important in processing an input. Their fast feedforward network can reduce the number of neurons that need to be processed to as few as 1% of the network.

5.9.3 RLHF alternatives

The RLHF fine-tuning methodology that produced ChatGPT works very well for producing chatbots that generate humanlike responses. However, creating a dataset that is suitable for instruction fine-tuning is both expensive and difficult. As a result, researchers are studying alternative methodologies for instruction fine-tuning.

One approach, created by Stanford researchers (Rafailov et al, 2023), is named Direct Preference Optimization (DPO). DPO is easier to implement than RLHF and seems to provide similar performance using supervised fine-tuning on human preference data.
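A minimal sketch of the per-example DPO objective shows why it counts as supervised fine-tuning: it is an ordinary loss computed from log-probabilities, with no separate reward model or reinforcement learning loop. The log-probability values and the `beta` setting below are hypothetical.

```python
import math


def dpo_loss(policy_chosen, policy_rejected, ref_chosen, ref_rejected, beta=0.1):
    """Per-example DPO loss from sequence log-probabilities.

    policy_* are log-probabilities under the model being trained;
    ref_* are log-probabilities under the frozen reference model.
    """
    margin = (policy_chosen - ref_chosen) - (policy_rejected - ref_rejected)
    sigmoid = 1.0 / (1.0 + math.exp(-beta * margin))
    return -math.log(sigmoid)


# Policy identical to the reference: no preference has been learned yet.
baseline = dpo_loss(-5.0, -7.0, -5.0, -7.0)

# Policy has shifted probability toward the human-preferred response.
improved = dpo_loss(-4.0, -8.0, -5.0, -7.0)

print(baseline, improved)
```

The loss falls as the policy raises the likelihood of the preferred response relative to the reference model and lowers the likelihood of the rejected one.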

Another Stanford-based approach (Ethayarajh et al, 2023), termed Kahneman-Tversky Optimization (KTO), does not require paired preference data. It only requires a dataset of inputs and outputs in which each output is labeled as desirable or undesirable.

Another approach, developed by Chinese researchers (Wang et al, 2023), uses an alternative to RLHF named C-RLFT that uses supervised learning and doesn’t require expensive human preference labeling. Their OpenChat system outperforms most 13 billion parameter models on several standard multi-task benchmarks.

Wang et al (2023) also created a framework named Self-Instruct that generates instructions, input, and output samples from a language model. It then filters out invalid or duplicate instructions before using the remainder to fine-tune another model. To test this methodology, they applied Self-Instruct to GPT-3, which resulted in a 33% improvement in performance on the Super-NaturalInstructions benchmark (Wang et al, 2022), which measures performance on 1616 diverse NLP tasks.
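The filtering step can be sketched as follows. This is an illustration only: the Jaccard word-overlap measure and the 0.7 threshold are assumptions chosen for simplicity and are not necessarily the filter used in the paper, and the function names are hypothetical.

```python
def tokens(text):
    """Lowercased set of whitespace-separated words."""
    return set(text.lower().split())


def jaccard(a, b):
    """Word-overlap similarity between two instructions (0.0 to 1.0)."""
    ta, tb = tokens(a), tokens(b)
    union = ta | tb
    return len(ta & tb) / len(union) if union else 0.0


def filter_instructions(candidates, pool, max_similarity=0.7):
    """Keep candidates that are non-empty and not near-duplicates."""
    kept = list(pool)
    for cand in candidates:
        if not cand.strip():
            continue  # invalid: empty instruction
        if all(jaccard(cand, seen) < max_similarity for seen in kept):
            kept.append(cand)
    return kept


pool = ["Write a poem about risk management"]
candidates = [
    "Write a poem about risk management",          # exact duplicate
    "write a poem about  risk management",         # duplicate up to case/spacing
    "",                                            # invalid
    "Write a job description for a telemarketer",  # novel
]

kept = filter_instructions(candidates, pool)
print(kept)
```

Only the novel instruction is added to the pool; in the full framework the surviving instructions and their generated outputs would then be used for fine-tuning.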

Meta researchers (Yuan et al, 2024) have taken the self-instruct idea a step further by adding an LLM that scores the responses.

5.9.4 More efficient infrastructure

Researchers are also creating platforms that enable generative models to run on relatively inexpensive equipment. For example, LoRAX is an open source platform that can serve thousands of fine-tuned models on a single GPU.

5.10 Multi-modal models

LLMs are foundation models that are pre-trained with text data (including code). Foundation models for other modalities are pre-trained with text, image, video, speech, music, and haptic data and can be built for data from virtually any type of sensor. Most importantly, foundation models are often multi-modal and contain at least text data combined with data from at least one other modality. For example, text-to-image models are pre-trained with both text and image data and can generate images in response to a text input describing the desired image.

The image below is from OpenAI’s DALL•E 3 model (released Sept, 2023) in response to the input:

A hermit crab nestled in wet sand, with sea foam nearby and the details of its shell and texture of the sand accentuated.

Foundation models are pre-trained using self-supervised learning, typically have large numbers of parameters, and are trained on massive amounts of data. Like LLMs, foundation models demonstrate amazing emergent properties and can be used to perform zero-shot or few-shot learning tasks for which they don’t receive explicit training.

The use of foundation models represents a major paradigm shift in machine learning. Rather than developing massive labeled datasets, it is only necessary to fine-tune on small datasets. Andrej Karpathy makes the case that this is the first major change in machine learning since the first neural network was trained using backpropagation in 1989.

For a more in-depth survey of technical implementation details see Zhou et al (2023).

5.10.1 Image generation

The first highly-publicized model that was jointly trained on text and images was OpenAI’s DALL•E (Ramesh et al, 2021). DALL•E was created by first compressing 256×256 images into 32×32 images that contained 1024 pixels. Like GPT-3, DALL•E was trained using autoregressive self-supervised learning. However, the number of parameters was only 12 billion (compared to the 175 billion parameter GPT-3 model).

Each training example was a concatenation of up to 256 tokens from the image caption and 1024 image tokens, one for each position in the compressed 32×32 image. The model was trained to predict the next token using a transformer architecture. After training, DALL•E did a credible job of generating images in response to text descriptions; however, the images had nowhere near the detail and fidelity of DALL•E 3 (see example above). Here is an example of an image generated by DALL•E in response to the prompt:

an illustration of a baby daikon radish in a tutu walking a dog

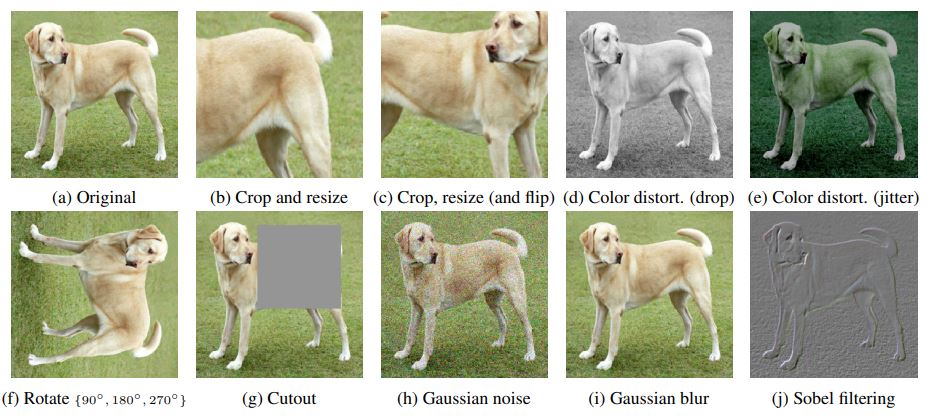

A slightly different approach uses contrastive predictive coding (van den Oord et al, 2018). Google researchers (T. Chen et al, 2020) created SimCLR for unsupervised image classification. Each training instance was composed of three images: an original image, an augmented version of the original image, and an image of a different object. Examples of several different types of image augmentation are shown here:

The model was trained using an objective function that caused the model to minimize the distance between the latent representation for the original picture of the dog and the augmented picture of the dog. The objective function also encouraged maximizing the distance between the original picture of the dog and a different randomly selected image (e.g. an image of a cat). Though the model had to learn a representation to do the task, the model had no idea of the image labels because the labels weren’t used during training. Even so, a linear classifier trained on top of the learned representations was able to achieve 76% accuracy on ImageNet.
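The objective described above can be sketched as a simplified contrastive loss with one positive and a list of negatives. The two-dimensional vectors and the temperature value are hypothetical, and this toy version is not the exact SimCLR loss, which is computed over all pairs in a large batch.

```python
import math


def cosine(u, v):
    """Cosine similarity between two dense vectors."""
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    return dot / (norm_u * norm_v)


def contrastive_loss(anchor, positive, negatives, temperature=0.5):
    """Low when the anchor is close to the positive and far from negatives."""
    pos = math.exp(cosine(anchor, positive) / temperature)
    neg = sum(math.exp(cosine(anchor, n) / temperature) for n in negatives)
    return -math.log(pos / (pos + neg))


# Hypothetical latent representations.
dog = [1.0, 0.1]
dog_augmented = [0.9, 0.2]
cat = [0.1, 1.0]

well_trained = contrastive_loss(dog, dog_augmented, [cat])
mismatched = contrastive_loss(dog, cat, [dog_augmented])
print(well_trained, mismatched)
```

No labels appear anywhere in the loss: the only supervision is the knowledge that an image and its augmented copy should share a representation.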

One of the key advantages of contrastive learning over generative learning is that it can be far less computationally expensive, especially for images which would otherwise require pixel-by-pixel prediction. That said, some researchers (Wang et al, 2022) argue that contrastive models require much larger batch sizes than generative models.